mirror of https://github.com/Security-Onion-Solutions/securityonion.git

synced 2026-01-23 08:31:30 +01:00

Compare commits: 2.4.90-202 ... kaffytaffy

3 Commits

| Author | SHA1 | Date |
|---|---|---|
|  | d91dd0dd3c |  |
|  | a0388fd568 |  |
|  | 05244cfd75 |  |

2 .github/.gitleaks.toml (vendored)

@@ -536,7 +536,7 @@ secretGroup = 4

[allowlist]
description = "global allow lists"
regexes = ['''219-09-9999''', '''078-05-1120''', '''(9[0-9]{2}|666)-\d{2}-\d{4}''', '''RPM-GPG-KEY.*''', '''.*:.*StrelkaHexDump.*''', '''.*:.*PLACEHOLDER.*''', '''ssl_.*password''']
regexes = ['''219-09-9999''', '''078-05-1120''', '''(9[0-9]{2}|666)-\d{2}-\d{4}''', '''RPM-GPG-KEY.*''', '''.*:.*StrelkaHexDump.*''', '''.*:.*PLACEHOLDER.*''']
paths = [
'''gitleaks.toml''',
'''(.*?)(jpg|gif|doc|pdf|bin|svg|socket)$''',
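The only change in this hunk is whether `'''ssl_.*password'''` belongs to the global allowlist. The Python below is a rough sketch of what that means: it tests a candidate string against both variants of the `regexes` list. It assumes each pattern is applied as an unanchored search. Which text gitleaks actually tests (the extracted secret, the full match, or the line) depends on the gitleaks version and its `regexTarget` setting. gitleaks also uses Go's RE2 engine rather than Python's `re`, although these patterns should behave the same on ASCII input. The sample string is hypothetical.

```python
import re

# Global allowlist "regexes" entry from the hunk, with and without ssl_.*password.
WITH_SSL = [
    r"219-09-9999",
    r"078-05-1120",
    r"(9[0-9]{2}|666)-\d{2}-\d{4}",
    r"RPM-GPG-KEY.*",
    r".*:.*StrelkaHexDump.*",
    r".*:.*PLACEHOLDER.*",
    r"ssl_.*password",
]
WITHOUT_SSL = WITH_SSL[:-1]


def allowlisted(candidate: str, patterns: list[str]) -> bool:
    """Assumption: a candidate is suppressed if any pattern matches anywhere in it."""
    return any(re.search(p, candidate) for p in patterns)


if __name__ == "__main__":
    sample = "ssl_keystore_password: changeme"  # hypothetical finding
    print(allowlisted(sample, WITH_SSL))     # True
    print(allowlisted(sample, WITHOUT_SSL))  # False
```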

1 .github/workflows/close-threads.yml (vendored)

@@ -15,7 +15,6 @@ concurrency:

jobs:
close-threads:
if: github.repository_owner == 'security-onion-solutions'
runs-on: ubuntu-latest
permissions:
issues: write

1 .github/workflows/lock-threads.yml (vendored)

@@ -15,7 +15,6 @@ concurrency:

jobs:
lock-threads:
if: github.repository_owner == 'security-onion-solutions'
runs-on: ubuntu-latest
steps:
- uses: jertel/lock-threads@main

@@ -1,17 +1,17 @@
### 2.4.90-20240729 ISO image released on 2024/07/29
### 2.4.60-20240320 ISO image released on 2024/03/20

### Download and Verify

2.4.90-20240729 ISO image:
https://download.securityonion.net/file/securityonion/securityonion-2.4.90-20240729.iso
2.4.60-20240320 ISO image:
https://download.securityonion.net/file/securityonion/securityonion-2.4.60-20240320.iso

MD5: 9A7714F5922EE555F08675D25E6237D5
SHA1: D3B331452627DB716906BA9F3922574DFA3852DC
SHA256: 5B0CE32543944DBC50C4E906857384211E1BE83EF409619778F18FC62017E0E0
MD5: 178DD42D06B2F32F3870E0C27219821E
SHA1: 73EDCD50817A7F6003FE405CF1808A30D034F89D
SHA256: DD334B8D7088A7B78160C253B680D645E25984BA5CCAB5CC5C327CA72137FC06

Signature for ISO image:
https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.90-20240729.iso.sig
https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.60-20240320.iso.sig

Signing key:
https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.4/main/KEYS
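The hunk publishes MD5, SHA1 and SHA256 values for each ISO. Below is a minimal Python sketch that checks the SHA256 value without loading the multi-gigabyte image into memory. The local filename is an assumption: it is the basename of the download URL above. The published uppercase hex is compared case-insensitively because `hashlib` returns lowercase.

```python
import hashlib
from pathlib import Path

# SHA256 published above for securityonion-2.4.90-20240729.iso.
EXPECTED_SHA256 = "5B0CE32543944DBC50C4E906857384211E1BE83EF409619778F18FC62017E0E0"


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the lowercase hex SHA256 of a file, read in 1 MiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches(path: Path, expected_hex: str) -> bool:
    # Published values are uppercase; hexdigest() is lowercase.
    return sha256_of(path) == expected_hex.strip().lower()


if __name__ == "__main__":
    iso = Path("securityonion-2.4.90-20240729.iso")  # assumed download location
    if not iso.exists():
        print(f"{iso} not found")
    elif matches(iso, EXPECTED_SHA256):
        print("SHA256 OK")
    else:
        print("SHA256 mismatch: download the ISO again")
```

A matching checksum only shows that the download is intact. The GPG signature check is what ties the image to the signing key.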

@@ -25,29 +25,27 @@ wget https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.

Download the signature file for the ISO:
```
wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.90-20240729.iso.sig
wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.60-20240320.iso.sig
```

Download the ISO image:
```
wget https://download.securityonion.net/file/securityonion/securityonion-2.4.90-20240729.iso
wget https://download.securityonion.net/file/securityonion/securityonion-2.4.60-20240320.iso
```

Verify the downloaded ISO image using the signature file:
```
gpg --verify securityonion-2.4.90-20240729.iso.sig securityonion-2.4.90-20240729.iso
gpg --verify securityonion-2.4.60-20240320.iso.sig securityonion-2.4.60-20240320.iso
```

The output should show "Good signature" and the Primary key fingerprint should match what's shown below:
```
gpg: Signature made Thu 25 Jul 2024 06:51:11 PM EDT using RSA key ID FE507013
gpg: Signature made Tue 19 Mar 2024 03:17:58 PM EDT using RSA key ID FE507013
gpg: Good signature from "Security Onion Solutions, LLC <info@securityonionsolutions.com>"
gpg: WARNING: This key is not certified with a trusted signature!
gpg: There is no indication that the signature belongs to the owner.
Primary key fingerprint: C804 A93D 36BE 0C73 3EA1 9644 7C10 60B7 FE50 7013
```

If it fails to verify, try downloading again. If it still fails to verify, try downloading from another computer or another network.

Once you've verified the ISO image, you're ready to proceed to our Installation guide:
https://docs.securityonion.net/en/2.4/installation.html
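The check for "Good signature" and the fingerprint can also be scripted. Parsing gpg's human-readable text is brittle, so this sketch asks gpg for its machine-readable status lines with `--status-fd 1`. It then looks for a `GOODSIG` line and a `VALIDSIG` line whose last field (the primary-key fingerprint in GnuPG 2.x) equals the published fingerprint with the spaces removed. It assumes the key from the `KEYS` file has already been imported. The "not certified with a trusted signature" warning shown above does not affect this check.

```python
import subprocess

# Primary key fingerprint published above, spaces removed.
EXPECTED_FPR = "C804A93D36BE0C733EA196447C1060B7FE507013"


def signature_ok(status_output: str, expected_fpr: str = EXPECTED_FPR) -> bool:
    """Require a GOODSIG line and a VALIDSIG line whose last field (the
    primary-key fingerprint in GnuPG 2.x) equals expected_fpr."""
    want = expected_fpr.replace(" ", "").upper()
    good = fpr_ok = False
    for line in status_output.splitlines():
        fields = line.split()
        if len(fields) < 3 or fields[0] != "[GNUPG:]":
            continue
        if fields[1] == "GOODSIG":
            good = True
        elif fields[1] == "VALIDSIG":
            fpr_ok = fields[-1].upper() == want
    return good and fpr_ok


def verify(sig: str, iso: str) -> bool:
    # --status-fd 1 writes "[GNUPG:] ..." lines to stdout; the human-readable
    # messages shown above still go to stderr.
    result = subprocess.run(
        ["gpg", "--status-fd", "1", "--verify", sig, iso],
        capture_output=True,
        text=True,
        check=False,
    )
    return result.returncode == 0 and signature_ok(result.stdout)
```

Usage would be `verify("securityonion-2.4.90-20240729.iso.sig", "securityonion-2.4.90-20240729.iso")`, run in the download directory.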

13

README.md

13

README.md

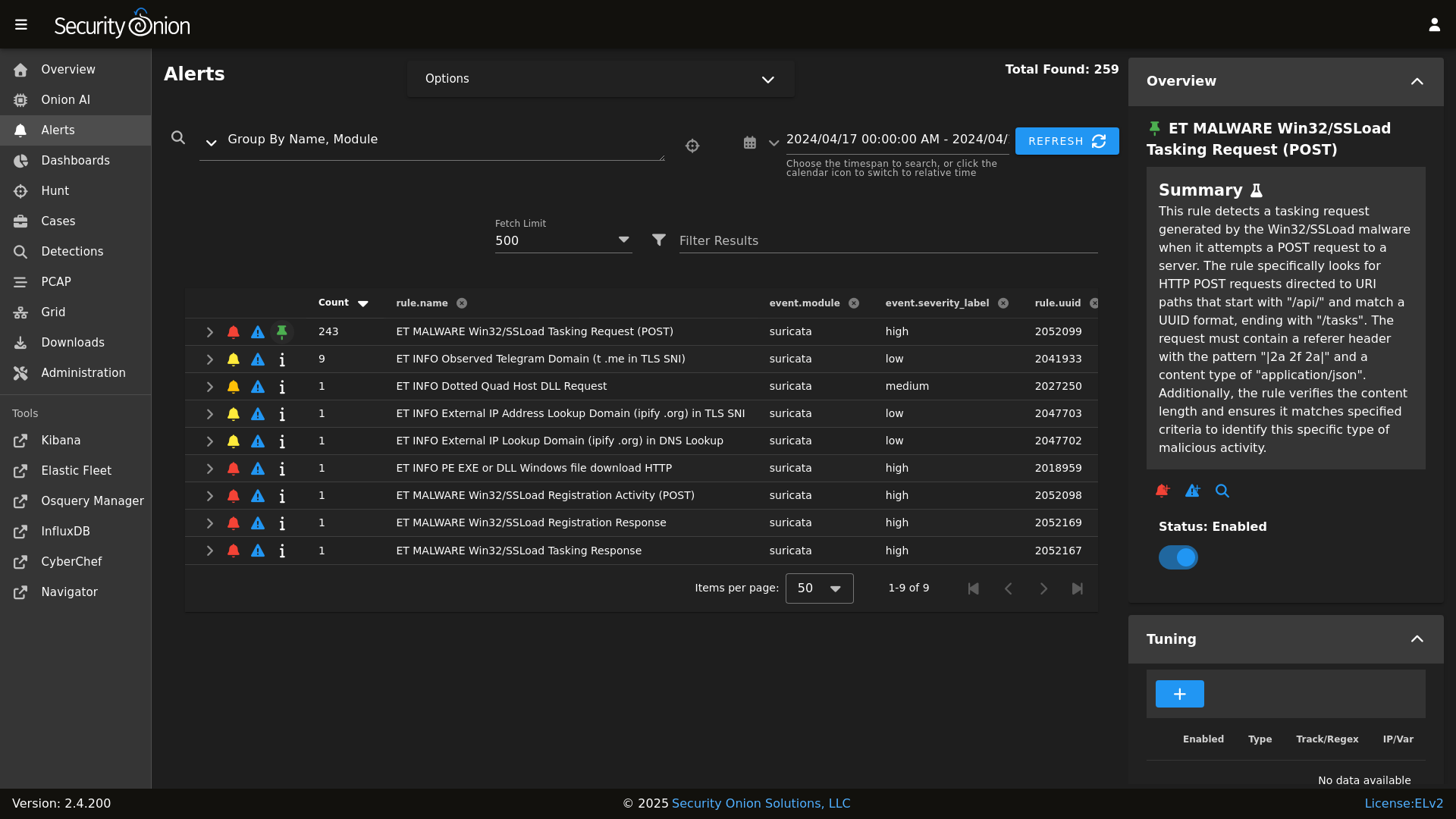

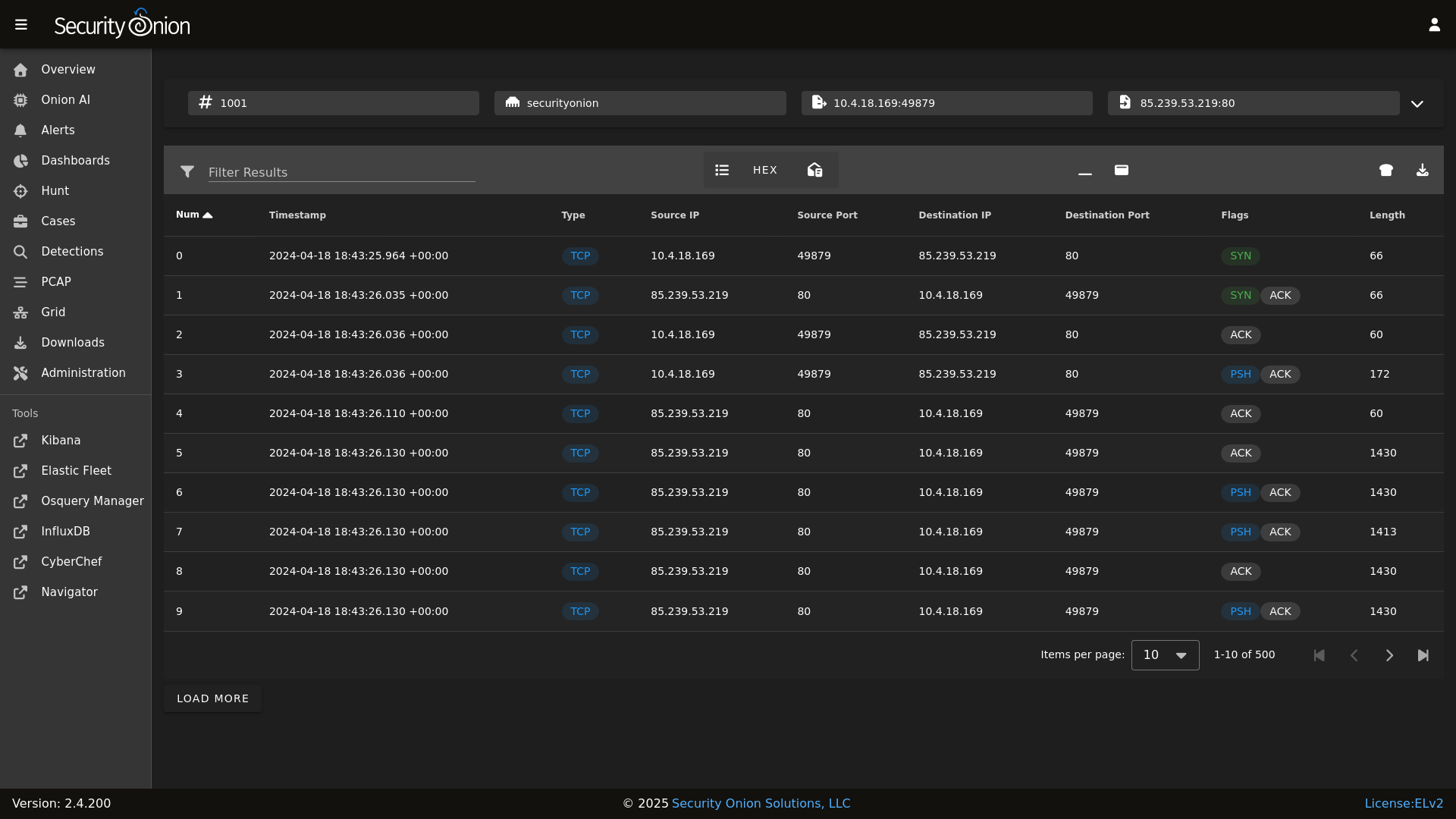

@@ -8,22 +8,19 @@ Alerts

|

||||

|

||||

|

||||

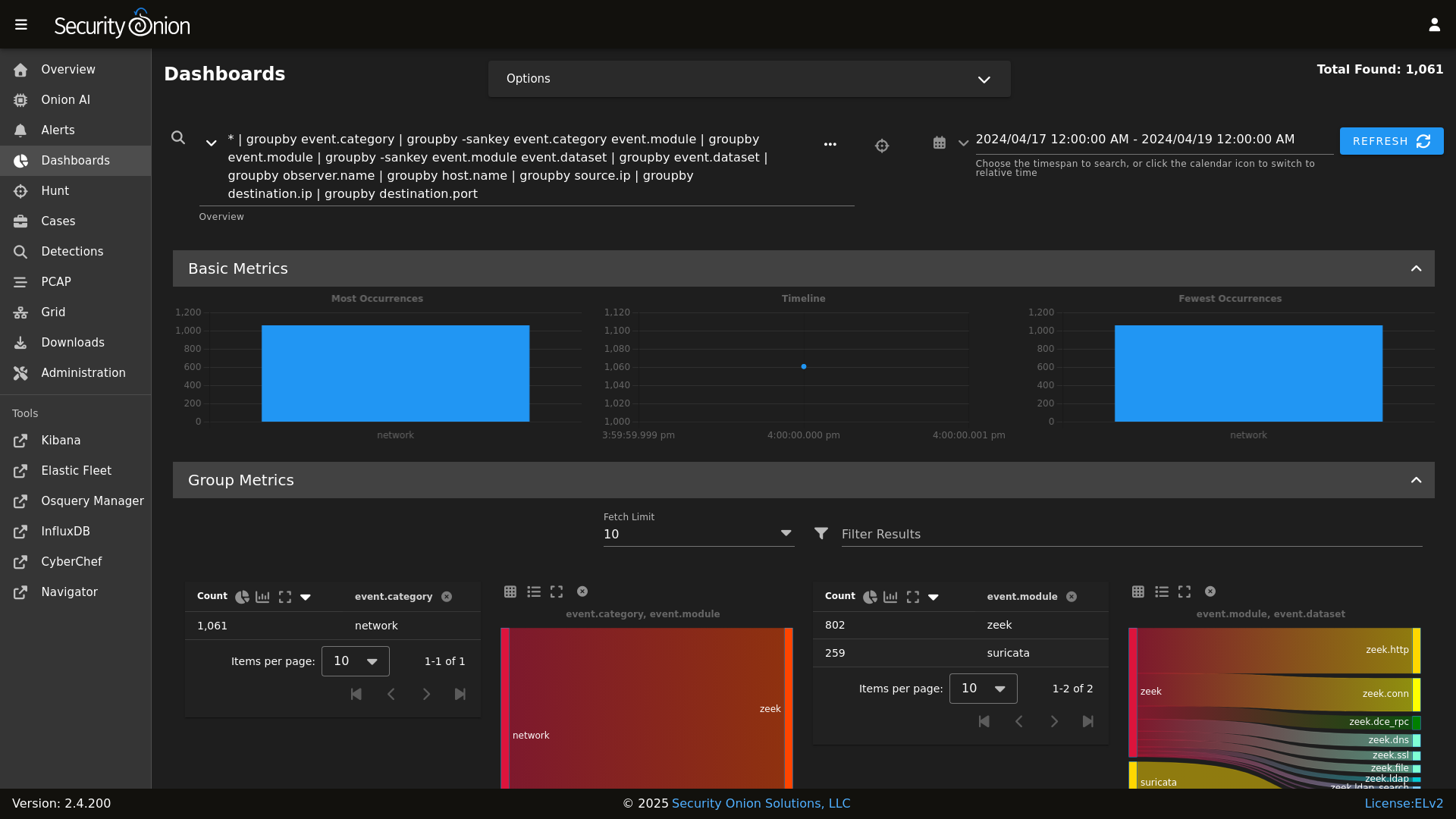

Dashboards

|

||||

|

||||

|

||||

|

||||

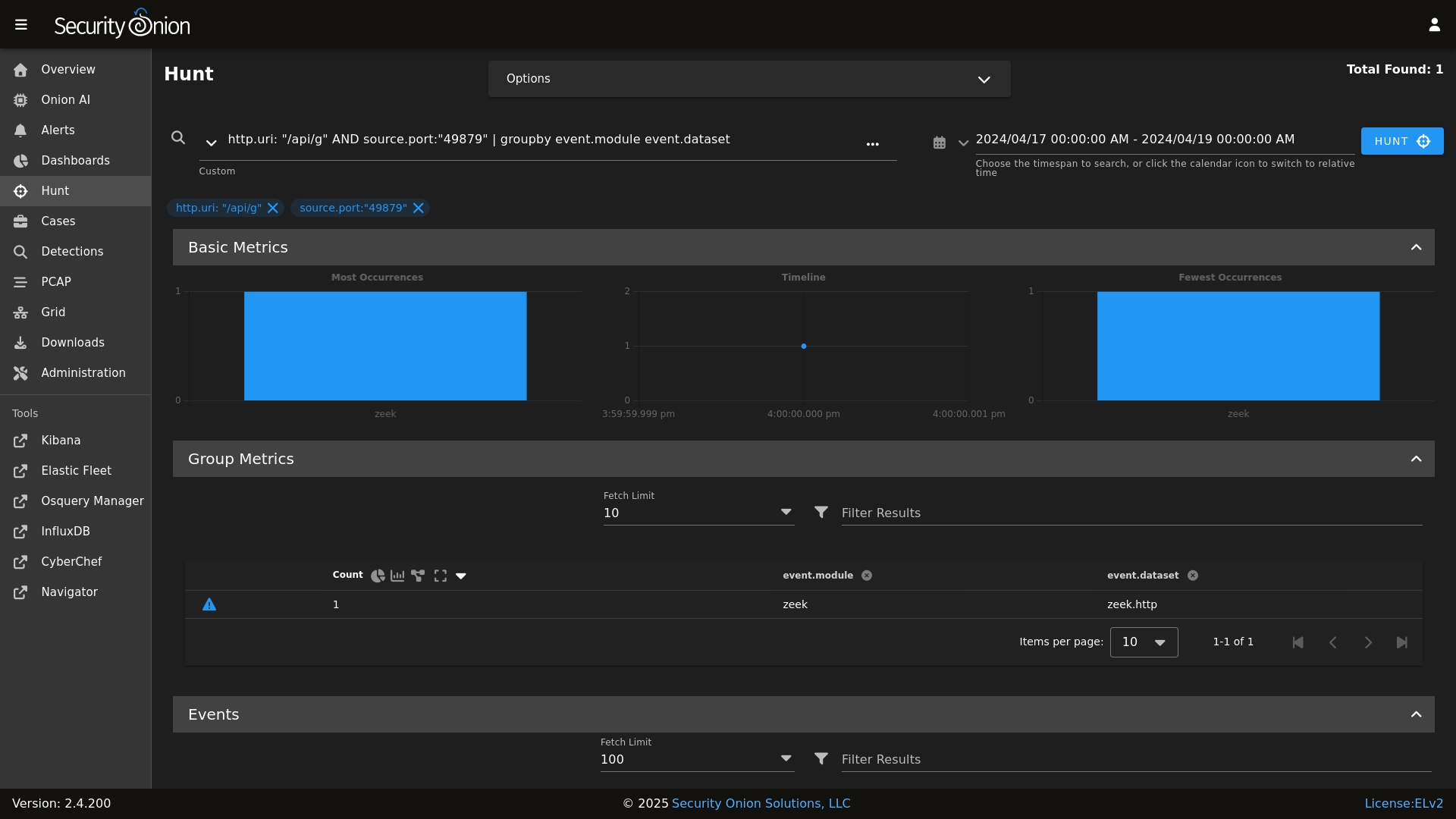

Hunt

|

||||

|

||||

|

||||

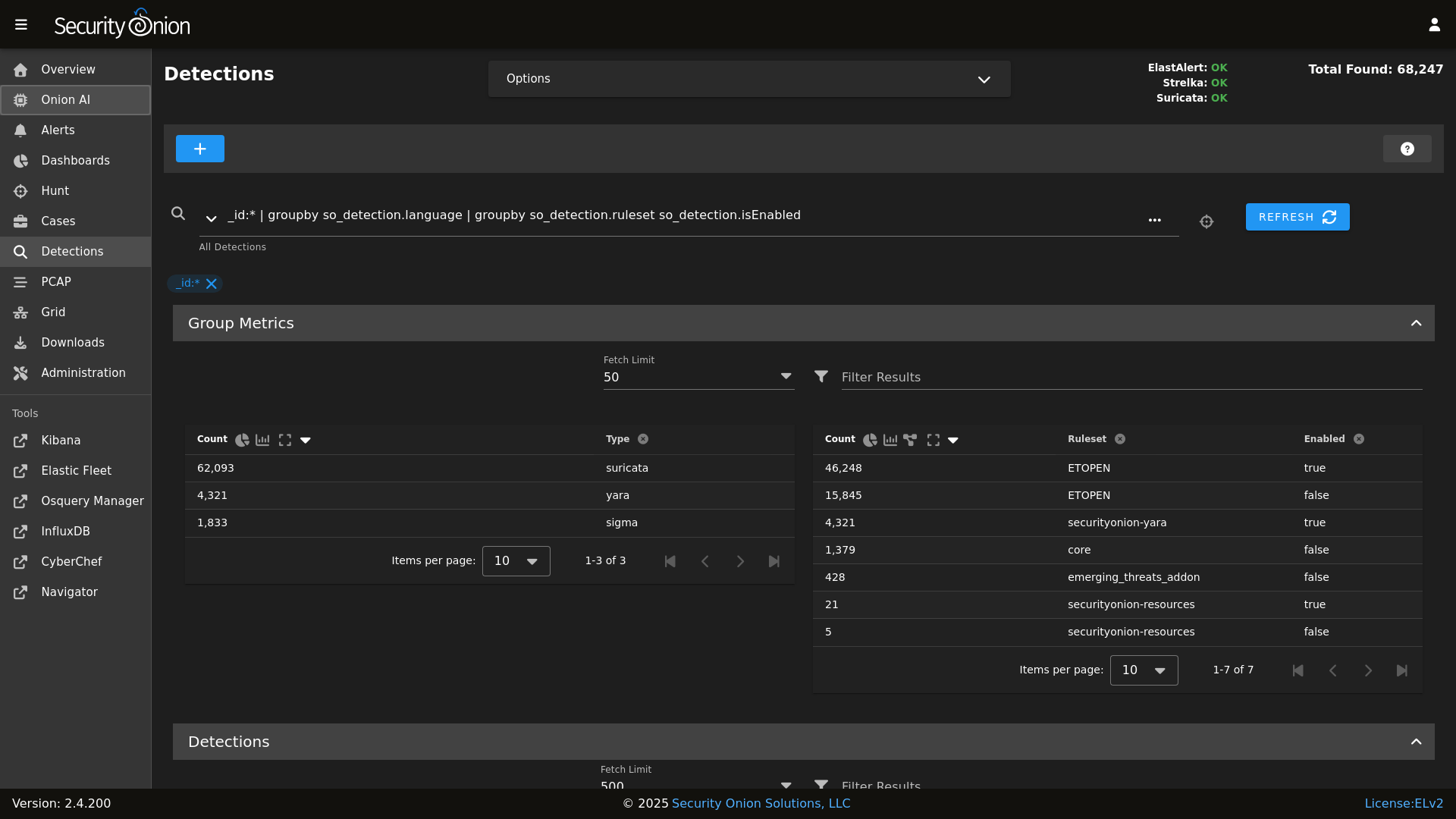

Detections

|

||||

|

||||

|

||||

|

||||

PCAP

|

||||

|

||||

|

||||

|

||||

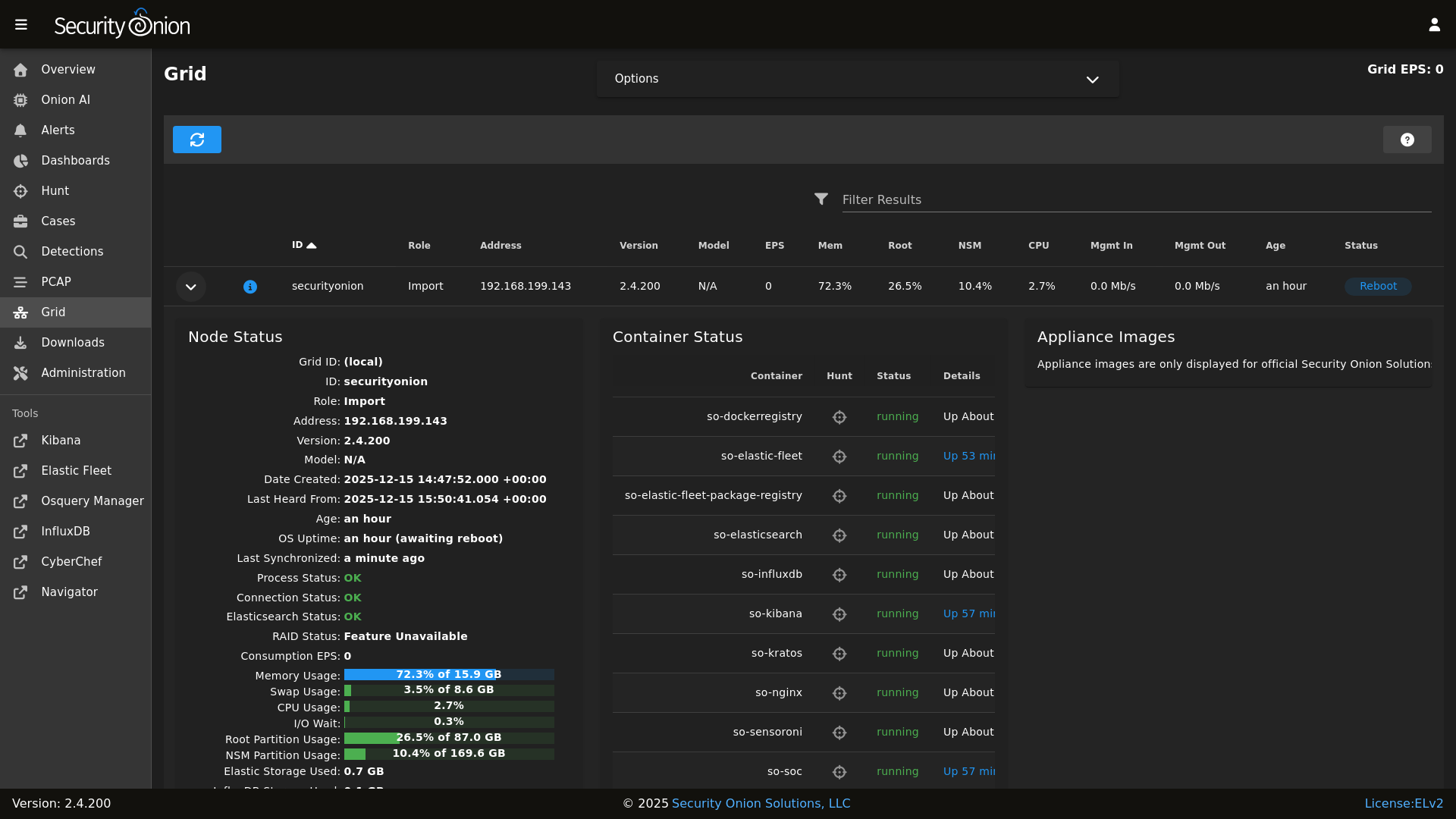

Grid

|

||||

|

||||

|

||||

|

||||

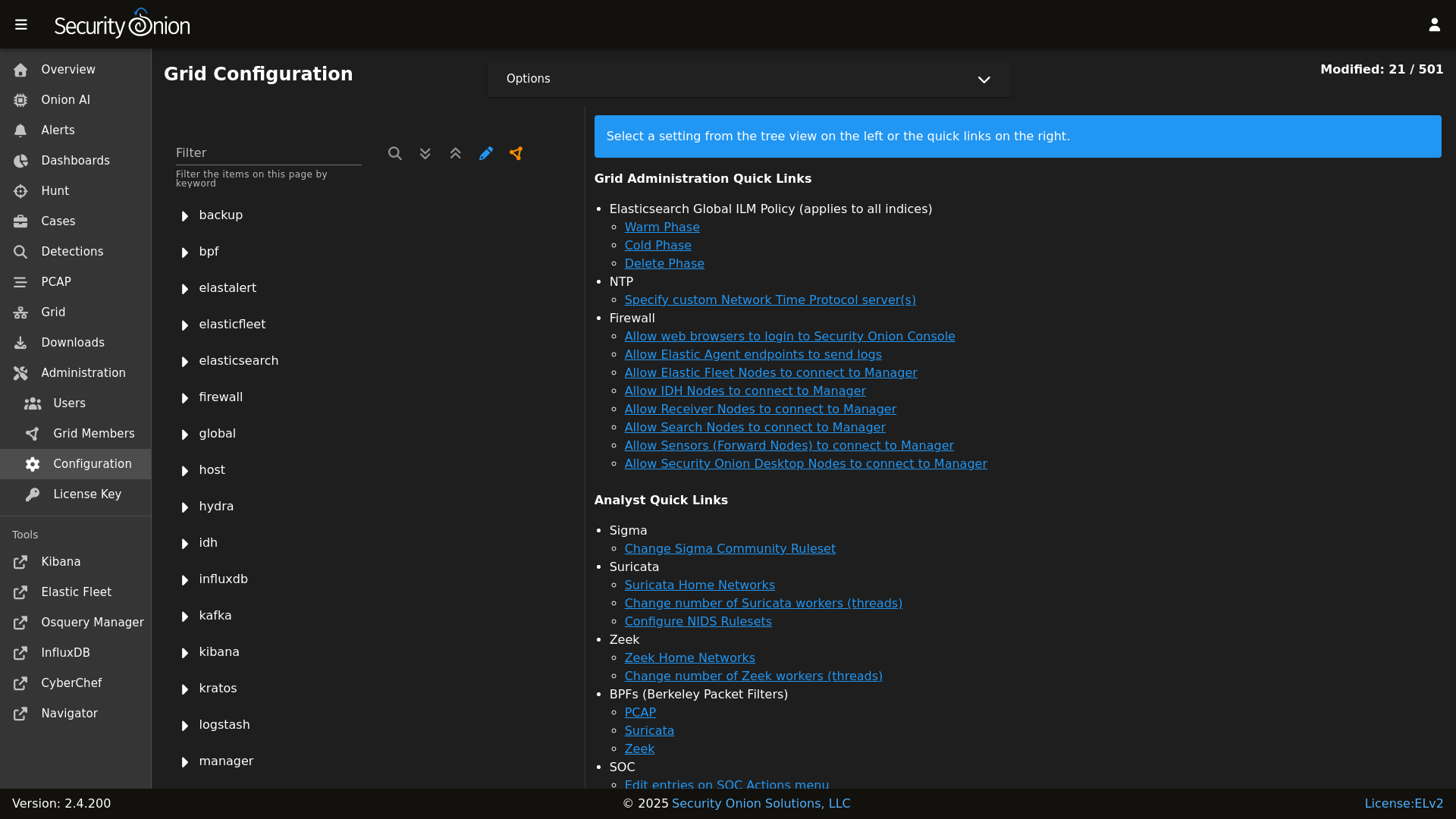

Config

|

||||

|

||||

|

||||

|

||||

### Release Notes

|

||||

|

||||

|

||||

@@ -1,34 +0,0 @@
{% set node_types = {} %}
{% for minionid, ip in salt.saltutil.runner(
'mine.get',
tgt='elasticsearch:enabled:true',
fun='network.ip_addrs',
tgt_type='pillar') | dictsort()
%}

# only add a node to the pillar if it returned an ip from the mine
{% if ip | length > 0%}
{% set hostname = minionid.split('_') | first %}
{% set node_type = minionid.split('_') | last %}
{% if node_type not in node_types.keys() %}
{% do node_types.update({node_type: {hostname: ip[0]}}) %}
{% else %}
{% if hostname not in node_types[node_type] %}
{% do node_types[node_type].update({hostname: ip[0]}) %}
{% else %}
{% do node_types[node_type][hostname].update(ip[0]) %}
{% endif %}
{% endif %}
{% endif %}
{% endfor %}

elasticsearch:
nodes:
{% for node_type, values in node_types.items() %}
{{node_type}}:
{% for hostname, ip in values.items() %}
{{hostname}}:
ip: {{ip}}
{% endfor %}
{% endfor %}
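The removed template groups every Elasticsearch-enabled minion by role. Here is the same logic in plain Python, as a sketch. It assumes minion IDs follow the `<hostname>_<role>` pattern that the template splits on, and that the mine returns a list of IPs per minion. The sample data is hypothetical. One deliberate difference: the template's innermost `else` branch calls `.update()` on an IP string, which would fail because strings have no `update` method. The sketch keeps the first IP seen for a repeated hostname/role pair instead.

```python
def nodes_by_type(mine: dict[str, list[str]]) -> dict[str, dict[str, str]]:
    """Return {node_type: {hostname: first_ip}} from {minion_id: [ips]}.

    Assumes minion IDs look like "<hostname>_<role>", as the template does.
    """
    node_types: dict[str, dict[str, str]] = {}
    for minion_id, ips in sorted(mine.items()):  # the template's dictsort()
        if not ips:  # only add a node if the mine returned an IP
            continue
        parts = minion_id.split("_")
        hostname, node_type = parts[0], parts[-1]  # | first, | last
        # setdefault keeps the first IP if a hostname/role pair repeats.
        node_types.setdefault(node_type, {}).setdefault(hostname, ips[0])
    return node_types


if __name__ == "__main__":
    mine = {  # hypothetical mine.get result
        "es01_searchnode": ["10.0.0.21"],
        "mgr_manager": ["10.0.0.10", "172.17.0.1"],
        "down_searchnode": [],
    }
    print(nodes_by_type(mine))
    # {'searchnode': {'es01': '10.0.0.21'}, 'manager': {'mgr': '10.0.0.10'}}
```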

@@ -1,2 +1,30 @@
{% set current_kafkanodes = salt.saltutil.runner('mine.get', tgt='G@role:so-manager or G@role:so-managersearch or G@role:so-standalone or G@role:so-receiver', fun='network.ip_addrs', tgt_type='compound') %}
{% set pillar_kafkanodes = salt['pillar.get']('kafka:nodes', default={}, merge=True) %}

{% set existing_ids = [] %}
{% for node in pillar_kafkanodes.values() %}
{% if node.get('id') %}
{% do existing_ids.append(node['nodeid']) %}
{% endif %}
{% endfor %}
{% set all_possible_ids = range(1, 256)|list %}

{% set available_ids = [] %}
{% for id in all_possible_ids %}
{% if id not in existing_ids %}
{% do available_ids.append(id) %}
{% endif %}
{% endfor %}

{% set final_nodes = pillar_kafkanodes.copy() %}

{% for minionid, ip in current_kafkanodes.items() %}
{% set hostname = minionid.split('_')[0] %}
{% if hostname not in final_nodes %}
{% set new_id = available_ids.pop(0) %}
{% do final_nodes.update({hostname: {'nodeid': new_id, 'ip': ip[0]}}) %}
{% endif %}
{% endfor %}

kafka:
nodes:
nodes: {{ final_nodes|tojson }}
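The added Kafka template gives every manager, managersearch, standalone or receiver minion that is not already in the `kafka:nodes` pillar the lowest free broker ID between 1 and 255. A Python sketch of that allocation follows. The template tests `node.get('id')` but then reads `node['nodeid']`. Because the nodes it writes only carry a `nodeid` key, existing IDs appear never to be collected, so the sketch reads `nodeid` in both places. Two further assumptions keep the output deterministic: the sketch iterates the mine results in sorted order, and it skips minions with no IP. The sample pillar and mine data are hypothetical.

```python
def assign_kafka_ids(pillar_nodes: dict, mine: dict[str, list[str]]) -> dict:
    """Give each new hostname the lowest free broker ID in 1..255.

    pillar_nodes: {hostname: {"nodeid": int, "ip": str}} from kafka:nodes
    mine:         {minion_id: [ips]} from mine.get network.ip_addrs
    """
    # Read "nodeid" consistently (the template tests "id" but appends "nodeid").
    existing_ids = {n["nodeid"] for n in pillar_nodes.values() if n.get("nodeid")}
    available_ids = [i for i in range(1, 256) if i not in existing_ids]

    final_nodes = {host: dict(node) for host, node in pillar_nodes.items()}
    for minion_id, ips in sorted(mine.items()):  # sorted order: an assumption
        hostname = minion_id.split("_")[0]
        if hostname in final_nodes or not ips:  # skipping empty IP lists: an assumption
            continue
        final_nodes[hostname] = {"nodeid": available_ids.pop(0), "ip": ips[0]}
    return final_nodes


if __name__ == "__main__":
    import json

    pillar = {"mgr": {"nodeid": 1, "ip": "10.0.0.10"}}  # hypothetical
    mine = {"mgr_manager": ["10.0.0.10"], "rcv1_receiver": ["10.0.0.30"]}
    # Serialized roughly the way the template emits `nodes: {{ final_nodes|tojson }}`.
    print(json.dumps(assign_kafka_ids(pillar, mine)))
```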

@@ -1,15 +1,16 @@
{% set node_types = {} %}
{% set cached_grains = salt.saltutil.runner('cache.grains', tgt='*') %}
{% for minionid, ip in salt.saltutil.runner(
'mine.get',
tgt='logstash:enabled:true',
tgt='G@role:so-manager or G@role:so-managersearch or G@role:so-standalone or G@role:so-searchnode or G@role:so-heavynode or G@role:so-receiver or G@role:so-fleet ',
fun='network.ip_addrs',
tgt_type='pillar') | dictsort()
tgt_type='compound') | dictsort()
%}

# only add a node to the pillar if it returned an ip from the mine
{% if ip | length > 0%}
{% set hostname = minionid.split('_') | first %}
{% set node_type = minionid.split('_') | last %}
{% set hostname = cached_grains[minionid]['host'] %}
{% set node_type = minionid.split('_')[1] %}
{% if node_type not in node_types.keys() %}
{% do node_types.update({node_type: {hostname: ip[0]}}) %}
{% else %}

@@ -1,34 +0,0 @@
{% set node_types = {} %}
{% for minionid, ip in salt.saltutil.runner(
'mine.get',
tgt='redis:enabled:true',
fun='network.ip_addrs',
tgt_type='pillar') | dictsort()
%}

# only add a node to the pillar if it returned an ip from the mine
{% if ip | length > 0%}
{% set hostname = minionid.split('_') | first %}
{% set node_type = minionid.split('_') | last %}
{% if node_type not in node_types.keys() %}
{% do node_types.update({node_type: {hostname: ip[0]}}) %}
{% else %}
{% if hostname not in node_types[node_type] %}
{% do node_types[node_type].update({hostname: ip[0]}) %}
{% else %}
{% do node_types[node_type][hostname].update(ip[0]) %}
{% endif %}
{% endif %}
{% endif %}
{% endfor %}

redis:
nodes:
{% for node_type, values in node_types.items() %}
{{node_type}}:
{% for hostname, ip in values.items() %}
{{hostname}}:
ip: {{ip}}
{% endfor %}
{% endfor %}

@@ -47,12 +47,10 @@ base:
- kibana.adv_kibana
- kratos.soc_kratos
- kratos.adv_kratos
- redis.nodes
- redis.soc_redis
- redis.adv_redis
- influxdb.soc_influxdb
- influxdb.adv_influxdb
- elasticsearch.nodes
- elasticsearch.soc_elasticsearch
- elasticsearch.adv_elasticsearch
- elasticfleet.soc_elasticfleet

@@ -149,12 +147,10 @@ base:
- idstools.adv_idstools
- kratos.soc_kratos
- kratos.adv_kratos
- redis.nodes
- redis.soc_redis
- redis.adv_redis
- influxdb.soc_influxdb
- influxdb.adv_influxdb
- elasticsearch.nodes
- elasticsearch.soc_elasticsearch
- elasticsearch.adv_elasticsearch
- elasticfleet.soc_elasticfleet

@@ -219,22 +215,17 @@ base:
- logstash.nodes
- logstash.soc_logstash
- logstash.adv_logstash
- elasticsearch.nodes
- elasticsearch.soc_elasticsearch
- elasticsearch.adv_elasticsearch
{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/elasticsearch/auth.sls') %}
- elasticsearch.auth
{% endif %}
- redis.nodes
- redis.soc_redis
- redis.adv_redis
- minions.{{ grains.id }}
- minions.adv_{{ grains.id }}
- stig.soc_stig
- soc.license
- kafka.nodes
- kafka.soc_kafka
- kafka.adv_kafka

'*_receiver':
- logstash.nodes

@@ -250,7 +241,6 @@ base:
- kafka.nodes
- kafka.soc_kafka
- kafka.adv_kafka
- soc.license

'*_import':
- secrets

14 pyci.sh

@@ -15,16 +15,12 @@ TARGET_DIR=${1:-.}

PATH=$PATH:/usr/local/bin

if [ ! -d .venv ]; then
python -m venv .venv
fi

source .venv/bin/activate

if ! pip install flake8 pytest pytest-cov pyyaml; then
echo "Unable to install dependencies."
if ! which pytest &> /dev/null || ! which flake8 &> /dev/null ; then
echo "Missing dependencies. Consider running the following command:"
echo " python -m pip install flake8 pytest pytest-cov"
exit 1
fi

pip install pytest pytest-cov
flake8 "$TARGET_DIR" "--config=${HOME_DIR}/pytest.ini"
python3 -m pytest "--cov-config=${HOME_DIR}/pytest.ini" "--cov=$TARGET_DIR" --doctest-modules --cov-report=term --cov-fail-under=100 "$TARGET_DIR"
python3 -m pytest "--cov-config=${HOME_DIR}/pytest.ini" "--cov=$TARGET_DIR" --doctest-modules --cov-report=term --cov-fail-under=100 "$TARGET_DIR"

@@ -65,7 +65,6 @@
'registry',
'manager',
'nginx',
'strelka.manager',
'soc',
'kratos',
'influxdb',

@@ -92,7 +91,6 @@
'nginx',
'telegraf',
'influxdb',
'strelka.manager',
'soc',
'kratos',
'elasticfleet',

@@ -114,7 +112,6 @@
'nginx',
'telegraf',
'influxdb',
'strelka.manager',
'soc',
'kratos',
'elastic-fleet-package-registry',

@@ -136,9 +133,7 @@
'firewall',
'schedule',
'docker_clean',
'stig',
'kafka.ca',
'kafka.ssl'
'stig'
],
'so-standalone': [
'salt.master',

@@ -197,7 +192,7 @@
'schedule',
'docker_clean',
'kafka',
'stig'
'elasticsearch.ca'
],
'so-desktop': [
'ssl',

@@ -1,3 +1,6 @@
mine_functions:
x509.get_pem_entries: [/etc/pki/ca.crt]

x509_signing_policies:
filebeat:
- minions: '*'

@@ -1,8 +1,3 @@
# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0.

{% if '2.4' in salt['cp.get_file_str']('/etc/soversion') %}

{% import_yaml '/opt/so/saltstack/local/pillar/global/soc_global.sls' as SOC_GLOBAL %}

@@ -20,8 +15,6 @@ remove_common_so-firewall:
file.absent:
- name: /opt/so/saltstack/default/salt/common/tools/sbin/so-firewall

# This section is used to put the scripts in place in the Salt file system
# in case a state run tries to overwrite what we do in the next section.
copy_so-common_common_tools_sbin:
file.copy:
- name: /opt/so/saltstack/default/salt/common/tools/sbin/so-common

@@ -50,21 +43,6 @@ copy_so-firewall_manager_tools_sbin:
- force: True
- preserve: True

copy_so-yaml_manager_tools_sbin:
file.copy:
- name: /opt/so/saltstack/default/salt/manager/tools/sbin/so-yaml.py
- source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-yaml.py
- force: True
- preserve: True

copy_so-repo-sync_manager_tools_sbin:
file.copy:
- name: /opt/so/saltstack/default/salt/manager/tools/sbin/so-repo-sync
- source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-repo-sync
- preserve: True

# This section is used to put the new script in place so that it can be called during soup.
# It is faster than calling the states that normally manage them to put them in place.
copy_so-common_sbin:
file.copy:
- name: /usr/sbin/so-common

@@ -100,13 +78,6 @@ copy_so-yaml_sbin:
- force: True
- preserve: True

copy_so-repo-sync_sbin:
file.copy:
- name: /usr/sbin/so-repo-sync
- source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-repo-sync
- force: True
- preserve: True

{% else %}
fix_23_soup_sbin:
cmd.run:

@@ -5,13 +5,8 @@
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0.

. /usr/sbin/so-common

cat << EOF

so-checkin will run a full salt highstate to apply all salt states. If a highstate is already running, this request will be queued and so it may pause for a few minutes before you see any more output. For more information about so-checkin and salt, please see:
https://docs.securityonion.net/en/2.4/salt.html

EOF

salt-call state.highstate -l info queue=True
salt-call state.highstate -l info

@@ -31,11 +31,6 @@ if ! echo "$PATH" | grep -q "/usr/sbin"; then
export PATH="$PATH:/usr/sbin"
fi

# See if a proxy is set. If so use it.
if [ -f /etc/profile.d/so-proxy.sh ]; then
. /etc/profile.d/so-proxy.sh
fi

# Define a banner to separate sections
banner="========================================================================="

@@ -184,21 +179,6 @@ copy_new_files() {
cd /tmp
}

create_local_directories() {
echo "Creating local pillar and salt directories if needed"
PILLARSALTDIR=$1
local_salt_dir="/opt/so/saltstack/local"
for i in "pillar" "salt"; do
for d in $(find $PILLARSALTDIR/$i -type d); do
suffixdir=${d//$PILLARSALTDIR/}
if [ ! -d "$local_salt_dir/$suffixdir" ]; then
mkdir -pv $local_salt_dir$suffixdir
fi
done
chown -R socore:socore $local_salt_dir/$i
done
}

disable_fastestmirror() {
sed -i 's/enabled=1/enabled=0/' /etc/yum/pluginconf.d/fastestmirror.conf
}
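`create_local_directories` mirrors every directory under the `pillar` and `salt` trees of the update source into `/opt/so/saltstack/local`. Below is a pathlib sketch of the same walk, with three differences. It takes both roots as parameters so it can run against a scratch directory. It omits the `chown -R socore:socore` step, which needs root and the `socore` account. It also uses `relative_to()` instead of `${d//$PILLARSALTDIR/}`; the shell expansion deletes every occurrence of the prefix string, not only the leading one.

```python
from pathlib import Path


def create_local_directories(src_root: Path, local_root: Path) -> list[Path]:
    """Mirror the directory tree of src_root/{pillar,salt} under local_root.

    Returns the directories that had to be created. Ownership changes
    (chown -R socore:socore) are intentionally left out of this sketch.
    """
    created: list[Path] = []
    for top in ("pillar", "salt"):
        base = src_root / top
        if not base.is_dir():
            continue
        # `find $PILLARSALTDIR/$i -type d` also lists the starting directory.
        # Sorting visits parents before their children.
        dirs = sorted([base, *(p for p in base.rglob("*") if p.is_dir())])
        for d in dirs:
            target = local_root / d.relative_to(src_root)
            if not target.is_dir():
                target.mkdir(parents=True, exist_ok=True)
                created.append(target)
    return created
```

A second call against the same roots returns an empty list, which mirrors the `if [ ! -d ... ]` guard in the shell function.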

@@ -201,10 +201,6 @@ if [[ $EXCLUDE_KNOWN_ERRORS == 'Y' ]]; then
EXCLUDED_ERRORS="$EXCLUDED_ERRORS|Unknown column" # Elastalert errors from running EQL queries
EXCLUDED_ERRORS="$EXCLUDED_ERRORS|parsing_exception" # Elastalert EQL parsing issue. Temp.
EXCLUDED_ERRORS="$EXCLUDED_ERRORS|context deadline exceeded"
EXCLUDED_ERRORS="$EXCLUDED_ERRORS|Error running query:" # Specific issues with detection rules
EXCLUDED_ERRORS="$EXCLUDED_ERRORS|detect-parse" # Suricata encountering a malformed rule
EXCLUDED_ERRORS="$EXCLUDED_ERRORS|integrity check failed" # Detections: Exclude false positive due to automated testing
EXCLUDED_ERRORS="$EXCLUDED_ERRORS|syncErrors" # Detections: Not an actual error
fi

RESULT=0
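Each `EXCLUDED_ERRORS="$EXCLUDED_ERRORS|..."` line adds one branch to an alternation. This hunk does not show how so-log-check uses the combined string. The sketch below assumes it is used as a regular expression and that lines matching it are ignored. It builds the pattern with `"|".join()`, so the result never starts with `|`. An empty leading branch would match every line in Python's `re`. The sample log lines are made up.

```python
import re

# Patterns appended to EXCLUDED_ERRORS in the hunk above.
KNOWN_ERRORS = [
    "Unknown column",             # Elastalert errors from running EQL queries
    "parsing_exception",          # Elastalert EQL parsing issue
    "context deadline exceeded",
    "Error running query:",       # specific issues with detection rules
    "detect-parse",               # Suricata encountering a malformed rule
    "integrity check failed",     # Detections false positive from automated testing
    "syncErrors",                 # Detections: not an actual error
]


def build_excluded(patterns: list[str]) -> str:
    # Equivalent to appending "|<pattern>" repeatedly, but join() never
    # emits a leading "|" (an empty branch that would match everything).
    return "|".join(patterns)


def unexpected(lines: list[str], excluded: str) -> list[str]:
    """Keep only lines that match none of the excluded patterns (assumed usage)."""
    rx = re.compile(excluded)
    return [line for line in lines if not rx.search(line)]


if __name__ == "__main__":
    log = [  # hypothetical log lines
        "ERROR context deadline exceeded while polling",
        "ERROR disk full",
        "detect-parse: bad rule",
    ]
    print(unexpected(log, build_excluded(KNOWN_ERRORS)))  # ['ERROR disk full']
```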

@@ -240,8 +236,6 @@ exclude_log "playbook.log" # Playbook is removed as of 2.4.70, logs may still be
exclude_log "mysqld.log" # MySQL is removed as of 2.4.70, logs may still be on disk
exclude_log "soctopus.log" # Soctopus is removed as of 2.4.70, logs may still be on disk
exclude_log "agentstatus.log" # ignore this log since it tracks agents in error state
exclude_log "detections_runtime-status_yara.log" # temporarily ignore this log until Detections is more stable
exclude_log "/nsm/kafka/data/" # ignore Kafka data directory from log check.

for log_file in $(cat /tmp/log_check_files); do
status "Checking log file $log_file"

@@ -1,98 +0,0 @@
#!/bin/bash
#
# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0."

set -e
# This script is intended to be used in the case the ISO install did not properly setup TPM decrypt for LUKS partitions at boot.
if [ -z $NOROOT ]; then
# Check for prerequisites
if [ "$(id -u)" -ne 0 ]; then
echo "This script must be run using sudo!"
exit 1
fi
fi
ENROLL_TPM=N

while [[ $# -gt 0 ]]; do
case $1 in
--enroll-tpm)
ENROLL_TPM=Y
;;
*)
echo "Usage: $0 [options]"
echo ""
echo "where options are:"
echo " --enroll-tpm for when TPM enrollment was not selected during ISO install."
echo ""
exit 1
;;
esac
shift
done

check_for_tpm() {
echo -n "Checking for TPM: "
if [ -d /sys/class/tpm/tpm0 ]; then
echo -e "tpm0 found."
TPM="yes"
# Check if TPM is using sha1 or sha256
if [ -d /sys/class/tpm/tpm0/pcr-sha1 ]; then
echo -e "TPM is using sha1.\n"
TPM_PCR="sha1"
elif [ -d /sys/class/tpm/tpm0/pcr-sha256 ]; then
echo -e "TPM is using sha256.\n"
TPM_PCR="sha256"
fi
else
echo -e "No TPM found.\n"
exit 1
fi
}

check_for_luks_partitions() {
echo "Checking for LUKS partitions"
for part in $(lsblk -o NAME,FSTYPE -ln | grep crypto_LUKS | awk '{print $1}'); do
echo "Found LUKS partition: $part"
LUKS_PARTITIONS+=("$part")
done
if [ ${#LUKS_PARTITIONS[@]} -eq 0 ]; then
echo -e "No LUKS partitions found.\n"
exit 1
fi
echo ""
}

enroll_tpm_in_luks() {
read -s -p "Enter the LUKS passphrase used during ISO install: " LUKS_PASSPHRASE
echo ""
for part in "${LUKS_PARTITIONS[@]}"; do
echo "Enrolling TPM for LUKS device: /dev/$part"
if [ "$TPM_PCR" == "sha1" ]; then
clevis luks bind -d /dev/$part tpm2 '{"pcr_bank":"sha1","pcr_ids":"7"}' <<< $LUKS_PASSPHRASE
elif [ "$TPM_PCR" == "sha256" ]; then
clevis luks bind -d /dev/$part tpm2 '{"pcr_bank":"sha256","pcr_ids":"7"}' <<< $LUKS_PASSPHRASE
fi
done
}

regenerate_tpm_enrollment_token() {
for part in "${LUKS_PARTITIONS[@]}"; do
clevis luks regen -d /dev/$part -s 1 -q
done
}

check_for_tpm
check_for_luks_partitions

if [[ $ENROLL_TPM == "Y" ]]; then
enroll_tpm_in_luks
else
regenerate_tpm_enrollment_token
fi

echo "Running dracut"
dracut -fv
echo -e "\nTPM configuration complete. Reboot the system to verify the TPM is correctly decrypting the LUKS partition(s) at boot.\n"
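The removed helper picks a PCR bank from sysfs and passes clevis a JSON policy such as `{"pcr_bank":"sha256","pcr_ids":"7"}`. The Python sketch below reproduces only those two decisions. The TPM directory is a parameter, so the function can be exercised without hardware. The sketch builds the `clevis luks bind` argument list but does not run it. `detect_pcr_bank` returns `None` in two cases: when there is no TPM, and when neither `pcr-sha1` nor `pcr-sha256` exists. The shell script exits in the first case. In the second, it leaves `TPM_PCR` empty, so the `--enroll-tpm` path binds nothing and prints no warning.

```python
import json
from pathlib import Path
from typing import Optional


def detect_pcr_bank(tpm_dir: Path = Path("/sys/class/tpm/tpm0")) -> Optional[str]:
    """Return "sha1" or "sha256" using the script's sysfs checks, else None."""
    if not tpm_dir.is_dir():
        return None  # the script prints "No TPM found." and exits here
    for bank in ("sha1", "sha256"):  # sha1 is checked first, as in the script
        if (tpm_dir / f"pcr-{bank}").is_dir():
            return bank
    return None  # the script leaves TPM_PCR unset in this case


def clevis_tpm2_config(bank: str, pcr_ids: str = "7") -> str:
    # Compact JSON identical to the literal policy strings in the script.
    return json.dumps({"pcr_bank": bank, "pcr_ids": pcr_ids}, separators=(",", ":"))


def bind_command(partition: str, bank: str) -> list[str]:
    """Argument list for `clevis luks bind`; the script feeds the passphrase on stdin."""
    return ["clevis", "luks", "bind", "-d", f"/dev/{partition}", "tpm2",
            clevis_tpm2_config(bank)]
```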

@@ -10,7 +10,7 @@
. /usr/sbin/so-common
. /usr/sbin/so-image-common

REPLAYIFACE=${REPLAYIFACE:-"{{salt['pillar.get']('sensor:interface', '')}}"}
REPLAYIFACE=${REPLAYIFACE:-$(lookup_pillar interface sensor)}
REPLAYSPEED=${REPLAYSPEED:-10}

mkdir -p /opt/so/samples

@@ -89,7 +89,6 @@ function suricata() {
-v ${LOG_PATH}:/var/log/suricata/:rw \
-v ${NSM_PATH}/:/nsm/:rw \
-v "$PCAP:/input.pcap:ro" \
-v /dev/null:/nsm/suripcap:rw \
-v /opt/so/conf/suricata/bpf:/etc/suricata/bpf:ro \
{{ MANAGER }}:5000/{{ IMAGEREPO }}/so-suricata:{{ VERSION }} \
--runmode single -k none -r /input.pcap > $LOG_PATH/console.log 2>&1

@@ -248,7 +247,7 @@ fi
START_OLDEST_SLASH=$(echo $START_OLDEST | sed -e 's/-/%2F/g')
END_NEWEST_SLASH=$(echo $END_NEWEST | sed -e 's/-/%2F/g')
if [[ $VALID_PCAPS_COUNT -gt 0 ]] || [[ $SKIPPED_PCAPS_COUNT -gt 0 ]]; then
URL="https://{{ URLBASE }}/#/dashboards?q=$HASH_FILTERS%20%7C%20groupby%20event.module*%20%7C%20groupby%20-sankey%20event.module*%20event.dataset%20%7C%20groupby%20event.dataset%20%7C%20groupby%20source.ip%20%7C%20groupby%20destination.ip%20%7C%20groupby%20destination.port%20%7C%20groupby%20network.protocol%20%7C%20groupby%20rule.name%20rule.category%20event.severity_label%20%7C%20groupby%20dns.query.name%20%7C%20groupby%20file.mime_type%20%7C%20groupby%20http.virtual_host%20http.uri%20%7C%20groupby%20notice.note%20notice.message%20notice.sub_message%20%7C%20groupby%20ssl.server_name%20%7C%20groupby%20source_geo.organization_name%20source.geo.country_name%20%7C%20groupby%20destination_geo.organization_name%20destination.geo.country_name&t=${START_OLDEST_SLASH}%2000%3A00%3A00%20AM%20-%20${END_NEWEST_SLASH}%2000%3A00%3A00%20AM&z=UTC"
URL="https://{{ URLBASE }}/#/dashboards?q=$HASH_FILTERS%20%7C%20groupby%20-sankey%20event.dataset%20event.category%2a%20%7C%20groupby%20-pie%20event.category%20%7C%20groupby%20-bar%20event.module%20%7C%20groupby%20event.dataset%20%7C%20groupby%20event.module%20%7C%20groupby%20event.category%20%7C%20groupby%20observer.name%20%7C%20groupby%20source.ip%20%7C%20groupby%20destination.ip%20%7C%20groupby%20destination.port&t=${START_OLDEST_SLASH}%2000%3A00%3A00%20AM%20-%20${END_NEWEST_SLASH}%2000%3A00%3A00%20AM&z=UTC"

status "Import complete!"
status
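Both `URL=` variants percent-encode the dashboard query and time range by hand. The `sed 's/-/%2F/g'` step turns `YYYY-MM-DD` into `YYYY%2FMM%2FDD`. A sketch of the same construction with `urllib.parse.quote` follows. The host, query and dates in the example are placeholders, and `HASH_FILTERS` is folded into the query argument. `quote` writes `*` as `%2A`, which is equivalent to the `%2a` in the second variant.

```python
from urllib.parse import quote


def dashboards_url(urlbase: str, query: str, start_date: str, end_date: str,
                   tz: str = "UTC") -> str:
    """Build a Dashboards link shaped like the script's URL= line.

    start_date/end_date are "YYYY-MM-DD" strings, like START_OLDEST/END_NEWEST.
    """
    start = start_date.replace("-", "/")
    end = end_date.replace("-", "/")
    time_range = f"{start} 00:00:00 AM - {end} 00:00:00 AM"
    # safe="" makes quote() encode "/" as %2F, matching the sed step.
    return (f"https://{urlbase}/#/dashboards?q={quote(query, safe='')}"
            f"&t={quote(time_range, safe='')}&z={quote(tz, safe='')}")


if __name__ == "__main__":
    print(dashboards_url("so.example",  # placeholder host
                         "groupby -pie event.category | groupby source.ip",
                         "2024-07-25", "2024-07-26"))
```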

@@ -180,8 +180,6 @@ docker:
custom_bind_mounts: []
extra_hosts: []
extra_env: []
ulimits:
- memlock=524288000
'so-zeek':
final_octet: 99
custom_bind_mounts: []

@@ -192,7 +190,6 @@ docker:
port_bindings:
- 0.0.0.0:9092:9092
- 0.0.0.0:9093:9093
- 0.0.0.0:8778:8778
custom_bind_mounts: []
extra_hosts: []
extra_env: []

@@ -20,30 +20,30 @@ dockergroup:
dockerheldpackages:
pkg.installed:
- pkgs:
- containerd.io: 1.6.33-1
- docker-ce: 5:26.1.4-1~debian.12~bookworm
- docker-ce-cli: 5:26.1.4-1~debian.12~bookworm
- docker-ce-rootless-extras: 5:26.1.4-1~debian.12~bookworm
- containerd.io: 1.6.21-1
- docker-ce: 5:24.0.3-1~debian.12~bookworm
- docker-ce-cli: 5:24.0.3-1~debian.12~bookworm
- docker-ce-rootless-extras: 5:24.0.3-1~debian.12~bookworm
- hold: True
- update_holds: True
{% elif grains.oscodename == 'jammy' %}
dockerheldpackages:
pkg.installed:
- pkgs:
- containerd.io: 1.6.33-1
- docker-ce: 5:26.1.4-1~ubuntu.22.04~jammy
- docker-ce-cli: 5:26.1.4-1~ubuntu.22.04~jammy
- docker-ce-rootless-extras: 5:26.1.4-1~ubuntu.22.04~jammy
- containerd.io: 1.6.21-1
- docker-ce: 5:24.0.2-1~ubuntu.22.04~jammy
- docker-ce-cli: 5:24.0.2-1~ubuntu.22.04~jammy
- docker-ce-rootless-extras: 5:24.0.2-1~ubuntu.22.04~jammy
- hold: True
- update_holds: True
{% else %}
dockerheldpackages:
pkg.installed:
- pkgs:
- containerd.io: 1.6.33-1
- docker-ce: 5:26.1.4-1~ubuntu.20.04~focal
- docker-ce-cli: 5:26.1.4-1~ubuntu.20.04~focal
- docker-ce-rootless-extras: 5:26.1.4-1~ubuntu.20.04~focal
- containerd.io: 1.4.9-1
- docker-ce: 5:20.10.8~3-0~ubuntu-focal
- docker-ce-cli: 5:20.10.5~3-0~ubuntu-focal
- docker-ce-rootless-extras: 5:20.10.5~3-0~ubuntu-focal
- hold: True
- update_holds: True
{% endif %}

@@ -51,10 +51,10 @@ dockerheldpackages:
dockerheldpackages:
pkg.installed:
- pkgs:
- containerd.io: 1.6.33-3.1.el9
- docker-ce: 3:26.1.4-1.el9
- docker-ce-cli: 1:26.1.4-1.el9
- docker-ce-rootless-extras: 26.1.4-1.el9
- containerd.io: 1.6.21-3.1.el9
- docker-ce: 24.0.4-1.el9
- docker-ce-cli: 24.0.4-1.el9
- docker-ce-rootless-extras: 24.0.4-1.el9
- hold: True
- update_holds: True
{% endif %}

@@ -63,42 +63,6 @@ docker:
so-elastic-agent: *dockerOptions
so-telegraf: *dockerOptions
so-steno: *dockerOptions
so-suricata:
final_octet:
description: Last octet of the container IP address.
helpLink: docker.html
readonly: True
advanced: True
global: True
port_bindings:
description: List of port bindings for the container.
helpLink: docker.html
advanced: True
multiline: True
forcedType: "[]string"
custom_bind_mounts:
description: List of custom local volume bindings.
advanced: True
helpLink: docker.html
multiline: True
forcedType: "[]string"
extra_hosts:
description: List of additional host entries for the container.
advanced: True
helpLink: docker.html
multiline: True
forcedType: "[]string"
extra_env:
description: List of additional ENV entries for the container.
advanced: True
helpLink: docker.html
multiline: True
forcedType: "[]string"
ulimits:
description: Ulimits for the container, in bytes.
advanced: True
helpLink: docker.html
multiline: True
forcedType: "[]string"
so-suricata: *dockerOptions
so-zeek: *dockerOptions
so-kafka: *dockerOptions

@@ -82,36 +82,6 @@ elastasomodulesync:
- group: 933
- makedirs: True

elastacustomdir:
file.directory:
- name: /opt/so/conf/elastalert/custom
- user: 933
- group: 933
- makedirs: True

elastacustomsync:
file.recurse:
- name: /opt/so/conf/elastalert/custom
- source: salt://elastalert/files/custom
- user: 933
- group: 933
- makedirs: True
- file_mode: 660
- show_changes: False

elastapredefinedsync:
file.recurse:
- name: /opt/so/conf/elastalert/predefined
- source: salt://elastalert/files/predefined
- user: 933
- group: 933
- makedirs: True
- template: jinja
- file_mode: 660
- context:
elastalert: {{ ELASTALERTMERGED }}
- show_changes: False

elastaconf:
file.managed:
- name: /opt/so/conf/elastalert/elastalert_config.yaml

@@ -1,6 +1,5 @@
elastalert:
enabled: False
alerter_parameters: ""
config:
rules_folder: /opt/elastalert/rules/
scan_subdirectories: true

@@ -30,8 +30,6 @@ so-elastalert:
- /opt/so/rules/elastalert:/opt/elastalert/rules/:ro
- /opt/so/log/elastalert:/var/log/elastalert:rw
- /opt/so/conf/elastalert/modules/:/opt/elastalert/modules/:ro
- /opt/so/conf/elastalert/predefined/:/opt/elastalert/predefined/:ro
- /opt/so/conf/elastalert/custom/:/opt/elastalert/custom/:ro
- /opt/so/conf/elastalert/elastalert_config.yaml:/opt/elastalert/config.yaml:ro
{% if DOCKER.containers['so-elastalert'].custom_bind_mounts %}
{% for BIND in DOCKER.containers['so-elastalert'].custom_bind_mounts %}

@@ -1 +0,0 @@
THIS IS A PLACEHOLDER FILE

38

salt/elastalert/files/modules/so/playbook-es.py

Normal file

@@ -0,0 +1,38 @@
# -*- coding: utf-8 -*-

# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0.


from time import gmtime, strftime
import requests,json
from elastalert.alerts import Alerter

import urllib3
urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

class PlaybookESAlerter(Alerter):
    """
    Use matched data to create alerts in elasticsearch
    """

    required_options = set(['play_title','play_url','sigma_level'])

    def alert(self, matches):
        for match in matches:
            today = strftime("%Y.%m.%d", gmtime())
            timestamp = strftime("%Y-%m-%d"'T'"%H:%M:%S"'.000Z', gmtime())
            headers = {"Content-Type": "application/json"}

            creds = None
            if 'es_username' in self.rule and 'es_password' in self.rule:
                creds = (self.rule['es_username'], self.rule['es_password'])

            payload = {"tags":"alert","rule": { "name": self.rule['play_title'],"case_template": self.rule['play_id'],"uuid": self.rule['play_id'],"category": self.rule['rule.category']},"event":{ "severity": self.rule['event.severity'],"module": self.rule['event.module'],"dataset": self.rule['event.dataset'],"severity_label": self.rule['sigma_level']},"kibana_pivot": self.rule['kibana_pivot'],"soc_pivot": self.rule['soc_pivot'],"play_url": self.rule['play_url'],"sigma_level": self.rule['sigma_level'],"event_data": match, "@timestamp": timestamp}
            url = f"https://{self.rule['es_host']}:{self.rule['es_port']}/logs-playbook.alerts-so/_doc/"
            requests.post(url, data=json.dumps(payload), headers=headers, verify=False, auth=creds)

    def get_info(self):
        return {'type': 'PlaybookESAlerter'}
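
Both alerter modules in this compare build `@timestamp` with `strftime("%Y-%m-%d"'T'"%H:%M:%S"'.000Z', gmtime())`. Python joins adjacent string literals at compile time, so the effective format is `%Y-%m-%dT%H:%M:%S.000Z`. That is a UTC ISO-8601 time with the milliseconds hard-coded to `.000`. The minimal sketch below reproduces the timestamp and the `_doc` URL the alerters post to. The names `es_timestamp` and `index_url` are hypothetical helpers, and the host and port in the usage line are placeholders, not values taken from this repository.

```python
from time import gmtime, strftime

# "%Y-%m-%d" 'T' "%H:%M:%S" '.000Z' are adjacent literals, joined at compile time.
TS_FORMAT = "%Y-%m-%d" 'T' "%H:%M:%S" '.000Z'


def es_timestamp(secs=None):
    """Return a UTC timestamp shaped like the alerters' @timestamp; None means now."""
    return strftime(TS_FORMAT, gmtime(secs))


def index_url(es_host, es_port, target):
    """Build the document-index URL used with requests.post (hypothetical helper)."""
    return f"https://{es_host}:{es_port}/{target}/_doc/"


if __name__ == "__main__":
    print(es_timestamp(0))  # 1970-01-01T00:00:00.000Z
    print(index_url("es.example.internal", 9200, "logs-detections.alerts-so"))
```

Because the milliseconds are fixed, any two alerts written within the same second carry an identical `@timestamp`.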

@@ -1,63 +0,0 @@
# -*- coding: utf-8 -*-

# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0.


from time import gmtime, strftime
import requests,json
from elastalert.alerts import Alerter

import urllib3
urllib3.disable_warnings(urllib3.exceptions.InsecureRequestWarning)

class SecurityOnionESAlerter(Alerter):
    """
    Use matched data to create alerts in Elasticsearch.
    """

    required_options = set(['detection_title', 'sigma_level'])
    optional_fields = ['sigma_category', 'sigma_product', 'sigma_service']

    def alert(self, matches):
        for match in matches:
            timestamp = strftime("%Y-%m-%d"'T'"%H:%M:%S"'.000Z', gmtime())
            headers = {"Content-Type": "application/json"}

            creds = None
            if 'es_username' in self.rule and 'es_password' in self.rule:
                creds = (self.rule['es_username'], self.rule['es_password'])

            # Start building the rule dict
            rule_info = {
                "name": self.rule['detection_title'],
                "uuid": self.rule['detection_public_id']
            }

            # Add optional fields if they are present in the rule
            for field in self.optional_fields:
                rule_key = field.split('_')[-1] # Assumes field format "sigma_<key>"
                if field in self.rule:
                    rule_info[rule_key] = self.rule[field]

            # Construct the payload with the conditional rule_info
            payload = {
                "tags": "alert",
                "rule": rule_info,
                "event": {
                    "severity": self.rule['event.severity'],
                    "module": self.rule['event.module'],
                    "dataset": self.rule['event.dataset'],
                    "severity_label": self.rule['sigma_level']
                },
                "sigma_level": self.rule['sigma_level'],
                "event_data": match,
                "@timestamp": timestamp
            }
            url = f"https://{self.rule['es_host']}:{self.rule['es_port']}/logs-detections.alerts-so/_doc/"
            requests.post(url, data=json.dumps(payload), headers=headers, verify=False, auth=creds)

    def get_info(self):
        return {'type': 'SecurityOnionESAlerter'}
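
`SecurityOnionESAlerter` copies each `sigma_*` option that is present into the alert's `rule` object, keyed by the text after the last underscore. A standalone sketch of that step follows; `build_rule_info` is a hypothetical name. As written, the alerter also reads `self.rule['detection_public_id']` and the `event.*` keys directly, but only `detection_title` and `sigma_level` appear in `required_options`. A rule missing any of those other keys would therefore fail with `KeyError` when an alert fires, not when the rule loads.

```python
def build_rule_info(rule, optional_fields=("sigma_category", "sigma_product", "sigma_service")):
    """Return the alert's rule object: name/uuid plus any optional sigma_* fields present."""
    rule_info = {
        "name": rule["detection_title"],
        "uuid": rule["detection_public_id"],
    }
    for field in optional_fields:
        # Same derivation as the alerter: "sigma_category" -> "category".
        # field.removeprefix("sigma_") (Python 3.9+) would also keep multi-word suffixes intact.
        rule_key = field.split("_")[-1]
        if field in rule:
            rule_info[rule_key] = rule[field]
    return rule_info


if __name__ == "__main__":
    rule = {
        "detection_title": "Suspicious PowerShell",
        "detection_public_id": "abc-123",
        "sigma_category": "process_creation",
    }
    print(build_rule_info(rule))
    # {'name': 'Suspicious PowerShell', 'uuid': 'abc-123', 'category': 'process_creation'}
```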

@@ -1,6 +0,0 @@
{% if elastalert.get('jira_user', '') | length > 0 and elastalert.get('jira_pass', '') | length > 0 %}
user: {{ elastalert.jira_user }}
password: {{ elastalert.jira_pass }}
{% else %}
apikey: {{ elastalert.get('jira_api_key', '') }}
{% endif %}
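
The `jira_auth.yaml` template above writes a username and password only when both are non-empty. Otherwise it falls back to `apikey`, which renders as an empty value if no API key is configured either. Below is a Python sketch of the same decision. It returns a dict instead of rendered YAML, and `jira_auth` is a hypothetical name.

```python
def jira_auth(elastalert):
    """Return the key/value pairs the jira_auth.yaml template would render."""
    user = elastalert.get("jira_user", "")
    password = elastalert.get("jira_pass", "")
    if len(user) > 0 and len(password) > 0:
        return {"user": user, "password": password}
    # Mirrors the {% else %} branch: the API key, possibly an empty string.
    return {"apikey": elastalert.get("jira_api_key", "")}


if __name__ == "__main__":
    print(jira_auth({"jira_user": "analyst"}))  # {'apikey': ''}
```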

@@ -1,2 +0,0 @@
user: {{ elastalert.get('smtp_user', '') }}
password: {{ elastalert.get('smtp_pass', '') }}

@@ -13,19 +13,3 @@
{% do ELASTALERTDEFAULTS.elastalert.config.update({'es_password': pillar.elasticsearch.auth.users.so_elastic_user.pass}) %}

{% set ELASTALERTMERGED = salt['pillar.get']('elastalert', ELASTALERTDEFAULTS.elastalert, merge=True) %}

{% if 'ntf' in salt['pillar.get']('features', []) %}
{% set params = ELASTALERTMERGED.get('alerter_parameters', '') | load_yaml %}
{% if params != None and params | length > 0 %}
{% do ELASTALERTMERGED.config.update(params) %}
{% endif %}

{% if ELASTALERTMERGED.get('smtp_user', '') | length > 0 %}
{% do ELASTALERTMERGED.config.update({'smtp_auth_file': '/opt/elastalert/predefined/smtp_auth.yaml'}) %}
{% endif %}

{% if ELASTALERTMERGED.get('jira_user', '') | length > 0 or ELASTALERTMERGED.get('jira_key', '') | length > 0 %}
{% do ELASTALERTMERGED.config.update({'jira_account_file': '/opt/elastalert/predefined/jira_auth.yaml'}) %}
{% endif %}

{% endif %}
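
In `map.jinja`, the alerter settings apply only when the `ntf` feature is present. In that case the parsed `alerter_parameters` YAML is merged into `config`, and the SMTP and Jira credential files are referenced when the matching credentials are set. The sketch below mirrors that logic, assuming the YAML has already been parsed into a dict. `apply_alerter_settings` is a hypothetical name. It deliberately keeps the template's check of `jira_key`, which differs from the `jira_api_key` name used by the annotation file and by `jira_auth.yaml`. As the template is written, configuring only a Jira API key would therefore not set `jira_account_file`.

```python
def apply_alerter_settings(merged, features, params):
    """Sketch of the map.jinja logic; params stands in for alerter_parameters after load_yaml."""
    config = merged.setdefault("config", {})
    if "ntf" not in features:
        return merged
    if params:  # None or an empty mapping leaves config untouched
        config.update(params)
    if len(merged.get("smtp_user", "")) > 0:
        config["smtp_auth_file"] = "/opt/elastalert/predefined/smtp_auth.yaml"
    # Kept as in the template: it tests "jira_key", not "jira_api_key".
    if len(merged.get("jira_user", "")) > 0 or len(merged.get("jira_key", "")) > 0:
        config["jira_account_file"] = "/opt/elastalert/predefined/jira_auth.yaml"
    return merged
```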

@@ -2,99 +2,6 @@ elastalert:
enabled:
description: You can enable or disable Elastalert.
helpLink: elastalert.html
alerter_parameters:
title: Alerter Parameters
description: Optional configuration parameters for additional alerters that can be enabled for all Sigma rules. Filter for 'Alerter' in this Configuration screen to find the setting that allows these alerters to be enabled within the SOC ElastAlert module. Use YAML format for these parameters, and reference the ElastAlert 2 documentation, located at https://elastalert2.readthedocs.io, for available alerters and their required configuration parameters. A full update of the ElastAlert rule engine, via the Detections screen, is required in order to apply these changes. Requires a valid Security Onion license key.
global: True
multiline: True
syntax: yaml
helpLink: elastalert.html
forcedType: string
jira_api_key:
title: Jira API Key
description: Optional configuration parameter for Jira API Key, used instead of the Jira username and password. Requires a valid Security Onion license key.
global: True
sensitive: True
helpLink: elastalert.html
forcedType: string
jira_pass:
title: Jira Password
description: Optional configuration parameter for Jira password. Requires a valid Security Onion license key.
global: True
sensitive: True
helpLink: elastalert.html
forcedType: string
jira_user:
title: Jira Username
description: Optional configuration parameter for Jira username. Requires a valid Security Onion license key.
global: True
helpLink: elastalert.html
forcedType: string
smtp_pass:
title: SMTP Password
description: Optional configuration parameter for SMTP password, required for authenticating email servers. Requires a valid Security Onion license key.
global: True
sensitive: True
helpLink: elastalert.html
forcedType: string
smtp_user:
title: SMTP Username
description: Optional configuration parameter for SMTP username, required for authenticating email servers. Requires a valid Security Onion license key.
global: True
helpLink: elastalert.html
forcedType: string
files:
custom:
alertmanager_ca__crt:
description: Optional custom Certificate Authority for connecting to an AlertManager server. To utilize this custom file, the alertmanager_ca_certs key must be set to /opt/elastalert/custom/alertmanager_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
global: True
file: True
helpLink: elastalert.html
gelf_ca__crt:
description: Optional custom Certificate Authority for connecting to a Graylog server. To utilize this custom file, the graylog_ca_certs key must be set to /opt/elastalert/custom/gelf_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
global: True
file: True
helpLink: elastalert.html
http_post_ca__crt:
description: Optional custom Certificate Authority for connecting to a generic HTTP server, via the legacy HTTP POST alerter. To utilize this custom file, the http_post_ca_certs key must be set to /opt/elastalert/custom/http_post_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
global: True
file: True
helpLink: elastalert.html
http_post2_ca__crt:
description: Optional custom Certificate Authority for connecting to a generic HTTP server, via the newer HTTP POST 2 alerter. To utilize this custom file, the http_post2_ca_certs key must be set to /opt/elastalert/custom/http_post2_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
global: True
file: True
helpLink: elastalert.html
ms_teams_ca__crt:
description: Optional custom Certificate Authority for connecting to a Microsoft Teams server. To utilize this custom file, the ms_teams_ca_certs key must be set to /opt/elastalert/custom/ms_teams_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
global: True
file: True
helpLink: elastalert.html
pagerduty_ca__crt:
description: Optional custom Certificate Authority for connecting to a PagerDuty server. To utilize this custom file, the pagerduty_ca_certs key must be set to /opt/elastalert/custom/pagerduty_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
global: True
file: True
helpLink: elastalert.html
rocket_chat_ca__crt:
description: Optional custom Certificate Authority for connecting to a Rocket.Chat server. To utilize this custom file, the rocket_chat_ca_certs key must be set to /opt/elastalert/custom/rocket_chat_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
global: True
file: True
helpLink: elastalert.html
smtp__crt:
description: Optional custom certificate for connecting to an SMTP server. To utilize this custom file, the smtp_cert_file key must be set to /opt/elastalert/custom/smtp.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
global: True
file: True
helpLink: elastalert.html
smtp__key:
description: Optional custom certificate key for connecting to an SMTP server. To utilize this custom file, the smtp_key_file key must be set to /opt/elastalert/custom/smtp.key in the Alerter Parameters setting. Requires a valid Security Onion license key.
global: True
file: True
helpLink: elastalert.html
slack_ca__crt:
description: Optional custom Certificate Authority for connecting to Slack. To utilize this custom file, the slack_ca_certs key must be set to /opt/elastalert/custom/slack_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
global: True
file: True
helpLink: elastalert.html
config:
disable_rules_on_error:
description: Disable rules on failure.
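
The `files: custom:` keys appear to follow a naming convention in which a double underscore stands for the dot before the file extension. The descriptions pair `smtp__crt` with `/opt/elastalert/custom/smtp.crt` and `slack_ca__crt` with `/opt/elastalert/custom/slack_ca.crt`. Assuming that convention holds for every key (an inference from these descriptions, not a documented rule), here is a sketch of the mapping. `custom_file_path` is a hypothetical helper.

```python
CUSTOM_DIR = "/opt/elastalert/custom"


def custom_file_path(setting_key):
    """Map an annotation key such as 'smtp__key' to the path quoted in its description."""
    # Assumed convention: "__" encodes the "." before the file extension.
    filename = setting_key.replace("__", ".")
    return f"{CUSTOM_DIR}/{filename}"


if __name__ == "__main__":
    print(custom_file_path("smtp__crt"))  # /opt/elastalert/custom/smtp.crt
```

The resulting path is the value the descriptions say to place under the matching alerter key in Alerter Parameters, for example `smtp_cert_file`.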

@@ -37,7 +37,6 @@ elasticfleet:
- azure
- barracuda
- carbonblack_edr
- cef
- checkpoint
- cisco_asa
- cisco_duo

@@ -119,8 +118,3 @@ elasticfleet:
base_url: https://api.platform.sublimesecurity.com
poll_interval: 5m
limit: 100
kismet:
base_url: http://localhost:2501
poll_interval: 1m
api_key:
enabled_nodes: []

@@ -27,9 +27,7 @@ wait_for_elasticsearch_elasticfleet:
so-elastic-fleet-auto-configure-logstash-outputs:
cmd.run:
- name: /usr/sbin/so-elastic-fleet-outputs-update
- retry:
attempts: 4
interval: 30
- retry: True
{% endif %}

# If enabled, automatically update Fleet Server URLs & ES Connection
@@ -37,9 +35,7 @@ so-elastic-fleet-auto-configure-logstash-outputs:
so-elastic-fleet-auto-configure-server-urls:
cmd.run:
- name: /usr/sbin/so-elastic-fleet-urls-update
- retry:
attempts: 4
interval: 30
- retry: True
{% endif %}

# Automatically update Fleet Server Elasticsearch URLs & Agent Artifact URLs
@@ -47,16 +43,12 @@ so-elastic-fleet-auto-configure-server-urls:
so-elastic-fleet-auto-configure-elasticsearch-urls:
cmd.run:
- name: /usr/sbin/so-elastic-fleet-es-url-update
- retry:
attempts: 4
interval: 30
- retry: True

so-elastic-fleet-auto-configure-artifact-urls:
cmd.run:
- name: /usr/sbin/so-elastic-fleet-artifacts-url-update
- retry:
attempts: 4
interval: 30
- retry: True

{% endif %}
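
Each `cmd.run` state above uses Salt's `retry` option. One side of the diff sets `attempts: 4` and `interval: 30` explicitly. The other uses `retry: True`, which relies on Salt's defaults; check the Salt documentation for the values in your release. The plain-Python sketch below approximates the explicit form to make the timing concrete. It is not Salt's implementation, and `run_with_retry` is a hypothetical name. In this model, a command that never succeeds makes four attempts and spends three 30-second waits (90 seconds) sleeping between them.

```python
import time


def run_with_retry(action, attempts=4, interval=30, sleep=time.sleep):
    """Call action() until it returns True or attempts run out.

    Returns (succeeded, calls_made). Approximates retry: {attempts: 4, interval: 30};
    Salt's own retry also supports `until` and `splay`, which are omitted here.
    """
    for attempt in range(1, attempts + 1):
        if action():
            return True, attempt
        if attempt < attempts:
            sleep(interval)  # no wait after the final attempt
    return False, attempts
```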

@@ -1,36 +0,0 @@
{% from 'elasticfleet/map.jinja' import ELASTICFLEETMERGED %}
{% raw %}
{
"package": {
"name": "httpjson",
"version": ""
},
"name": "kismet-logs",
"namespace": "so",
"description": "Kismet Logs",
"policy_id": "FleetServer_{% endraw %}{{ NAME }}{% raw %}",
"inputs": {
"generic-httpjson": {
"enabled": true,
"streams": {
"httpjson.generic": {
"enabled": true,
"vars": {
"data_stream.dataset": "kismet",
"request_url": "{% endraw %}{{ ELASTICFLEETMERGED.optional_integrations.kismet.base_url }}{% raw %}/devices/last-time/-600/devices.tjson",
"request_interval": "{% endraw %}{{ ELASTICFLEETMERGED.optional_integrations.kismet.poll_interval }}{% raw %}",
"request_method": "GET",
"request_transforms": "- set:\r\n target: header.Cookie\r\n value: 'KISMET={% endraw %}{{ ELASTICFLEETMERGED.optional_integrations.kismet.api_key }}{% raw %}'",
"request_redirect_headers_ban_list": [],
"oauth_scopes": [],
"processors": "",
"tags": [],
"pipeline": "kismet.common"
}
}
}
}
},
"force": true
}
{% endraw %}
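
The Kismet policy polls `<base_url>/devices/last-time/-600/devices.tjson` and sends the API key as a `KISMET` cookie through its request transform. Our understanding of Kismet's REST API is that a negative `last-time` value counts seconds back from now. On that reading, `-600` asks for devices seen in the last 10 minutes, which matches the `poll_interval` annotation. The sketch below assembles the same request pieces. `kismet_request` and its `window_seconds` parameter are hypothetical. Unlike the template, it strips a trailing slash from `base_url`, so a value such as `http://localhost:2501/` does not produce a double slash.

```python
def kismet_request(base_url, api_key, window_seconds=600):
    """Return (url, headers) for a recent-devices poll shaped like the Kismet policy."""
    # Negative last-time value: seconds before now (assumed Kismet semantics).
    url = f"{base_url.rstrip('/')}/devices/last-time/-{window_seconds}/devices.tjson"
    # The policy's request transform sets the API key as the KISMET cookie.
    headers = {"Cookie": f"KISMET={api_key}"}
    return url, headers


if __name__ == "__main__":
    print(kismet_request("http://localhost:2501", "example-key"))
```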

@@ -1,35 +0,0 @@
{
"policy_id": "so-grid-nodes_general",
"package": {
"name": "log",
"version": ""
},
"name": "soc-detections-logs",
"description": "Security Onion Console - Detections Logs",
"namespace": "so",
"inputs": {
"logs-logfile": {
"enabled": true,
"streams": {
"log.logs": {
"enabled": true,
"vars": {
"paths": [
"/opt/so/log/soc/detections_runtime-status_sigma.log",
"/opt/so/log/soc/detections_runtime-status_yara.log"
],
"exclude_files": [],
"ignore_older": "72h",
"data_stream.dataset": "soc",
"tags": [
"so-soc"
],
"processors": "- decode_json_fields:\n fields: [\"message\"]\n target: \"soc\"\n process_array: true\n max_depth: 2\n add_error_key: true \n- add_fields:\n target: event\n fields:\n category: host\n module: soc\n dataset_temp: detections\n- rename:\n fields:\n - from: \"soc.fields.sourceIp\"\n to: \"source.ip\"\n - from: \"soc.fields.status\"\n to: \"http.response.status_code\"\n - from: \"soc.fields.method\"\n to: \"http.request.method\"\n - from: \"soc.fields.path\"\n to: \"url.path\"\n - from: \"soc.message\"\n to: \"event.action\"\n - from: \"soc.level\"\n to: \"log.level\"\n ignore_missing: true",
"custom": "pipeline: common"
}
}
}
}
},
"force": true
}
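
The `soc-detections-logs` policy processes each line in three steps:

1. It decodes the JSON in `message` into `soc`.
2. It adds `event.category`, `event.module`, and `event.dataset_temp`.
3. It renames six fields, skipping any that are absent (`ignore_missing: true`).

Below is a rough Python approximation of those steps, working on flat dot-separated keys. It ignores `max_depth`, `process_array`, and `add_error_key`. The helper names and the sample log line in the usage comment are invented for illustration.

```python
import json

# (source, target) pairs copied from the policy's rename processor.
RENAMES = [
    ("soc.fields.sourceIp", "source.ip"),
    ("soc.fields.status", "http.response.status_code"),
    ("soc.fields.method", "http.request.method"),
    ("soc.fields.path", "url.path"),
    ("soc.message", "event.action"),
    ("soc.level", "log.level"),
]


def flatten(obj, prefix):
    """Turn nested dicts into dot-separated keys under prefix."""
    flat = {}
    for key, value in obj.items():
        path = f"{prefix}.{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def process_line(message):
    """Approximate decode_json_fields -> add_fields -> rename for one log line."""
    event = flatten(json.loads(message), "soc")  # decode_json_fields, target "soc"
    event.update({
        "event.category": "host",
        "event.module": "soc",
        "event.dataset_temp": "detections",
    })
    for source, target in RENAMES:
        if source in event:  # ignore_missing: true
            event[target] = event.pop(source)
    return event


# Hypothetical usage:
# process_line('{"message": "rule updated", "level": "info"}')
```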

@@ -79,29 +79,3 @@ elasticfleet:
helpLink: elastic-fleet.html
advanced: True
forcedType: int
kismet:
base_url:
description: Base URL for Kismet.
global: True
helpLink: elastic-fleet.html
advanced: True
forcedType: string
poll_interval:
description: Poll interval for wireless device data from Kismet. Integration is currently configured to return devices seen as active by any Kismet sensor within the last 10 minutes.
global: True
helpLink: elastic-fleet.html
advanced: True
forcedType: string
api_key:
description: API key for Kismet.
global: True
helpLink: elastic-fleet.html
advanced: True
forcedType: string
sensitive: True
enabled_nodes:
description: Fleet nodes with the Kismet integration enabled. Enter one per line.
global: True
helpLink: elastic-fleet.html
advanced: True
forcedType: "[]string"

@@ -19,7 +19,7 @@ NUM_RUNNING=$(pgrep -cf "/bin/bash /sbin/so-elastic-agent-gen-installers")

for i in {1..30}
do
ENROLLMENTOKEN=$(curl -K /opt/so/conf/elasticsearch/curl.config -L "localhost:5601/api/fleet/enrollment_api_keys?perPage=100" -H 'kbn-xsrf: true' -H 'Content-Type: application/json' | jq .list | jq -r -c '.[] | select(.policy_id | contains("endpoints-initial")) | .api_key')
ENROLLMENTOKEN=$(curl -K /opt/so/conf/elasticsearch/curl.config -L "localhost:5601/api/fleet/enrollment_api_keys" -H 'kbn-xsrf: true' -H 'Content-Type: application/json' | jq .list | jq -r -c '.[] | select(.policy_id | contains("endpoints-initial")) | .api_key')
FLEETHOST=$(curl -K /opt/so/conf/elasticsearch/curl.config 'http://localhost:5601/api/fleet/fleet_server_hosts/grid-default' | jq -r '.item.host_urls[]' | paste -sd ',')
if [[ $FLEETHOST ]] && [[ $ENROLLMENTOKEN ]]; then break; else sleep 10; fi
done
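
The loop above polls Fleet up to 30 times, 10 seconds apart, for an enrollment API key whose `policy_id` contains `endpoints-initial`. One version of the request adds `perPage=100`. Fleet's list endpoints are paginated, so without a larger page size a matching key beyond the first page (20 items by default, as we understand the Kibana Fleet API) would be missed. The sketch below covers only the key selection and polling, not the Fleet host lookup. `find_enrollment_key` and `wait_for_enrollment_key` are hypothetical names. Note that the jq filter prints every matching key, while this sketch returns only the first.

```python
import time


def find_enrollment_key(response, policy_fragment="endpoints-initial"):
    """Return the first api_key whose policy_id contains policy_fragment, or None."""
    for item in response.get("list", []):
        if policy_fragment in item.get("policy_id", ""):
            return item.get("api_key")
    return None


def wait_for_enrollment_key(fetch, attempts=30, delay=10, sleep=time.sleep):
    """Call fetch() up to `attempts` times, sleeping `delay` seconds after each miss."""
    for _ in range(attempts):
        key = find_enrollment_key(fetch())
        if key:
            return key
        sleep(delay)
    return None
```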

@@ -72,5 +72,5 @@ do
printf "\n### $GOOS/$GOARCH Installer Generated...\n"
done

printf "\n### Cleaning up temp files in /nsm/elastic-agent-workspace\n"
printf "\n### Cleaning up temp files in /nsm/elastic-agent-workspace"
rm -rf /nsm/elastic-agent-workspace

@@ -21,104 +21,64 @@ function update_logstash_outputs() {
# Update Logstash Outputs
curl -K /opt/so/conf/elasticsearch/curl.config -L -X PUT "localhost:5601/api/fleet/outputs/so-manager_logstash" -H 'kbn-xsrf: true' -H 'Content-Type: application/json' -d "$JSON_STRING" | jq
}
function update_kafka_outputs() {
# Make sure SSL configuration is included in policy updates for Kafka output. SSL is configured in so-elastic-fleet-setup
SSL_CONFIG=$(curl -K /opt/so/conf/elasticsearch/curl.config -L "http://localhost:5601/api/fleet/outputs/so-manager_kafka" | jq -r '.item.ssl')

JSON_STRING=$(jq -n \
--arg UPDATEDLIST "$NEW_LIST_JSON" \
--argjson SSL_CONFIG "$SSL_CONFIG" \
'{"name": "grid-kafka","type": "kafka","hosts": $UPDATEDLIST,"is_default": true,"is_default_monitoring": true,"config_yaml": "","ssl": $SSL_CONFIG}')
# Update Kafka outputs
curl -K /opt/so/conf/elasticsearch/curl.config -L -X PUT "localhost:5601/api/fleet/outputs/so-manager_kafka" -H 'kbn-xsrf: true' -H 'Content-Type: application/json' -d "$JSON_STRING" | jq
}
# Get current list of Logstash Outputs
RAW_JSON=$(curl -K /opt/so/conf/elasticsearch/curl.config 'http://localhost:5601/api/fleet/outputs/so-manager_logstash')

{% if GLOBALS.pipeline == "KAFKA" %}
# Get current list of Kafka Outputs
RAW_JSON=$(curl -K /opt/so/conf/elasticsearch/curl.config 'http://localhost:5601/api/fleet/outputs/so-manager_kafka')
# Check to make sure that the server responded with good data - else, bail from script
CHECKSUM=$(jq -r '.item.id' <<< "$RAW_JSON")
if [ "$CHECKSUM" != "so-manager_logstash" ]; then
printf "Failed to query for current Logstash Outputs..."
exit 1
fi

# Check to make sure that the server responded with good data - else, bail from script
CHECKSUM=$(jq -r '.item.id' <<< "$RAW_JSON")
if [ "$CHECKSUM" != "so-manager_kafka" ]; then
printf "Failed to query for current Kafka Outputs..."
exit 1
fi
# Get the current list of Logstash outputs & hash them
CURRENT_LIST=$(jq -c -r '.item.hosts' <<< "$RAW_JSON")
CURRENT_HASH=$(sha1sum <<< "$CURRENT_LIST" | awk '{print $1}')

# Get the current list of kafka outputs & hash them
CURRENT_LIST=$(jq -c -r '.item.hosts' <<< "$RAW_JSON")
CURRENT_HASH=$(sha1sum <<< "$CURRENT_LIST" | awk '{print $1}')

declare -a NEW_LIST=()

# Query for the current Grid Nodes that are running kafka
KAFKANODES=$(salt-call --out=json pillar.get kafka:nodes | jq '.local')

# Query for Kafka nodes with Broker role and add hostname to list
while IFS= read -r line; do
NEW_LIST+=("$line")
done < <(jq -r 'to_entries | .[] | select(.value.role | contains("broker")) | .key + ":9092"' <<< $KAFKANODES)

{# If global pipeline isn't set to KAFKA then assume default of REDIS / logstash #}
{% else %}
# Get current list of Logstash Outputs
RAW_JSON=$(curl -K /opt/so/conf/elasticsearch/curl.config 'http://localhost:5601/api/fleet/outputs/so-manager_logstash')

# Check to make sure that the server responded with good data - else, bail from script
CHECKSUM=$(jq -r '.item.id' <<< "$RAW_JSON")
if [ "$CHECKSUM" != "so-manager_logstash" ]; then
printf "Failed to query for current Logstash Outputs..."
exit 1
fi

# Get the current list of Logstash outputs & hash them
CURRENT_LIST=$(jq -c -r '.item.hosts' <<< "$RAW_JSON")
CURRENT_HASH=$(sha1sum <<< "$CURRENT_LIST" | awk '{print $1}')

declare -a NEW_LIST=()

{# If we select to not send to manager via SOC, then omit the code that adds manager to NEW_LIST #}
{% if ELASTICFLEETMERGED.enable_manager_output %}
# Create array & add initial elements
if [ "{{ GLOBALS.hostname }}" = "{{ GLOBALS.url_base }}" ]; then
NEW_LIST+=("{{ GLOBALS.url_base }}:5055")
else
NEW_LIST+=("{{ GLOBALS.url_base }}:5055" "{{ GLOBALS.hostname }}:5055")
fi
{% endif %}

# Query for FQDN entries & add them to the list
{% if ELASTICFLEETMERGED.config.server.custom_fqdn | length > 0 %}
CUSTOMFQDNLIST=('{{ ELASTICFLEETMERGED.config.server.custom_fqdn | join(' ') }}')
readarray -t -d ' ' CUSTOMFQDN < <(printf '%s' "$CUSTOMFQDNLIST")
for CUSTOMNAME in "${CUSTOMFQDN[@]}"
do
NEW_LIST+=("$CUSTOMNAME:5055")
done
{% endif %}

# Query for the current Grid Nodes that are running Logstash
LOGSTASHNODES=$(salt-call --out=json pillar.get logstash:nodes | jq '.local')