@@ -1,18 +1,18 @@

|

||||

### 2.4.2-20230531 ISO image built on 2023/05/31

|

||||

### 2.4.3-20230711 ISO image built on 2023/07/11

|

||||

|

||||

|

||||

|

||||

### Download and Verify

|

||||

|

||||

2.4.2-20230531 ISO image:

|

||||

https://download.securityonion.net/file/securityonion/securityonion-2.4.2-20230531.iso

|

||||

2.4.3-20230711 ISO image:

|

||||

https://download.securityonion.net/file/securityonion/securityonion-2.4.3-20230711.iso

|

||||

|

||||

MD5: EB861EFB7F7DA6FB418075B4C452E4EB

|

||||

SHA1: 479A72DBB0633CB23608122F7200A24E2C3C3128

|

||||

SHA256: B69C1AE4C576BBBC37F4B87C2A8379903421E65B2C4F24C90FABB0EAD6F0471B

|

||||

MD5: F481ED39E02A5AF05EB50D319D97A6C7

|

||||

SHA1: 20F9BAA8F73A44C21A8DFE81F36247BCF33CEDA6

|

||||

SHA256: D805522E02CD4941641385F6FF86FAAC240DA6C5FD98F78460348632C7C631B0

|

||||

|

||||

Signature for ISO image:

|

||||

https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.2-20230531.iso.sig

|

||||

https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.3-20230711.iso.sig

|

||||

|

||||

Signing key:

|

||||

https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.4/main/KEYS

|

||||
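The checksums listed above can also be checked locally before the signature step. The following is a minimal sketch, not part of the Security Onion tooling: `verify_sha256` is a hypothetical helper name, and it assumes `sha256sum`, `awk`, and `tr` are available on the download host.

```shell
#!/usr/bin/env bash
# Hypothetical helper (an assumption, not shipped with Security Onion):
# compare a file's SHA256 digest with a published value, ignoring letter case.
verify_sha256() {
    file=$1
    expected=$(printf '%s' "$2" | tr '[:upper:]' '[:lower:]')
    # sha256sum prints lowercase hex followed by the file name.
    actual=$(sha256sum "$file" | awk '{ print $1 }')
    if [ -n "$actual" ] && [ "$actual" = "$expected" ]; then
        echo "OK: $file"
        return 0
    fi
    echo "MISMATCH: $file" >&2
    return 1
}

# Example call with the 2.4.3-20230711 SHA256 value listed above:
# verify_sha256 securityonion-2.4.3-20230711.iso \
#     D805522E02CD4941641385F6FF86FAAC240DA6C5FD98F78460348632C7C631B0
```

A matching checksum only shows the download is intact; the `gpg --verify` step remains the check that the image was signed by the listed key.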

@@ -26,22 +26,22 @@ wget https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.

|

||||

|

||||

Download the signature file for the ISO:

|

||||

```

|

||||

wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.2-20230531.iso.sig

|

||||

wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.3-20230711.iso.sig

|

||||

```

|

||||

|

||||

Download the ISO image:

|

||||

```

|

||||

wget https://download.securityonion.net/file/securityonion/securityonion-2.4.2-20230531.iso

|

||||

wget https://download.securityonion.net/file/securityonion/securityonion-2.4.3-20230711.iso

|

||||

```

|

||||

|

||||

Verify the downloaded ISO image using the signature file:

|

||||

```

|

||||

gpg --verify securityonion-2.4.2-20230531.iso.sig securityonion-2.4.2-20230531.iso

|

||||

gpg --verify securityonion-2.4.3-20230711.iso.sig securityonion-2.4.3-20230711.iso

|

||||

```

|

||||

|

||||

The output should show "Good signature" and the Primary key fingerprint should match what's shown below:

|

||||

```

|

||||

gpg: Signature made Wed 31 May 2023 05:01:41 PM EDT using RSA key ID FE507013

|

||||

gpg: Signature made Tue 11 Jul 2023 06:23:37 PM EDT using RSA key ID FE507013

|

||||

gpg: Good signature from "Security Onion Solutions, LLC <info@securityonionsolutions.com>"

|

||||

gpg: WARNING: This key is not certified with a trusted signature!

|

||||

gpg: There is no indication that the signature belongs to the owner.

|

||||

@@ -49,4 +49,4 @@ Primary key fingerprint: C804 A93D 36BE 0C73 3EA1 9644 7C10 60B7 FE50 7013

|

||||

```

|

||||

|

||||

Once you've verified the ISO image, you're ready to proceed to our Installation guide:

|

||||

https://docs.securityonion.net/en/2.4/installation.html

|

||||

https://docs.securityonion.net/en/2.4/installation.html

|

||||

20

README.md

@@ -1,20 +1,26 @@

|

||||

## Security Onion 2.4 Beta 3

|

||||

## Security Onion 2.4 Beta 4

|

||||

|

||||

Security Onion 2.4 Beta 3 is here!

|

||||

Security Onion 2.4 Beta 4 is here!

|

||||

|

||||

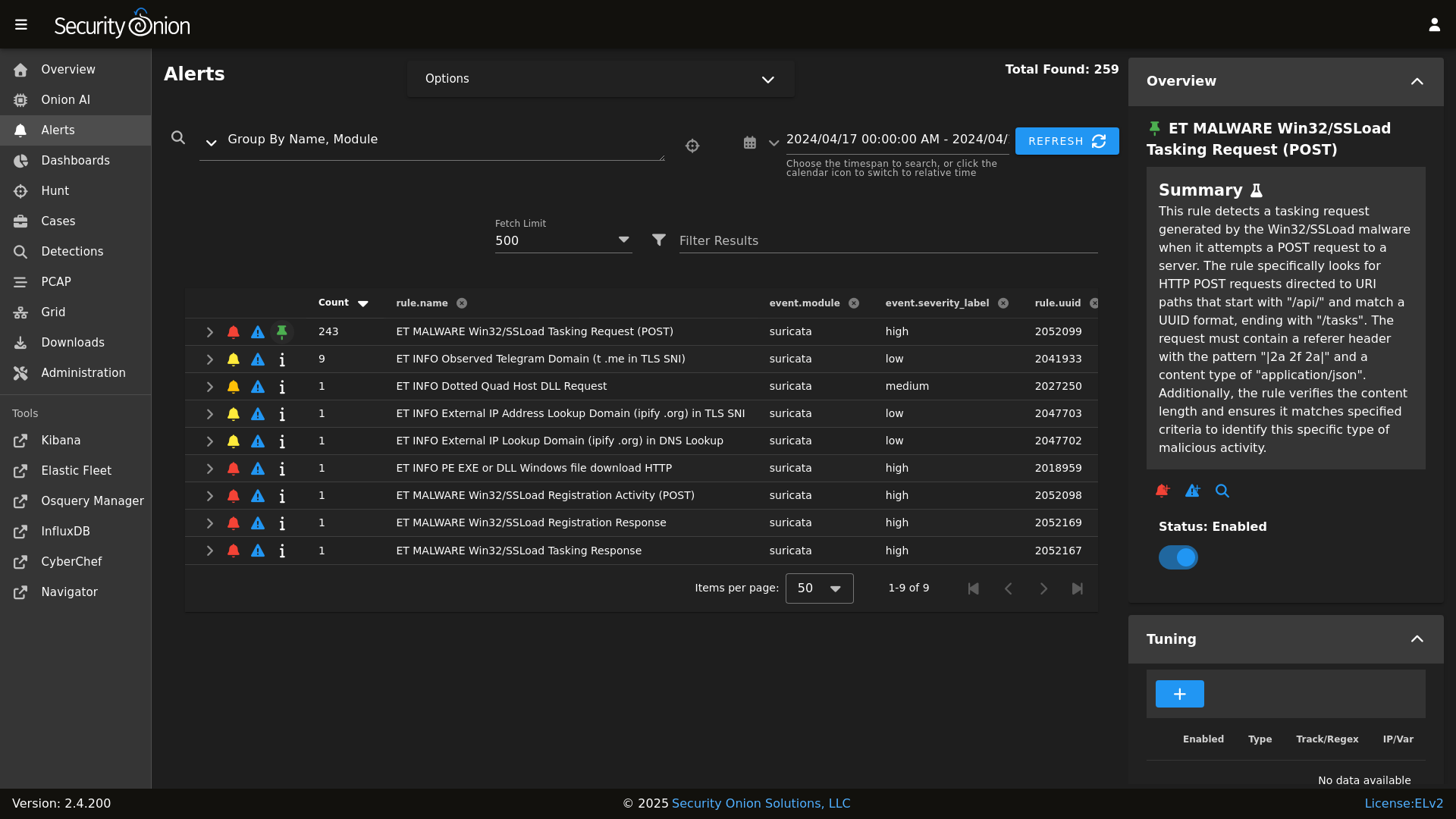

## Screenshots

|

||||

|

||||

Alerts

|

||||

|

||||

|

||||

|

||||

Dashboards

|

||||

|

||||

|

||||

|

||||

Hunt

|

||||

|

||||

|

||||

|

||||

Cases

|

||||

|

||||

PCAP

|

||||

|

||||

|

||||

Grid

|

||||

|

||||

|

||||

Config

|

||||

|

||||

|

||||

### Release Notes

|

||||

|

||||

|

||||

@@ -1,13 +0,0 @@

|

||||

logrotate:

|

||||

conf: |

|

||||

daily

|

||||

rotate 14

|

||||

missingok

|

||||

copytruncate

|

||||

compress

|

||||

create

|

||||

extension .log

|

||||

dateext

|

||||

dateyesterday

|

||||

group_conf: |

|

||||

su root socore

|

||||

@@ -2,7 +2,7 @@

|

||||

{% set cached_grains = salt.saltutil.runner('cache.grains', tgt='*') %}

|

||||

{% for minionid, ip in salt.saltutil.runner(

|

||||

'mine.get',

|

||||

tgt='G@role:so-manager or G@role:so-managersearch or G@role:so-standalone or G@role:so-searchnode or G@role:so-heavynode or G@role:so-receiver or G@role:so-helix ',

|

||||

tgt='G@role:so-manager or G@role:so-managersearch or G@role:so-standalone or G@role:so-searchnode or G@role:so-heavynode or G@role:so-receiver or G@role:so-fleet ',

|

||||

fun='network.ip_addrs',

|

||||

tgt_type='compound') | dictsort()

|

||||

%}

|

||||

|

||||

14

pillar/soc/license.sls

Normal file

@@ -0,0 +1,14 @@

|

||||

# Copyright Jason Ertel (github.com/jertel).

|

||||

# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one

|

||||

# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at

|

||||

# https://securityonion.net/license; you may not use this file except in compliance with

|

||||

# the Elastic License 2.0.

|

||||

|

||||

# Note: Per the Elastic License 2.0, the second limitation states:

|

||||

#

|

||||

# "You may not move, change, disable, or circumvent the license key functionality

|

||||

# in the software, and you may not remove or obscure any functionality in the

|

||||

# software that is protected by the license key."

|

||||

|

||||

# This file is generated by Security Onion and contains a list of license-enabled features.

|

||||

features: []

|

||||

@@ -40,6 +40,7 @@ base:

|

||||

- logstash.adv_logstash

|

||||

- soc.soc_soc

|

||||

- soc.adv_soc

|

||||

- soc.license

|

||||

- soctopus.soc_soctopus

|

||||

- soctopus.adv_soctopus

|

||||

- kibana.soc_kibana

|

||||

@@ -103,6 +104,7 @@ base:

|

||||

- idstools.adv_idstools

|

||||

- soc.soc_soc

|

||||

- soc.adv_soc

|

||||

- soc.license

|

||||

- soctopus.soc_soctopus

|

||||

- soctopus.adv_soctopus

|

||||

- kibana.soc_kibana

|

||||

@@ -161,6 +163,7 @@ base:

|

||||

- manager.adv_manager

|

||||

- soc.soc_soc

|

||||

- soc.adv_soc

|

||||

- soc.license

|

||||

- soctopus.soc_soctopus

|

||||

- soctopus.adv_soctopus

|

||||

- kibana.soc_kibana

|

||||

@@ -258,6 +261,7 @@ base:

|

||||

- manager.adv_manager

|

||||

- soc.soc_soc

|

||||

- soc.adv_soc

|

||||

- soc.license

|

||||

- soctopus.soc_soctopus

|

||||

- soctopus.adv_soctopus

|

||||

- kibana.soc_kibana

|

||||

|

||||

@@ -46,23 +46,7 @@

|

||||

'pcap',

|

||||

'suricata',

|

||||

'healthcheck',

|

||||

'schedule',

|

||||

'tcpreplay',

|

||||

'docker_clean'

|

||||

],

|

||||

'so-helixsensor': [

|

||||

'salt.master',

|

||||

'ca',

|

||||

'ssl',

|

||||

'registry',

|

||||

'telegraf',

|

||||

'firewall',

|

||||

'idstools',

|

||||

'suricata.manager',

|

||||

'zeek',

|

||||

'redis',

|

||||

'elasticsearch',

|

||||

'logstash',

|

||||

'elasticagent',

|

||||

'schedule',

|

||||

'tcpreplay',

|

||||

'docker_clean'

|

||||

@@ -203,7 +187,7 @@

|

||||

'schedule',

|

||||

'docker_clean'

|

||||

],

|

||||

'so-workstation': [

|

||||

'so-desktop': [

|

||||

],

|

||||

}, grain='role') %}

|

||||

|

||||

@@ -244,7 +228,7 @@

|

||||

{% do allowed_states.append('playbook') %}

|

||||

{% endif %}

|

||||

|

||||

{% if grains.role in ['so-helixsensor', 'so-manager', 'so-standalone', 'so-searchnode', 'so-managersearch', 'so-heavynode', 'so-receiver'] %}

|

||||

{% if grains.role in ['so-manager', 'so-standalone', 'so-searchnode', 'so-managersearch', 'so-heavynode', 'so-receiver'] %}

|

||||

{% do allowed_states.append('logstash') %}

|

||||

{% endif %}

|

||||

|

||||

|

||||

@@ -20,7 +20,6 @@ pki_private_key:

|

||||

- name: /etc/pki/ca.key

|

||||

- keysize: 4096

|

||||

- passphrase:

|

||||

- cipher: aes_256_cbc

|

||||

- backup: True

|

||||

{% if salt['file.file_exists']('/etc/pki/ca.key') -%}

|

||||

- prereq:

|

||||

|

||||

@@ -1,2 +0,0 @@

|

||||

#!/bin/bash

|

||||

/usr/sbin/logrotate -f /opt/so/conf/log-rotate.conf > /dev/null 2>&1

|

||||

@@ -1,2 +0,0 @@

|

||||

#!/bin/bash

|

||||

/usr/sbin/logrotate -f /opt/so/conf/sensor-rotate.conf > /dev/null 2>&1

|

||||

@@ -1,79 +0,0 @@

|

||||

The following GUI tools are available on the analyst workstation:

|

||||

|

||||

chromium

|

||||

url: https://www.chromium.org/Home

|

||||

To run chromium, click Applications > Internet > Chromium Web Browser

|

||||

|

||||

Wireshark

|

||||

url: https://www.wireshark.org/

|

||||

To run Wireshark, click Applications > Internet > Wireshark Network Analyzer

|

||||

|

||||

NetworkMiner

|

||||

url: https://www.netresec.com

|

||||

To run NetworkMiner, click Applications > Internet > NetworkMiner

|

||||

|

||||

The following CLI tools are available on the analyst workstation:

|

||||

|

||||

bit-twist

|

||||

url: http://bittwist.sourceforge.net

|

||||

To run bit-twist, open a terminal and type: bittwist -h

|

||||

|

||||

chaosreader

|

||||

url: http://chaosreader.sourceforge.net

|

||||

To run chaosreader, open a terminal and type: chaosreader -h

|

||||

|

||||

dsniff

|

||||

url: https://www.monkey.org/~dugsong/dsniff/

|

||||

To run dsniff, open a terminal and type: dsniff -h

|

||||

|

||||

foremost

|

||||

url: http://foremost.sourceforge.net

|

||||

To run foremost, open a terminal and type: foremost -h

|

||||

|

||||

hping3

|

||||

url: http://www.hping.org/hping3.html

|

||||

To run hping3, open a terminal and type: hping3 -h

|

||||

|

||||

netsed

|

||||

url: http://silicone.homelinux.org/projects/netsed/

|

||||

To run netsed, open a terminal and type: netsed -h

|

||||

|

||||

ngrep

|

||||

url: https://github.com/jpr5/ngrep

|

||||

To run ngrep, open a terminal and type: ngrep -h

|

||||

|

||||

scapy

|

||||

url: http://www.secdev.org/projects/scapy/

|

||||

To run scapy, open a terminal and type: scapy

|

||||

|

||||

ssldump

|

||||

url: http://www.rtfm.com/ssldump/

|

||||

To run ssldump, open a terminal and type: ssldump -h

|

||||

|

||||

sslsplit

|

||||

url: https://github.com/droe/sslsplit

|

||||

To run sslsplit, open a terminal and type: sslsplit -h

|

||||

|

||||

tcpdump

|

||||

url: http://www.tcpdump.org

|

||||

To run tcpdump, open a terminal and type: tcpdump -h

|

||||

|

||||

tcpflow

|

||||

url: https://github.com/simsong/tcpflow

|

||||

To run tcpflow, open a terminal and type: tcpflow -h

|

||||

|

||||

tcpstat

|

||||

url: https://frenchfries.net/paul/tcpstat/

|

||||

To run tcpstat, open a terminal and type: tcpstat -h

|

||||

|

||||

tcptrace

|

||||

url: http://www.tcptrace.org

|

||||

To run tcptrace, open a terminal and type: tcptrace -h

|

||||

|

||||

tcpxtract

|

||||

url: http://tcpxtract.sourceforge.net/

|

||||

To run tcpxtract, open a terminal and type: tcpxtract -h

|

||||

|

||||

whois

|

||||

url: http://www.linux.it/~md/software/

|

||||

To run whois, open a terminal and type: whois -h

|

||||

@@ -1,37 +0,0 @@

|

||||

{%- set logrotate_conf = salt['pillar.get']('logrotate:conf') %}

|

||||

{%- set group_conf = salt['pillar.get']('logrotate:group_conf') %}

|

||||

|

||||

|

||||

/opt/so/log/aptcacher-ng/*.log

|

||||

/opt/so/log/idstools/*.log

|

||||

/opt/so/log/nginx/*.log

|

||||

/opt/so/log/soc/*.log

|

||||

/opt/so/log/kratos/*.log

|

||||

/opt/so/log/kibana/*.log

|

||||

/opt/so/log/influxdb/*.log

|

||||

/opt/so/log/elastalert/*.log

|

||||

/opt/so/log/soctopus/*.log

|

||||

/opt/so/log/curator/*.log

|

||||

/opt/so/log/fleet/*.log

|

||||

/opt/so/log/suricata/*.log

|

||||

/opt/so/log/mysql/*.log

|

||||

/opt/so/log/telegraf/*.log

|

||||

/opt/so/log/redis/*.log

|

||||

/opt/so/log/sensoroni/*.log

|

||||

/opt/so/log/stenographer/*.log

|

||||

/opt/so/log/salt/so-salt-minion-check

|

||||

/opt/so/log/salt/minion

|

||||

/opt/so/log/salt/master

|

||||

/opt/so/log/logscan/*.log

|

||||

/nsm/idh/*.log

|

||||

{

|

||||

{{ logrotate_conf | indent(width=4) }}

|

||||

}

|

||||

|

||||

# Playbook's log directory needs additional configuration

|

||||

# because Playbook requires a more permissive directory

|

||||

/opt/so/log/playbook/*.log

|

||||

{

|

||||

{{ logrotate_conf | indent(width=4) }}

|

||||

{{ group_conf | indent(width=4) }}

|

||||

}

|

||||

@@ -1,22 +0,0 @@

|

||||

/opt/so/log/sensor_clean.log

|

||||

{

|

||||

daily

|

||||

rotate 2

|

||||

missingok

|

||||

nocompress

|

||||

create

|

||||

sharedscripts

|

||||

}

|

||||

|

||||

/nsm/strelka/log/strelka.log

|

||||

{

|

||||

daily

|

||||

rotate 14

|

||||

missingok

|

||||

copytruncate

|

||||

compress

|

||||

create

|

||||

extension .log

|

||||

dateext

|

||||

dateyesterday

|

||||

}

|

||||

@@ -10,6 +10,10 @@ include:

|

||||

- manager.elasticsearch # needed for elastic_curl_config state

|

||||

{% endif %}

|

||||

|

||||

net.core.wmem_default:

|

||||

sysctl.present:

|

||||

- value: 26214400

|

||||

|

||||

# Remove variables.txt from /tmp - This is temp

|

||||

rmvariablesfile:

|

||||

file.absent:

|

||||

@@ -147,56 +151,8 @@ so-sensor-clean:

|

||||

- daymonth: '*'

|

||||

- month: '*'

|

||||

- dayweek: '*'

|

||||

|

||||

sensorrotatescript:

|

||||

file.managed:

|

||||

- name: /usr/local/bin/sensor-rotate

|

||||

- source: salt://common/cron/sensor-rotate

|

||||

- mode: 755

|

||||

|

||||

sensorrotateconf:

|

||||

file.managed:

|

||||

- name: /opt/so/conf/sensor-rotate.conf

|

||||

- source: salt://common/files/sensor-rotate.conf

|

||||

- mode: 644

|

||||

|

||||

sensor-rotate:

|

||||

cron.present:

|

||||

- name: /usr/local/bin/sensor-rotate

|

||||

- identifier: sensor-rotate

|

||||

- user: root

|

||||

- minute: '1'

|

||||

- hour: '0'

|

||||

- daymonth: '*'

|

||||

- month: '*'

|

||||

- dayweek: '*'

|

||||

|

||||

{% endif %}

|

||||

|

||||

commonlogrotatescript:

|

||||

file.managed:

|

||||

- name: /usr/local/bin/common-rotate

|

||||

- source: salt://common/cron/common-rotate

|

||||

- mode: 755

|

||||

|

||||

commonlogrotateconf:

|

||||

file.managed:

|

||||

- name: /opt/so/conf/log-rotate.conf

|

||||

- source: salt://common/files/log-rotate.conf

|

||||

- template: jinja

|

||||

- mode: 644

|

||||

|

||||

common-rotate:

|

||||

cron.present:

|

||||

- name: /usr/local/bin/common-rotate

|

||||

- identifier: common-rotate

|

||||

- user: root

|

||||

- minute: '1'

|

||||

- hour: '0'

|

||||

- daymonth: '*'

|

||||

- month: '*'

|

||||

- dayweek: '*'

|

||||

|

||||

# Create the status directory

|

||||

sostatusdir:

|

||||

file.directory:

|

||||

|

||||

@@ -8,6 +8,15 @@ soup_scripts:

|

||||

- source: salt://common/tools/sbin

|

||||

- include_pat:

|

||||

- so-common

|

||||

- so-firewall

|

||||

- so-image-common

|

||||

- soup

|

||||

|

||||

soup_manager_scripts:

|

||||

file.recurse:

|

||||

- name: /usr/sbin

|

||||

- user: root

|

||||

- group: root

|

||||

- file_mode: 755

|

||||

- source: salt://manager/tools/sbin

|

||||

- include_pat:

|

||||

- so-firewall

|

||||

- soup

|

||||

@@ -5,6 +5,7 @@

|

||||

# https://securityonion.net/license; you may not use this file except in compliance with the

|

||||

# Elastic License 2.0.

|

||||

|

||||

ELASTIC_AGENT_TARBALL_VERSION="8.7.1"

|

||||

DEFAULT_SALT_DIR=/opt/so/saltstack/default

|

||||

DOC_BASE_URL="https://docs.securityonion.net/en/2.4"

|

||||

|

||||

@@ -242,7 +243,7 @@ is_manager_node() {

|

||||

is_sensor_node() {

|

||||

# Check to see if this is a sensor (forward) node

|

||||

is_single_node_grid && return 0

|

||||

grep "role: so-" /etc/salt/grains | grep -E "sensor|heavynode|helix" &> /dev/null

|

||||

grep "role: so-" /etc/salt/grains | grep -E "sensor|heavynode" &> /dev/null

|

||||

}

|

||||

|

||||

is_single_node_grid() {

|

||||

@@ -300,6 +301,17 @@ lookup_role() {

|

||||

echo ${pieces[1]}

|

||||

}

|

||||

|

||||

is_feature_enabled() {

|

||||

feature=$1

|

||||

enabled=$(lookup_salt_value features)

|

||||

for cur in $enabled; do

|

||||

if [[ "$feature" == "$cur" ]]; then

|

||||

return 0

|

||||

fi

|

||||

done

|

||||

return 1

|

||||

}

|

||||

|

||||

require_manager() {

|

||||

if is_manager_node; then

|

||||

echo "This is a manager, so we can proceed."

|

||||

|

||||
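The `is_feature_enabled` helper added in this hunk can be exercised as sketched below. This is an illustration under stated assumptions: `lookup_salt_value` is replaced by a stub because its definition is not part of this diff, the stub assumes the real function prints feature names separated by whitespace, and `feature_a` / `feature_b` are made-up feature names.

```shell
#!/usr/bin/env bash
# Stub standing in for so-common's lookup_salt_value (not shown in this diff).
# Assumption: the real function prints enabled features separated by whitespace.
lookup_salt_value() {
    echo "feature_a feature_b"   # hypothetical feature names
}

# Same logic as the is_feature_enabled function added in the hunk above.
is_feature_enabled() {
    feature=$1
    enabled=$(lookup_salt_value features)
    for cur in $enabled; do
        if [[ "$feature" == "$cur" ]]; then
            return 0
        fi
    done
    return 1
}

# Example caller: branch on whether a license-enabled feature is present.
if is_feature_enabled "feature_a"; then
    echo "feature_a is enabled"
else
    echo "feature_a is not enabled"
fi
```

With the default `features: []` from `pillar/soc/license.sls`, the loop body would never run and the function would return 1 for every feature name.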

@@ -6,17 +6,17 @@

|

||||

# Elastic License 2.0.

|

||||

|

||||

|

||||

{# we only want the script to install the workstation if it is Rocky -#}

|

||||

{# we only want the script to install the desktop if it is Rocky -#}

|

||||

{% if grains.os == 'Rocky' -%}

|

||||

{# if this is a manager -#}

|

||||

{% if grains.master == grains.id.split('_')|first -%}

|

||||

|

||||

source /usr/sbin/so-common

|

||||

doc_workstation_url="$DOC_BASE_URL/analyst-vm.html"

|

||||

doc_desktop_url="$DOC_BASE_URL/desktop.html"

|

||||

pillar_file="/opt/so/saltstack/local/pillar/minions/{{grains.id}}.sls"

|

||||

|

||||

if [ -f "$pillar_file" ]; then

|

||||

if ! grep -q "^workstation:$" "$pillar_file"; then

|

||||

if ! grep -q "^desktop:$" "$pillar_file"; then

|

||||

|

||||

FIRSTPASS=yes

|

||||

while [[ $INSTALL != "yes" ]] && [[ $INSTALL != "no" ]]; do

|

||||

@@ -26,7 +26,7 @@ if [ -f "$pillar_file" ]; then

|

||||

echo "## _______________________________ ##"

|

||||

echo "## ##"

|

||||

echo "## Installing the Security Onion ##"

|

||||

echo "## analyst node on this device will ##"

|

||||

echo "## Desktop on this device will ##"

|

||||

echo "## make permanent changes to ##"

|

||||

echo "## the system. ##"

|

||||

echo "## A system reboot will be required ##"

|

||||

@@ -42,40 +42,40 @@ if [ -f "$pillar_file" ]; then

|

||||

done

|

||||

|

||||

if [[ $INSTALL == "no" ]]; then

|

||||

echo "Exiting analyst node installation."

|

||||

echo "Exiting desktop node installation."

|

||||

exit 0

|

||||

fi

|

||||

|

||||

# Add workstation pillar to the minion's pillar file

|

||||

# Add desktop pillar to the minion's pillar file

|

||||

printf '%s\n'\

|

||||

"workstation:"\

|

||||

"desktop:"\

|

||||

" gui:"\

|

||||

" enabled: true"\

|

||||

"" >> "$pillar_file"

|

||||

echo "Applying the workstation state. This could take some time since there are many packages that need to be installed."

|

||||

if salt-call state.apply workstation -linfo queue=True; then # make sure the state ran successfully

|

||||

echo "Applying the desktop state. This could take some time since there are many packages that need to be installed."

|

||||

if salt-call state.apply desktop -linfo queue=True; then # make sure the state ran successfully

|

||||

echo ""

|

||||

echo "Analyst workstation has been installed!"

|

||||

echo "Security Onion Desktop has been installed!"

|

||||

echo "Press ENTER to reboot or Ctrl-C to cancel."

|

||||

read pause

|

||||

|

||||

reboot;

|

||||

else

|

||||

echo "There was an issue applying the workstation state. Please review the log above or at /opt/so/log/salt/minion."

|

||||

echo "There was an issue applying the desktop state. Please review the log above or at /opt/so/log/salt/minion."

|

||||

fi

|

||||

else # workstation is already added

|

||||

echo "The workstation pillar already exists in $pillar_file."

|

||||

echo "To enable/disable the gui, set 'workstation:gui:enabled' to true or false in $pillar_file."

|

||||

echo "Additional documentation can be found at $doc_workstation_url."

|

||||

else # desktop is already added

|

||||

echo "The desktop pillar already exists in $pillar_file."

|

||||

echo "To enable/disable the gui, set 'desktop:gui:enabled' to true or false in $pillar_file."

|

||||

echo "Additional documentation can be found at $doc_desktop_url."

|

||||

fi

|

||||

else # if the pillar file doesn't exist

|

||||

echo "Could not find $pillar_file and add the workstation pillar."

|

||||

echo "Could not find $pillar_file and add the desktop pillar."

|

||||

fi

|

||||

|

||||

{#- if this is not a manager #}

|

||||

{% else -%}

|

||||

|

||||

echo "Since this is not a manager, the pillar values to enable analyst workstation must be set manually. Please view the documentation at $doc_workstation_url."

|

||||

echo "Since this is not a manager, the pillar values to enable Security Onion Desktop must be set manually. Please view the documentation at $doc_desktop_url."

|

||||

|

||||

{#- endif if this is a manager #}

|

||||

{% endif -%}

|

||||
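The guard-then-append pattern used by the installer above (check for a top-level `desktop:` key, then append the pillar block) can be sketched in isolation as follows. `add_desktop_pillar` is a hypothetical wrapper name, and the YAML indentation in the `printf` arguments is an assumption, since the whitespace in this diff view is not reliable.

```shell
#!/usr/bin/env bash
# Sketch only: append the desktop pillar once, and do nothing on later calls.
# The pillar path is passed in; nothing here touches a real minion file.
add_desktop_pillar() {
    pillar_file=$1
    if grep -q "^desktop:$" "$pillar_file"; then
        echo "The desktop pillar already exists in $pillar_file."
        return 0
    fi
    # Indentation below is assumed (two spaces per YAML level).
    printf '%s\n' \
        "desktop:" \
        "  gui:" \
        "    enabled: true" \
        "" >> "$pillar_file"
}
```

Anchoring the `grep` pattern with `^` and `$` means only a top-level `desktop:` key counts as already present, which is what makes a second run a no-op.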

@@ -83,7 +83,7 @@ echo "Since this is not a manager, the pillar values to enable analyst workstati

|

||||

{#- if not Rocky #}

|

||||

{%- else %}

|

||||

|

||||

echo "The Analyst Workstation can only be installed on Rocky. Please view the documentation at $doc_workstation_url."

|

||||

echo "The Security Onion Desktop can only be installed on Rocky Linux. Please view the documentation at $doc_desktop_url."

|

||||

|

||||

{#- endif grains.os == Rocky #}

|

||||

{% endif -%}

|

||||

@@ -14,19 +14,56 @@

|

||||

{%- set ES_PASS = salt['pillar.get']('elasticsearch:auth:users:so_elastic_user:pass', '') %}

|

||||

|

||||

INDEX_DATE=$(date +'%Y.%m.%d')

|

||||

RUNID=$(cat /dev/urandom | tr -dc 'a-z0-9' | fold -w 8 | head -n 1)

|

||||

LOG_FILE=/nsm/import/evtx-import.log

|

||||

|

||||

. /usr/sbin/so-common

|

||||

|

||||

function usage {

|

||||

cat << EOF

|

||||

Usage: $0 <evtx-file-1> [evtx-file-2] [evtx-file-*]

|

||||

Usage: $0 [options] <evtx-file-1> [evtx-file-2] [evtx-file-*]

|

||||

|

||||

Imports one or more evtx files into Security Onion. The evtx files will be analyzed and made available for review in the Security Onion toolset.

|

||||

|

||||

Options:

|

||||

--json Outputs summary in JSON format. Implies --quiet.

|

||||

--quiet Silences progress information to stdout.

|

||||

EOF

|

||||

}

|

||||

|

||||

quiet=0

|

||||

json=0

|

||||

INPUT_FILES=

|

||||

while [[ $# -gt 0 ]]; do

|

||||

param=$1

|

||||

shift

|

||||

case "$param" in

|

||||

--json)

|

||||

json=1

|

||||

quiet=1

|

||||

;;

|

||||

--quiet)

|

||||

quiet=1

|

||||

;;

|

||||

-*)

|

||||

echo "Encountered unexpected parameter: $param"

|

||||

usage

|

||||

exit 1

|

||||

;;

|

||||

*)

|

||||

if [[ "$INPUT_FILES" != "" ]]; then

|

||||

INPUT_FILES="$INPUT_FILES $param"

|

||||

else

|

||||

INPUT_FILES="$param"

|

||||

fi

|

||||

;;

|

||||

esac

|

||||

done

|

||||

|

||||

function status {

|

||||

msg=$1

|

||||

[[ $quiet -eq 1 ]] && return

|

||||

echo "$msg"

|

||||

}

|

||||

|

||||

function evtx2es() {

|

||||

EVTX=$1

|

||||
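The option loop above collects file names into a single space-separated string, so a later unquoted `for ... in $INPUT_FILES` would split a path such as `my logs/a.evtx` into two words. A possible alternative, sketched here and not taken from the commit, keeps the files in a bash array; `parse_args` is a hypothetical wrapper name and the file names are placeholders.

```shell
#!/usr/bin/env bash
# Sketch only: same options as the diff, but input files are stored in a
# bash array so that paths containing spaces stay intact.
parse_args() {
    quiet=0
    json=0
    INPUT_FILES=()
    while [[ $# -gt 0 ]]; do
        param=$1
        shift
        case "$param" in
            --json)  json=1; quiet=1 ;;   # --json implies --quiet
            --quiet) quiet=1 ;;
            -*)      echo "Encountered unexpected parameter: $param" >&2
                     return 1 ;;
            *)       INPUT_FILES+=("$param") ;;
        esac
    done
}

# Print progress messages unless quiet mode is on.
status() {
    [[ $quiet -eq 1 ]] && return
    echo "$1"
}

parse_args "my logs/a.evtx" b.evtx
for f in "${INPUT_FILES[@]}"; do
    status "Processing Import: $f"
done
```

Iterating with `"${INPUT_FILES[@]}"` yields one element per original argument, which matches the intent of the "paths must be quoted in case they include spaces" comment in the script.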

@@ -42,31 +79,30 @@ function evtx2es() {

|

||||

}

|

||||

|

||||

# if no parameters supplied, display usage

|

||||

if [ $# -eq 0 ]; then

|

||||

if [ "$INPUT_FILES" == "" ]; then

|

||||

usage

|

||||

exit 1

|

||||

fi

|

||||

|

||||

# ensure this is a Manager node

|

||||

require_manager

|

||||

require_manager &> /dev/null

|

||||

|

||||

# verify that all parameters are files

|

||||

for i in "$@"; do

|

||||

for i in $INPUT_FILES; do

|

||||

if ! [ -f "$i" ]; then

|

||||

usage

|

||||

echo "\"$i\" is not a valid file!"

|

||||

exit 2

|

||||

fi

|

||||

done

|

||||

|

||||

# track if we have any valid or invalid evtx

|

||||

INVALID_EVTXS="no"

|

||||

VALID_EVTXS="no"

|

||||

|

||||

# track oldest start and newest end so that we can generate the Kibana search hyperlink at the end

|

||||

START_OLDEST="2050-12-31"

|

||||

END_NEWEST="1971-01-01"

|

||||

|

||||

INVALID_EVTXS_COUNT=0

|

||||

VALID_EVTXS_COUNT=0

|

||||

SKIPPED_EVTXS_COUNT=0

|

||||

|

||||

touch /nsm/import/evtx-start_oldest

|

||||

touch /nsm/import/evtx-end_newest

|

||||

|

||||

@@ -74,27 +110,39 @@ echo $START_OLDEST > /nsm/import/evtx-start_oldest

|

||||

echo $END_NEWEST > /nsm/import/evtx-end_newest

|

||||

|

||||

# paths must be quoted in case they include spaces

|

||||

for EVTX in "$@"; do

|

||||

for EVTX in $INPUT_FILES; do

|

||||

EVTX=$(/usr/bin/realpath "$EVTX")

|

||||

echo "Processing Import: ${EVTX}"

|

||||

status "Processing Import: ${EVTX}"

|

||||

|

||||

# generate a unique hash to assist with dedupe checks

|

||||

HASH=$(md5sum "${EVTX}" | awk '{ print $1 }')

|

||||

HASH_DIR=/nsm/import/${HASH}

|

||||

echo "- assigning unique identifier to import: $HASH"

|

||||

status "- assigning unique identifier to import: $HASH"

|

||||

|

||||

if [[ "$HASH_FILTERS" == "" ]]; then

|

||||

HASH_FILTERS="import.id:${HASH}"

|

||||

HASHES="${HASH}"

|

||||

else

|

||||

HASH_FILTERS="$HASH_FILTERS%20OR%20import.id:${HASH}"

|

||||

HASHES="${HASHES} ${HASH}"

|

||||

fi

|

||||

|

||||

if [ -d $HASH_DIR ]; then

|

||||

echo "- this EVTX has already been imported; skipping"

|

||||

INVALID_EVTXS="yes"

|

||||

status "- this EVTX has already been imported; skipping"

|

||||

SKIPPED_EVTXS_COUNT=$((SKIPPED_EVTXS_COUNT + 1))

|

||||

else

|

||||

VALID_EVTXS="yes"

|

||||

|

||||

EVTX_DIR=$HASH_DIR/evtx

|

||||

mkdir -p $EVTX_DIR

|

||||

|

||||

# import evtx and write them to import ingest pipeline

|

||||

echo "- importing logs to Elasticsearch..."

|

||||

status "- importing logs to Elasticsearch..."

|

||||

evtx2es "${EVTX}" $HASH

|

||||

if [[ $? -ne 0 ]]; then

|

||||

INVALID_EVTXS_COUNT=$((INVALID_EVTXS_COUNT + 1))

|

||||

status "- WARNING: This evtx file may not have fully imported successfully"

|

||||

else

|

||||

VALID_EVTXS_COUNT=$((VALID_EVTXS_COUNT + 1))

|

||||

fi

|

||||

|

||||

# compare $START to $START_OLDEST

|

||||

START=$(cat /nsm/import/evtx-start_oldest)

|

||||
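The `HASH_FILTERS` accumulation in this hunk joins one `import.id:` term per file with a URL-encoded ` OR `. The same joining logic can be sketched as a standalone function; `build_hash_filters` is a hypothetical name and the hash values are placeholders, not real import identifiers.

```shell
#!/usr/bin/env bash
# Sketch: join import hashes into the URL-encoded "import.id:<h> OR ..."
# query fragment, mirroring the HASH_FILTERS accumulation in the loop above.
build_hash_filters() {
    filters=""
    for hash in "$@"; do
        if [ -z "$filters" ]; then
            filters="import.id:${hash}"
        else
            # %20 is a URL-encoded space, so this appends " OR import.id:<hash>"
            filters="${filters}%20OR%20import.id:${hash}"
        fi
    done
    echo "$filters"
}

# Placeholder hash values, for illustration only.
build_hash_filters aaa111 bbb222
# prints: import.id:aaa111%20OR%20import.id:bbb222
```

Because the hash is added to the filter before the "already imported" check, skipped files still appear in the generated dashboard query.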

@@ -118,38 +166,60 @@ for EVTX in "$@"; do

|

||||

|

||||

fi # end of valid evtx

|

||||

|

||||

echo

|

||||

status

|

||||

|

||||

done # end of for-loop processing evtx files

|

||||

|

||||

# remove temp files

|

||||

echo "Cleaning up:"

|

||||

for TEMP_EVTX in ${TEMP_EVTXS[@]}; do

|

||||

echo "- removing temporary evtx $TEMP_EVTX"

|

||||

rm -f $TEMP_EVTX

|

||||

done

|

||||

|

||||

# output final messages

|

||||

if [ "$INVALID_EVTXS" = "yes" ]; then

|

||||

echo

|

||||

echo "Please note! One or more evtx was invalid! You can scroll up to see which ones were invalid."

|

||||

if [[ $INVALID_EVTXS_COUNT -gt 0 ]]; then

|

||||

status

|

||||

status "Please note! One or more evtx was invalid! You can scroll up to see which ones were invalid."

|

||||

fi

|

||||

|

||||

START_OLDEST_FORMATTED=`date +%Y-%m-%d --date="$START_OLDEST"`

|

||||

START_OLDEST_SLASH=$(echo $START_OLDEST_FORMATTED | sed -e 's/-/%2F/g')

|

||||

END_NEWEST_SLASH=$(echo $END_NEWEST | sed -e 's/-/%2F/g')

|

||||

|

||||

if [ "$VALID_EVTXS" = "yes" ]; then

|

||||

cat << EOF

|

||||

if [[ $VALID_EVTXS_COUNT -gt 0 ]] || [[ $SKIPPED_EVTXS_COUNT -gt 0 ]]; then

|

||||

URL="https://{{ URLBASE }}/#/dashboards?q=$HASH_FILTERS%20%7C%20groupby%20-sankey%20event.dataset%20event.category%2a%20%7C%20groupby%20-pie%20event.category%20%7C%20groupby%20-bar%20event.module%20%7C%20groupby%20event.dataset%20%7C%20groupby%20event.module%20%7C%20groupby%20event.category%20%7C%20groupby%20observer.name%20%7C%20groupby%20source.ip%20%7C%20groupby%20destination.ip%20%7C%20groupby%20destination.port&t=${START_OLDEST_SLASH}%2000%3A00%3A00%20AM%20-%20${END_NEWEST_SLASH}%2000%3A00%3A00%20AM&z=UTC"

|

||||

|

||||

Import complete!

|

||||

|

||||

You can use the following hyperlink to view data in the time range of your import. You can triple-click to quickly highlight the entire hyperlink and you can then copy it into your browser:

|

||||

https://{{ URLBASE }}/#/dashboards?q=import.id:${RUNID}%20%7C%20groupby%20-sankey%20event.dataset%20event.category%2a%20%7C%20groupby%20-pie%20event.category%20%7C%20groupby%20-bar%20event.module%20%7C%20groupby%20event.dataset%20%7C%20groupby%20event.module%20%7C%20groupby%20event.category%20%7C%20groupby%20observer.name%20%7C%20groupby%20source.ip%20%7C%20groupby%20destination.ip%20%7C%20groupby%20destination.port&t=${START_OLDEST_SLASH}%2000%3A00%3A00%20AM%20-%20${END_NEWEST_SLASH}%2000%3A00%3A00%20AM&z=UTC

|

||||

|

||||

or you can manually set your Time Range to be (in UTC):

|

||||

From: $START_OLDEST_FORMATTED To: $END_NEWEST

|

||||

|

||||

Please note that it may take 30 seconds or more for events to appear in Security Onion Console.

|

||||

EOF

|

||||

status "Import complete!"

|

||||

status

|

||||

status "Use the following hyperlink to view the imported data. Triple-click to quickly highlight the entire hyperlink and then copy it into a browser:"

|

||||

status

|

||||

status "$URL"

|

||||

status

|

||||

status "or, manually set the Time Range to be (in UTC):"

|

||||

status

|

||||

status "From: $START_OLDEST_FORMATTED To: $END_NEWEST"

|

||||

status

|

||||

status "Note: It can take 30 seconds or more for events to appear in Security Onion Console."

|

||||

RESULT=0

|

||||

else

|

||||

START_OLDEST=

|

||||

END_NEWEST=

|

||||

URL=

|

||||

RESULT=1

|

||||

fi

|

||||

|

||||

if [[ $json -eq 1 ]]; then

|

||||

jq -n \

|

||||

--arg success_count "$VALID_EVTXS_COUNT" \

|

||||

--arg fail_count "$INVALID_EVTXS_COUNT" \

|

||||

--arg skipped_count "$SKIPPED_EVTXS_COUNT" \

|

||||

--arg begin_date "$START_OLDEST" \

|

||||

--arg end_date "$END_NEWEST" \

|

||||

--arg url "$URL" \

|

||||

--arg hashes "$HASHES" \

|

||||

'''{

|

||||

success_count: $success_count,

|

||||

fail_count: $fail_count,

|

||||

skipped_count: $skipped_count,

|

||||

begin_date: $begin_date,

|

||||

end_date: $end_date,

|

||||

url: $url,

|

||||

hash: ($hashes / " ")

|

||||

}'''

|

||||

fi

|

||||

|

||||

exit $RESULT

|

||||

@@ -15,12 +15,51 @@

|

||||

|

||||

function usage {

|

||||

cat << EOF

|

||||

Usage: $0 <pcap-file-1> [pcap-file-2] [pcap-file-N]

|

||||

Usage: $0 [options] <pcap-file-1> [pcap-file-2] [pcap-file-N]

|

||||

|

||||

Imports one or more PCAP files onto a sensor node. The PCAP traffic will be analyzed and made available for review in the Security Onion toolset.

|

||||

|

||||

Options:

|

||||

--json Outputs summary in JSON format. Implies --quiet.

|

||||

--quiet Silences progress information to stdout.

|

||||

EOF

|

||||

}

|

||||

|

||||

quiet=0

|

||||

json=0

|

||||

INPUT_FILES=

|

||||

while [[ $# -gt 0 ]]; do

|

||||

param=$1

|

||||

shift

|

||||

case "$param" in

|

||||

--json)

|

||||

json=1

|

||||

quiet=1

|

||||

;;

|

||||

--quiet)

|

||||

quiet=1

|

||||

;;

|

||||

-*)

|

||||

echo "Encountered unexpected parameter: $param"

|

||||

usage

|

||||

exit 1

|

||||

;;

|

||||

*)

|

||||

if [[ "$INPUT_FILES" != "" ]]; then

|

||||

INPUT_FILES="$INPUT_FILES $param"

|

||||

else

|

||||

INPUT_FILES="$param"

|

||||

fi

|

||||

;;

|

||||

esac

|

||||

done

|

||||

|

||||

function status {

|

||||

msg=$1

|

||||

[[ $quiet -eq 1 ]] && return

|

||||

echo "$msg"

|

||||

}

|

||||

|

||||

function pcapinfo() {

|

||||

PCAP=$1

|

||||

ARGS=$2

|

||||

@@ -84,7 +123,7 @@ function zeek() {

|

||||

}

|

||||

|

||||

# if no parameters supplied, display usage

|

||||

if [ $# -eq 0 ]; then

|

||||

if [ "$INPUT_FILES" == "" ]; then

|

||||

usage

|

||||

exit 1

|

||||

fi

|

||||

@@ -96,31 +135,30 @@ if [ ! -d /opt/so/conf/suricata ]; then

|

||||

fi

|

||||

|

||||

# verify that all parameters are files

|

||||

for i in "$@"; do

|

||||

for i in $INPUT_FILES; do

|

||||

if ! [ -f "$i" ]; then

|

||||

usage

|

||||

echo "\"$i\" is not a valid file!"

|

||||

exit 2

|

||||

fi

|

||||

done

|

||||

|

||||

# track if we have any valid or invalid pcaps

|

||||

INVALID_PCAPS="no"

|

||||

VALID_PCAPS="no"

|

||||

|

||||

# track oldest start and newest end so that we can generate the Kibana search hyperlink at the end

|

||||

START_OLDEST="2050-12-31"

|

||||

END_NEWEST="1971-01-01"

|

||||

|

||||

INVALID_PCAPS_COUNT=0

|

||||

VALID_PCAPS_COUNT=0

|

||||

SKIPPED_PCAPS_COUNT=0

|

||||

|

||||

# paths must be quoted in case they include spaces

|

||||

for PCAP in "$@"; do

|

||||

for PCAP in $INPUT_FILES; do

|

||||

PCAP=$(/usr/bin/realpath "$PCAP")

|

||||

echo "Processing Import: ${PCAP}"

|

||||

echo "- verifying file"

|

||||

status "Processing Import: ${PCAP}"

|

||||

status "- verifying file"

|

||||

if ! pcapinfo "${PCAP}" > /dev/null 2>&1; then

|

||||

# try to fix pcap and then process the fixed pcap directly

|

||||

PCAP_FIXED=`mktemp /tmp/so-import-pcap-XXXXXXXXXX.pcap`

|

||||

echo "- attempting to recover corrupted PCAP file"

|

||||

status "- attempting to recover corrupted PCAP file"

|

||||

pcapfix "${PCAP}" "${PCAP_FIXED}"

|

||||

# Make fixed file world readable since the Suricata docker container will run as a non-root user

|

||||

chmod a+r "${PCAP_FIXED}"

|

||||

@@ -131,33 +169,44 @@ for PCAP in "$@"; do

|

||||

# generate a unique hash to assist with dedupe checks

|

||||

HASH=$(md5sum "${PCAP}" | awk '{ print $1 }')

|

||||

HASH_DIR=/nsm/import/${HASH}

|

||||

echo "- assigning unique identifier to import: $HASH"

|

||||

status "- assigning unique identifier to import: $HASH"

|

||||

|

||||

if [ -d $HASH_DIR ]; then

|

||||

echo "- this PCAP has already been imported; skipping"

|

||||

INVALID_PCAPS="yes"

|

||||

elif pcapinfo "${PCAP}" |egrep -q "Last packet time: 1970-01-01|Last packet time: n/a"; then

|

||||

echo "- this PCAP file is invalid; skipping"

|

||||

INVALID_PCAPS="yes"

|

||||

pcap_data=$(pcapinfo "${PCAP}")

|

||||

if ! echo "$pcap_data" | grep -q "First packet time:" || echo "$pcap_data" |egrep -q "Last packet time: 1970-01-01|Last packet time: n/a"; then

|

||||

status "- this PCAP file is invalid; skipping"

|

||||

INVALID_PCAPS_COUNT=$((INVALID_PCAPS_COUNT + 1))

|

||||

else

|

||||

VALID_PCAPS="yes"

|

||||

if [ -d $HASH_DIR ]; then

|

||||

status "- this PCAP has already been imported; skipping"

|

||||

SKIPPED_PCAPS_COUNT=$((SKIPPED_PCAPS_COUNT + 1))

|

||||

else

|

||||

VALID_PCAPS_COUNT=$((VALID_PCAPS_COUNT + 1))

|

||||

|

||||

PCAP_DIR=$HASH_DIR/pcap

|

||||

mkdir -p $PCAP_DIR

|

||||

PCAP_DIR=$HASH_DIR/pcap

|

||||

mkdir -p $PCAP_DIR

|

||||

|

||||

# generate IDS alerts and write them to standard pipeline

|

||||

echo "- analyzing traffic with Suricata"

|

||||

suricata "${PCAP}" $HASH

|

||||

{% if salt['pillar.get']('global:mdengine') == 'ZEEK' %}

|

||||

# generate Zeek logs and write them to a unique subdirectory in /nsm/import/zeek/

|

||||

# since each run writes to a unique subdirectory, there is no need for a lock file

|

||||

echo "- analyzing traffic with Zeek"

|

||||

zeek "${PCAP}" $HASH

|

||||

{% endif %}

|

||||

# generate IDS alerts and write them to standard pipeline

|

||||

status "- analyzing traffic with Suricata"

|

||||

suricata "${PCAP}" $HASH

|

||||

{% if salt['pillar.get']('global:mdengine') == 'ZEEK' %}

|

||||

# generate Zeek logs and write them to a unique subdirectory in /nsm/import/zeek/

|

||||

# since each run writes to a unique subdirectory, there is no need for a lock file

|

||||

status "- analyzing traffic with Zeek"

|

||||

zeek "${PCAP}" $HASH

|

||||

{% endif %}

|

||||

fi

|

||||

|

||||

if [[ "$HASH_FILTERS" == "" ]]; then

|

||||

HASH_FILTERS="import.id:${HASH}"

|

||||

HASHES="${HASH}"

|

||||

else

|

||||

HASH_FILTERS="$HASH_FILTERS%20OR%20import.id:${HASH}"

|

||||

HASHES="${HASHES} ${HASH}"

|

||||

fi

|

||||

|

||||

START=$(pcapinfo "${PCAP}" -a |grep "First packet time:" | awk '{print $4}')

|

||||

END=$(pcapinfo "${PCAP}" -e |grep "Last packet time:" | awk '{print $4}')

|

||||

echo "- saving PCAP data spanning dates $START through $END"

|

||||

status "- found PCAP data spanning dates $START through $END"

|

||||

|

||||

# compare $START to $START_OLDEST

|

||||

START_COMPARE=$(date -d $START +%s)
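
Note on the range tracking: the script starts from sentinel values (`START_OLDEST="2050-12-31"`, `END_NEWEST="1971-01-01"`) and narrows them per capture, converting dates with `date -d ... +%s` before comparing. The rest of that comparison is outside this hunk, so the following is only a sketch of the idea. It swaps in plain string comparison, which is valid for `YYYY-MM-DD` dates because they sort lexicographically. The `update_range` helper is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical sketch (not the project's code): track the oldest start date
# and the newest end date across several captures.
# Assumption: dates are ISO-8601 (YYYY-MM-DD), so string order equals
# chronological order and no epoch conversion is needed.

START_OLDEST="2050-12-31"   # sentinel: later than any expected capture
END_NEWEST="1971-01-01"     # sentinel: earlier than any expected capture

update_range() {
  local start=$1 end=$2
  [[ "$start" < "$START_OLDEST" ]] && START_OLDEST=$start
  [[ "$end" > "$END_NEWEST" ]] && END_NEWEST=$end
  return 0
}

update_range 2023-05-01 2023-05-03
update_range 2023-04-28 2023-05-02
echo "From: $START_OLDEST To: $END_NEWEST"
```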

|

||||

@@ -179,37 +228,62 @@ for PCAP in "$@"; do

|

||||

|

||||

fi # end of valid pcap

|

||||

|

||||

echo

|

||||

status

|

||||

|

||||

done # end of for-loop processing pcap files

|

||||

|

||||

# remove temp files

|

||||

echo "Cleaning up:"

|

||||

for TEMP_PCAP in ${TEMP_PCAPS[@]}; do

|

||||

echo "- removing temporary pcap $TEMP_PCAP"

|

||||

status "- removing temporary pcap $TEMP_PCAP"

|

||||

rm -f $TEMP_PCAP

|

||||

done

|

||||

|

||||

# output final messages

|

||||

if [ "$INVALID_PCAPS" = "yes" ]; then

|

||||

echo

|

||||

echo "Please note! One or more pcaps was invalid! You can scroll up to see which ones were invalid."

|

||||

if [[ $INVALID_PCAPS_COUNT -gt 0 ]]; then

|

||||

status

|

||||

status "WARNING: One or more pcaps was invalid. Scroll up to see which ones were invalid."

|

||||

fi

|

||||

|

||||

START_OLDEST_SLASH=$(echo $START_OLDEST | sed -e 's/-/%2F/g')

|

||||

END_NEWEST_SLASH=$(echo $END_NEWEST | sed -e 's/-/%2F/g')

|

||||

if [[ $VALID_PCAPS_COUNT -gt 0 ]] || [[ $SKIPPED_PCAPS_COUNT -gt 0 ]]; then

|

||||

URL="https://{{ URLBASE }}/#/dashboards?q=$HASH_FILTERS%20%7C%20groupby%20-sankey%20event.dataset%20event.category%2a%20%7C%20groupby%20-pie%20event.category%20%7C%20groupby%20-bar%20event.module%20%7C%20groupby%20event.dataset%20%7C%20groupby%20event.module%20%7C%20groupby%20event.category%20%7C%20groupby%20observer.name%20%7C%20groupby%20source.ip%20%7C%20groupby%20destination.ip%20%7C%20groupby%20destination.port&t=${START_OLDEST_SLASH}%2000%3A00%3A00%20AM%20-%20${END_NEWEST_SLASH}%2000%3A00%3A00%20AM&z=UTC"

|

||||

|

||||

if [ "$VALID_PCAPS" = "yes" ]; then

|

||||

cat << EOF

|

||||

|

||||

Import complete!

|

||||

|

||||

You can use the following hyperlink to view data in the time range of your import. You can triple-click to quickly highlight the entire hyperlink and you can then copy it into your browser:

|

||||

https://{{ URLBASE }}/#/dashboards?q=import.id:${HASH}%20%7C%20groupby%20-sankey%20event.dataset%20event.category%2a%20%7C%20groupby%20-pie%20event.category%20%7C%20groupby%20-bar%20event.module%20%7C%20groupby%20event.dataset%20%7C%20groupby%20event.module%20%7C%20groupby%20event.category%20%7C%20groupby%20observer.name%20%7C%20groupby%20source.ip%20%7C%20groupby%20destination.ip%20%7C%20groupby%20destination.port&t=${START_OLDEST_SLASH}%2000%3A00%3A00%20AM%20-%20${END_NEWEST_SLASH}%2000%3A00%3A00%20AM&z=UTC

|

||||

|

||||

or you can manually set your Time Range to be (in UTC):

|

||||

From: $START_OLDEST To: $END_NEWEST

|

||||

|

||||

Please note that it may take 30 seconds or more for events to appear in Security Onion Console.

|

||||

EOF

|

||||

status "Import complete!"

|

||||

status

|

||||

status "Use the following hyperlink to view the imported data. Triple-click to quickly highlight the entire hyperlink and then copy it into a browser:"

|

||||

status "$URL"

|

||||

status

|

||||

status "or, manually set the Time Range to be (in UTC):"

|

||||

status "From: $START_OLDEST To: $END_NEWEST"

|

||||

status

|

||||

status "Note: It can take 30 seconds or more for events to appear in Security Onion Console."

|

||||

RESULT=0

|

||||

else

|

||||

START_OLDEST=

|

||||

END_NEWEST=

|

||||

URL=

|

||||

RESULT=1

|

||||

fi

|

||||

|

||||

if [[ $json -eq 1 ]]; then

|

||||

jq -n \

|

||||

--arg success_count "$VALID_PCAPS_COUNT" \

|

||||

--arg fail_count "$INVALID_PCAPS_COUNT" \

|

||||

--arg skipped_count "$SKIPPED_PCAPS_COUNT" \

|

||||

--arg begin_date "$START_OLDEST" \

|

||||

--arg end_date "$END_NEWEST" \

|

||||

--arg url "$URL" \

|

||||

--arg hashes "$HASHES" \

|

||||

'''{

|

||||

success_count: $success_count,

|

||||

fail_count: $fail_count,

|

||||

skipped_count: $skipped_count,

|

||||

begin_date: $begin_date,

|

||||

end_date: $end_date,

|

||||

url: $url,

|

||||

hash: ($hashes / " ")

|

||||

}'''

|

||||

fi

|

||||

|

||||

exit $RESULT
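
Note on the dashboard link: the loop above accumulates one `import.id:<hash>` term per capture, joined with a URL-encoded ` OR `, and the dates are encoded by replacing `-` with `%2F`. A minimal sketch of those two string operations in isolation. The function names `build_hash_filter` and `encode_date` are hypothetical, and the hash values are placeholders rather than real MD5 sums:

```shell
#!/usr/bin/env bash
# Hypothetical sketch (not the project's code): build the query filter and
# encode a date the way the script's URL expects.

# Join hashes as "import.id:A%20OR%20import.id:B ...".
build_hash_filter() {
  local filter="" hash
  for hash in "$@"; do
    if [[ -z "$filter" ]]; then
      filter="import.id:${hash}"
    else
      filter="${filter}%20OR%20import.id:${hash}"
    fi
  done
  printf '%s\n' "$filter"
}

# Replace every "-" with "%2F", as the sed call in the script does.
encode_date() {
  local d=$1
  printf '%s\n' "${d//-/%2F}"
}

build_hash_filter aaa bbb
encode_date 2023-05-01
```

Bash parameter expansion is used here in place of `sed` purely to keep the sketch free of external commands; the result is the same for these inputs.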

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -13,7 +13,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -13,7 +13,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||

@@ -12,7 +12,7 @@ actions:

|

||||

options:

|

||||

delete_aliases: False

|

||||

timeout_override:

|

||||

continue_if_exception: False

|

||||

ignore_empty_list: True

|

||||

disable_action: False

|

||||

filters:

|

||||

- filtertype: pattern

|

||||

|

||||


@@ -1,7 +1,7 @@

|

||||

include:

|

||||

- workstation.xwindows

|

||||

- desktop.xwindows

|

||||

{# If the master is 'salt' then the minion hasn't been configured and isn't connected to the grid. #}

|

||||

{# We need this since the trusted-ca state uses mine data. #}

|

||||

{% if grains.master != 'salt' %}

|

||||

- workstation.trusted-ca

|

||||

- desktop.trusted-ca

|

||||

{% endif %}

|

||||

310

salt/desktop/packages.sls

Normal file

@@ -0,0 +1,310 @@

|

||||

{% from 'vars/globals.map.jinja' import GLOBALS %}

|

||||

|

||||

{# we only want this state to run if the OS is Rocky #}

|

||||

{% if GLOBALS.os == 'Rocky' %}

|

||||

|

||||

|

||||

desktop_packages:

|

||||

pkg.installed:

|

||||

- pkgs:

|

||||

- NetworkManager

|

||||

- NetworkManager-adsl

|

||||

- NetworkManager-bluetooth

|

||||

- NetworkManager-l2tp-gnome

|

||||

- NetworkManager-libreswan-gnome

|

||||

- NetworkManager-openconnect-gnome

|

||||

- NetworkManager-openvpn-gnome

|

||||

- NetworkManager-ppp

|

||||

- NetworkManager-pptp-gnome

|

||||

- NetworkManager-team

|

||||

- NetworkManager-tui

|

||||

- NetworkManager-wifi

|

||||

- NetworkManager-wwan

|

||||

- PackageKit-gstreamer-plugin

|

||||

- aajohan-comfortaa-fonts

|

||||

- abattis-cantarell-fonts

|

||||

- acl

|

||||

- alsa-ucm

|

||||

- alsa-utils

|

||||

- anaconda

|

||||

- anaconda-install-env-deps

|

||||

- anaconda-live

|

||||

- at

|

||||

- attr

|

||||

- audit

|

||||

- authselect

|

||||

- basesystem

|

||||

- bash

|

||||

- bash-completion

|

||||

- bc

|

||||

- blktrace

|

||||

- bluez

|

||||

- bolt

|

||||

- bpftool

|

||||

- bzip2

|

||||

- chkconfig

|

||||

- chromium

|

||||

- chrony

|

||||

- cinnamon

|

||||

- cinnamon-control-center

|

||||

- cinnamon-screensaver

|

||||

- cockpit

|

||||

- coreutils

|

||||

- cpio

|

||||

- cronie

|

||||

- crontabs

|

||||

- crypto-policies

|

||||

- crypto-policies-scripts

|

||||

- cryptsetup

|

||||

- curl

|

||||

- cyrus-sasl-plain

|

||||

- dbus

|

||||

- dejavu-sans-fonts

|

||||

- dejavu-sans-mono-fonts

|

||||

- dejavu-serif-fonts

|

||||

- dnf

|

||||

- dnf-plugins-core

|

||||

- dos2unix

|

||||

- dosfstools

|

||||

- dracut-config-rescue

|

||||

- dracut-live

|

||||

- dsniff

|

||||

- e2fsprogs

|

||||

- ed

|

||||

- efi-filesystem

|

||||

- efibootmgr

|

||||

- efivar-libs

|

||||

- eom

|

||||

- ethtool

|

||||

- f36-backgrounds-extras-gnome

|

||||

- f36-backgrounds-gnome

|

||||

- f37-backgrounds-extras-gnome

|

||||

- f37-backgrounds-gnome

|

||||

- file

|

||||

- filesystem

|

||||

- firewall-config

|

||||

- firewalld

|

||||

- fprintd-pam

|

||||

- git

|

||||

- glibc

|

||||

- glibc-all-langpacks

|

||||

- gnome-calculator

|

||||

- gnome-disk-utility

|

||||

- gnome-screenshot

|

||||

- gnome-system-monitor

|

||||

- gnome-terminal

|

||||

- gnupg2

|

||||

- google-noto-emoji-color-fonts

|

||||

- google-noto-sans-cjk-ttc-fonts

|

||||

- google-noto-sans-gurmukhi-fonts

|

||||

- google-noto-sans-sinhala-vf-fonts

|

||||

- google-noto-serif-cjk-ttc-fonts

|

||||

- grub2-common

|

||||

- grub2-pc-modules

|

||||

- grub2-tools

|

||||

- grub2-tools-efi

|

||||

- grub2-tools-extra

|

||||

- grub2-tools-minimal

|

||||

- grubby

|

||||

- gstreamer1-plugins-bad-free

|

||||

- gstreamer1-plugins-good

|

||||

- gstreamer1-plugins-ugly-free

|

||||

- gvfs-gphoto2

|

||||

- gvfs-mtp

|

||||

- gvfs-smb

|

||||

- hostname

|

||||

- hyperv-daemons

|

||||

- ibus-anthy

|

||||

- ibus-hangul

|

||||

- ibus-libpinyin

|

||||

- ibus-libzhuyin

|

||||

- ibus-m17n

|

||||

- ibus-typing-booster

|

||||

- imsettings-systemd

|

||||

- initial-setup-gui

|

||||

- initscripts

|

||||

- initscripts-rename-device

|

||||

- iproute

|

||||

- iproute-tc

|

||||

- iprutils

|

||||

- iputils

|

||||

- irqbalance

|

||||

- iwl100-firmware

|

||||

- iwl1000-firmware

|

||||

- iwl105-firmware

|

||||

- iwl135-firmware

|

||||

- iwl2000-firmware

|

||||

- iwl2030-firmware

|

||||

- iwl3160-firmware

|

||||

- iwl5000-firmware

|

||||

- iwl5150-firmware

|

||||

- iwl6000g2a-firmware

|

||||

- iwl6000g2b-firmware

|

||||

- iwl6050-firmware

|

||||

- iwl7260-firmware

|

||||