Compare commits

103 Commits

reyesj2-pa...2.3.170-20

| SHA1 |
|---|
| 116a6a0acd |
| 311b69dc4a |
| fd59acce5d |
| 956d3e4345 |
| b8355b3a03 |
| 535b9f86db |
| 97c66a5404 |
| 6553beec99 |
| e171dd52b8 |
| 27a837369d |
| 043b9f78e2 |
| 2f260a785f |
| 001b2dc6cc |
| b13eedfbc2 |
| dd70ef17b9 |
| 82dff3e9da |
| d9cfd92b8f |
| 33cb771780 |
| 76cca8594d |
| 5c9c95ba1f |
| e62bebeafe |
| 8a0e92cc6f |
| 3f9259dd0a |
| 30b9868de1 |
| e88243c306 |
| 2128550df2 |
| db67c0ed94 |
| 2e32c0d236 |
| 4b1ad1910d |
| c337145b2c |
| bd7b4c92bc |
| 33ebed3468 |
| 616bc40412 |
| f00d9074ff |
| 9a692288e2 |
| fea2b481e3 |
| c17f0081ef |
| fbf0803906 |
| 5deda45b66 |
| 3b8d8163b3 |
| 2dfd41bd3c |
| 49eead1d55 |
| 54cb3c3a5a |
| 9f2b920454 |
| 604af45661 |
| 3f435c5c1a |
| 7769af4541 |
| 9903be8120 |
| 991a601a3d |
| 86519d43dc |
| 179f669acf |
| a02f878dcc |
| 32c29b28eb |
| 7bf2603414 |
| 4003876465 |
| 4c677961c4 |
| e950d865d8 |
| fd7a118664 |
| d7906945df |
| cb384ae024 |
| 7caead2387 |
| 4827c9e0d4 |
| 3b62fc63c9 |
| ad32c2b1a5 |
| f02f431dab |
| 812964e4d8 |
| 99805cc326 |
| 8d2b3f3dfe |
| 15f7fd8920 |
| 50460bf91e |
| ee654f767a |
| 8c694a7ca3 |
| 9ac640fa67 |
| db8d9fff2c |
| 811063268f |
| f2b10a5a86 |
| c69cac0e5f |
| fed4433088 |
| 839cfcaefa |
| 3123407ef0 |
| d24125c9e6 |
| 64dc278c95 |
| 626a824cd6 |
| 10ba3b4b5a |
| 1d059fc96e |
| 4c1585f8d8 |
| e235957c00 |
| 2cc665bac6 |
| d6e118dcd3 |
| 1d2534b2a1 |
| 484aa7b207 |
| 6986448239 |
| f1d74dcd67 |
| dd48d66c1c |
| 440f4e75c1 |
| c795a70e9c |
| 340dbe8547 |
| 52a5e743e9 |
| 5ceff52796 |
| f3a0ab0b2d |
| 4a7c994b66 |
| 07b8785f3d |
| 9a1092ab01 |

.github/.gitleaks.toml (6 changed lines, vendored)
@@ -536,11 +536,11 @@ secretGroup = 4
[allowlist]
description = "global allow lists"
regexes = ['''219-09-9999''', '''078-05-1120''', '''(9[0-9]{2}|666)-\d{2}-\d{4}''', '''RPM-GPG-KEY.*''', '''.*:.*StrelkaHexDump.*''', '''.*:.*PLACEHOLDER.*''', '''ssl_.*password''', '''integration_key\s=\s"so-logs-"''']
regexes = ['''219-09-9999''', '''078-05-1120''', '''(9[0-9]{2}|666)-\d{2}-\d{4}''']
paths = [
'''gitleaks.toml''',
'''(.*?)(jpg|gif|doc|pdf|bin|svg|socket)$''',
'''(go.mod|go.sum)$''',
'''salt/nginx/files/enterprise-attack.json''',
'''(.*?)whl$'''

'''salt/nginx/files/enterprise-attack.json'''
]
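The allowlist entries above are plain regular expressions. As a rough illustration of what the three patterns shared by both versions of the `regexes` line exempt, the Python sketch below checks candidate strings against them. The third pattern covers SSN-shaped strings whose area number is 666 or 900-999, ranges the US Social Security Administration does not assign, presumably so test data in those ranges is not flagged. It assumes an unanchored search over the candidate text; how gitleaks itself applies allowlist regexes (to the matched secret, the whole line, and so on) may differ, and `is_allowlisted` is a hypothetical helper, not part of gitleaks.

```python
import re

# The three allowlist patterns common to both versions of the regexes line above.
# TOML '''...''' literal strings keep backslashes, so \d reaches the regex engine intact.
ALLOWLIST_REGEXES = [
    r"219-09-9999",
    r"078-05-1120",
    r"(9[0-9]{2}|666)-\d{2}-\d{4}",
]


def is_allowlisted(candidate: str) -> bool:
    """Return True if any allowlist pattern matches anywhere in candidate.

    Assumption: an unanchored re.search; gitleaks' own matching rules may differ.
    """
    return any(re.search(pattern, candidate) for pattern in ALLOWLIST_REGEXES)
```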

.github/DISCUSSION_TEMPLATE/2-4.yml (202 changed lines, vendored)
@@ -1,202 +0,0 @@
body:
- type: markdown
attributes:
value: |
⚠️ This category is solely for conversations related to Security Onion 2.4 ⚠️

If your organization needs more immediate, enterprise grade professional support, with one-on-one virtual meetings and screensharing, contact us via our website: https://securityonion.com/support
- type: dropdown
attributes:
label: Version
description: Which version of Security Onion 2.4.x are you asking about?
options:
-
- 2.4.10
- 2.4.20
- 2.4.30
- 2.4.40
- 2.4.50
- 2.4.60
- 2.4.70
- 2.4.80
- 2.4.90
- 2.4.100
- 2.4.110
- 2.4.111
- 2.4.120
- 2.4.130
- 2.4.140
- 2.4.141
- 2.4.150
- 2.4.160
- 2.4.170
- 2.4.180
- 2.4.190
- 2.4.200
- Other (please provide detail below)
validations:
required: true
- type: dropdown
attributes:
label: Installation Method
description: How did you install Security Onion?
options:
-
- Security Onion ISO image
- Cloud image (Amazon, Azure, Google)
- Network installation on Red Hat derivative like Oracle, Rocky, Alma, etc. (unsupported)
- Network installation on Ubuntu (unsupported)
- Network installation on Debian (unsupported)
- Other (please provide detail below)
validations:
required: true
- type: dropdown
attributes:
label: Description
description: >
Is this discussion about installation, configuration, upgrading, or other?
options:
-
- installation
- configuration
- upgrading
- other (please provide detail below)
validations:
required: true
- type: dropdown
attributes:
label: Installation Type
description: >
When you installed, did you choose Import, Eval, Standalone, Distributed, or something else?
options:
-
- Import
- Eval
- Standalone
- Distributed
- other (please provide detail below)
validations:
required: true
- type: dropdown
attributes:
label: Location
description: >
Is this deployment in the cloud, on-prem with Internet access, or airgap?
options:
-
- cloud
- on-prem with Internet access
- airgap
- other (please provide detail below)
validations:
required: true
- type: dropdown
attributes:
label: Hardware Specs
description: >
Does your hardware meet or exceed the minimum requirements for your installation type as shown at https://docs.securityonion.net/en/2.4/hardware.html?
options:
-
- Meets minimum requirements
- Exceeds minimum requirements
- Does not meet minimum requirements
- other (please provide detail below)
validations:
required: true
- type: input
attributes:
label: CPU
description: How many CPU cores do you have?
validations:
required: true
- type: input
attributes:
label: RAM
description: How much RAM do you have?
validations:
required: true
- type: input
attributes:
label: Storage for /
description: How much storage do you have for the / partition?
validations:
required: true
- type: input
attributes:
label: Storage for /nsm
description: How much storage do you have for the /nsm partition?
validations:
required: true
- type: dropdown
attributes:
label: Network Traffic Collection
description: >
Are you collecting network traffic from a tap or span port?
options:
-
- tap
- span port
- other (please provide detail below)
validations:
required: true
- type: dropdown
attributes:
label: Network Traffic Speeds
description: >
How much network traffic are you monitoring?
options:
-
- Less than 1Gbps
- 1Gbps to 10Gbps
- more than 10Gbps
validations:
required: true
- type: dropdown
attributes:
label: Status
description: >
Does SOC Grid show all services on all nodes as running OK?
options:
-
- Yes, all services on all nodes are running OK
- No, one or more services are failed (please provide detail below)
validations:
required: true
- type: dropdown
attributes:
label: Salt Status
description: >
Do you get any failures when you run "sudo salt-call state.highstate"?
options:
-
- Yes, there are salt failures (please provide detail below)
- No, there are no failures
validations:
required: true
- type: dropdown
attributes:
label: Logs
description: >
Are there any additional clues in /opt/so/log/?
options:
-
- Yes, there are additional clues in /opt/so/log/ (please provide detail below)
- No, there are no additional clues
validations:
required: true
- type: textarea
attributes:
label: Detail
description: Please read our discussion guidelines at https://github.com/Security-Onion-Solutions/securityonion/discussions/1720 and then provide detailed information to help us help you.
placeholder: |-
STOP! Before typing, please read our discussion guidelines at https://github.com/Security-Onion-Solutions/securityonion/discussions/1720 in their entirety!

If your organization needs more immediate, enterprise grade professional support, with one-on-one virtual meetings and screensharing, contact us via our website: https://securityonion.com/support
validations:
required: true
- type: checkboxes
attributes:
label: Guidelines
options:
- label: I have read the discussion guidelines at https://github.com/Security-Onion-Solutions/securityonion/discussions/1720 and assert that I have followed the guidelines.
required: true

.github/ISSUE_TEMPLATE (12 changed lines, vendored, Normal file)
@@ -0,0 +1,12 @@
PLEASE STOP AND READ THIS INFORMATION!

If you are creating an issue just to ask a question, you will likely get faster and better responses by posting to our discussions forum instead:
https://securityonion.net/discuss

If you think you have found a possible bug or are observing a behavior that you weren't expecting, use the discussion forum to start a conversation about it instead of creating an issue.

If you are very familiar with the latest version of the product and are confident you have found a bug in Security Onion, you can continue with creating an issue here, but please make sure you have done the following:
- duplicated the issue on a fresh installation of the latest version
- provide information about your system and how you installed Security Onion
- include relevant log files
- include reproduction steps

.github/ISSUE_TEMPLATE/bug_report.md (38 changed lines, vendored)
@@ -1,38 +0,0 @@
---
name: Bug report
about: This option is for experienced community members to report a confirmed, reproducible bug
title: ''
labels: ''
assignees: ''

---
PLEASE STOP AND READ THIS INFORMATION!

If you are creating an issue just to ask a question, you will likely get faster and better responses by posting to our discussions forum at https://securityonion.net/discuss.

If you think you have found a possible bug or are observing a behavior that you weren't expecting, use the discussion forum at https://securityonion.net/discuss to start a conversation about it instead of creating an issue.

If you are very familiar with the latest version of the product and are confident you have found a bug in Security Onion, you can continue with creating an issue here, but please make sure you have done the following:
- duplicated the issue on a fresh installation of the latest version
- provide information about your system and how you installed Security Onion
- include relevant log files
- include reproduction steps

**Describe the bug**
A clear and concise description of what the bug is.

**To Reproduce**
Steps to reproduce the behavior:
1. Go to '...'
2. Click on '....'
3. Scroll down to '....'
4. See error

**Expected behavior**
A clear and concise description of what you expected to happen.

**Screenshots**
If applicable, add screenshots to help explain your problem.

**Additional context**
Add any other context about the problem here.

.github/ISSUE_TEMPLATE/config.yml (5 changed lines, vendored)
@@ -1,5 +0,0 @@
blank_issues_enabled: false
contact_links:
- name: Security Onion Discussions
url: https://securityonion.com/discussions
about: Please ask and answer questions here

.github/workflows/close-threads.yml (33 changed lines, vendored)
@@ -1,33 +0,0 @@
name: 'Close Threads'

on:
schedule:
- cron: '50 1 * * *'
workflow_dispatch:

permissions:
issues: write
pull-requests: write
discussions: write

concurrency:
group: lock-threads

jobs:
close-threads:
if: github.repository_owner == 'security-onion-solutions'
runs-on: ubuntu-latest
permissions:
issues: write
pull-requests: write
steps:
- uses: actions/stale@v5
with:
days-before-issue-stale: -1
days-before-issue-close: 60
stale-issue-message: "This issue is stale because it has been inactive for an extended period. Stale issues convey that the issue, while important to someone, is not critical enough for the author, or other community members to work on, sponsor, or otherwise shepherd the issue through to a resolution."
close-issue-message: "This issue was closed because it has been stale for an extended period. It will be automatically locked in 30 days, after which no further commenting will be available."
days-before-pr-stale: 45
days-before-pr-close: 60
stale-pr-message: "This PR is stale because it has been inactive for an extended period. The longer a PR remains stale the more out of date with the main branch it becomes."
close-pr-message: "This PR was closed because it has been stale for an extended period. It will be automatically locked in 30 days. If there is still a commitment to finishing this PR re-open it before it is locked."

.github/workflows/contrib.yml (4 changed lines, vendored)
@@ -11,14 +11,14 @@ jobs:
steps:
- name: "Contributor Check"
if: (github.event.comment.body == 'recheck' || github.event.comment.body == 'I have read the CLA Document and I hereby sign the CLA') || github.event_name == 'pull_request_target'
uses: cla-assistant/github-action@v2.3.1
uses: cla-assistant/github-action@v2.1.3-beta
env:
GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
PERSONAL_ACCESS_TOKEN : ${{ secrets.PERSONAL_ACCESS_TOKEN }}
with:
path-to-signatures: 'signatures_v1.json'
path-to-document: 'https://securityonionsolutions.com/cla'
allowlist: dependabot[bot],jertel,dougburks,TOoSmOotH,defensivedepth,m0duspwnens
allowlist: dependabot[bot],jertel,dougburks,TOoSmOotH,weslambert,defensivedepth,m0duspwnens
remote-organization-name: Security-Onion-Solutions
remote-repository-name: licensing

.github/workflows/lock-threads.yml (26 changed lines, vendored)
@@ -1,26 +0,0 @@
name: 'Lock Threads'

on:
schedule:
- cron: '50 2 * * *'
workflow_dispatch:

permissions:
issues: write
pull-requests: write
discussions: write

concurrency:
group: lock-threads

jobs:
lock-threads:
if: github.repository_owner == 'security-onion-solutions'
runs-on: ubuntu-latest
steps:
- uses: jertel/lock-threads@main
with:
include-discussion-currently-open: true
discussion-inactive-days: 90
issue-inactive-days: 30
pr-inactive-days: 30

.github/workflows/pythontest.yml (12 changed lines, vendored)
@@ -1,10 +1,6 @@
name: python-test

on:
pull_request:
paths:
- "salt/sensoroni/files/analyzers/**"
- "salt/manager/tools/sbin"
on: [push, pull_request]

jobs:
build:
@@ -13,8 +9,8 @@ jobs:
strategy:
fail-fast: false
matrix:
python-version: ["3.13"]
python-code-path: ["salt/sensoroni/files/analyzers", "salt/manager/tools/sbin"]
python-version: ["3.10"]
python-code-path: ["salt/sensoroni/files/analyzers"]

steps:
- uses: actions/checkout@v3
@@ -32,4 +28,4 @@ jobs:
flake8 ${{ matrix.python-code-path }} --show-source --max-complexity=12 --doctests --max-line-length=200 --statistics
- name: Test with pytest
run: |
PYTHONPATH=${{ matrix.python-code-path }} pytest ${{ matrix.python-code-path }} --cov=${{ matrix.python-code-path }} --doctest-modules --cov-report=term --cov-fail-under=100 --cov-config=pytest.ini
pytest ${{ matrix.python-code-path }} --cov=${{ matrix.python-code-path }} --doctest-modules --cov-report=term --cov-fail-under=100 --cov-config=${{ matrix.python-code-path }}/pytest.ini

.gitignore (1 changed line, vendored)
@@ -1,3 +1,4 @@

# Created by https://www.gitignore.io/api/macos,windows
# Edit at https://www.gitignore.io/?templates=macos,windows

@@ -1,53 +0,0 @@
### 2.4.190-20251024 ISO image released on 2025/10/24

### Download and Verify

2.4.190-20251024 ISO image:
https://download.securityonion.net/file/securityonion/securityonion-2.4.190-20251024.iso

MD5: 25358481FB876226499C011FC0710358
SHA1: 0B26173C0CE136F2CA40A15046D1DFB78BCA1165
SHA256: 4FD9F62EDA672408828B3C0C446FE5EA9FF3C4EE8488A7AB1101544A3C487872

Signature for ISO image:
https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.190-20251024.iso.sig

Signing key:
https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.4/main/KEYS

For example, here are the steps you can use on most Linux distributions to download and verify our Security Onion ISO image.

Download and import the signing key:
```
wget https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.4/main/KEYS -O - | gpg --import -
```

Download the signature file for the ISO:
```
wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.190-20251024.iso.sig
```

Download the ISO image:
```
wget https://download.securityonion.net/file/securityonion/securityonion-2.4.190-20251024.iso
```

Verify the downloaded ISO image using the signature file:
```
gpg --verify securityonion-2.4.190-20251024.iso.sig securityonion-2.4.190-20251024.iso
```

The output should show "Good signature" and the Primary key fingerprint should match what's shown below:
```
gpg: Signature made Thu 23 Oct 2025 07:21:46 AM EDT using RSA key ID FE507013
gpg: Good signature from "Security Onion Solutions, LLC <info@securityonionsolutions.com>"
gpg: WARNING: This key is not certified with a trusted signature!
gpg: There is no indication that the signature belongs to the owner.
Primary key fingerprint: C804 A93D 36BE 0C73 3EA1 9644 7C10 60B7 FE50 7013
```

If it fails to verify, try downloading again. If it still fails to verify, try downloading from another computer or another network.

Once you've verified the ISO image, you're ready to proceed to our Installation guide:
https://docs.securityonion.net/en/2.4/installation.html
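The published hash values above can also be checked locally. A minimal Python sketch, assuming the ISO was saved under its original file name in the current directory: it streams the file through SHA-256 in 1 MiB chunks and compares the result, case-insensitively, with the published SHA256 value. Because the hashes are published next to the download link, this mainly catches corrupted downloads; it does not replace the `gpg --verify` signature check. `sha256_of` and `matches_published` are illustrative names, not part of any Security Onion tooling.

```python
import hashlib
from pathlib import Path

# Published SHA256 for the 2.4.190-20251024 ISO, copied from the text above.
EXPECTED_SHA256 = "4FD9F62EDA672408828B3C0C446FE5EA9FF3C4EE8488A7AB1101544A3C487872"


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file in chunks so a multi-gigabyte ISO is never fully in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published(path: Path, expected: str = EXPECTED_SHA256) -> bool:
    # hexdigest() is lowercase while the published value is uppercase.
    return sha256_of(path) == expected.strip().lower()


if __name__ == "__main__":
    iso = Path("securityonion-2.4.190-20251024.iso")  # assumed download location
    if iso.exists():
        print("OK" if matches_published(iso) else "MISMATCH")
```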

LICENSE (53 changed lines)
@@ -1,53 +0,0 @@
Elastic License 2.0 (ELv2)

Acceptance

By using the software, you agree to all of the terms and conditions below.

Copyright License

The licensor grants you a non-exclusive, royalty-free, worldwide, non-sublicensable, non-transferable license to use, copy, distribute, make available, and prepare derivative works of the software, in each case subject to the limitations and conditions below.

Limitations

You may not provide the software to third parties as a hosted or managed service, where the service provides users with access to any substantial set of the features or functionality of the software.

You may not move, change, disable, or circumvent the license key functionality in the software, and you may not remove or obscure any functionality in the software that is protected by the license key.

You may not alter, remove, or obscure any licensing, copyright, or other notices of the licensor in the software. Any use of the licensor’s trademarks is subject to applicable law.

Patents

The licensor grants you a license, under any patent claims the licensor can license, or becomes able to license, to make, have made, use, sell, offer for sale, import and have imported the software, in each case subject to the limitations and conditions in this license. This license does not cover any patent claims that you cause to be infringed by modifications or additions to the software. If you or your company make any written claim that the software infringes or contributes to infringement of any patent, your patent license for the software granted under these terms ends immediately. If your company makes such a claim, your patent license ends immediately for work on behalf of your company.

Notices

You must ensure that anyone who gets a copy of any part of the software from you also gets a copy of these terms.

If you modify the software, you must include in any modified copies of the software prominent notices stating that you have modified the software.

No Other Rights

These terms do not imply any licenses other than those expressly granted in these terms.

Termination

If you use the software in violation of these terms, such use is not licensed, and your licenses will automatically terminate. If the licensor provides you with a notice of your violation, and you cease all violation of this license no later than 30 days after you receive that notice, your licenses will be reinstated retroactively. However, if you violate these terms after such reinstatement, any additional violation of these terms will cause your licenses to terminate automatically and permanently.

No Liability

As far as the law allows, the software comes as is, without any warranty or condition, and the licensor will not be liable to you for any damages arising out of these terms or the use or nature of the software, under any kind of legal claim.

Definitions

The licensor is the entity offering these terms, and the software is the software the licensor makes available under these terms, including any portion of it.

you refers to the individual or entity agreeing to these terms.

your company is any legal entity, sole proprietorship, or other kind of organization that you work for, plus all organizations that have control over, are under the control of, or are under common control with that organization. control means ownership of substantially all the assets of an entity, or the power to direct its management and policies by vote, contract, or otherwise. Control can be direct or indirect.

your licenses are all the licenses granted to you for the software under these terms.

use means anything you do with the software requiring one of your licenses.

trademark means trademarks, service marks, and similar rights.

35

README.md

@@ -1,50 +1,41 @@

|

||||

## Security Onion 2.4

|

||||

## Security Onion 2.3.170

|

||||

|

||||

Security Onion 2.4 is here!

|

||||

Security Onion 2.3.170 is here!

|

||||

|

||||

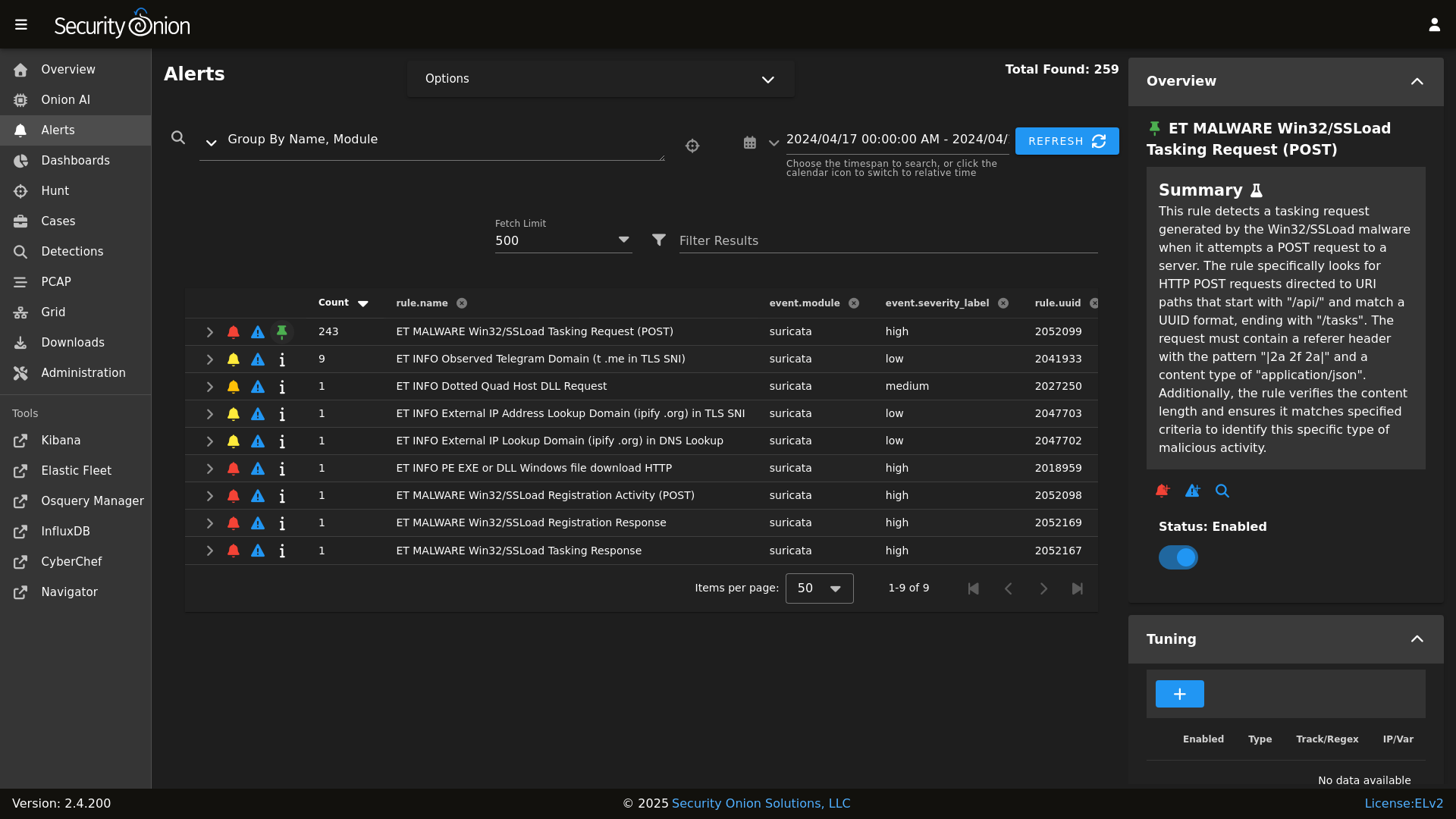

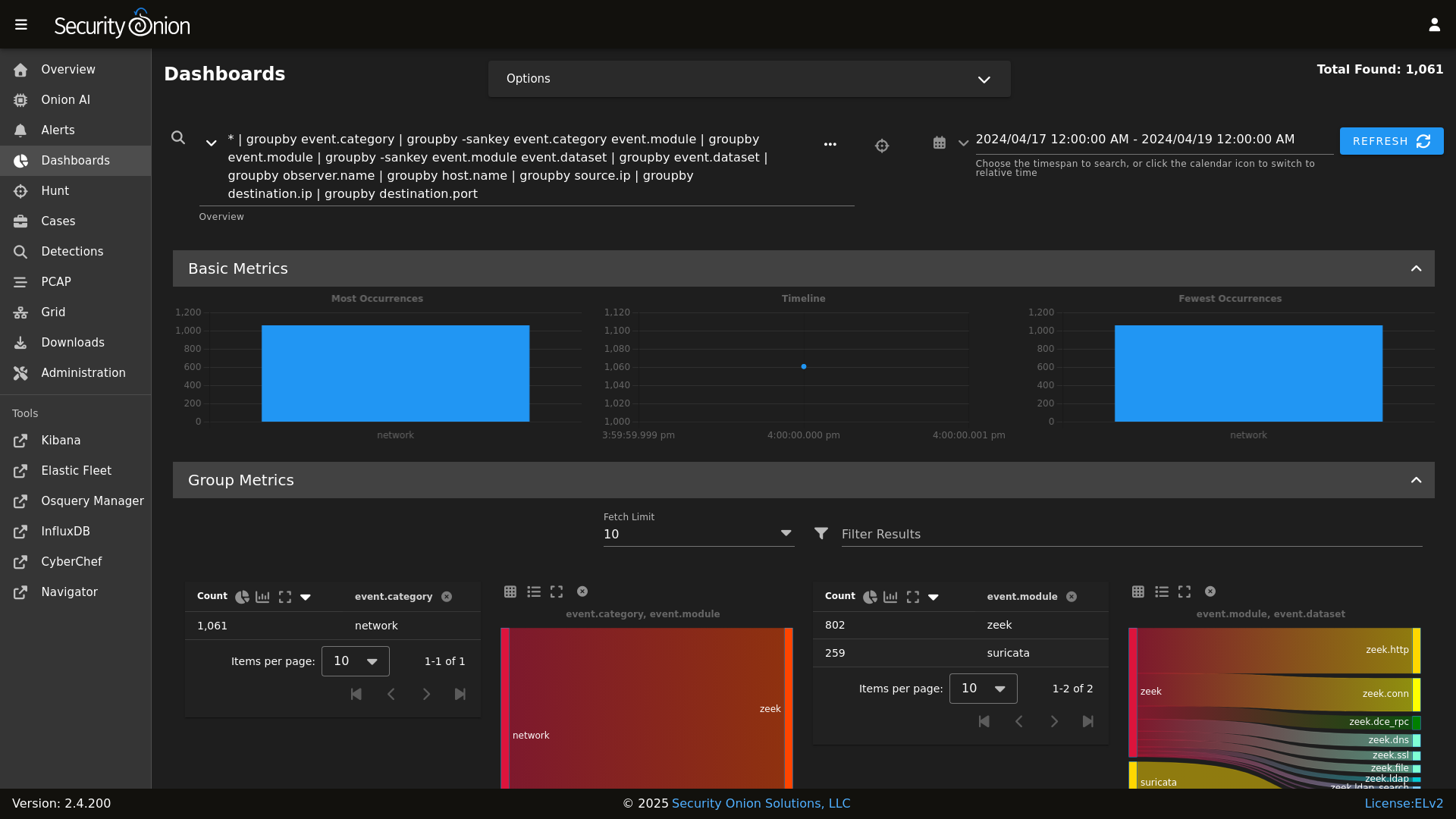

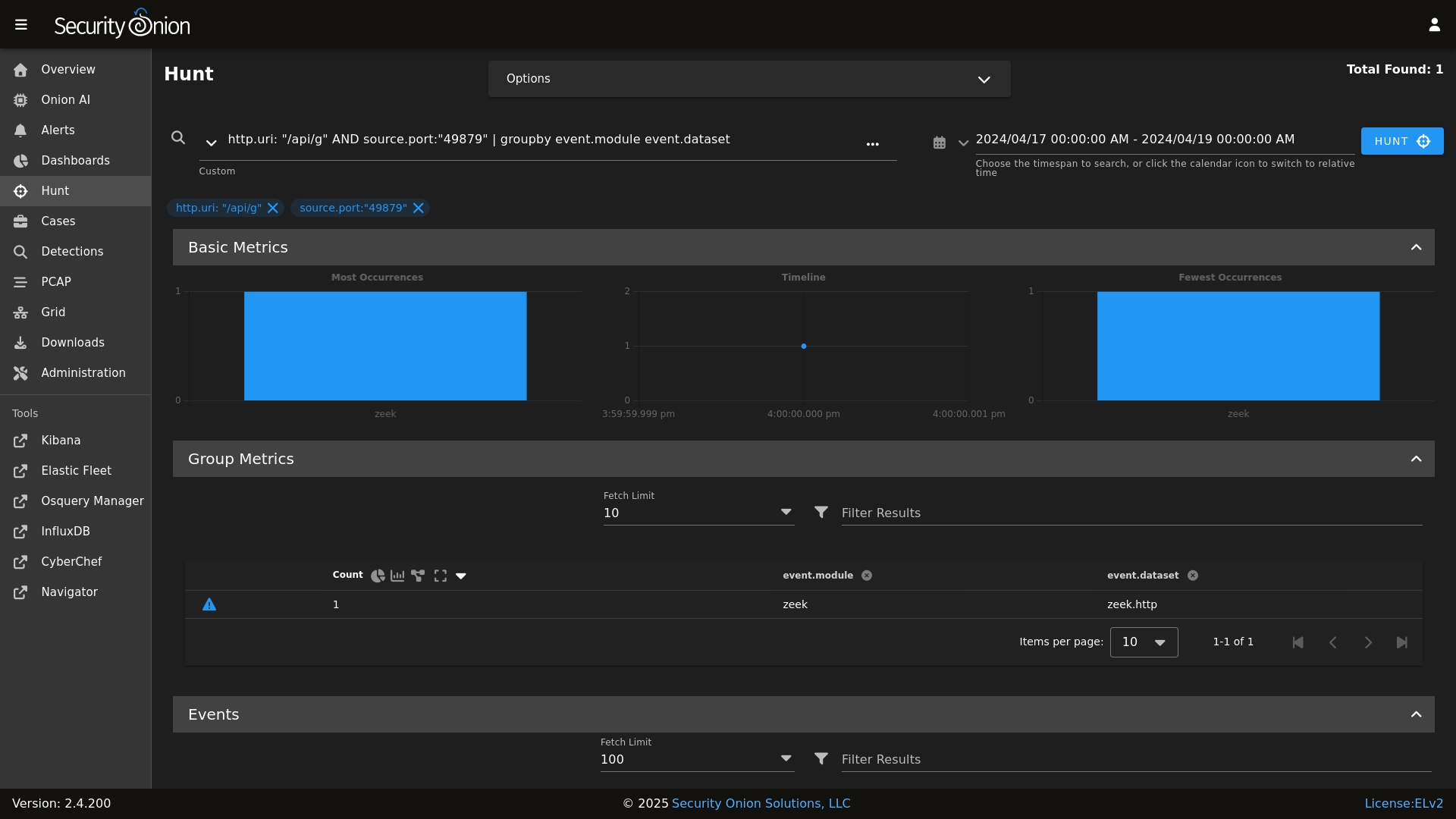

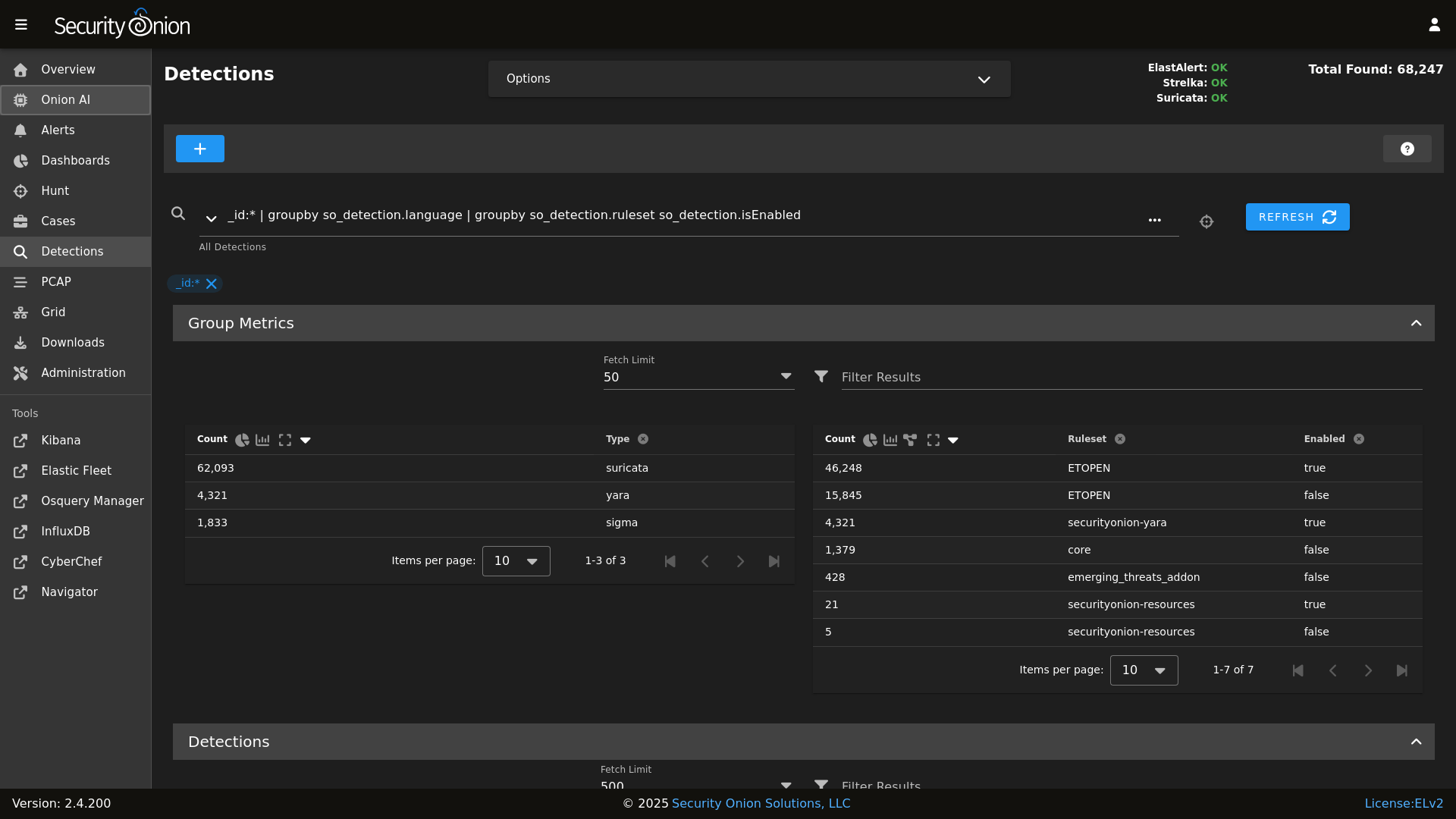

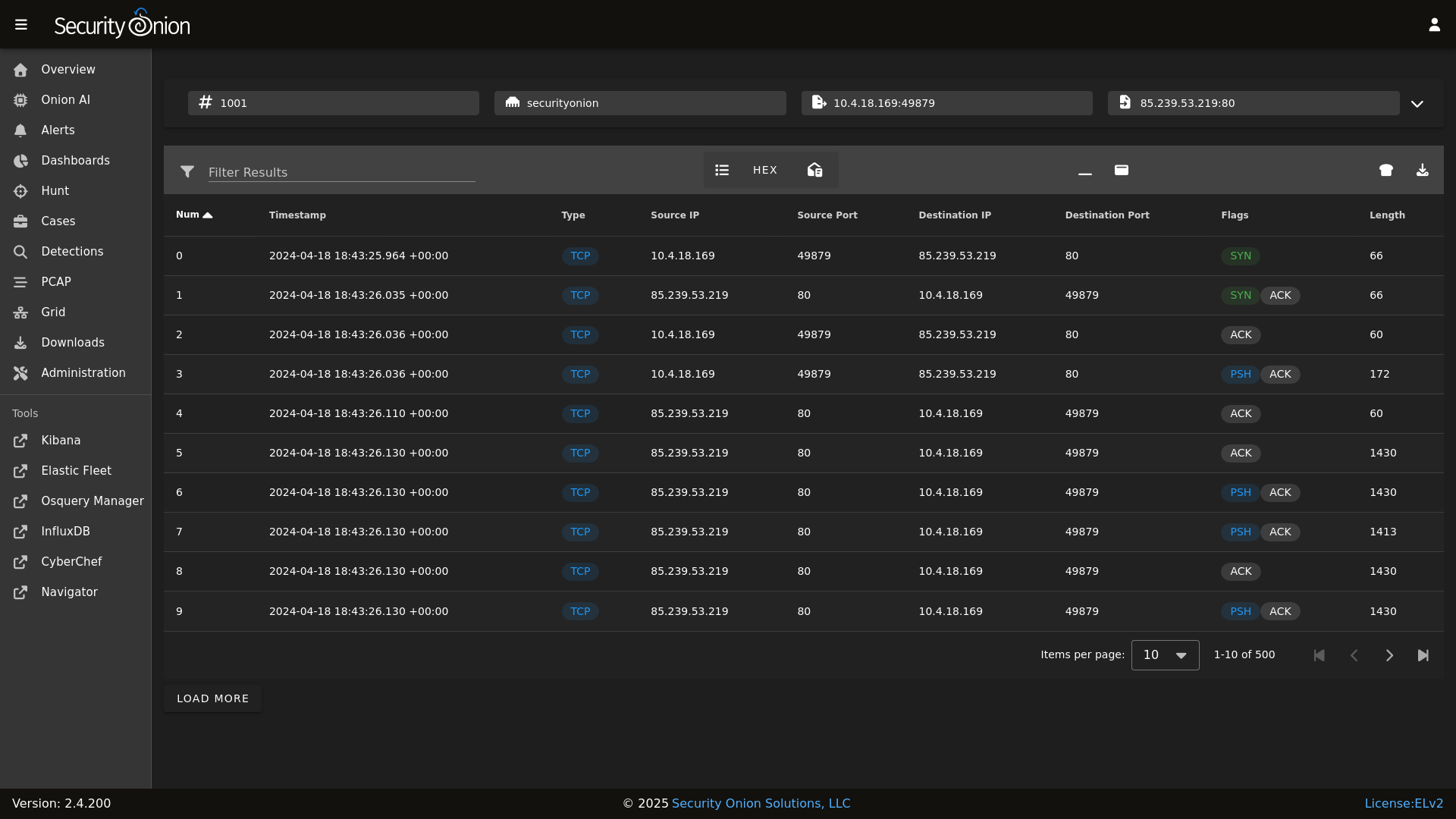

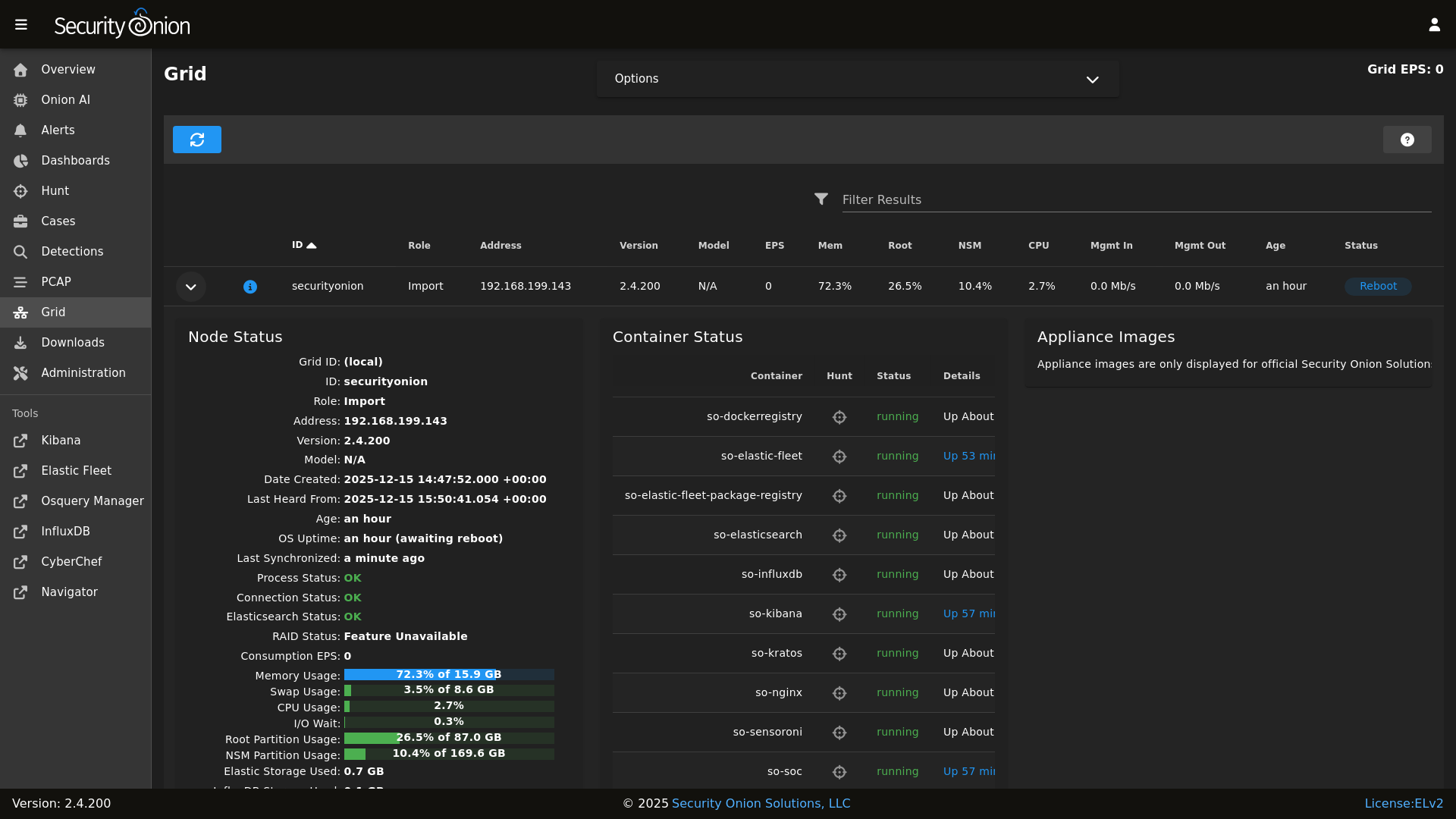

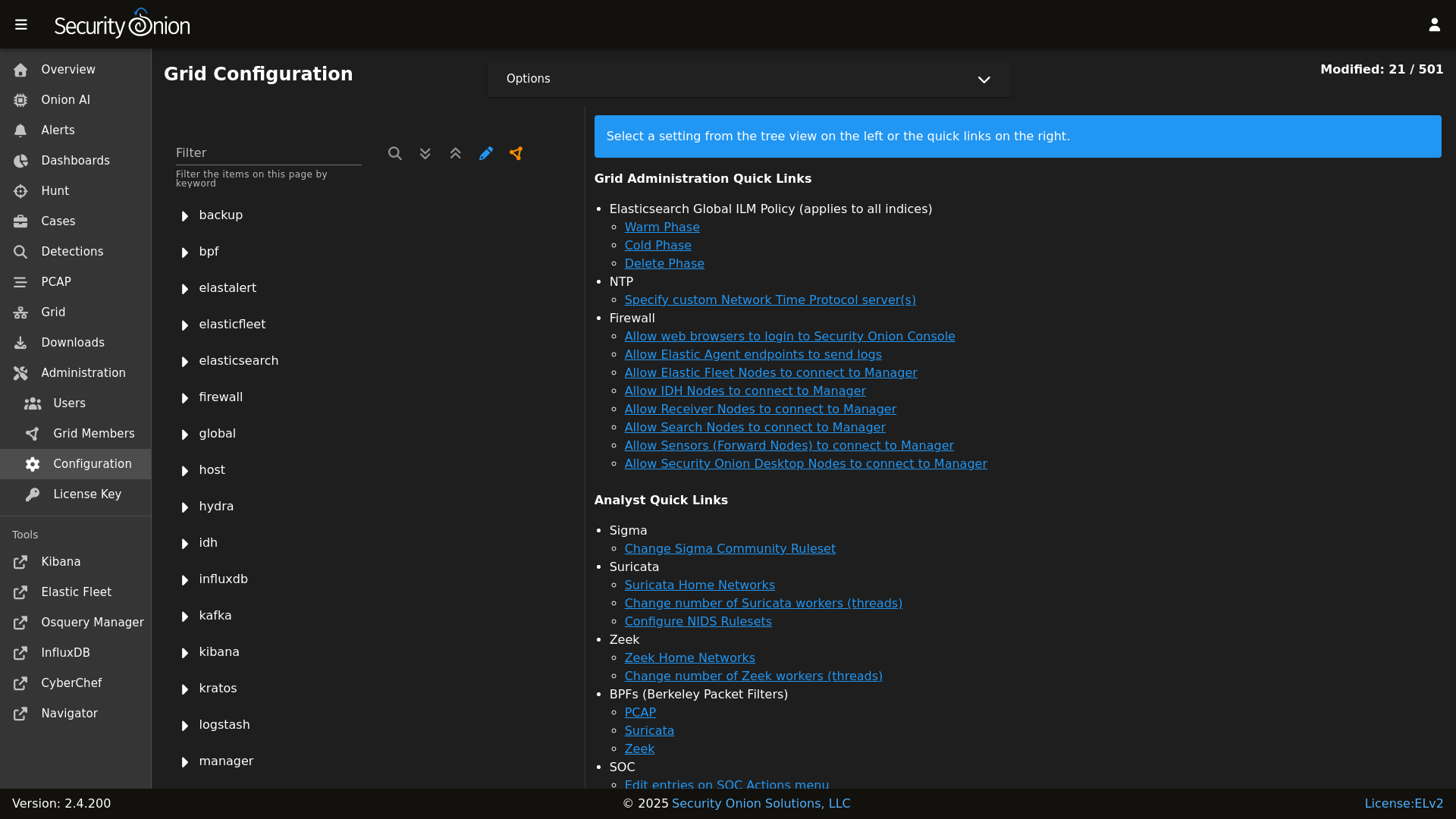

## Screenshots

|

||||

|

||||

Alerts

|

||||

|

||||

|

||||

|

||||

Dashboards

|

||||

|

||||

|

||||

|

||||

Hunt

|

||||

|

||||

|

||||

|

||||

Detections

|

||||

|

||||

|

||||

PCAP

|

||||

|

||||

|

||||

Grid

|

||||

|

||||

|

||||

Config

|

||||

|

||||

Cases

|

||||

|

||||

|

||||

### Release Notes

|

||||

|

||||

https://docs.securityonion.net/en/2.4/release-notes.html

|

||||

https://docs.securityonion.net/en/2.3/release-notes.html

|

||||

|

||||

### Requirements

|

||||

|

||||

https://docs.securityonion.net/en/2.4/hardware.html

|

||||

https://docs.securityonion.net/en/2.3/hardware.html

|

||||

|

||||

### Download

|

||||

|

||||

https://docs.securityonion.net/en/2.4/download.html

|

||||

https://docs.securityonion.net/en/2.3/download.html

|

||||

|

||||

### Installation

|

||||

|

||||

https://docs.securityonion.net/en/2.4/installation.html

|

||||

https://docs.securityonion.net/en/2.3/installation.html

|

||||

|

||||

### FAQ

|

||||

|

||||

https://docs.securityonion.net/en/2.4/faq.html

|

||||

https://docs.securityonion.net/en/2.3/faq.html

|

||||

|

||||

### Feedback

|

||||

|

||||

https://docs.securityonion.net/en/2.4/community-support.html

|

||||

https://docs.securityonion.net/en/2.3/community-support.html

|

||||

|

||||

@@ -4,12 +4,9 @@

| Version | Supported |
| ------- | ------------------ |
| 2.4.x | :white_check_mark: |
| 2.3.x | :x: |
| 2.x.x | :white_check_mark: |
| 16.04.x | :x: |

Security Onion 2.3 has reached End Of Life and is no longer supported.

Security Onion 16.04 has reached End Of Life and is no longer supported.

## Reporting a Vulnerability

VERIFY_ISO.md (52 changed lines, Normal file)
@@ -0,0 +1,52 @@
### 2.3.170-20220922 ISO image built on 2022/09/22

### Download and Verify

2.3.170-20220922 ISO image:
https://download.securityonion.net/file/securityonion/securityonion-2.3.170-20220922.iso

MD5: B45E38F72500CF302AE7CB3A87B3DB4C
SHA1: 06EC41B4B7E55453389952BE91B20AA465E18F33
SHA256: 634A2E88250DC7583705360EB5AD966D282FAE77AFFAF81676CB6D66D7950A3E

Signature for ISO image:
https://github.com/Security-Onion-Solutions/securityonion/raw/master/sigs/securityonion-2.3.170-20220922.iso.sig

Signing key:
https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/master/KEYS

For example, here are the steps you can use on most Linux distributions to download and verify our Security Onion ISO image.

Download and import the signing key:
```
wget https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/master/KEYS -O - | gpg --import -
```

Download the signature file for the ISO:
```
wget https://github.com/Security-Onion-Solutions/securityonion/raw/master/sigs/securityonion-2.3.170-20220922.iso.sig
```

Download the ISO image:
```
wget https://download.securityonion.net/file/securityonion/securityonion-2.3.170-20220922.iso
```

Verify the downloaded ISO image using the signature file:
```
gpg --verify securityonion-2.3.170-20220922.iso.sig securityonion-2.3.170-20220922.iso
```

The output should show "Good signature" and the Primary key fingerprint should match what's shown below:
```
gpg: Signature made Thu 22 Sep 2022 11:48:42 AM EDT using RSA key ID FE507013
gpg: Good signature from "Security Onion Solutions, LLC <info@securityonionsolutions.com>"
gpg: WARNING: This key is not certified with a trusted signature!
gpg: There is no indication that the signature belongs to the owner.
Primary key fingerprint: C804 A93D 36BE 0C73 3EA1 9644 7C10 60B7 FE50 7013
```

Once you've verified the ISO image, you're ready to proceed to our Installation guide:
https://docs.securityonion.net/en/2.3/installation.html
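Comparing a 40-hex-digit fingerprint by eye is error-prone. The Python sketch below, assuming the `gpg --verify` output has already been captured as text (gpg prints these messages on standard error, so something like `subprocess.run([...], capture_output=True, text=True).stderr` would work), extracts the value after `Primary key fingerprint:`, ignores spacing and case, and also requires `Good signature` to appear. The helper names are hypothetical, and gpg's exact wording can vary between versions, so treat this as a convenience check rather than a substitute for reading the output.

```python
from typing import Optional

# Fingerprint published in VERIFY_ISO.md above, with gpg's grouping spaces.
EXPECTED_FPR = "C804 A93D 36BE 0C73 3EA1 9644 7C10 60B7 FE50 7013"
MARKER = "Primary key fingerprint:"


def normalize_fpr(text: str) -> str:
    """Remove all whitespace and uppercase, so spacing differences don't matter."""
    return "".join(text.split()).upper()


def fingerprint_from_gpg_output(output: str) -> Optional[str]:
    """Return the normalized fingerprint following MARKER, or None if it is absent."""
    for line in output.splitlines():
        if MARKER in line:
            return normalize_fpr(line.split(MARKER, 1)[1])
    return None


def signature_looks_valid(output: str) -> bool:
    """True only if gpg reported a good signature made by the expected key."""
    found = fingerprint_from_gpg_output(output)
    return "Good signature" in output and found == normalize_fpr(EXPECTED_FPR)
```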

Image files (before): 21 KiB, 22 KiB, 12 KiB

@@ -1,8 +1,8 @@
{% import_yaml 'firewall/ports/ports.yaml' as default_portgroups %}
{% set default_portgroups = default_portgroups.firewall.ports %}
{% import_yaml 'firewall/ports/ports.local.yaml' as local_portgroups %}
{% if local_portgroups.firewall.ports %}
{% set local_portgroups = local_portgroups.firewall.ports %}
{% import_yaml 'firewall/portgroups.yaml' as default_portgroups %}
{% set default_portgroups = default_portgroups.firewall.aliases.ports %}
{% import_yaml 'firewall/portgroups.local.yaml' as local_portgroups %}
{% if local_portgroups.firewall.aliases.ports %}
{% set local_portgroups = local_portgroups.firewall.aliases.ports %}
{% else %}
{% set local_portgroups = {} %}
{% endif %}
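The template above uses the local port groups only when the local file actually defines some, and otherwise falls back to an empty dictionary. A Python sketch of that fallback, assuming the YAML has already been parsed into plain dictionaries and that the `firewall`, `aliases`, and `ports` keys in the new `portgroups.local.yaml` are nested as their names suggest: an empty `ports:` key parses to `None`, so the result is `{}`. `resolve_local_portgroups` is a hypothetical name; it also treats missing or empty intermediate keys as "no local ports", and whether Salt's Jinja rendering behaves the same way in that case is not shown in this diff.

```python
from typing import Any, Dict, Optional


def resolve_local_portgroups(local_yaml: Optional[Dict[str, Any]]) -> Dict[str, Any]:
    """Return firewall.aliases.ports when it is non-empty, otherwise {}.

    Mirrors the template's
    {% if local_portgroups.firewall.aliases.ports %} ... {% else %} {} branch.
    """
    firewall = (local_yaml or {}).get("firewall") or {}
    aliases = firewall.get("aliases") or {}
    ports = aliases.get("ports")
    # An empty YAML key (`ports:`) parses to None, which is falsy just like {}.
    return ports if ports else {}


# Assumed parse of the new portgroups.local.yaml: all keys present, no values.
EMPTY_LOCAL = {"firewall": {"aliases": {"ports": None}}}
```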

@@ -12,6 +12,7 @@ role:
eval:
fleet:
heavynode:
helixsensor:
idh:
import:
manager:

82

files/firewall/hostgroups.local.yaml

Normal file

@@ -0,0 +1,82 @@

firewall:

hostgroups:

analyst:

ips:

delete:

insert:

beats_endpoint:

ips:

delete:

insert:

beats_endpoint_ssl:

ips:

delete:

insert:

elasticsearch_rest:

ips:

delete:

insert:

endgame:

ips:

delete:

insert:

fleet:

ips:

delete:

insert:

heavy_node:

ips:

delete:

insert:

idh:

ips:

delete:

insert:

manager:

ips:

delete:

insert:

minion:

ips:

delete:

insert:

node:

ips:

delete:

insert:

osquery_endpoint:

ips:

delete:

insert:

receiver:

ips:

delete:

insert:

search_node:

ips:

delete:

insert:

sensor:

ips:

delete:

insert:

strelka_frontend:

ips:

delete:

insert:

syslog:

ips:

delete:

insert:

wazuh_agent:

ips:

delete:

insert:

wazuh_api:

ips:

delete:

insert:

wazuh_authd:

ips:

delete:

insert:
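Each hostgroup in this new local file carries an `ips` block with empty `delete` and `insert` lists. The diff does not show how Security Onion consumes them, so the Python sketch below only assumes the names mean what they suggest: addresses under `delete` are dropped from a hostgroup's default list and addresses under `insert` are appended. The function `apply_hostgroup_override` and the sample addresses are hypothetical.

```python
from typing import Iterable, Optional


def apply_hostgroup_override(
    default_ips: Iterable[str],
    delete: Optional[Iterable[str]] = None,
    insert: Optional[Iterable[str]] = None,
) -> list[str]:
    """Hypothetical merge: drop `delete` entries, then append `insert` entries.

    An empty YAML key such as `delete:` loads as None, so both parameters
    default to None and are treated as empty lists.
    """
    removed = set(delete or [])
    result = [ip for ip in default_ips if ip not in removed]
    for ip in insert or []:
        if ip not in result:  # avoid duplicate entries
            result.append(ip)
    return result


# Example: an analyst hostgroup with one address swapped for a subnet.
print(apply_hostgroup_override(
    ["10.0.0.5", "10.0.0.6"],
    delete=["10.0.0.6"],
    insert=["192.168.1.0/24"],
))
```

Treating `None` as an empty list matters here because every list in the file above is left blank.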

3

files/firewall/portgroups.local.yaml

Normal file

@@ -0,0 +1,3 @@

firewall:

aliases:

ports:

@@ -1,2 +0,0 @@

firewall:

ports:

@@ -41,8 +41,7 @@ file_roots:

base:

- /opt/so/saltstack/local/salt

- /opt/so/saltstack/default/salt

- /nsm/elastic-fleet/artifacts

- /opt/so/rules/nids

# The master_roots setting configures a master-only copy of the file_roots dictionary,

# used by the state compiler.

@@ -65,4 +64,10 @@ peer:

.*:

- x509.sign_remote_certificate

reactor:

- 'so/fleet':

- salt://reactor/fleet.sls

- 'salt/beacon/*/watch_sqlite_db//opt/so/conf/kratos/db/sqlite.db':

- salt://reactor/kratos.sls
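The new `reactor` block maps event tags to reactor SLS files. Salt compares these keys against incoming event tags as glob patterns, so the `*` in the beacon tag stands in for the minion ID. A minimal Python approximation of that lookup using `fnmatch`, assuming plain glob semantics; `reactions_for` and the minion ID in the example are illustrative only.

```python
import fnmatch

# Reactor map copied from the master config above: tag pattern -> SLS files.
REACTOR = {
    "so/fleet": ["salt://reactor/fleet.sls"],
    "salt/beacon/*/watch_sqlite_db//opt/so/conf/kratos/db/sqlite.db": [
        "salt://reactor/kratos.sls"
    ],
}


def reactions_for(tag: str) -> list[str]:
    """Return the SLS files whose tag pattern glob-matches `tag`."""
    matched = []
    for pattern, sls_files in REACTOR.items():
        if fnmatch.fnmatch(tag, pattern):
            matched.extend(sls_files)
    return matched


# The beacon tag embeds the minion ID; "manager01_standalone" is made up.
print(reactions_for(
    "salt/beacon/manager01_standalone/watch_sqlite_db//opt/so/conf/kratos/db/sqlite.db"
))
```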

@@ -45,10 +45,12 @@ echo " rootfs: $ROOTFS" >> $local_salt_dir/pillar/data/$TYPE.sls

echo " nsmfs: $NSM" >> $local_salt_dir/pillar/data/$TYPE.sls

if [ $TYPE == 'sensorstab' ]; then

echo " monint: bond0" >> $local_salt_dir/pillar/data/$TYPE.sls

salt-call state.apply grafana queue=True

fi

if [ $TYPE == 'evaltab' ] || [ $TYPE == 'standalonetab' ]; then

echo " monint: bond0" >> $local_salt_dir/pillar/data/$TYPE.sls

if [ ! $10 ]; then

salt-call state.apply grafana queue=True

salt-call state.apply utility queue=True

fi

fi

@@ -1,34 +0,0 @@

{% set node_types = {} %}

{% for minionid, ip in salt.saltutil.runner(

'mine.get',

tgt='elasticsearch:enabled:true',

fun='network.ip_addrs',

tgt_type='pillar') | dictsort()

%}

# only add a node to the pillar if it returned an ip from the mine

{% if ip | length > 0%}

{% set hostname = minionid.split('_') | first %}

{% set node_type = minionid.split('_') | last %}

{% if node_type not in node_types.keys() %}

{% do node_types.update({node_type: {hostname: ip[0]}}) %}

{% else %}

{% if hostname not in node_types[node_type] %}

{% do node_types[node_type].update({hostname: ip[0]}) %}

{% else %}

{% do node_types[node_type][hostname].update(ip[0]) %}

{% endif %}

{% endif %}

{% endif %}

{% endfor %}

elasticsearch:

nodes:

{% for node_type, values in node_types.items() %}

{{node_type}}:

{% for hostname, ip in values.items() %}

{{hostname}}:

ip: {{ip}}

{% endfor %}

{% endfor %}
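The removed `elasticsearch.nodes` template groups mine results by the role suffix of each `<hostname>_<role>` minion ID and keeps the first IP address per host. The same logic in plain Python, as a reading aid rather than a replacement: the sample mine data is made up, and the YAML indentation in `render_pillar` is assumed because the diff view lost it. The template's innermost `else` branch calls `.update()` on an IP string, which a string does not support, so the sketch simply keeps the first address it sees.

```python
def group_by_node_type(mine_results: dict[str, list[str]]) -> dict[str, dict[str, str]]:
    """Group minion IPs by the role suffix of '<hostname>_<role>' minion IDs."""
    node_types: dict[str, dict[str, str]] = {}
    for minion_id, ips in sorted(mine_results.items()):  # mirrors `| dictsort()`
        if not ips:  # only add a node if it returned an IP from the mine
            continue
        parts = minion_id.split("_")
        hostname, node_type = parts[0], parts[-1]  # `| first` and `| last`
        # Keep the first IP seen for each hostname within a node type.
        node_types.setdefault(node_type, {}).setdefault(hostname, ips[0])
    return node_types


def render_pillar(top_key: str, node_types: dict[str, dict[str, str]]) -> str:
    """Render the `<top_key>: nodes:` structure (indentation assumed)."""
    lines = [f"{top_key}:", "  nodes:"]
    for node_type, hosts in node_types.items():
        lines.append(f"    {node_type}:")
        for hostname, ip in hosts.items():
            lines.append(f"      {hostname}:")
            lines.append(f"        ip: {ip}")
    return "\n".join(lines)


mine = {
    "es01_searchnode": ["10.0.0.11"],
    "mgr01_manager": ["10.0.0.10", "172.17.0.1"],
    "down01_searchnode": [],
}
print(render_pillar("elasticsearch", group_by_node_type(mine)))
```

The hypervisor, redis, and logstash node templates in this diff follow the same pattern with different mine targets.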

@@ -1,34 +0,0 @@

{% set node_types = {} %}

{% for minionid, ip in salt.saltutil.runner(

'mine.get',

tgt='G@role:so-hypervisor or G@role:so-managerhype',

fun='network.ip_addrs',

tgt_type='compound') | dictsort()

%}

# only add a node to the pillar if it returned an ip from the mine

{% if ip | length > 0%}

{% set hostname = minionid.split('_') | first %}

{% set node_type = minionid.split('_') | last %}

{% if node_type not in node_types.keys() %}

{% do node_types.update({node_type: {hostname: ip[0]}}) %}

{% else %}

{% if hostname not in node_types[node_type] %}

{% do node_types[node_type].update({hostname: ip[0]}) %}

{% else %}

{% do node_types[node_type][hostname].update(ip[0]) %}

{% endif %}

{% endif %}

{% endif %}

{% endfor %}

hypervisor:

nodes:

{% for node_type, values in node_types.items() %}

{{node_type}}:

{% for hostname, ip in values.items() %}

{{hostname}}:

ip: {{ip}}

{% endfor %}

{% endfor %}

@@ -1,2 +0,0 @@

kafka:

nodes:

13

pillar/logrotate/init.sls

Normal file

@@ -0,0 +1,13 @@

logrotate:

conf: |

daily

rotate 14

missingok

copytruncate

compress

create

extension .log

dateext

dateyesterday

group_conf: |

su root socore

42

pillar/logstash/helix.sls

Normal file

@@ -0,0 +1,42 @@

logstash:

pipelines:

helix:

config:

- so/0010_input_hhbeats.conf

- so/1033_preprocess_snort.conf

- so/1100_preprocess_bro_conn.conf

- so/1101_preprocess_bro_dhcp.conf

- so/1102_preprocess_bro_dns.conf

- so/1103_preprocess_bro_dpd.conf

- so/1104_preprocess_bro_files.conf

- so/1105_preprocess_bro_ftp.conf

- so/1106_preprocess_bro_http.conf

- so/1107_preprocess_bro_irc.conf

- so/1108_preprocess_bro_kerberos.conf

- so/1109_preprocess_bro_notice.conf

- so/1110_preprocess_bro_rdp.conf

- so/1111_preprocess_bro_signatures.conf

- so/1112_preprocess_bro_smtp.conf

- so/1113_preprocess_bro_snmp.conf

- so/1114_preprocess_bro_software.conf

- so/1115_preprocess_bro_ssh.conf

- so/1116_preprocess_bro_ssl.conf

- so/1117_preprocess_bro_syslog.conf

- so/1118_preprocess_bro_tunnel.conf

- so/1119_preprocess_bro_weird.conf

- so/1121_preprocess_bro_mysql.conf

- so/1122_preprocess_bro_socks.conf

- so/1123_preprocess_bro_x509.conf

- so/1124_preprocess_bro_intel.conf

- so/1125_preprocess_bro_modbus.conf

- so/1126_preprocess_bro_sip.conf

- so/1127_preprocess_bro_radius.conf

- so/1128_preprocess_bro_pe.conf

- so/1129_preprocess_bro_rfb.conf

- so/1130_preprocess_bro_dnp3.conf

- so/1131_preprocess_bro_smb_files.conf

- so/1132_preprocess_bro_smb_mapping.conf

- so/1133_preprocess_bro_ntlm.conf

- so/1134_preprocess_bro_dce_rpc.conf

- so/8001_postprocess_common_ip_augmentation.conf

- so/9997_output_helix.conf.jinja

@@ -3,8 +3,6 @@ logstash:

port_bindings:

- 0.0.0.0:3765:3765

- 0.0.0.0:5044:5044

- 0.0.0.0:5055:5055

- 0.0.0.0:5056:5056

- 0.0.0.0:5644:5644

- 0.0.0.0:6050:6050

- 0.0.0.0:6051:6051

9

pillar/logstash/manager.sls

Normal file

@@ -0,0 +1,9 @@

logstash:

pipelines:

manager:

config:

- so/0009_input_beats.conf

- so/0010_input_hhbeats.conf

- so/0011_input_endgame.conf

- so/9999_output_redis.conf.jinja

@@ -1,15 +1,14 @@

{% set node_types = {} %}

{% set cached_grains = salt.saltutil.runner('cache.grains', tgt='*') %}

{% for minionid, ip in salt.saltutil.runner(

'mine.get',

tgt='logstash:enabled:true',

tgt='G@role:so-manager or G@role:so-managersearch or G@role:so-standalone or G@role:so-node or G@role:so-heavynode or G@role:so-receiver or G@role:so-helix',

fun='network.ip_addrs',

tgt_type='pillar') | dictsort()

tgt_type='compound') | dictsort()

%}

# only add a node to the pillar if it returned an ip from the mine

{% if ip | length > 0%}

{% set hostname = minionid.split('_') | first %}

{% set node_type = minionid.split('_') | last %}

{% set hostname = cached_grains[minionid]['host'] %}

{% set node_type = minionid.split('_')[1] %}

{% if node_type not in node_types.keys() %}

{% do node_types.update({node_type: {hostname: ip[0]}}) %}

{% else %}

@@ -19,10 +18,8 @@

{% do node_types[node_type][hostname].update(ip[0]) %}

{% endif %}

{% endif %}

{% endif %}

{% endfor %}

logstash:

nodes:

{% for node_type, values in node_types.items() %}

9

pillar/logstash/receiver.sls

Normal file

@@ -0,0 +1,9 @@

logstash:

pipelines:

receiver:

config:

- so/0009_input_beats.conf

- so/0010_input_hhbeats.conf

- so/0011_input_endgame.conf

- so/9999_output_redis.conf.jinja

18

pillar/logstash/search.sls

Normal file

@@ -0,0 +1,18 @@

logstash:

pipelines:

search:

config:

- so/0900_input_redis.conf.jinja

- so/9000_output_zeek.conf.jinja

- so/9002_output_import.conf.jinja

- so/9034_output_syslog.conf.jinja

- so/9050_output_filebeatmodules.conf.jinja

- so/9100_output_osquery.conf.jinja

- so/9400_output_suricata.conf.jinja

- so/9500_output_beats.conf.jinja

- so/9600_output_ossec.conf.jinja

- so/9700_output_strelka.conf.jinja

- so/9800_output_logscan.conf.jinja

- so/9801_output_rita.conf.jinja

- so/9802_output_kratos.conf.jinja

- so/9900_output_endgame.conf.jinja

@@ -1,12 +1,11 @@

{% set node_types = {} %}

{% set manage_alived = salt.saltutil.runner('manage.alived', show_ip=True) %}

{% set manager = grains.master %}

{% set manager_type = manager.split('_')|last %}

{% for minionid, ip in salt.saltutil.runner('mine.get', tgt='*', fun='network.ip_addrs', tgt_type='glob') | dictsort() %}

{% set hostname = minionid.split('_')[0] %}

{% set node_type = minionid.split('_')[1] %}

{% set is_alive = False %}

# only add a node to the pillar if it returned an ip from the mine

{% if ip | length > 0%}

{% if minionid in manage_alived.keys() %}

{% if ip[0] == manage_alived[minionid] %}

{% set is_alive = True %}

@@ -21,19 +20,14 @@

{% do node_types[node_type][hostname].update({'ip':ip[0], 'alive':is_alive}) %}

{% endif %}

{% endif %}

{% endif %}

{% endfor %}

{% if node_types %}

node_data:

{% for node_type, host_values in node_types.items() %}

{{node_type}}:

{% for hostname, details in host_values.items() %}

{{hostname}}:

ip: {{details.ip}}

alive: {{ details.alive }}

role: {{node_type}}

{% endfor %}

{% endfor %}

{% else %}

node_data: False

{% endif %}
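The reworked `node_data` template marks a node as alive only when the first IP the mine reports for it matches the address returned by the `manage.alived` runner (called with `show_ip=True`). A Python sketch of that comparison, assuming `manage.alived` yields a mapping of minion ID to IP and that minion IDs follow `<hostname>_<role>`. The template lines hidden between the two hunks are not reproduced, and `build_node_data` is a hypothetical name.

```python
from typing import Union


def build_node_data(
    mine_results: dict[str, list[str]], alived: dict[str, str]
) -> Union[dict[str, dict[str, dict]], bool]:
    """Approximate the node_data template: group by role, flag live nodes.

    `alived` is assumed to map minion ID -> IP, as returned by the
    manage.alived runner with show_ip=True.
    """
    node_data: dict[str, dict[str, dict]] = {}
    for minion_id, ips in sorted(mine_results.items()):
        parts = minion_id.split("_")
        # The template indexes [0] and [1] directly; this sketch skips IDs
        # without a role suffix instead of failing.
        if len(parts) < 2 or not ips:
            continue
        hostname, node_type = parts[0], parts[1]
        node_data.setdefault(node_type, {})[hostname] = {
            "ip": ips[0],
            # Alive only if the master sees the same address the mine reports.
            "alive": alived.get(minion_id) == ips[0],
            "role": node_type,
        }
    # The template renders `node_data: False` when no nodes were found.
    return node_data or False


print(build_node_data(
    {"sensor1_sensor": ["10.0.0.20"], "sensor2_sensor": ["10.0.0.21"]},
    {"sensor1_sensor": "10.0.0.20", "sensor2_sensor": "192.168.50.2"},
))
```

Comparing addresses, rather than only checking membership in the `manage.alived` result, means a minion reachable through an unexpected interface is reported as not alive.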

@@ -1,34 +0,0 @@

{% set node_types = {} %}

{% for minionid, ip in salt.saltutil.runner(

'mine.get',

tgt='redis:enabled:true',

fun='network.ip_addrs',

tgt_type='pillar') | dictsort()

%}

# only add a node to the pillar if it returned an ip from the mine

{% if ip | length > 0%}

{% set hostname = minionid.split('_') | first %}

{% set node_type = minionid.split('_') | last %}

{% if node_type not in node_types.keys() %}

{% do node_types.update({node_type: {hostname: ip[0]}}) %}

{% else %}

{% if hostname not in node_types[node_type] %}

{% do node_types[node_type].update({hostname: ip[0]}) %}

{% else %}

{% do node_types[node_type][hostname].update(ip[0]) %}

{% endif %}

{% endif %}

{% endif %}

{% endfor %}

redis:

nodes:

{% for node_type, values in node_types.items() %}

{{node_type}}:

{% for hostname, ip in values.items() %}

{{hostname}}:

ip: {{ip}}

{% endfor %}

{% endfor %}

@@ -1,14 +0,0 @@

# Copyright Jason Ertel (github.com/jertel).

# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one

# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at

# https://securityonion.net/license; you may not use this file except in compliance with

# the Elastic License 2.0.

# Note: Per the Elastic License 2.0, the second limitation states:

#

# "You may not move, change, disable, or circumvent the license key functionality

# in the software, and you may not remove or obscure any functionality in the

# software that is protected by the license key."

# This file is generated by Security Onion and contains a list of license-enabled features.

features: []

44

pillar/thresholding/pillar.example

Normal file

@@ -0,0 +1,44 @@

thresholding:

sids:

8675309:

- threshold:

gen_id: 1

type: threshold

track: by_src

count: 10

seconds: 10

- threshold:

gen_id: 1

type: limit

track: by_dst

count: 100

seconds: 30

- rate_filter:

gen_id: 1

track: by_rule

count: 50

seconds: 30

new_action: alert

timeout: 30

- suppress:

gen_id: 1

track: by_either

ip: 10.10.3.7

11223344:

- threshold:

gen_id: 1

type: limit

track: by_dst

count: 10

seconds: 10

- rate_filter:

gen_id: 1

track: by_src

count: 50

seconds: 20

new_action: pass

timeout: 60

- suppress:

gen_id: 1

track: by_src

ip: 10.10.3.0/24

20

pillar/thresholding/pillar.usage

Normal file

@@ -0,0 +1,20 @@

thresholding:

sids:

<signature id>:

- threshold:

gen_id: <generator id>

type: <threshold | limit | both>

track: <by_src | by_dst>

count: <count>

seconds: <seconds>

- rate_filter:

gen_id: <generator id>

track: <by_src | by_dst | by_rule | by_both>

count: <count>

seconds: <seconds>

new_action: <alert | pass>

timeout: <seconds>

- suppress:

gen_id: <generator id>

track: <by_src | by_dst | by_either>

ip: <ip | subnet>
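Each entry in the thresholding usage template corresponds to a directive in Suricata's `threshold.config` (`threshold`, `rate_filter`, `suppress`). A minimal Python sketch that renders a pillar of this shape into those lines. It is not taken from Security Onion's Salt states, and `render_thresholds` is a hypothetical helper.

```python
# Field order per directive, following Suricata's threshold.config syntax.
FIELDS = {
    "threshold": ["type", "track", "count", "seconds"],
    "rate_filter": ["track", "count", "seconds", "new_action", "timeout"],
    "suppress": ["track", "ip"],
}


def render_thresholds(pillar: dict) -> list[str]:
    """Turn a `thresholding:sids` pillar into threshold.config lines."""
    lines = []
    for sid, entries in pillar["thresholding"]["sids"].items():
        for entry in entries:
            # Each list item is a single-key mapping such as {"threshold": {...}}.
            ((kind, params),) = entry.items()
            parts = [f"{kind} gen_id {params['gen_id']}", f"sig_id {sid}"]
            # Optional keys (e.g. a suppress without track/ip) are skipped.
            parts += [f"{key} {params[key]}" for key in FIELDS[kind] if key in params]
            lines.append(", ".join(parts))
    return lines


# Two entries copied from pillar.example above.
example = {"thresholding": {"sids": {8675309: [
    {"threshold": {"gen_id": 1, "type": "threshold", "track": "by_src",
                   "count": 10, "seconds": 10}},
    {"suppress": {"gen_id": 1, "track": "by_either", "ip": "10.10.3.7"}},
]}}}
for line in render_thresholds(example):
    print(line)
```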

332

pillar/top.sls

@@ -1,39 +1,30 @@

base:

'*':

- global.soc_global

- global.adv_global

- docker.soc_docker

- docker.adv_docker

- influxdb.token

- logrotate.soc_logrotate

- logrotate.adv_logrotate

- ntp.soc_ntp

- ntp.adv_ntp

- patch.needs_restarting

- patch.soc_patch

- patch.adv_patch

- sensoroni.soc_sensoroni

- sensoroni.adv_sensoroni

- telegraf.soc_telegraf

- telegraf.adv_telegraf

- versionlock.soc_versionlock

- versionlock.adv_versionlock

- soc.license

- logrotate

'* and not *_desktop':

- firewall.soc_firewall

- firewall.adv_firewall

- nginx.soc_nginx

- nginx.adv_nginx

'* and not *_eval and not *_import':

- logstash.nodes

'salt-cloud:driver:libvirt':

- match: grain

- vm.soc_vm

- vm.adv_vm

'*_manager or *_managersearch or *_managerhype':

'*_eval or *_helixsensor or *_heavynode or *_sensor or *_standalone or *_import':

- match: compound

- node_data.ips

- zeek

'*_managersearch or *_heavynode':

- match: compound

- logstash

- logstash.manager

- logstash.search

- elasticsearch.index_templates

'*_manager':

- logstash

- logstash.manager

- elasticsearch.index_templates

'*_manager or *_managersearch':

- match: compound

- data.*

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/elasticsearch/auth.sls') %}

- elasticsearch.auth

{% endif %}
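Several `top.sls` targets above combine minion-ID globs with `and`, `or`, and `not`. A simplified Python sketch of how such a glob-only expression evaluates against a minion ID, assuming Security Onion's `<hostname>_<role>` ID convention. Salt's real compound matcher supports much more (grain `G@`, pillar `I@`, parentheses), so this is only an illustration, and the minion IDs are made up.

```python
import fnmatch


def _term_matches(term: str, minion_id: str) -> bool:
    """Evaluate one glob term, optionally prefixed with `not`."""
    negate = term.startswith("not ")
    pattern = term[len("not "):].strip() if negate else term
    return fnmatch.fnmatchcase(minion_id, pattern) != negate


def matches(target: str, minion_id: str) -> bool:
    """Glob-only compound target: `or` binds loosest, then `and`, then `not`."""
    for clause in target.split(" or "):
        if all(_term_matches(t.strip(), minion_id) for t in clause.split(" and ")):
            return True
    return False


targets = [
    "* and not *_desktop",
    "* and not *_eval and not *_import",
    "*_manager or *_managersearch or *_managerhype",
]
for minion in ["so01_managersearch", "ws01_desktop", "eval01_eval"]:
    print(minion, [t for t in targets if matches(t, minion)])
```

Because `*_manager` needs the ID to end in `_manager`, a `*_managersearch` minion only matches that target through its own explicit clause.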

@@ -41,63 +32,18 @@ base:

- kibana.secrets

{% endif %}

- secrets

- manager.soc_manager

- manager.adv_manager

- idstools.soc_idstools

- idstools.adv_idstools

- logstash.nodes

- logstash.soc_logstash

- logstash.adv_logstash

- soc.soc_soc

- soc.adv_soc

- kibana.soc_kibana

- kibana.adv_kibana

- kratos.soc_kratos

- kratos.adv_kratos

- hydra.soc_hydra

- hydra.adv_hydra

- redis.nodes

- redis.soc_redis

- redis.adv_redis

- influxdb.soc_influxdb

- influxdb.adv_influxdb

- elasticsearch.nodes

- elasticsearch.soc_elasticsearch

- elasticsearch.adv_elasticsearch

- elasticfleet.soc_elasticfleet

- elasticfleet.adv_elasticfleet

- elastalert.soc_elastalert

- elastalert.adv_elastalert

- backup.soc_backup

- backup.adv_backup

- global

- minions.{{ grains.id }}

- minions.adv_{{ grains.id }}

- kafka.nodes

- kafka.soc_kafka

- kafka.adv_kafka

- hypervisor.nodes

- hypervisor.soc_hypervisor

- hypervisor.adv_hypervisor

- stig.soc_stig

'*_sensor':

- zeeklogs

- healthcheck.sensor

- strelka.soc_strelka

- strelka.adv_strelka

- zeek.soc_zeek

- zeek.adv_zeek

- bpf.soc_bpf

- bpf.adv_bpf

- pcap.soc_pcap

- pcap.adv_pcap

- suricata.soc_suricata

- suricata.adv_suricata

- global

- minions.{{ grains.id }}

- minions.adv_{{ grains.id }}

- stig.soc_stig

'*_eval':

- node_data.ips

- data.*

- zeeklogs

- secrets

- healthcheck.eval

- elasticsearch.index_templates

@@ -107,48 +53,13 @@ base:

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/kibana/secrets.sls') %}

- kibana.secrets

{% endif %}

- kratos.soc_kratos

- kratos.adv_kratos

- elasticsearch.soc_elasticsearch

- elasticsearch.adv_elasticsearch

- elasticfleet.soc_elasticfleet

- elasticfleet.adv_elasticfleet

- elastalert.soc_elastalert

- elastalert.adv_elastalert

- manager.soc_manager

- manager.adv_manager

- idstools.soc_idstools

- idstools.adv_idstools

- soc.soc_soc

- soc.adv_soc

- kibana.soc_kibana

- kibana.adv_kibana

- strelka.soc_strelka

- strelka.adv_strelka

- hydra.soc_hydra

- hydra.adv_hydra

- redis.soc_redis

- redis.adv_redis

- influxdb.soc_influxdb

- influxdb.adv_influxdb

- backup.soc_backup

- backup.adv_backup

- zeek.soc_zeek

- zeek.adv_zeek

- bpf.soc_bpf

- bpf.adv_bpf

- pcap.soc_pcap

- pcap.adv_pcap

- suricata.soc_suricata

- suricata.adv_suricata

- global

- minions.{{ grains.id }}

- minions.adv_{{ grains.id }}

'*_standalone':

- node_data.ips

- logstash.nodes

- logstash.soc_logstash

- logstash.adv_logstash

- logstash

- logstash.manager

- logstash.search

- elasticsearch.index_templates

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/elasticsearch/auth.sls') %}

- elasticsearch.auth

@@ -156,118 +67,60 @@ base:

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/kibana/secrets.sls') %}

- kibana.secrets

{% endif %}

- data.*

- zeeklogs

- secrets

- healthcheck.standalone

- idstools.soc_idstools

- idstools.adv_idstools

- kratos.soc_kratos

- kratos.adv_kratos

- hydra.soc_hydra

- hydra.adv_hydra

- redis.nodes

- redis.soc_redis

- redis.adv_redis

- influxdb.soc_influxdb

- influxdb.adv_influxdb

- elasticsearch.nodes

- elasticsearch.soc_elasticsearch

- elasticsearch.adv_elasticsearch

- elasticfleet.soc_elasticfleet

- elasticfleet.adv_elasticfleet

- elastalert.soc_elastalert

- elastalert.adv_elastalert

- manager.soc_manager

- manager.adv_manager

- soc.soc_soc

- soc.adv_soc

- kibana.soc_kibana

- kibana.adv_kibana

- strelka.soc_strelka

- strelka.adv_strelka

- backup.soc_backup

- backup.adv_backup

- zeek.soc_zeek

- zeek.adv_zeek

- bpf.soc_bpf

- bpf.adv_bpf

- pcap.soc_pcap

- pcap.adv_pcap

- suricata.soc_suricata

- suricata.adv_suricata

- global

- minions.{{ grains.id }}

'*_node':

- global

- minions.{{ grains.id }}

- minions.adv_{{ grains.id }}

- stig.soc_stig

- kafka.nodes