Compare commits

11 Commits

kilo

...

feature/us

| Author | SHA1 | Date | |
|---|---|---|---|
| | 62c702e269 | | |
| | f10290246f | | |
| | c919f6bca0 | | |
| | 51b421a165 | | |
| | 86ff54e844 | | |
| | b8cb3f5815 | | |
| | 381a51271f | | |
| | 10500178d5 | | |
| | e81e66f40d | | |
| | f6bd74aadf | | |
| | 322c2804fc | | |

545

.github/.gitleaks.toml

vendored

@@ -1,545 +0,0 @@

title = "gitleaks config"

# Gitleaks rules are defined by regular expressions and entropy ranges.
# Some secrets have unique signatures which make detecting those secrets easy.
# Examples of those secrets would be GitLab Personal Access Tokens, AWS keys, and GitHub Access Tokens.
# All these examples have defined prefixes like `glpat`, `AKIA`, `ghp_`, etc.
#
# Other secrets might just be a hash which means we need to write more complex rules to verify
# that what we are matching is a secret.
#
# Here is an example of a semi-generic secret
#
# discord_client_secret = "8dyfuiRyq=vVc3RRr_edRk-fK__JItpZ"
#
# We can write a regular expression to capture the variable name (identifier),
# the assignment symbol (like '=' or ':='), and finally the actual secret.
# The structure of a rule to match this example secret is below:
#
#                                                           Beginning string
#                                                               quotation
#                                                                   │            End string quotation
#                                                                   │                      │
#                                                                   ▼                      ▼
#    (?i)(discord[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9=_\-]{32})['\"]
#
#                      ▲                        ▲                              ▲
#                      │                        │                              │
#                      │                        │                              │
#                 identifier            assignment symbol
#                                                                           Secret
#

[[rules]]
id = "gitlab-pat"
description = "GitLab Personal Access Token"
regex = '''glpat-[0-9a-zA-Z\-\_]{20}'''

[[rules]]
id = "aws-access-token"
description = "AWS"
regex = '''(A3T[A-Z0-9]|AKIA|AGPA|AIDA|AROA|AIPA|ANPA|ANVA|ASIA)[A-Z0-9]{16}'''

# Cryptographic keys
[[rules]]
id = "PKCS8-PK"
description = "PKCS8 private key"
regex = '''-----BEGIN PRIVATE KEY-----'''

[[rules]]
id = "RSA-PK"
description = "RSA private key"
regex = '''-----BEGIN RSA PRIVATE KEY-----'''

[[rules]]
id = "OPENSSH-PK"
description = "SSH private key"
regex = '''-----BEGIN OPENSSH PRIVATE KEY-----'''

[[rules]]
id = "PGP-PK"
description = "PGP private key"
regex = '''-----BEGIN PGP PRIVATE KEY BLOCK-----'''

[[rules]]
id = "github-pat"
description = "GitHub Personal Access Token"
regex = '''ghp_[0-9a-zA-Z]{36}'''

[[rules]]
id = "github-oauth"
description = "GitHub OAuth Access Token"
regex = '''gho_[0-9a-zA-Z]{36}'''

[[rules]]
id = "SSH-DSA-PK"
description = "SSH (DSA) private key"
regex = '''-----BEGIN DSA PRIVATE KEY-----'''

[[rules]]
id = "SSH-EC-PK"
description = "SSH (EC) private key"
regex = '''-----BEGIN EC PRIVATE KEY-----'''

[[rules]]
id = "github-app-token"
description = "GitHub App Token"
regex = '''(ghu|ghs)_[0-9a-zA-Z]{36}'''

[[rules]]
id = "github-refresh-token"
description = "GitHub Refresh Token"
regex = '''ghr_[0-9a-zA-Z]{76}'''

[[rules]]
id = "shopify-shared-secret"
description = "Shopify shared secret"
regex = '''shpss_[a-fA-F0-9]{32}'''

[[rules]]
id = "shopify-access-token"
description = "Shopify access token"
regex = '''shpat_[a-fA-F0-9]{32}'''

[[rules]]
id = "shopify-custom-access-token"
description = "Shopify custom app access token"
regex = '''shpca_[a-fA-F0-9]{32}'''

[[rules]]
id = "shopify-private-app-access-token"
description = "Shopify private app access token"
regex = '''shppa_[a-fA-F0-9]{32}'''

[[rules]]
id = "slack-access-token"
description = "Slack token"
regex = '''xox[baprs]-([0-9a-zA-Z]{10,48})?'''

[[rules]]
id = "stripe-access-token"
description = "Stripe"
regex = '''(?i)(sk|pk)_(test|live)_[0-9a-z]{10,32}'''

[[rules]]
id = "pypi-upload-token"
description = "PyPI upload token"
regex = '''pypi-AgEIcHlwaS5vcmc[A-Za-z0-9\-_]{50,1000}'''

[[rules]]
id = "gcp-service-account"
description = "Google (GCP) Service-account"
regex = '''\"type\": \"service_account\"'''

[[rules]]
id = "heroku-api-key"
description = "Heroku API Key"
regex = ''' (?i)(heroku[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([0-9A-F]{8}-[0-9A-F]{4}-[0-9A-F]{4}-[0-9A-F]{4}-[0-9A-F]{12})['\"]'''
secretGroup = 3

[[rules]]
id = "slack-web-hook"
description = "Slack Webhook"
regex = '''https://hooks.slack.com/services/T[a-zA-Z0-9_]{8}/B[a-zA-Z0-9_]{8,12}/[a-zA-Z0-9_]{24}'''

[[rules]]
id = "twilio-api-key"
description = "Twilio API Key"
regex = '''SK[0-9a-fA-F]{32}'''

[[rules]]
id = "age-secret-key"
description = "Age secret key"
regex = '''AGE-SECRET-KEY-1[QPZRY9X8GF2TVDW0S3JN54KHCE6MUA7L]{58}'''

[[rules]]
id = "facebook-token"
description = "Facebook token"
regex = '''(?i)(facebook[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-f0-9]{32})['\"]'''
secretGroup = 3

[[rules]]
id = "twitter-token"
description = "Twitter token"
regex = '''(?i)(twitter[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-f0-9]{35,44})['\"]'''
secretGroup = 3

[[rules]]
id = "adobe-client-id"
description = "Adobe Client ID (Oauth Web)"
regex = '''(?i)(adobe[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-f0-9]{32})['\"]'''
secretGroup = 3

[[rules]]
id = "adobe-client-secret"
description = "Adobe Client Secret"
regex = '''(p8e-)(?i)[a-z0-9]{32}'''

[[rules]]
id = "alibaba-access-key-id"
description = "Alibaba AccessKey ID"
regex = '''(LTAI)(?i)[a-z0-9]{20}'''

[[rules]]
id = "alibaba-secret-key"
description = "Alibaba Secret Key"
regex = '''(?i)(alibaba[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{30})['\"]'''
secretGroup = 3

[[rules]]
id = "asana-client-id"
description = "Asana Client ID"
regex = '''(?i)(asana[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([0-9]{16})['\"]'''
secretGroup = 3

[[rules]]
id = "asana-client-secret"
description = "Asana Client Secret"
regex = '''(?i)(asana[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{32})['\"]'''
secretGroup = 3

[[rules]]
id = "atlassian-api-token"
description = "Atlassian API token"
regex = '''(?i)(atlassian[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{24})['\"]'''
secretGroup = 3

[[rules]]
id = "bitbucket-client-id"
description = "Bitbucket client ID"
regex = '''(?i)(bitbucket[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{32})['\"]'''
secretGroup = 3

[[rules]]
id = "bitbucket-client-secret"
description = "Bitbucket client secret"
regex = '''(?i)(bitbucket[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9_\-]{64})['\"]'''
secretGroup = 3

[[rules]]
id = "beamer-api-token"
description = "Beamer API token"
regex = '''(?i)(beamer[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"](b_[a-z0-9=_\-]{44})['\"]'''
secretGroup = 3

[[rules]]
id = "clojars-api-token"
description = "Clojars API token"
regex = '''(CLOJARS_)(?i)[a-z0-9]{60}'''

[[rules]]
id = "contentful-delivery-api-token"
description = "Contentful delivery API token"
regex = '''(?i)(contentful[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9\-=_]{43})['\"]'''
secretGroup = 3

[[rules]]
id = "databricks-api-token"
description = "Databricks API token"
regex = '''dapi[a-h0-9]{32}'''

[[rules]]
id = "discord-api-token"
description = "Discord API key"
regex = '''(?i)(discord[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-h0-9]{64})['\"]'''
secretGroup = 3

[[rules]]
id = "discord-client-id"
description = "Discord client ID"
regex = '''(?i)(discord[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([0-9]{18})['\"]'''
secretGroup = 3

[[rules]]
id = "discord-client-secret"
description = "Discord client secret"
regex = '''(?i)(discord[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9=_\-]{32})['\"]'''
secretGroup = 3

[[rules]]
id = "doppler-api-token"
description = "Doppler API token"
regex = '''['\"](dp\.pt\.)(?i)[a-z0-9]{43}['\"]'''

[[rules]]
id = "dropbox-api-secret"
description = "Dropbox API secret/key"
regex = '''(?i)(dropbox[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{15})['\"]'''

[[rules]]
id = "dropbox--api-key"
description = "Dropbox API secret/key"
regex = '''(?i)(dropbox[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{15})['\"]'''

[[rules]]
id = "dropbox-short-lived-api-token"
description = "Dropbox short lived API token"
regex = '''(?i)(dropbox[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"](sl\.[a-z0-9\-=_]{135})['\"]'''

[[rules]]
id = "dropbox-long-lived-api-token"
description = "Dropbox long lived API token"
regex = '''(?i)(dropbox[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"][a-z0-9]{11}(AAAAAAAAAA)[a-z0-9\-_=]{43}['\"]'''

[[rules]]
id = "duffel-api-token"
description = "Duffel API token"
regex = '''['\"]duffel_(test|live)_(?i)[a-z0-9_-]{43}['\"]'''

[[rules]]
id = "dynatrace-api-token"
description = "Dynatrace API token"
regex = '''['\"]dt0c01\.(?i)[a-z0-9]{24}\.[a-z0-9]{64}['\"]'''

[[rules]]
id = "easypost-api-token"
description = "EasyPost API token"
regex = '''['\"]EZAK(?i)[a-z0-9]{54}['\"]'''

[[rules]]
id = "easypost-test-api-token"
description = "EasyPost test API token"
regex = '''['\"]EZTK(?i)[a-z0-9]{54}['\"]'''

[[rules]]
id = "fastly-api-token"
description = "Fastly API token"
regex = '''(?i)(fastly[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9\-=_]{32})['\"]'''
secretGroup = 3

[[rules]]
id = "finicity-client-secret"
description = "Finicity client secret"
regex = '''(?i)(finicity[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{20})['\"]'''
secretGroup = 3

[[rules]]
id = "finicity-api-token"
description = "Finicity API token"
regex = '''(?i)(finicity[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-f0-9]{32})['\"]'''
secretGroup = 3

[[rules]]
id = "flutterwave-public-key"
description = "Flutterwave public key"
regex = '''FLWPUBK_TEST-(?i)[a-h0-9]{32}-X'''

[[rules]]
id = "flutterwave-secret-key"
description = "Flutterwave secret key"
regex = '''FLWSECK_TEST-(?i)[a-h0-9]{32}-X'''

[[rules]]
id = "flutterwave-enc-key"
description = "Flutterwave encrypted key"
regex = '''FLWSECK_TEST[a-h0-9]{12}'''

[[rules]]
id = "frameio-api-token"
description = "Frame.io API token"
regex = '''fio-u-(?i)[a-z0-9\-_=]{64}'''

[[rules]]
id = "gocardless-api-token"
description = "GoCardless API token"
regex = '''['\"]live_(?i)[a-z0-9\-_=]{40}['\"]'''

[[rules]]
id = "grafana-api-token"
description = "Grafana API token"
regex = '''['\"]eyJrIjoi(?i)[a-z0-9\-_=]{72,92}['\"]'''

[[rules]]
id = "hashicorp-tf-api-token"
description = "HashiCorp Terraform user/org API token"
regex = '''['\"](?i)[a-z0-9]{14}\.atlasv1\.[a-z0-9\-_=]{60,70}['\"]'''

[[rules]]
id = "hubspot-api-token"
description = "HubSpot API token"
regex = '''(?i)(hubspot[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-h0-9]{8}-[a-h0-9]{4}-[a-h0-9]{4}-[a-h0-9]{4}-[a-h0-9]{12})['\"]'''
secretGroup = 3

[[rules]]
id = "intercom-api-token"
description = "Intercom API token"
regex = '''(?i)(intercom[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9=_]{60})['\"]'''
secretGroup = 3

[[rules]]
id = "intercom-client-secret"
description = "Intercom client secret/ID"
regex = '''(?i)(intercom[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-h0-9]{8}-[a-h0-9]{4}-[a-h0-9]{4}-[a-h0-9]{4}-[a-h0-9]{12})['\"]'''
secretGroup = 3

[[rules]]
id = "ionic-api-token"
description = "Ionic API token"
regex = '''(?i)(ionic[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"](ion_[a-z0-9]{42})['\"]'''

[[rules]]
id = "linear-api-token"
description = "Linear API token"
regex = '''lin_api_(?i)[a-z0-9]{40}'''

[[rules]]
id = "linear-client-secret"
description = "Linear client secret/ID"
regex = '''(?i)(linear[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-f0-9]{32})['\"]'''
secretGroup = 3

[[rules]]
id = "lob-api-key"
description = "Lob API Key"
regex = '''(?i)(lob[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]((live|test)_[a-f0-9]{35})['\"]'''
secretGroup = 3

[[rules]]
id = "lob-pub-api-key"
description = "Lob Publishable API Key"
regex = '''(?i)(lob[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]((test|live)_pub_[a-f0-9]{31})['\"]'''
secretGroup = 3

[[rules]]
id = "mailchimp-api-key"
description = "Mailchimp API key"
regex = '''(?i)(mailchimp[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-f0-9]{32}-us20)['\"]'''
secretGroup = 3

[[rules]]
id = "mailgun-private-api-token"
description = "Mailgun private API token"
regex = '''(?i)(mailgun[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"](key-[a-f0-9]{32})['\"]'''
secretGroup = 3

[[rules]]
id = "mailgun-pub-key"
description = "Mailgun public validation key"
regex = '''(?i)(mailgun[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"](pubkey-[a-f0-9]{32})['\"]'''
secretGroup = 3

[[rules]]
id = "mailgun-signing-key"
description = "Mailgun webhook signing key"
regex = '''(?i)(mailgun[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-h0-9]{32}-[a-h0-9]{8}-[a-h0-9]{8})['\"]'''
secretGroup = 3

[[rules]]
id = "mapbox-api-token"
description = "Mapbox API token"
regex = '''(?i)(pk\.[a-z0-9]{60}\.[a-z0-9]{22})'''

[[rules]]
id = "messagebird-api-token"
description = "MessageBird API token"
regex = '''(?i)(messagebird[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{25})['\"]'''
secretGroup = 3

[[rules]]
id = "messagebird-client-id"
description = "MessageBird API client ID"
regex = '''(?i)(messagebird[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-h0-9]{8}-[a-h0-9]{4}-[a-h0-9]{4}-[a-h0-9]{4}-[a-h0-9]{12})['\"]'''
secretGroup = 3

[[rules]]
id = "new-relic-user-api-key"
description = "New Relic user API Key"
regex = '''['\"](NRAK-[A-Z0-9]{27})['\"]'''

[[rules]]
id = "new-relic-user-api-id"
description = "New Relic user API ID"
regex = '''(?i)(newrelic[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([A-Z0-9]{64})['\"]'''
secretGroup = 3

[[rules]]
id = "new-relic-browser-api-token"
description = "New Relic ingest browser API token"
regex = '''['\"](NRJS-[a-f0-9]{19})['\"]'''

[[rules]]
id = "npm-access-token"
description = "npm access token"
regex = '''['\"](npm_(?i)[a-z0-9]{36})['\"]'''

[[rules]]
id = "planetscale-password"
description = "PlanetScale password"
regex = '''pscale_pw_(?i)[a-z0-9\-_\.]{43}'''

[[rules]]
id = "planetscale-api-token"
description = "PlanetScale API token"
regex = '''pscale_tkn_(?i)[a-z0-9\-_\.]{43}'''

[[rules]]
id = "postman-api-token"
description = "Postman API token"
regex = '''PMAK-(?i)[a-f0-9]{24}\-[a-f0-9]{34}'''

[[rules]]
id = "pulumi-api-token"
description = "Pulumi API token"
regex = '''pul-[a-f0-9]{40}'''

[[rules]]
id = "rubygems-api-token"
description = "Rubygem API token"
regex = '''rubygems_[a-f0-9]{48}'''

[[rules]]
id = "sendgrid-api-token"
description = "SendGrid API token"
regex = '''SG\.(?i)[a-z0-9_\-\.]{66}'''

[[rules]]
id = "sendinblue-api-token"
description = "Sendinblue API token"
regex = '''xkeysib-[a-f0-9]{64}\-(?i)[a-z0-9]{16}'''

[[rules]]
id = "shippo-api-token"
description = "Shippo API token"
regex = '''shippo_(live|test)_[a-f0-9]{40}'''

[[rules]]
id = "linkedin-client-secret"
description = "LinkedIn Client secret"
regex = '''(?i)(linkedin[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z]{16})['\"]'''
secretGroup = 3

[[rules]]
id = "linkedin-client-id"
description = "LinkedIn Client ID"
regex = '''(?i)(linkedin[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{14})['\"]'''
secretGroup = 3

[[rules]]
id = "twitch-api-token"
description = "Twitch API token"
regex = '''(?i)(twitch[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{30})['\"]'''
secretGroup = 3

[[rules]]
id = "typeform-api-token"
description = "Typeform API token"
regex = '''(?i)(typeform[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}(tfp_[a-z0-9\-_\.=]{59})'''
secretGroup = 3

[[rules]]
id = "generic-api-key"
description = "Generic API Key"
regex = '''(?i)((key|api[^Version]|token|secret|password)[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([0-9a-zA-Z\-_=]{8,64})['\"]'''
entropy = 3.7
secretGroup = 4


[allowlist]
description = "global allow lists"
regexes = ['''219-09-9999''', '''078-05-1120''', '''(9[0-9]{2}|666)-\d{2}-\d{4}''', '''RPM-GPG-KEY.*''', '''.*:.*StrelkaHexDump.*''', '''.*:.*PLACEHOLDER.*''']
paths = [
    '''gitleaks.toml''',
    '''(.*?)(jpg|gif|doc|pdf|bin|svg|socket)$''',
    '''(go.mod|go.sum)$''',
    '''salt/nginx/files/enterprise-attack.json'''
]
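The comment block at the top of the removed `.gitleaks.toml` describes rules as a regular expression that captures an identifier, an assignment symbol, and the secret, optionally combined with an entropy threshold. The following is a minimal Python sketch of that idea, not how gitleaks itself is implemented: gitleaks is written in Go and uses Go's regular expression engine, whereas this uses Python's `re` module. The names `DISCORD_SECRET`, `find_secret`, and `shannon_entropy` are hypothetical helpers introduced only for illustration; the pattern, the sample line, and the `3.7` threshold are taken from the config above.

```python
import math
import re
from collections import Counter

# Pattern copied from the comment block of the removed config.
# Group 1 = identifier, group 2 = assignment symbol, group 3 = secret
# (this corresponds to `secretGroup = 3` in the rules above).
DISCORD_SECRET = re.compile(
    r'''(?i)(discord[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9=_\-]{32})['\"]'''
)


def find_secret(line, pattern=DISCORD_SECRET, secret_group=3):
    """Return the text captured by `secret_group`, or None if the line does not match."""
    match = pattern.search(line)
    return match.group(secret_group) if match else None


def shannon_entropy(text):
    """Shannon entropy of `text` in bits per character (assumed metric for `entropy`)."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


if __name__ == "__main__":
    sample = 'discord_client_secret = "8dyfuiRyq=vVc3RRr_edRk-fK__JItpZ"'
    secret = find_secret(sample)
    print(secret)
    # Compare against the threshold used by the generic-api-key rule.
    print(shannon_entropy(secret) > 3.7)
```

Keeping the capture-group index as a parameter mirrors the way most rules set `secretGroup = 3` while the generic rule, which has an extra nested group, sets `secretGroup = 4`.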

190

.github/DISCUSSION_TEMPLATE/2-4.yml

vendored

@@ -1,190 +0,0 @@

body:
  - type: markdown
    attributes:
      value: |
        ⚠️ This category is solely for conversations related to Security Onion 2.4 ⚠️

        If your organization needs more immediate, enterprise grade professional support, with one-on-one virtual meetings and screensharing, contact us via our website: https://securityonion.com/support
  - type: dropdown
    attributes:
      label: Version
      description: Which version of Security Onion 2.4.x are you asking about?
      options:
        -
        - 2.4 Pre-release (Beta, Release Candidate)
        - 2.4.10
        - 2.4.20
        - 2.4.30
        - 2.4.40
        - 2.4.50
        - 2.4.60
        - 2.4.70
        - 2.4.80
        - 2.4.90
        - 2.4.100
        - Other (please provide detail below)
    validations:
      required: true
  - type: dropdown
    attributes:
      label: Installation Method
      description: How did you install Security Onion?
      options:
        -
        - Security Onion ISO image
        - Network installation on Red Hat derivative like Oracle, Rocky, Alma, etc.
        - Network installation on Ubuntu
        - Network installation on Debian
        - Other (please provide detail below)
    validations:
      required: true
  - type: dropdown
    attributes:
      label: Description
      description: >
        Is this discussion about installation, configuration, upgrading, or other?
      options:
        -
        - installation
        - configuration
        - upgrading
        - other (please provide detail below)
    validations:
      required: true
  - type: dropdown
    attributes:
      label: Installation Type
      description: >
        When you installed, did you choose Import, Eval, Standalone, Distributed, or something else?
      options:
        -
        - Import
        - Eval
        - Standalone
        - Distributed
        - other (please provide detail below)
    validations:
      required: true
  - type: dropdown
    attributes:
      label: Location
      description: >
        Is this deployment in the cloud, on-prem with Internet access, or airgap?
      options:
        -
        - cloud
        - on-prem with Internet access
        - airgap
        - other (please provide detail below)
    validations:
      required: true
  - type: dropdown
    attributes:
      label: Hardware Specs
      description: >
        Does your hardware meet or exceed the minimum requirements for your installation type as shown at https://docs.securityonion.net/en/2.4/hardware.html?
      options:
        -
        - Meets minimum requirements
        - Exceeds minimum requirements
        - Does not meet minimum requirements
        - other (please provide detail below)
    validations:
      required: true
  - type: input
    attributes:
      label: CPU
      description: How many CPU cores do you have?
    validations:
      required: true
  - type: input
    attributes:
      label: RAM
      description: How much RAM do you have?
    validations:
      required: true
  - type: input
    attributes:
      label: Storage for /
      description: How much storage do you have for the / partition?
    validations:
      required: true
  - type: input
    attributes:
      label: Storage for /nsm
      description: How much storage do you have for the /nsm partition?
    validations:
      required: true
  - type: dropdown
    attributes:
      label: Network Traffic Collection
      description: >
        Are you collecting network traffic from a tap or span port?
      options:
        -
        - tap
        - span port
        - other (please provide detail below)
    validations:
      required: true
  - type: dropdown
    attributes:
      label: Network Traffic Speeds
      description: >
        How much network traffic are you monitoring?
      options:
        -
        - Less than 1Gbps
        - 1Gbps to 10Gbps
        - more than 10Gbps
    validations:
      required: true
  - type: dropdown
    attributes:
      label: Status
      description: >
        Does SOC Grid show all services on all nodes as running OK?
      options:
        -
        - Yes, all services on all nodes are running OK
        - No, one or more services are failed (please provide detail below)
    validations:
      required: true
  - type: dropdown
    attributes:
      label: Salt Status
      description: >
        Do you get any failures when you run "sudo salt-call state.highstate"?
      options:
        -
        - Yes, there are salt failures (please provide detail below)
        - No, there are no failures
    validations:
      required: true
  - type: dropdown
    attributes:
      label: Logs
      description: >
        Are there any additional clues in /opt/so/log/?
      options:
        -
        - Yes, there are additional clues in /opt/so/log/ (please provide detail below)
        - No, there are no additional clues
    validations:
      required: true
  - type: textarea
    attributes:
      label: Detail
      description: Please read our discussion guidelines at https://github.com/Security-Onion-Solutions/securityonion/discussions/1720 and then provide detailed information to help us help you.
      placeholder: |-
        STOP! Before typing, please read our discussion guidelines at https://github.com/Security-Onion-Solutions/securityonion/discussions/1720 in their entirety!

        If your organization needs more immediate, enterprise grade professional support, with one-on-one virtual meetings and screensharing, contact us via our website: https://securityonion.com/support
    validations:
      required: true
  - type: checkboxes
    attributes:
      label: Guidelines
      options:
        - label: I have read the discussion guidelines at https://github.com/Security-Onion-Solutions/securityonion/discussions/1720 and assert that I have followed the guidelines.
          required: true

32

.github/workflows/close-threads.yml

vendored

@@ -1,32 +0,0 @@

name: 'Close Threads'

on:
  schedule:
    - cron: '50 1 * * *'
  workflow_dispatch:

permissions:
  issues: write
  pull-requests: write
  discussions: write

concurrency:
  group: lock-threads

jobs:
  close-threads:
    runs-on: ubuntu-latest
    permissions:
      issues: write
      pull-requests: write
    steps:
      - uses: actions/stale@v5
        with:
          days-before-issue-stale: -1
          days-before-issue-close: 60
          stale-issue-message: "This issue is stale because it has been inactive for an extended period. Stale issues convey that the issue, while important to someone, is not critical enough for the author, or other community members to work on, sponsor, or otherwise shepherd the issue through to a resolution."
          close-issue-message: "This issue was closed because it has been stale for an extended period. It will be automatically locked in 30 days, after which no further commenting will be available."
          days-before-pr-stale: 45
          days-before-pr-close: 60
          stale-pr-message: "This PR is stale because it has been inactive for an extended period. The longer a PR remains stale the more out of date with the main branch it becomes."
          close-pr-message: "This PR was closed because it has been stale for an extended period. It will be automatically locked in 30 days. If there is still a commitment to finishing this PR re-open it before it is locked."

24

.github/workflows/contrib.yml

vendored

@@ -1,24 +0,0 @@

name: contrib
on:
  issue_comment:
    types: [created]
  pull_request_target:
    types: [opened,closed,synchronize]

jobs:
  CLAssistant:
    runs-on: ubuntu-latest
    steps:
      - name: "Contributor Check"
        if: (github.event.comment.body == 'recheck' || github.event.comment.body == 'I have read the CLA Document and I hereby sign the CLA') || github.event_name == 'pull_request_target'
        uses: cla-assistant/github-action@v2.3.1
        env:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
          PERSONAL_ACCESS_TOKEN : ${{ secrets.PERSONAL_ACCESS_TOKEN }}
        with:
          path-to-signatures: 'signatures_v1.json'
          path-to-document: 'https://securityonionsolutions.com/cla'
          allowlist: dependabot[bot],jertel,dougburks,TOoSmOotH,weslambert,defensivedepth,m0duspwnens
          remote-organization-name: Security-Onion-Solutions
          remote-repository-name: licensing
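The `if:` condition on the "Contributor Check" step in contrib.yml decides when the CLA action runs. A minimal Python sketch of that condition follows; the function name `contributor_check_runs` and the helper `_same` are illustrative only and do not exist in the workflow or in the CLA action. The comparison is written as case-insensitive because GitHub Actions expressions ignore case when comparing strings.

```python
from typing import Optional

# Exact phrases the workflow compares the comment body against.
RECHECK_PHRASE = "recheck"
SIGN_PHRASE = "I have read the CLA Document and I hereby sign the CLA"


def _same(value: Optional[str], expected: str) -> bool:
    """Whole-string, case-insensitive equality; a missing body never matches."""
    return value is not None and value.lower() == expected.lower()


def contributor_check_runs(event_name: str, comment_body: Optional[str] = None) -> bool:
    """Mirror of the step's `if:` expression (hypothetical helper)."""
    comment_matches = _same(comment_body, RECHECK_PHRASE) or _same(comment_body, SIGN_PHRASE)
    return comment_matches or event_name == "pull_request_target"
```

Under this reading, a comment such as "recheck please" does not trigger the step, because the whole comment body must equal one of the two phrases.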

4

.github/workflows/leaktest.yml

vendored

@@ -12,6 +12,4 @@ jobs:

|

|||||||

fetch-depth: '0'

|

fetch-depth: '0'

|

||||||

|

|

||||||

- name: Gitleaks

|

- name: Gitleaks

|

||||||

uses: gitleaks/gitleaks-action@v1.6.0

|

uses: zricethezav/gitleaks-action@master

|

||||||

with:

|

|

||||||

config-path: .github/.gitleaks.toml

|

|

||||||

|

|||||||

25

.github/workflows/lock-threads.yml

vendored

@@ -1,25 +0,0 @@

name: 'Lock Threads'

on:
  schedule:
    - cron: '50 2 * * *'
  workflow_dispatch:

permissions:
  issues: write
  pull-requests: write
  discussions: write

concurrency:
  group: lock-threads

jobs:
  lock-threads:
    runs-on: ubuntu-latest
    steps:
      - uses: jertel/lock-threads@main
        with:
          include-discussion-currently-open: true
          discussion-inactive-days: 90
          issue-inactive-days: 30
          pr-inactive-days: 30

39

.github/workflows/pythontest.yml

vendored

@@ -1,39 +0,0 @@

name: python-test

on:
  push:
    paths:
      - "salt/sensoroni/files/analyzers/**"
      - "salt/manager/tools/sbin"
  pull_request:
    paths:
      - "salt/sensoroni/files/analyzers/**"
      - "salt/manager/tools/sbin"

jobs:
  build:

    runs-on: ubuntu-latest
    strategy:
      fail-fast: false
      matrix:
        python-version: ["3.10"]
        python-code-path: ["salt/sensoroni/files/analyzers", "salt/manager/tools/sbin"]

    steps:
      - uses: actions/checkout@v3
      - name: Set up Python ${{ matrix.python-version }}
        uses: actions/setup-python@v3
        with:
          python-version: ${{ matrix.python-version }}
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          python -m pip install flake8 pytest pytest-cov
          find . -name requirements.txt -exec pip install -r {} \;
      - name: Lint with flake8
        run: |
          flake8 ${{ matrix.python-code-path }} --show-source --max-complexity=12 --doctests --max-line-length=200 --statistics
      - name: Test with pytest
        run: |
          pytest ${{ matrix.python-code-path }} --cov=${{ matrix.python-code-path }} --doctest-modules --cov-report=term --cov-fail-under=100 --cov-config=pytest.ini

13

.gitignore

vendored

@@ -56,15 +56,4 @@ $RECYCLE.BIN/

|

|||||||

# Windows shortcuts

|

# Windows shortcuts

|

||||||

*.lnk

|

*.lnk

|

||||||

|

|

||||||

# End of https://www.gitignore.io/api/macos,windows

|

# End of https://www.gitignore.io/api/macos,windows

|

||||||

|

|

||||||

# Pytest output

|

|

||||||

__pycache__

|

|

||||||

.pytest_cache

|

|

||||||

.coverage

|

|

||||||

*.pyc

|

|

||||||

.venv

|

|

||||||

|

|

||||||

# Analyzer dev/test config files

|

|

||||||

*_dev.yaml

|

|

||||||

site-packages

|

|

||||||

@@ -29,11 +29,6 @@

|

|||||||

|

|

||||||

* See this document's [code styling and conventions section](#code-style-and-conventions) below to be sure your PR fits our code requirements prior to submitting.

|

* See this document's [code styling and conventions section](#code-style-and-conventions) below to be sure your PR fits our code requirements prior to submitting.

|

||||||

|

|

||||||

* Change behavior (fix a bug, add a new feature) separately from refactoring code. Refactor pull requests are welcome, but ensure your new code behaves exactly the same as the old.

|

|

||||||

|

|

||||||

* **Do not refactor code for non-functional reasons**. If you are submitting a pull request that refactors code, ensure the refactor is improving the functionality of the code you're refactoring (e.g. decreasing complexity, removing reliance on 3rd party tools, improving performance).

|

|

||||||

|

|

||||||

* Before submitting a PR with significant changes to the project, [start a discussion](https://github.com/Security-Onion-Solutions/securityonion/discussions/new) explaining what you hope to achieve. The project maintainers will provide feedback and determine whether your goal aligns with the project.

|

|

||||||

|

|

||||||

|

|

||||||

### Code style and conventions

|

### Code style and conventions

|

||||||

@@ -42,5 +37,3 @@

|

|||||||

* All new Bash code should pass [ShellCheck](https://www.shellcheck.net/) analysis. Where errors can be *safely* [ignored](https://github.com/koalaman/shellcheck/wiki/Ignore), the relevant disable directive should be accompanied by a brief explanation as to why the error is being ignored.

|

* All new Bash code should pass [ShellCheck](https://www.shellcheck.net/) analysis. Where errors can be *safely* [ignored](https://github.com/koalaman/shellcheck/wiki/Ignore), the relevant disable directive should be accompanied by a brief explanation as to why the error is being ignored.

|

||||||

|

|

||||||

* **Ensure all YAML (this includes Salt states and pillars) is properly formatted**. The spec for YAML v1.2 can be found [here](https://yaml.org/spec/1.2/spec.html), however there are numerous online resources with simpler descriptions of its formatting rules.

|

* **Ensure all YAML (this includes Salt states and pillars) is properly formatted**. The spec for YAML v1.2 can be found [here](https://yaml.org/spec/1.2/spec.html), however there are numerous online resources with simpler descriptions of its formatting rules.

|

||||||

|

|

||||||

* **All code of any language should match the style of other code of that same language within the project.** Be sure that any changes you make do not break from the pre-existing style of Security Onion code.

|

|

||||||

|

|||||||

32

README.md

@@ -1,47 +1,35 @@

|

|||||||

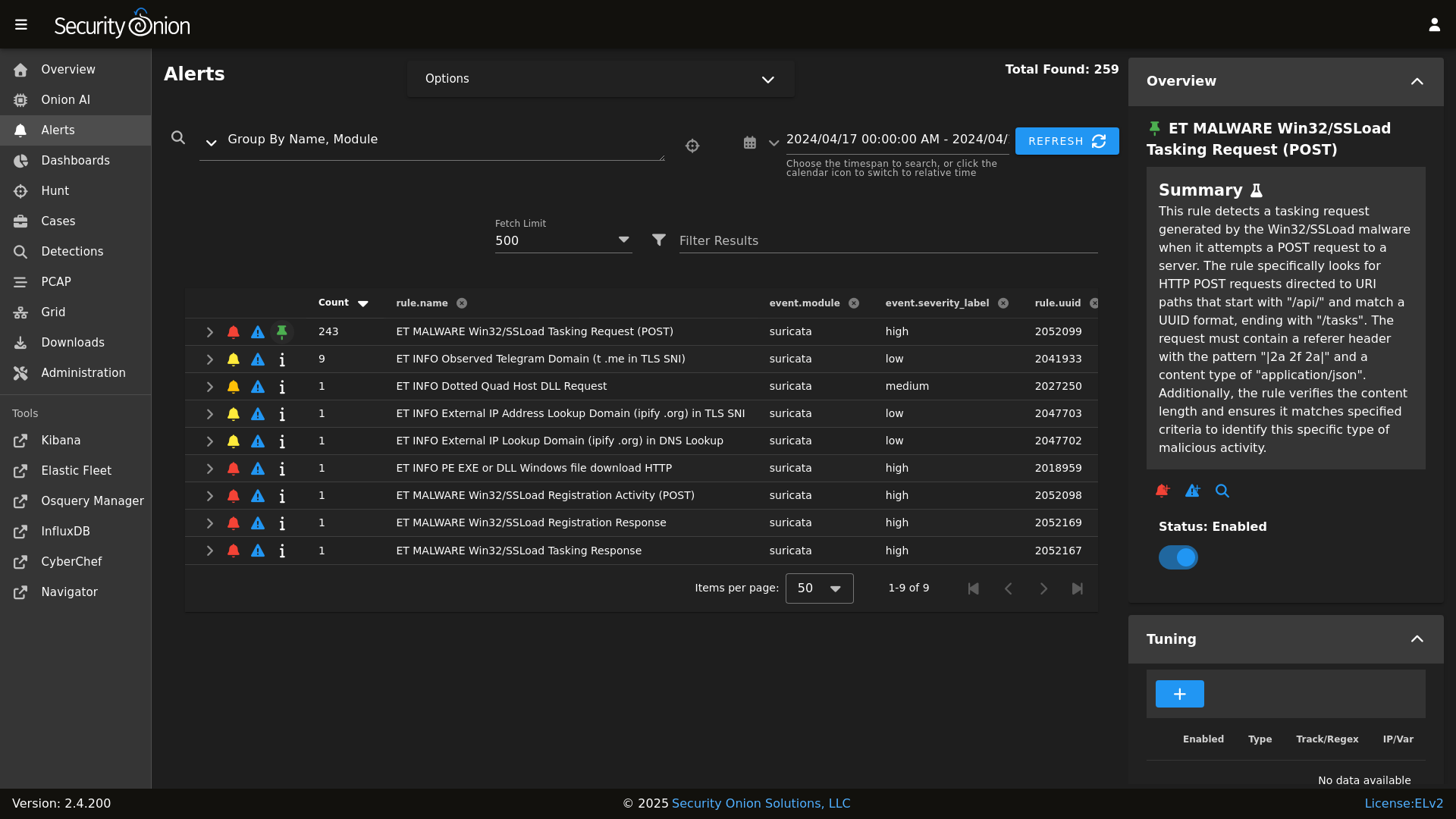

## Security Onion 2.4

|

## Security Onion 2.3.80

|

||||||

|

|

||||||

Security Onion 2.4 is here!

|

Security Onion 2.3.80 is here!

|

||||||

|

|

||||||

## Screenshots

|

## Screenshots

|

||||||

|

|

||||||

Alerts

|

Alerts

|

||||||

|

|

||||||

|

|

||||||

Dashboards

|

|

||||||

|

|

||||||

|

|

||||||

Hunt

|

Hunt

|

||||||

|

|

||||||

|

|

||||||

PCAP

|

|

||||||

|

|

||||||

|

|

||||||

Grid

|

|

||||||

|

|

||||||

|

|

||||||

Config

|

|

||||||

|

|

||||||

|

|

||||||

### Release Notes

|

### Release Notes

|

||||||

|

|

||||||

https://docs.securityonion.net/en/2.4/release-notes.html

|

https://docs.securityonion.net/en/2.3/release-notes.html

|

||||||

|

|

||||||

### Requirements

|

### Requirements

|

||||||

|

|

||||||

https://docs.securityonion.net/en/2.4/hardware.html

|

https://docs.securityonion.net/en/2.3/hardware.html

|

||||||

|

|

||||||

### Download

|

### Download

|

||||||

|

|

||||||

https://docs.securityonion.net/en/2.4/download.html

|

https://docs.securityonion.net/en/2.3/download.html

|

||||||

|

|

||||||

### Installation

|

### Installation

|

||||||

|

|

||||||

https://docs.securityonion.net/en/2.4/installation.html

|

https://docs.securityonion.net/en/2.3/installation.html

|

||||||

|

|

||||||

### FAQ

|

### FAQ

|

||||||

|

|

||||||

https://docs.securityonion.net/en/2.4/faq.html

|

https://docs.securityonion.net/en/2.3/faq.html

|

||||||

|

|

||||||

### Feedback

|

### Feedback

|

||||||

|

|

||||||

https://docs.securityonion.net/en/2.4/community-support.html

|

https://docs.securityonion.net/en/2.3/community-support.html

|

||||||

|

|||||||

@@ -4,8 +4,7 @@

|

|||||||

|

|

||||||

| Version | Supported |

|

| Version | Supported |

|

||||||

| ------- | ------------------ |

|

| ------- | ------------------ |

|

||||||

| 2.4.x | :white_check_mark: |

|

| 2.x.x | :white_check_mark: |

|

||||||

| 2.3.x | :white_check_mark: |

|

|

||||||

| 16.04.x | :x: |

|

| 16.04.x | :x: |

|

||||||

|

|

||||||

Security Onion 16.04 has reached End Of Life and is no longer supported.

|

Security Onion 16.04 has reached End Of Life and is no longer supported.

|

||||||

|

|||||||

@@ -1,46 +1,47 @@

|

|||||||

### 2.4.60-20240320 ISO image released on 2024/03/20

|

### 2.3.80 ISO image built on 2021/09/27

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

### Download and Verify

|

### Download and Verify

|

||||||

|

|

||||||

2.4.60-20240320 ISO image:

|

2.3.80 ISO image:

|

||||||

https://download.securityonion.net/file/securityonion/securityonion-2.4.60-20240320.iso

|

https://download.securityonion.net/file/securityonion/securityonion-2.3.80.iso

|

||||||

|

|

||||||

MD5: 178DD42D06B2F32F3870E0C27219821E

|

MD5: 24F38563860416F4A8ABE18746913E14

|

||||||

SHA1: 73EDCD50817A7F6003FE405CF1808A30D034F89D

|

SHA1: F923C005F54EA2A17AB225ADA0DA46042707AAD9

|

||||||

SHA256: DD334B8D7088A7B78160C253B680D645E25984BA5CCAB5CC5C327CA72137FC06

|

SHA256: 8E95D10AF664D9A406C168EC421D943CB23F0D0C1813C6C2DBA9B4E131984018

|

||||||

|

|

||||||

Signature for ISO image:

|

Signature for ISO image:

|

||||||

https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.60-20240320.iso.sig

|

https://github.com/Security-Onion-Solutions/securityonion/raw/master/sigs/securityonion-2.3.80.iso.sig

|

||||||

|

|

||||||

Signing key:

|

Signing key:

|

||||||

https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.4/main/KEYS

|

https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/master/KEYS

|

||||||

|

|

||||||

For example, here are the steps you can use on most Linux distributions to download and verify our Security Onion ISO image.

|

For example, here are the steps you can use on most Linux distributions to download and verify our Security Onion ISO image.

|

||||||

|

|

||||||

Download and import the signing key:

|

Download and import the signing key:

|

||||||

```

|

```

|

||||||

wget https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.4/main/KEYS -O - | gpg --import -

|

wget https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/master/KEYS -O - | gpg --import -

|

||||||

```

|

```

|

||||||

|

|

||||||

Download the signature file for the ISO:

|

Download the signature file for the ISO:

|

||||||

```

|

```

|

||||||

wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.60-20240320.iso.sig

|

wget https://github.com/Security-Onion-Solutions/securityonion/raw/master/sigs/securityonion-2.3.80.iso.sig

|

||||||

```

|

```

|

||||||

|

|

||||||

Download the ISO image:

|

Download the ISO image:

|

||||||

```

|

```

|

||||||

wget https://download.securityonion.net/file/securityonion/securityonion-2.4.60-20240320.iso

|

wget https://download.securityonion.net/file/securityonion/securityonion-2.3.80.iso

|

||||||

```

|

```

|

||||||

|

|

||||||

Verify the downloaded ISO image using the signature file:

|

Verify the downloaded ISO image using the signature file:

|

||||||

```

|

```

|

||||||

gpg --verify securityonion-2.4.60-20240320.iso.sig securityonion-2.4.60-20240320.iso

|

gpg --verify securityonion-2.3.80.iso.sig securityonion-2.3.80.iso

|

||||||

```

|

```

|

||||||

|

|

||||||

The output should show "Good signature" and the Primary key fingerprint should match what's shown below:

|

The output should show "Good signature" and the Primary key fingerprint should match what's shown below:

|

||||||

```

|

```

|

||||||

gpg: Signature made Tue 19 Mar 2024 03:17:58 PM EDT using RSA key ID FE507013

|

gpg: Signature made Mon 27 Sep 2021 08:55:01 AM EDT using RSA key ID FE507013

|

||||||

gpg: Good signature from "Security Onion Solutions, LLC <info@securityonionsolutions.com>"

|

gpg: Good signature from "Security Onion Solutions, LLC <info@securityonionsolutions.com>"

|

||||||

gpg: WARNING: This key is not certified with a trusted signature!

|

gpg: WARNING: This key is not certified with a trusted signature!

|

||||||

gpg: There is no indication that the signature belongs to the owner.

|

gpg: There is no indication that the signature belongs to the owner.

|

||||||

@@ -48,4 +49,4 @@ Primary key fingerprint: C804 A93D 36BE 0C73 3EA1 9644 7C10 60B7 FE50 7013

|

|||||||

```

|

```

|

||||||

|

|

||||||

Once you've verified the ISO image, you're ready to proceed to our Installation guide:

|

Once you've verified the ISO image, you're ready to proceed to our Installation guide:

|

||||||

https://docs.securityonion.net/en/2.4/installation.html

|

https://docs.securityonion.net/en/2.3/installation.html

|

||||||
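The MD5, SHA1, and SHA256 values listed under "Download and Verify" can also be checked locally. The following is a minimal Python sketch, assuming the ISO has already been downloaded; the function names `sha256_of_file` and `matches_published` are illustrative and are not part of Security Onion.

```python
import hashlib
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1024 * 1024) -> str:
    """Return the uppercase hex SHA256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # Read fixed-size chunks so a multi-gigabyte ISO is never held in memory.
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest().upper()


def matches_published(path: Path, published: str) -> bool:
    """Compare a file's digest with a published value, ignoring case and padding."""
    return sha256_of_file(path) == published.strip().upper()
```

To use it, pass the path of the downloaded ISO and the SHA256 value published for that release. A matching hash only shows that the download is intact; the `gpg --verify` step remains the check that the image was signed with the Security Onion key.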

BIN

assets/images/screenshots/alerts-1.png

Normal file

|

After Width: | Height: | Size: 245 KiB |


BIN

assets/images/screenshots/hunt-1.png

Normal file

|

After Width: | Height: | Size: 168 KiB |

|

Before Width: | Height: | Size: 191 KiB |

@@ -1,8 +1,8 @@

|

|||||||

{% import_yaml 'firewall/ports/ports.yaml' as default_portgroups %}

|

{% import_yaml 'firewall/portgroups.yaml' as default_portgroups %}

|

||||||

{% set default_portgroups = default_portgroups.firewall.ports %}

|

{% set default_portgroups = default_portgroups.firewall.aliases.ports %}

|

||||||

{% import_yaml 'firewall/ports/ports.local.yaml' as local_portgroups %}

|

{% import_yaml 'firewall/portgroups.local.yaml' as local_portgroups %}

|

||||||

{% if local_portgroups.firewall.ports %}

|

{% if local_portgroups.firewall.aliases.ports %}

|

||||||

{% set local_portgroups = local_portgroups.firewall.ports %}

|

{% set local_portgroups = local_portgroups.firewall.aliases.ports %}

|

||||||

{% else %}

|

{% else %}

|

||||||

{% set local_portgroups = {} %}

|

{% set local_portgroups = {} %}

|

||||||

{% endif %}

|

{% endif %}

|

||||||
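Both versions of the template above follow the same pattern: import the default and local port-group YAML files, select the mapping under `firewall.ports` (one side) or `firewall.aliases.ports` (the other side), and fall back to an empty mapping when the local file defines nothing. A minimal Python sketch of that fallback follows, assuming the YAML has already been parsed into nested dictionaries; `resolve_portgroups` is a hypothetical helper, not a Salt function.

```python
from collections.abc import Mapping
from typing import Any, Sequence


def resolve_portgroups(document: Any, key_path: Sequence[str]) -> dict:
    """Walk key_path through a parsed YAML document and return the mapping
    found there, or {} when any level is missing, empty, or not a mapping."""
    node = document
    for key in key_path:
        if not isinstance(node, Mapping):
            return {}
        node = node.get(key)
    # An empty key such as "ports:" parses to None, which is treated as "no groups".
    return dict(node) if isinstance(node, Mapping) and node else {}


# The two layouts that appear on either side of the diff.
FLAT_PATH = ("firewall", "ports")
ALIASES_PATH = ("firewall", "aliases", "ports")
```

For example, a local file containing only `firewall:` and an empty `ports:` key resolves to `{}` with `FLAT_PATH`, which matches the `{% else %}` branch of the template.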

@@ -12,11 +12,10 @@ role:

|

|||||||

eval:

|

eval:

|

||||||

fleet:

|

fleet:

|

||||||

heavynode:

|

heavynode:

|

||||||

idh:

|

helixsensor:

|

||||||

import:

|

import:

|

||||||

manager:

|

manager:

|

||||||

managersearch:

|

managersearch:

|

||||||

receiver:

|

|

||||||

standalone:

|

standalone:

|

||||||

searchnode:

|

searchnode:

|

||||||

sensor:

|

sensor:

|

||||||

74

files/firewall/hostgroups.local.yaml

Normal file

@@ -0,0 +1,74 @@

firewall:
  hostgroups:
    analyst:
      ips:
        delete:
        insert:
    beats_endpoint:
      ips:
        delete:
        insert:
    beats_endpoint_ssl:
      ips:
        delete:
        insert:
    elasticsearch_rest:
      ips:
        delete:
        insert:
    endgame:
      ips:
        delete:
        insert:
    fleet:
      ips:
        delete:
        insert:
    heavy_node:
      ips:
        delete:
        insert:
    manager:
      ips:
        delete:
        insert:
    minion:
      ips:
        delete:
        insert:
    node:
      ips:
        delete:
        insert:
    osquery_endpoint:
      ips:
        delete:
        insert:
    search_node:
      ips:
        delete:
        insert:
    sensor:
      ips:
        delete:
        insert:
    strelka_frontend:
      ips:
        delete:
        insert:
    syslog:
      ips:
        delete:
        insert:
    wazuh_agent:
      ips:
        delete:
        insert:
    wazuh_api:
      ips:
        delete:
        insert:
    wazuh_authd:
      ips:
        delete:
        insert:

3

files/firewall/portgroups.local.yaml

Normal file

@@ -0,0 +1,3 @@

firewall:
  aliases:
    ports:

@@ -1,2 +0,0 @@

firewall:
  ports:

@@ -41,8 +41,7 @@ file_roots:

|

|||||||

base:

|

base:

|

||||||

- /opt/so/saltstack/local/salt

|

- /opt/so/saltstack/local/salt

|

||||||

- /opt/so/saltstack/default/salt

|

- /opt/so/saltstack/default/salt

|

||||||

- /nsm/elastic-fleet/artifacts

|

|

||||||

- /opt/so/rules/nids

|

|

||||||

|

|

||||||

# The master_roots setting configures a master-only copy of the file_roots dictionary,

|

# The master_roots setting configures a master-only copy of the file_roots dictionary,

|

||||||

# used by the state compiler.

|

# used by the state compiler.

|

||||||

@@ -65,4 +64,10 @@ peer:

|

|||||||

.*:

|

.*:

|

||||||

- x509.sign_remote_certificate

|

- x509.sign_remote_certificate

|

||||||

|

|

||||||

|

reactor:

|

||||||

|

- 'so/fleet':

|

||||||

|

- salt://reactor/fleet.sls

|

||||||

|

- 'salt/beacon/*/watch_sqlite_db//opt/so/conf/kratos/db/sqlite.db':

|

||||||

|

- salt://reactor/kratos.sls

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

@@ -45,10 +45,12 @@ echo " rootfs: $ROOTFS" >> $local_salt_dir/pillar/data/$TYPE.sls

|

|||||||

echo " nsmfs: $NSM" >> $local_salt_dir/pillar/data/$TYPE.sls

|

echo " nsmfs: $NSM" >> $local_salt_dir/pillar/data/$TYPE.sls

|

||||||

if [ $TYPE == 'sensorstab' ]; then

|

if [ $TYPE == 'sensorstab' ]; then

|

||||||

echo " monint: bond0" >> $local_salt_dir/pillar/data/$TYPE.sls

|

echo " monint: bond0" >> $local_salt_dir/pillar/data/$TYPE.sls

|

||||||

|

salt-call state.apply grafana queue=True

|

||||||

fi

|

fi

|

||||||