Mirror of https://github.com/Security-Onion-Solutions/securityonion.git, synced 2025-12-19 23:43:07 +01:00.

Compare commits: kilo ... 2.4.100-20 (1017 commits)

| SHA1 |
|---|
| 5472d2586c |
| fd187b11f9 |
| f6cfd2349b |
| a11e78176f |
| db4c373c45 |
| 5be17330d1 |
| a7de6993f9 |
| a9f2dfc4b8 |
| b7e047d149 |
| f69137b38d |
| 9746f6e5e2 |
| 89a1e2500e |
| 394ce29ea3 |
| f19a35ff06 |
| 8943e88ca8 |
| 18774aa0a7 |
| af80a78406 |
| 6043da4424 |
| 75086bac7f |
| 726df310ee |
| b952728b2c |
| 1cac2ff1d4 |
| a93c77a1cc |
| dd09f5b153 |
| 29f996de66 |
| c575e02fbb |
| e96a0108c3 |
| e86fce692c |
| 8d35c7c139 |
| 0a5725a62e |
| 1c6f5126db |
| 1ec5e3bf2a |
| d29727c869 |
| eabb894580 |
| 96339f0de6 |
| d7e3e134a5 |
| dfb0ff7a98 |
| 48f1e24bf5 |
| cf47508185 |
| 2a024039bf |
| 212cc478de |
| 88ea60df2a |
| c1b7232a88 |
| 04577a48be |
| 18ef37a2d0 |
| 4108e67178 |
| ff479de7bd |
| 4afac201b9 |
| c30537fe6a |
| 1ed73b6f8e |
| f01825166d |
| 07f8bda27e |
| e3ecc9d4be |
| ca209ed54c |
| df6ff027b5 |
| e772497e12 |
| 205bbd9c61 |
| 224bc6b429 |
| dc197f6a5c |
| f182833a8d |
| 61ab1f1ef2 |
| dea582f24a |
| b860bf753a |
| b5690f6879 |
| a39ad55578 |
| 4c276d1211 |
| 5f74b1b730 |
| b9040eb0de |
| ab63d5dbdb |
| f233f13637 |
| c8a8236401 |
| f5603b1274 |
| 1d27fcc50e |
| dd2926201d |
| ebcef8adbd |
| ff14217d38 |
| 46596f01fa |
| c1388a68f0 |
| 374da11037 |
| caa8d9ecb0 |
| 02c7de6b1a |
| c71b9f6e8f |
| 8c1feccbe0 |
| 5ee15c8b41 |
| 5328f55322 |
| 712f904c43 |
| ccd7d86302 |
| fc89604982 |
| 09f7329a21 |
| cfd6676583 |
| 3713ee9d93 |
| 009c8d55c3 |
| c0c01f0d17 |
| 2fe5dccbb4 |
| c83a143eef |
| 56ef2a4e1c |
| c36e8abc19 |
| e76293acdb |
| 5bdb4ed51b |
| aaf5d76071 |
| d9a696a411 |
| 76ab4c92f0 |
| 60beaf51bc |
| 9ab17ff79c |
| 1a363790a0 |
| d488bb6393 |
| 114ad779b4 |
| 49d2ac2b13 |
| 9a2252ed3f |
| 9264a03dbc |
| fb2a42a9af |
| 63531cdbb6 |
| bae348bef7 |
| bd223d8643 |
| 3fa6c72620 |
| 2b90bdc86a |
| 6831b72804 |
| 5e12b928d9 |
| 0453f51e64 |
| 9594e4115c |
| 201e14f287 |
| d833bd0d55 |
| 46eeb014af |
| 8e7a2cf353 |
| 2c528811cc |
| 3130b56d58 |
| b466d83625 |
| 6d008546f1 |
| c60b14e2e7 |
| c753a7cffa |
| 5cba4d7d9b |
| 685df9e5ea |
| ef5a42cf40 |
| 45ab6c7309 |
| 1b54a109d5 |
| 945d04a510 |
| 658db27a46 |
| 3e248da14d |
| ed7f8dbf1d |
| d6af3aab6d |
| 0cb067f6f2 |
| ccf88fa62b |
| 20f915f649 |
| f447b6b698 |
| 66b087f12f |
| f2ad4c40e6 |
| 8538f2eca2 |
| c55fa6dc6a |
| 17f37750e5 |
| e789c17bc3 |
| 6f44d39b18 |
| dd85249781 |
| bdba621442 |
| 034315ed85 |
| 224c668c31 |
| 2e17e93cfe |
| 7dfb75ba6b |
| af0425b8f1 |
| 6cf0a0bb42 |
| d97400e6f5 |
| cf1335dd84 |
| be74449fb9 |
| 45b2413175 |
| 022df966c7 |
| 92385d652e |
| 4478d7b55a |
| 612716ee69 |
| f78a5d1a78 |
| 2d0de87530 |
| 18df491f7e |
| cee6ee7a2a |
| 6d18177f98 |
| c0bb395571 |
| f051ddc7f0 |
| 72ad49ed12 |
| d11f4ef9ba |
| 03ca7977a0 |
| 91b2e7d400 |
| 34c3a58efe |
| a867557f54 |
| b814f32e0a |
| 2df44721d0 |
| d0565baaa3 |
| 38e7da1334 |
| 1b623c5c7a |
| 542a116b8c |
| e7b6496f98 |
| 3991c7b5fe |
| 678b232c24 |
| fbd0dbd048 |
| 1df19faf5c |
| 8ec5794833 |
| bf07d56da6 |
| cdbffa2323 |
| 55469ebd24 |
| 4e81860a13 |
| a23789287e |
| fe1824aedd |
| e58b2c45dd |
| 5d322ebc0b |
| 7ea8d5efd0 |
| 4182ff66a0 |
| ff29d9ca51 |
| 4a88dedcb8 |
| cfe5c1d76a |
| ebf5159c95 |
| d432019ad9 |
| 0d8fd42be3 |
| d5faf535c3 |
| 8e1edd1d91 |
| d791b23838 |
| 0db0754ee5 |
| 1f5a990b1e |
| 7a2f01be53 |
| dadb0db8f3 |
| dfd8ac3626 |
| 9716e09b83 |
| 669f68ad88 |
| 32af2d8436 |
| 24e945eee4 |
| 8615e5d5ea |
| 2dd5ff4333 |
| 6a396ec1aa |
| 34f558c023 |
| 9504f0885a |
| ef59678441 |
| c6f6811f47 |
| ce8f9fe024 |
| 40b7999786 |
| 69be03f86a |
| 8dc8092241 |
| 578c6c567f |
| 662df1208d |
| 745b6775f1 |
| 176aaa8f3d |
| 4d499be1a8 |
| c27225d91f |
| 1b47d5c622 |
| 32d7927a49 |
| 861630681c |
| 9d725f2b0b |
| 132263ac1a |
| 92a847e3bd |
| 75bbc41d38 |
| 7716f4aff8 |
| 8eb6dcc5b7 |
| 847638442b |
| 5743189eef |
| 81d874c6ae |
| bfe8a3a01b |
| 71ed9204ff |
| 222ebbdec1 |
| 260d4e44bc |
| 0c5b3f7c1c |
| feee80cad9 |
| 5f69456e22 |
| e59d124c82 |
| 13d4738e8f |
| abdfbba32a |
| 7d0a961482 |
| 0f226cc08e |
| cfcfc6819f |
| fe4e2a9540 |
| 492554d951 |
| dfd5e95c93 |
| 50f0c43212 |
| 7fe8715bce |
| f837ea944a |
| c2d43e5d22 |
| 51bb4837f5 |
| caec424e44 |
| 156176c628 |
| 81b4c4e2c0 |
| d4107dc60a |
| d34605a512 |
| af5e7cd72c |
| 93378e92e6 |
| 81ce762250 |
| cb727bf48d |
| 9a0bad88cc |
| 680e84851b |
| ea771ed21b |
| c332cd777c |
| 9fce85c988 |
| 6141c7a849 |
| bf91030204 |
| 9577c3f59d |
| 77dedc575e |
| 0295b8d658 |
| 6a9d78fa7c |
| b84521cdd2 |
| ff4679ec08 |
| c5ce7102e8 |
| 70c001e22b |
| f1dc22a200 |
| aae1b69093 |
| 469ca44016 |
| 81fcd68e9b |
| 8781419b4a |
| 2eea671857 |
| 73acfbf864 |
| ae0e994461 |
| 07b9011636 |
| bc2b3b7f8f |
| ea02a2b868 |
| ba3a6cbe87 |
| 268dcbe00b |
| 6be97f13d0 |
| 95d6c93a07 |
| a2bb220043 |
| 911d6dcce1 |
| 5f6a9850eb |
| de18bf06c3 |
| 73473d671d |
| 3fbab7c3af |
| 521cccaed6 |
| 35da3408dc |
| c03096e806 |
| 2afc947d6c |
| 076da649cf |
| 55f8303dc2 |
| 93ced0959c |
| 6f13fa50bf |
| 3bface12e0 |
| b584c8e353 |
| 6caf87df2d |
| 4d1f2c2bc1 |
| 0b1175b46c |
| 4e50dabc56 |
| ce45a5926a |
| c540a4f257 |
| 7af94c172f |
| 7556587e35 |
| a0030b27e2 |
| 8080e05444 |
| af11879545 |
| c89f1c9d95 |
| b7ac599a42 |
| 8363877c66 |
| 4bcb4b5b9c |
| 68302e14b9 |
| c1abc7a7f1 |
| 484717d57d |
| b91c608fcf |
| 8f8ece2b34 |
| 9b5c1c01e9 |
| 816a1d446e |
| 19bfd5beca |
| 9ac7e051b3 |
| 80b1d51f76 |
| 6340ebb36d |
| 70721afa51 |
| 9c31622598 |
| f372b0907b |
| fac96e0b08 |
| 2bc53f9868 |
| e8106befe9 |
| 83412b813f |
| b56d497543 |
| dd40962288 |
| b7eebad2a5 |
| 8f8698fd02 |
| 092f716f12 |
| c38f48c7f2 |
| 98837bc379 |
| 0f243bb6ec |
| 88fc1bbe32 |
| d5ef0e5744 |
| 2ecac38f6d |
| e90557d7dc |
| 628893fd5b |
| a81e4c3362 |
| ca7b89c308 |
| 03335cc015 |
| 08557ae287 |
| 08d2a6242d |
| 4b481bd405 |
| 0b1e3b2a7f |
| dbd9873450 |
| c6d0a17669 |
| adeab10f6d |
| 824f852ed7 |
| 284c1be85f |
| 7ad6baf483 |
| f1638faa3a |
| dea786abfa |
| f96b82b112 |
| 95fe11c6b4 |
| f2f688b9b8 |
| 0139e18271 |
| 657995d744 |
| 4057238185 |
| fb07ff65c9 |
| dbc56ffee7 |
| ee696be51d |
| 5d3fd3d389 |
| fa063722e1 |
| f5cc35509b |
| d39c8fae54 |
| d3b81babec |
| f35f6bd4c8 |
| d5cfef94a3 |
| f37f5ba97b |
| 42818a9950 |
| e85c3e5b27 |
| a39c88c7b4 |
| 73ebf5256a |
| 6d31cd2a41 |
| 5600fed9c4 |
| 6920b77b4a |
| ccd6b3914c |
| c4723263a4 |
| 4581a46529 |
| 33a2c5dcd8 |
| f6a8a21f94 |
| ff5773c837 |
| 66f8084916 |
| a2467d0418 |
| 3b0339a9b3 |
| fb1d4fdd3c |
| 56a16539ae |
| c0b2cf7388 |
| d9c58d9333 |
| ef3a52468f |
| c88b731793 |
| 2e85a28c02 |
| 964fef1aab |
| 1a832fa0a5 |
| 75bdc92bbf |
| a8c231ad8c |
| f396247838 |
| e3ea4776c7 |
| 37a928b065 |
| 85c269e697 |
| 6e70268ab9 |
| fb8929ea37 |
| 5d9c0dd8b5 |
| debf093c54 |
| 00b5a5cc0c |
| dbb99d0367 |
| 7702f05756 |
| 2c635bce62 |
| 48713a4e7b |
| e831354401 |
| 55c5ea5c4c |
| 1fd5165079 |
| 949cea95f4 |
| 12762e08ef |
| 62bdb2627a |
| 386be4e746 |
| dfcf7a436f |
| d9ec556061 |
| 876d860488 |
| 88651219a6 |
| a655f8dc04 |
| e98b8566c9 |
| ef10794e3b |
| 0d034e7adc |
| 59097070ef |
| 77b5aa4369 |
| 0d7c331ff0 |
| 1c1a1a1d3f |
| 47efcfd6e2 |
| 15a0b959aa |
| ca49943a7f |
| ee4ca0d7a2 |
| 0d634f3b8e |
| f68ac23f0e |
| 825c4a9adb |
| 2a2b86ebe6 |
| 74dfc25376 |
| 81ee60e658 |
| fcb6a47e8c |
| 49fd84a3a7 |
| 58b565558d |
| 185fb38b2d |
| 550b3ee92d |
| 29a87fd166 |
| f90d40b471 |
| 4344988abe |
| 979147a111 |
| 66725b11b3 |
| 19f9c4e389 |
| bd11d59c15 |
| 15155613c3 |
| b5f656ae58 |
| 7177392adc |
| ea7715f729 |
| 0b9ebefdb6 |
| 19e66604d0 |
| 1e6161f89c |
| a8c287c491 |
| 2c4f5f0a91 |
| 8e7c487cb0 |
| 3d4f3a04a3 |
| ce063cf435 |
| a072e34cfe |
| d19c1a514b |
| b415810485 |
| 3cfd710756 |
| 382cd24a57 |
| b1beb617b3 |
| 91f8b1fef7 |
| ca6e2b8e22 |
| 8af3158ea7 |
| 8b011b8d7e |
| f9e9b825cf |
| 3992ef1082 |
| 556fdfdcf9 |
| f4490fab58 |
| 5aaf44ebb2 |
| deb140e38e |
| 3de6454d4f |
| d57cc9627f |
| 8ce19a93b9 |
| d315b95d77 |
| 6172816f61 |
| 03826dd32c |
| b7a4f20c61 |
| 02b4d37c11 |
| f8ce039065 |
| e2d0b8f4c7 |
| 8a3061fe3e |
| c594168b65 |
| 31fdf15ce1 |
| 6b2219b7f2 |
| 64144b4759 |
| 6e97c39f58 |
| 026023fd0a |
| d7ee89542a |
| 6fac6eebce |
| 3c3497c2fd |
| fcc72a4f4e |
| 28dea9be58 |
| 0cc57fc240 |
| 17518b90ca |
| d9edff38df |
| 300d8436a8 |
| 1c4d36760a |
| 34a5985311 |
| aa0163349b |
| 572b8d08d9 |
| cc6cb346e7 |
| b54632080e |
| 44d3468f65 |
| 9d4668f4d3 |
| da2ac4776e |
| 9796354b48 |
| aa32eb9c0e |
| 4771810361 |
| 52f27c00ce |
| ab9ec2ec6b |
| 4d7835612d |
| 8076ea0e0a |
| 320ae641b1 |
| b4aec9a9d0 |
| 6af0308482 |
| 08024c7511 |
| 3a56058f7f |
| 795de7ab07 |
| 8803ad4018 |
| 62a8024c6c |
| ea253726a0 |
| a0af25c314 |
| e3a0847867 |
| 7345d2c5a6 |
| 7cbc3a83c6 |
| 427b1e4524 |
| 2dbbe8dec4 |
| e76c2c95a9 |
| 51862e5803 |
| 27ad84ebd9 |
| 67645a662d |
| 1d16f6b7ed |
| 5b45c80a62 |
| 6dec9b4cf7 |
| 13062099b3 |
| 7250fb1188 |
| 437d0028db |
| 1ef9509aac |
| d606f259d1 |
| c8870eae65 |
| 2419066dc8 |
| e430de88d3 |
| c4c38f58cb |
| 26b5a39912 |
| eb03858230 |
| 2643da978b |
| 649f52dac7 |
| 927fe91f25 |
| 9d6f6c7893 |
| 28e40e42b3 |
| 6c71c45ef6 |
| 641899ad56 |
| d120326cb9 |
| a4f2d8f327 |
| ae323cf385 |
| 788c31014d |
| 154dc605ef |
| 2a0e33401d |
| 79b4d7b6b6 |
| 986cbb129a |
| 950c68783c |
| cec75ba475 |
| 26cb8d43e1 |
| a1291e43c3 |
| 45fd07cdf8 |
| fecd674fdb |
| dff2de4527 |
| 19e1aaa1a6 |
| 074d063fee |
| 6ed82d7b29 |
| ea4cf42913 |
| 8a34f5621c |
| 823ff7ce11 |
| fb8456b4a6 |
| c864fec70c |
| a74fee4cd0 |
| 3a99624eb8 |
| 656bf60fda |
| cdc47cb1cd |
| 01a68568a6 |
| 2ad87bf1fe |
| eca2a4a9c8 |
| dff609d829 |
| b916465b06 |
| 0567b93534 |
| ad9fdf064b |
| 77e2117051 |
| 5b7b6e5fb8 |
| c7845bdf56 |
| 5a5a1e86ac |
| 796eefc2f0 |
| 1862deaf5e |
| 0d2e5e0065 |
| 5dc098f0fc |
| af681881e6 |
| 47dc911b79 |
| 6d2ecce9b7 |
| 326c59bb26 |
| c1257f1c13 |
| 2eee617788 |
| 70ef8092a7 |
| 8364b2a730 |
| cb7dea1295 |
| 1da88b70ac |
| b4817fa062 |
| bc24227732 |
| 2e70d157e2 |
| 5e2e5b2724 |
| dcc1f656ee |
| 23da1f6ee9 |
| bee8c2c1ce |
| 4ebe070cd8 |
| a5e89c0854 |
| a25e43db8f |
| b997e44715 |
| 1e48955376 |
| 5056ec526b |
| 2431d7b028 |
| d2fa77ae10 |
| 445fb31634 |
| 5aa611302a |
| 554a203541 |
| be1758aea7 |
| 38f74d2e9e |
| 5b966b83a9 |
| a67f0d93a0 |
| 3f73b14a6a |
| e57d1a5fb5 |
| f689cfcd0a |
| 26c6a98b45 |
| 45c344e3fa |
| 7b905f5a94 |
| 6d5ff59657 |
| 7f12d4c815 |
| b50789a77c |
| bdf1b45a07 |
| 3d4fd59a15 |
| 91c9f26a0c |
| 6cbbb81cad |
| 442a717d75 |
| fa3522a233 |
| bbc374b56e |
| 9ae6fc5666 |
| 5fe8c6a95f |
| 2929877042 |
| 8035740d2b |
| 4f8aaba6c6 |
| e9b1263249 |
| 3b2d3573d8 |
| e960ae66a3 |
| 093cbc5ebc |
| f663ef8c16 |
| de9f6425f9 |
| 33d1170a91 |
| 240ffc0862 |
| 0822a46e94 |
| 1be3e6204d |
| 956ae7a7ae |
| 3285ae9366 |
| 47ced60243 |
| 72b2503b49 |
| 58ebbfba20 |
| e164d15ec6 |
| 3efdb4e532 |
| 854799fabb |
| 47ba4c0f57 |
| 10c8e4203c |
| 05c69925c9 |
| 252d9a5320 |
| 7122709bbf |
| f7223f132a |
| 8cd75902f2 |
| c71af9127b |
| e6f45161c1 |
| fe2edeb2fb |
| 6294f751ee |
| de0af58cf8 |
| 84abfa6881 |
| 6b60e85a33 |
| 63f3e23e2b |
| ad1cda1746 |
| 66563a4da0 |
| d0e140cf7b |
| 87c6d0a820 |
| eb1249618b |
| cef9bb1487 |
| 9a25d3c30f |
| 9a4a85e3ae |
| bb49944b96 |
| 72db369fbb |
| 84db82852c |
| fcc4050f86 |
| 9c83a52c6d |
| ea4750d8ad |
| e9944796c8 |
| 4d6124f982 |
| dd168e1cca |
| ddf662bdb4 |
| fadb6e2aa9 |
| 192d91565d |
| 82ef4c96c3 |
| a6e8b25969 |
| 529bc01d69 |
| a663bf63c6 |
| 11055b1d32 |
| fd9a91420d |
| 529c8d7cf2 |
| 13ccb58f84 |
| 086ebe1a7c |
| 29c964cca1 |
| f2c3c928fc |
| 3cbc29e767 |
| 89cb8b79fd |
| b5c5c7857b |
| ed05d51969 |
| 2c7eb3c755 |
| cc17de2184 |
| b424426298 |
| 03f9160fcc |
| d50de804a8 |
| 983ef362e9 |
| d88c1a5e0a |
| 44afa55274 |
| ab832e4bb2 |
| 3c3ed8b5c5 |
| c9d9979f22 |
| 383420b554 |
| 73b5bb1a75 |
| 59a02635ed |
| 13a6520a8c |
| 4b7f826a2a |
| 0bd0c7b1ec |
| 428fe787c4 |
| 1b3a0a3de8 |
| 96ec285241 |
| 75b5e16696 |
| 8a0a435700 |
| e53e7768a0 |
| 36573d6005 |
| aa0c589361 |
| bef408b944 |
| 691b02a15e |
| fc1c41e5a4 |
| 58ddd55123 |
| 685b80e519 |
| 5a401af1fd |
| 25d63f7516 |
| d402943403 |
| 64c43b1a55 |
| a237ef5d96 |
| 6c5e0579cf |
| 4ac04a1a46 |
| 746128e37b |
| fe81ffaf78 |
| 1f6eb9cdc3 |
| c48da45ac3 |
| 5cc358de4e |
| 406dda6051 |
| 229a989914 |
| 6c6647629c |
| 610dd2c08d |
| 506bbd314d |
| 7f9bc1fc0f |
| 8d9aae1983 |
| 4caa6a10b5 |
| 665b7197a6 |
| 3854620bcd |
| 67a57e9df7 |
| 4b79623ce3 |
| ff28476191 |
| 8cc4d2668e |
| dbfb178556 |
| c4994a208b |
| eedea2ca88 |
| de6ea29e3b |
| bb983d4ba2 |
| 5e8b16569f |
| c014508519 |
| f5e42e73af |
| fcfbb1e857 |
| 911ee579a9 |
| a6ff92b099 |
| d73ba7dd3e |
| 04ddcd5c93 |
| af29ae1968 |
| fbd3cff90d |
| 0ed9894b7e |
| a54a72c269 |
| 5b81a73e58 |
| 49ccd86c39 |
| f514e5e9bb |
| 3955587372 |
| 6b28dc72e8 |
| ca7253a589 |
| af53dcda1b |
| 55cf90f477 |
| c269fb90ac |
| 1250a728ac |
| 68e016090b |
| fd689a4607 |
| ae09869417 |
| 1c5f02ade2 |
| ed97aa4e78 |
| 7124f04138 |
| 2ab9cbba61 |
| 4097e1d81a |
| d3bd56b131 |
| e9e61ea2d8 |
| 86b984001d |
| 2206553e03 |
| fa7f8104c8 |
| bd5fe43285 |
| d38051e806 |
| daa5342986 |
| c48436ccbf |
| 7aa00faa6c |
| 6217a7b9a9 |
| d67ebabc95 |
| b9474b9352 |
| 376efab40c |
| 65274e89d7 |
| acf29a6c9c |
| 721e04f793 |
| 00cea6fb80 |
| 433309ef1a |
| cbc95d0b30 |
| 21f86be8ee |
| 8e38c3763e |
| ca807bd6bd |
| 735cfb4c29 |
| 6202090836 |
| 436cbc1f06 |
| 40b08d737c |
| 4c5b42b898 |
| 7a6b72ebac |
| f72cbd5f23 |
| 1d7e47f589 |
| 49d5fa95a2 |
| 204f44449a |
| 6046848ee7 |
| b0aee238b1 |
| d8ac3f1292 |
| 8788b34c8a |
| 784ec54795 |
| 54fce4bf8f |
| c4ebe25bab |
| 7b4e207329 |
| 5ec3b834fb |
| 7668fa1396 |
| 470b0e4bf6 |
| d3f163bf9e |
| 4b31632dfc |
| c2f7f7e3a5 |
| 07cb0c7d46 |
| 14c824143b |
| c75c411426 |
| a7fab380b4 |
| a9517e1291 |
| 1017838cfc |
| 1d221a574b |
| a35bfc4822 |
| 7c64fc8c05 |
| f66cca96ce |
| 12da7db22c |
| 1b8584d4bb |
| 9c59f42c16 |
| fb5eea8284 |
| 9db9af27ae |
| 0f50a265cf |
| 3e05c04aa1 |
| 8f8896c505 |
| 941a841da0 |
| 13105c4ab3 |
| dc27bbb01d |
| 2b8a051525 |
| 1c7cc8dd3b |
| 58d081eed1 |
| 9078b2bad2 |
| 8889c974b8 |
| f615a73120 |
| 66844af1c2 |
| a0b7d89eb6 |
| c31e459c2b |
| b863060df1 |
| d96d696c35 |
| 105eadf111 |
| ca57c20691 |
| c4767bfdc8 |
| 0de1f76139 |
| 5f4a0fdfad |
| 18f95e867f |
| ed6137a76a |
| c3f02a698e |
| db106f8ca1 |
| c712529cf6 |
| 976ddd3982 |
| 64748b98ad |
| 3335612365 |
| 513273c8c3 |
| 0dfde3c9f2 |
| 0efdcfcb52 |
| fbdcc53fe0 |
| 8e47cc73a5 |
| 639bf05081 |
| c1b5ef0891 |
| a8f25150f6 |
| 1ee2a6d37b |
| f64d9224fb |
| 4e142e0212 |
| c9bf1c86c6 |
| 82830c8173 |
| 7f5741c43b |
| 643d4831c1 |
| b032eed22a |
| 1b49c8540e |
| f7534a0ae3 |
| b6187ab769 |
| 780ad9eb10 |
| 283939b18a |
| e25bc8efe4 |
| 3b112e20e3 |
| 26abe90671 |
| 23a6c4adb6 |
| 2f03cbf115 |
| a678a5a416 |
| b2b54ccf60 |
| 55e71c867c |
| 6c2437f8ef |
| 261f2cbaf7 |
| f083558666 |
| 505eeea66a |
| 1001aa665d |
| 7f488422b0 |
| f17d8d3369 |
| ff777560ac |
| 2c68fd6311 |
| c1bf710e46 |
| 9d2b40f366 |
| 3aea2dec85 |
| 65f6b7022c |
| e5a3a54aea |
| be88dbe181 |
| b64ed5535e |
| 5be56703e9 |
| 0c7ba62867 |
| d9d851040c |
| e747a4e3fe |
| 000d15a53c |
| cc2164221c |
| 102c3271d1 |
| 32b8649c77 |
| 9c5ba92589 |
| d2c9e0ea4a |
| 2928b71616 |
| 216b8c01bf |
| ce0c9f846d |
| ba262ee01a |
| b571eeb8e6 |
| 7fe377f899 |
| d57f773072 |
| 389357ad2b |
| e2caf4668e |
| 63a58efba4 |
| bbcd3116f7 |
| 9c12aa261e |
| cc0f4847ba |
| 923b80ba60 |
| 7c4ea8a58e |
| 20bd9a9701 |
| 49fa800b2b |
| 446f1ffdf5 |
| b658c82cdc |
| 2168698595 |
| 8cf29682bb |
| 86dc7cc804 |

`.github/.gitleaks.toml` (vendored, 2 changed lines)

```diff
@@ -536,7 +536,7 @@ secretGroup = 4
 
 [allowlist]
 description = "global allow lists"
-regexes = ['''219-09-9999''', '''078-05-1120''', '''(9[0-9]{2}|666)-\d{2}-\d{4}''', '''RPM-GPG-KEY.*''', '''.*:.*StrelkaHexDump.*''', '''.*:.*PLACEHOLDER.*''']
+regexes = ['''219-09-9999''', '''078-05-1120''', '''(9[0-9]{2}|666)-\d{2}-\d{4}''', '''RPM-GPG-KEY.*''', '''.*:.*StrelkaHexDump.*''', '''.*:.*PLACEHOLDER.*''', '''ssl_.*password''']
 paths = [
 '''gitleaks.toml''',
 '''(.*?)(jpg|gif|doc|pdf|bin|svg|socket)$''',
```
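The added `'''ssl_.*password'''` entry extends the global allowlist, so a finding whose text matches it is no longer reported. To get a feel for what the allowlist now accepts, the patterns can be exercised with Python's `re` module. This is a sketch under two assumptions: each regex is applied as an unanchored search (like `re.search`) to the text gitleaks flagged, and the Go RE2 syntax gitleaks uses behaves like Python's `re` for these simple patterns. The `is_allowlisted` helper and the sample strings are hypothetical.

```python
import re

# Global allowlist regexes from the updated .github/.gitleaks.toml.
# TOML literal strings ('''...''') carry no escapes, so Python raw strings match them 1:1.
ALLOWLIST_REGEXES = [
    r"219-09-9999",
    r"078-05-1120",
    r"(9[0-9]{2}|666)-\d{2}-\d{4}",
    r"RPM-GPG-KEY.*",
    r".*:.*StrelkaHexDump.*",
    r".*:.*PLACEHOLDER.*",
    r"ssl_.*password",  # entry added by this change
]

COMPILED = [re.compile(p) for p in ALLOWLIST_REGEXES]


def is_allowlisted(finding_text: str) -> bool:
    """Return True if any allowlist regex matches anywhere in the text.

    Assumption: matching is an unanchored search, as with re.search.
    """
    return any(rx.search(finding_text) for rx in COMPILED)


if __name__ == "__main__":
    # Hypothetical sample strings, for illustration only.
    samples = [
        "ssl_keystore_password",  # matches the new ssl_.*password entry
        "ssl_password",           # ".*" may also match an empty string
        "db_password",            # no ssl_ prefix, so not allowlisted
        "666-12-3456",            # matches the (9[0-9]{2}|666) pattern
    ]
    for s in samples:
        print(f"{s!r}: {is_allowlisted(s)}")
```

Because `.*` is greedy but unanchored, the new entry also accepts longer names such as `ssl_truststore_password`; a narrower pattern would be needed to allowlist only one specific setting.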

`.github/workflows/close-threads.yml` (vendored, 1 changed line)

```diff
@@ -15,6 +15,7 @@ concurrency:
 
 jobs:
   close-threads:
+    if: github.repository_owner == 'security-onion-solutions'
     runs-on: ubuntu-latest
     permissions:
       issues: write
```

`.github/workflows/lock-threads.yml` (vendored, 1 changed line)

```diff
@@ -15,6 +15,7 @@ concurrency:
 
 jobs:
   lock-threads:
+    if: github.repository_owner == 'security-onion-solutions'
     runs-on: ubuntu-latest
     steps:
       - uses: jertel/lock-threads@main
```

```diff
@@ -1,17 +1,17 @@
-### 2.4.60-20240320 ISO image released on 2024/03/20
+### 2.4.100-20240903 ISO image released on 2024/09/03
 
 
 ### Download and Verify
 
-2.4.60-20240320 ISO image:
-https://download.securityonion.net/file/securityonion/securityonion-2.4.60-20240320.iso
+2.4.100-20240903 ISO image:
+https://download.securityonion.net/file/securityonion/securityonion-2.4.100-20240903.iso
 
-MD5: 178DD42D06B2F32F3870E0C27219821E
-SHA1: 73EDCD50817A7F6003FE405CF1808A30D034F89D
-SHA256: DD334B8D7088A7B78160C253B680D645E25984BA5CCAB5CC5C327CA72137FC06
+MD5: 856BBB4F0764C0A479D8949725FC096B
+SHA1: B3FCFB8F1031EB8AA833A90C6C5BB61328A73842
+SHA256: 0103EB9D78970396BB47CBD18DA1FFE64524F5C1C559487A1B2D293E1882B265
 
 Signature for ISO image:
-https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.60-20240320.iso.sig
+https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.100-20240903.iso.sig
 
 Signing key:
 https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.4/main/KEYS
```
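The MD5, SHA1 and SHA256 values published with the ISO can be checked locally before the GPG step. A minimal sketch with Python's standard `hashlib`, assuming the ISO was saved under its published file name in the working directory; `sha256_of` and `matches_published` are illustrative helper names, not Security Onion tooling. The file is read in 1 MiB chunks so a multi-gigabyte image is never held in memory at once.

```python
import hashlib
from pathlib import Path

# SHA256 published for the 2.4.100-20240903 ISO in the updated download instructions.
PUBLISHED_SHA256 = "0103EB9D78970396BB47CBD18DA1FFE64524F5C1C559487A1B2D293E1882B265"


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash the file incrementally and return the lowercase hex digest."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published(path: Path, expected_hex: str = PUBLISHED_SHA256) -> bool:
    # hexdigest() is lowercase while the published value is uppercase,
    # so normalise the expected value before comparing.
    return sha256_of(path) == expected_hex.lower()


if __name__ == "__main__":
    iso = Path("securityonion-2.4.100-20240903.iso")  # assumed download location
    if iso.exists():
        print("SHA256 OK" if matches_published(iso) else "SHA256 MISMATCH: download again")
    else:
        print(f"{iso} not found")
```

A matching hash only shows the file is the one these instructions describe; the `gpg --verify` step is what ties it to the Security Onion signing key.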

````diff
@@ -25,27 +25,29 @@ wget https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.
 
 Download the signature file for the ISO:
 ```
-wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.60-20240320.iso.sig
+wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.100-20240903.iso.sig
 ```
 
 Download the ISO image:
 ```
-wget https://download.securityonion.net/file/securityonion/securityonion-2.4.60-20240320.iso
+wget https://download.securityonion.net/file/securityonion/securityonion-2.4.100-20240903.iso
 ```
 
 Verify the downloaded ISO image using the signature file:
 ```
-gpg --verify securityonion-2.4.60-20240320.iso.sig securityonion-2.4.60-20240320.iso
+gpg --verify securityonion-2.4.100-20240903.iso.sig securityonion-2.4.100-20240903.iso
 ```
 
 The output should show "Good signature" and the Primary key fingerprint should match what's shown below:
 ```
-gpg: Signature made Tue 19 Mar 2024 03:17:58 PM EDT using RSA key ID FE507013
+gpg: Signature made Sat 31 Aug 2024 05:05:05 PM EDT using RSA key ID FE507013
 gpg: Good signature from "Security Onion Solutions, LLC <info@securityonionsolutions.com>"
 gpg: WARNING: This key is not certified with a trusted signature!
 gpg: There is no indication that the signature belongs to the owner.
 Primary key fingerprint: C804 A93D 36BE 0C73 3EA1 9644 7C10 60B7 FE50 7013
 ```
 
+If it fails to verify, try downloading again. If it still fails to verify, try downloading from another computer or another network.
+
 Once you've verified the ISO image, you're ready to proceed to our Installation guide:
 https://docs.securityonion.net/en/2.4/installation.html
````
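The pass criteria in these instructions (a "Good signature" line and a primary key fingerprint equal to `C804 A93D 36BE 0C73 3EA1 9644 7C10 60B7 FE50 7013`) can be scripted. A sketch that runs `gpg --verify` through `subprocess` and inspects gpg's human-readable messages, assuming `gpg` is on `PATH`, the KEYS file has already been imported, and gpg prints English messages on stderr. The wording depends on locale and gpg version, and the fingerprint line shown in the sample output may not appear in every trust configuration, in which case this check fails closed. `signature_ok` and `verify_iso` are hypothetical helpers; for robust automation gpg's machine-readable `--status-fd` output is the better interface.

```python
import re
import subprocess

# Primary key fingerprint the instructions say the signing key must have (spaces removed).
EXPECTED_FPR = "C804A93D36BE0C733EA196447C1060B7FE507013"


def signature_ok(gpg_output: str, expected_fpr: str = EXPECTED_FPR) -> bool:
    """Return True if gpg reported a good signature from the expected primary key."""
    if "Good signature" not in gpg_output:
        return False
    m = re.search(r"Primary key fingerprint:\s*([0-9A-Fa-f ]+)", gpg_output)
    if m is None:
        return False  # fingerprint line missing: refuse rather than guess
    # gpg prints the fingerprint in groups of four; drop the spaces before comparing.
    return m.group(1).replace(" ", "").upper() == expected_fpr


def verify_iso(sig: str, iso: str) -> bool:
    # gpg writes its verification messages to stderr.
    proc = subprocess.run(["gpg", "--verify", sig, iso], capture_output=True, text=True)
    return proc.returncode == 0 and signature_ok(proc.stderr)


if __name__ == "__main__":
    print(verify_iso("securityonion-2.4.100-20240903.iso.sig",
                     "securityonion-2.4.100-20240903.iso"))
```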

13

README.md

13

README.md

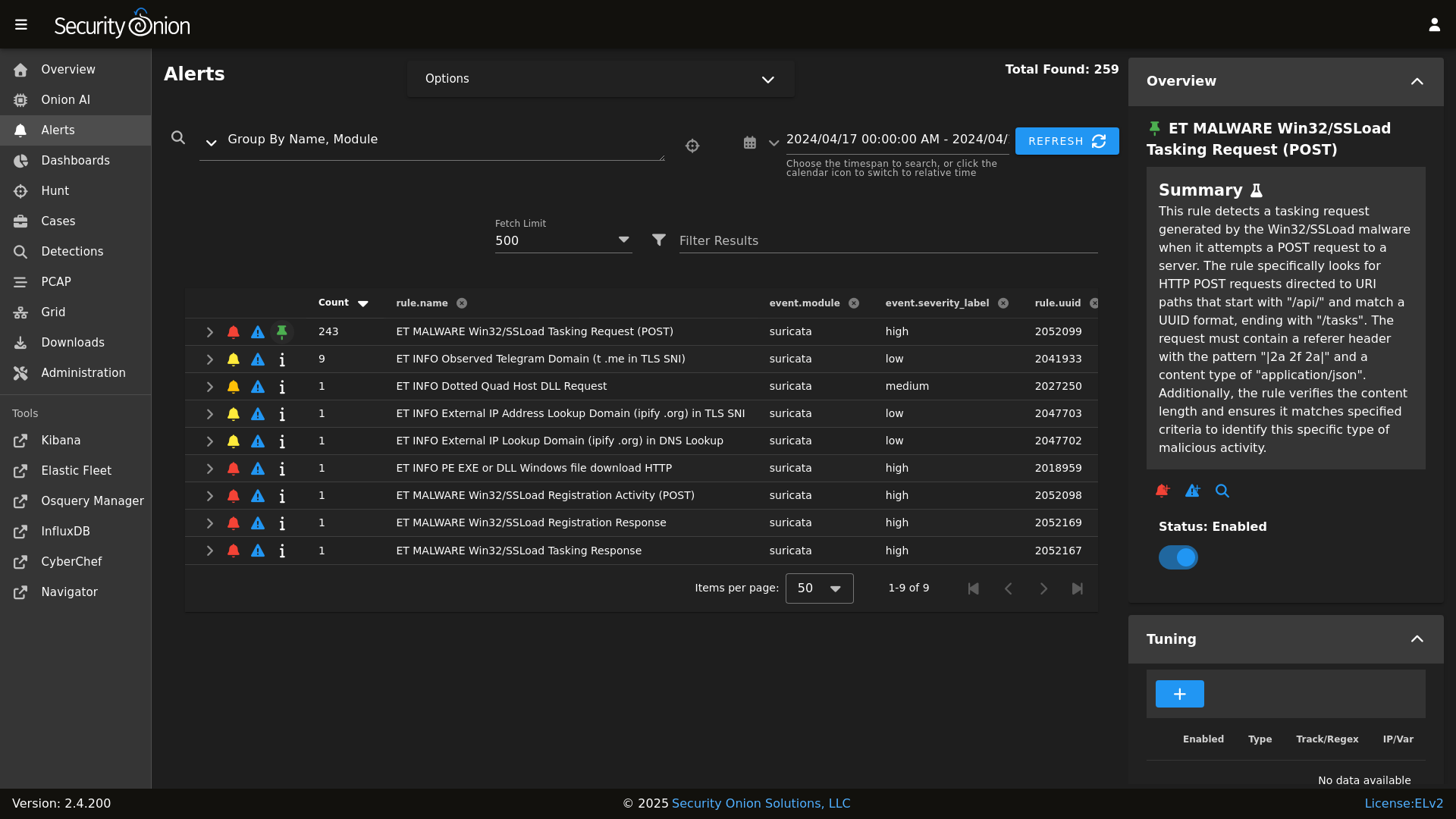

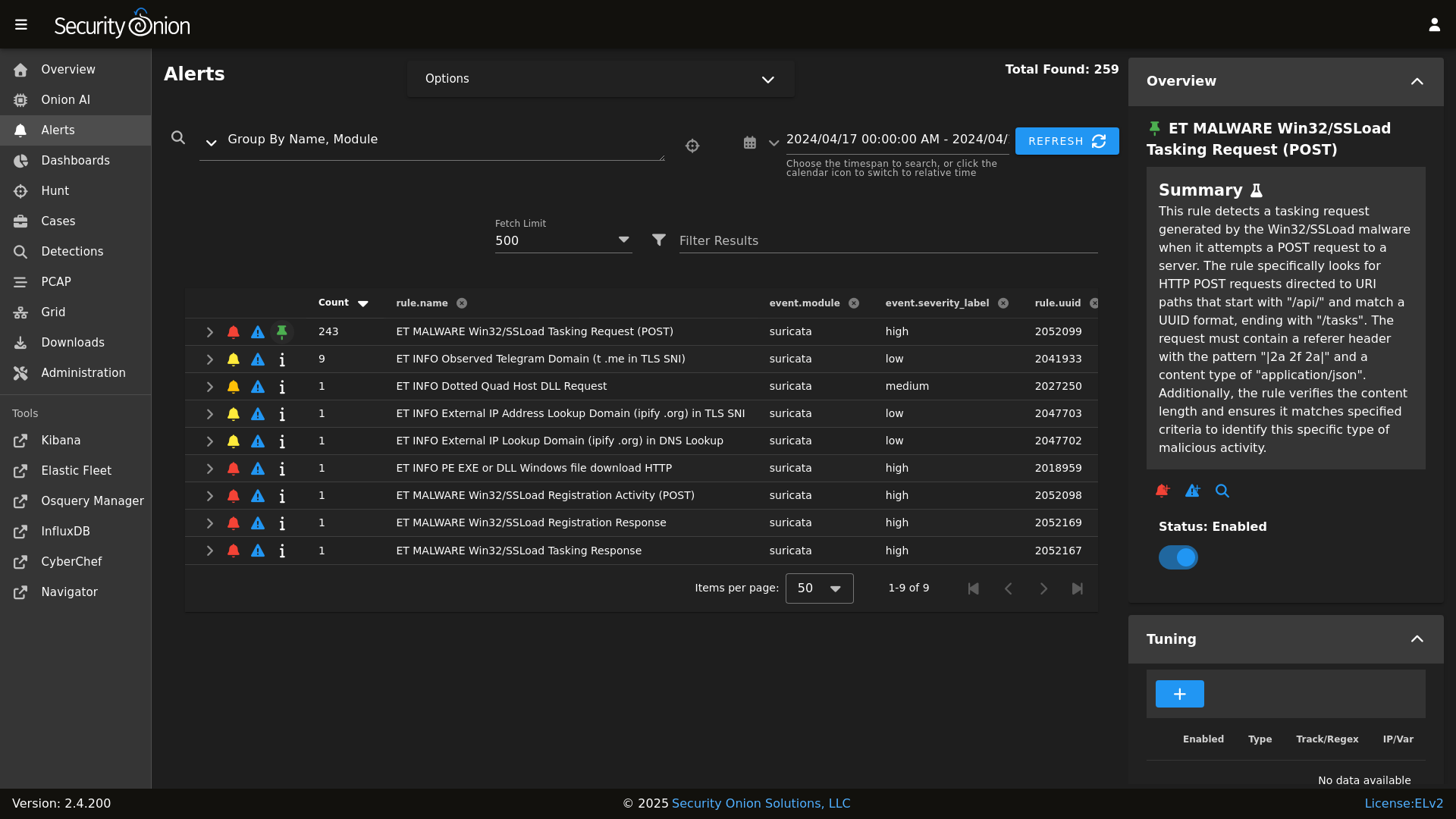

@@ -8,19 +8,22 @@ Alerts

|

|||||||

|

|

||||||

|

|

||||||

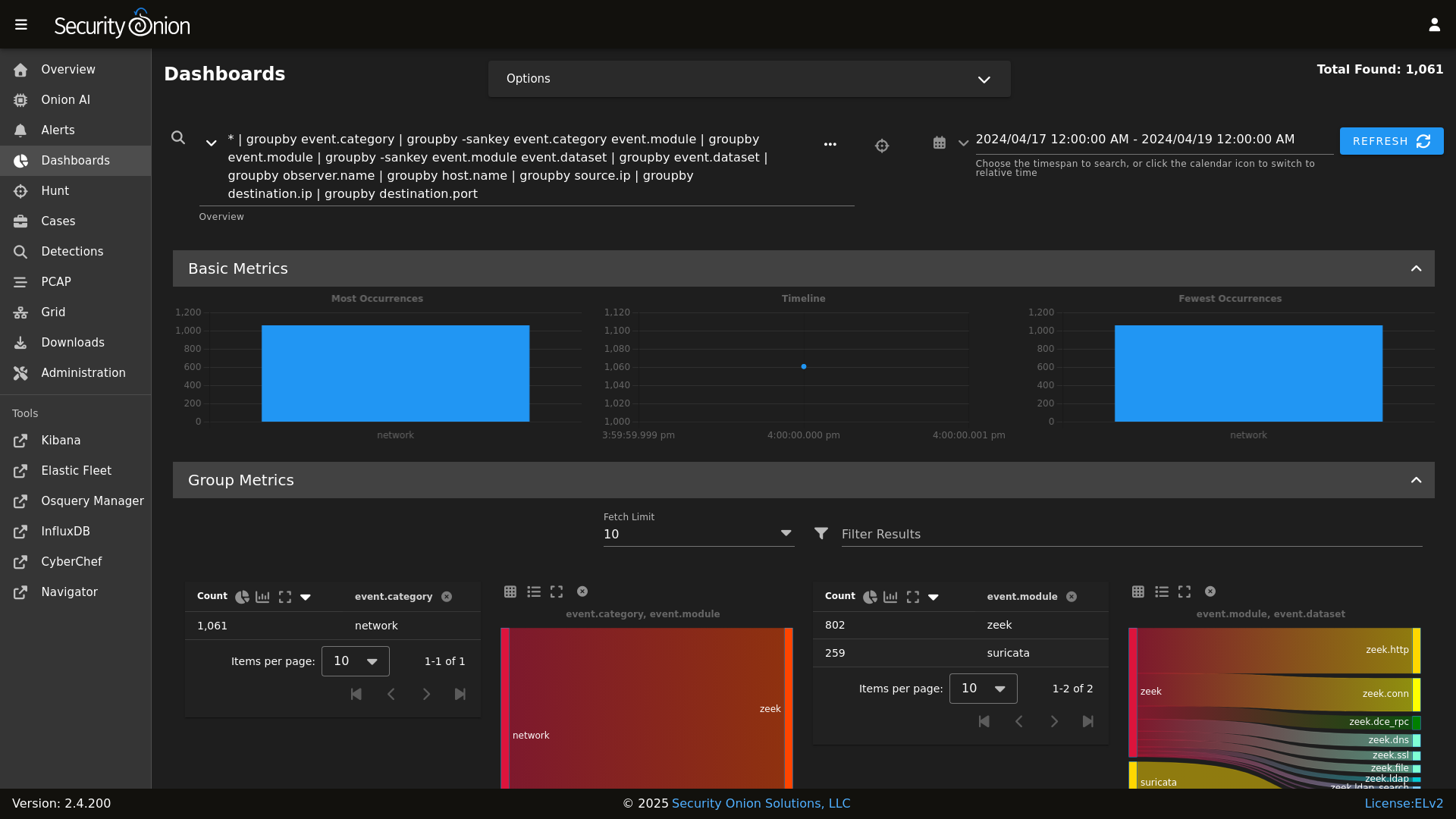

Dashboards

|

Dashboards

|

||||||

|

|

||||||

|

|

||||||

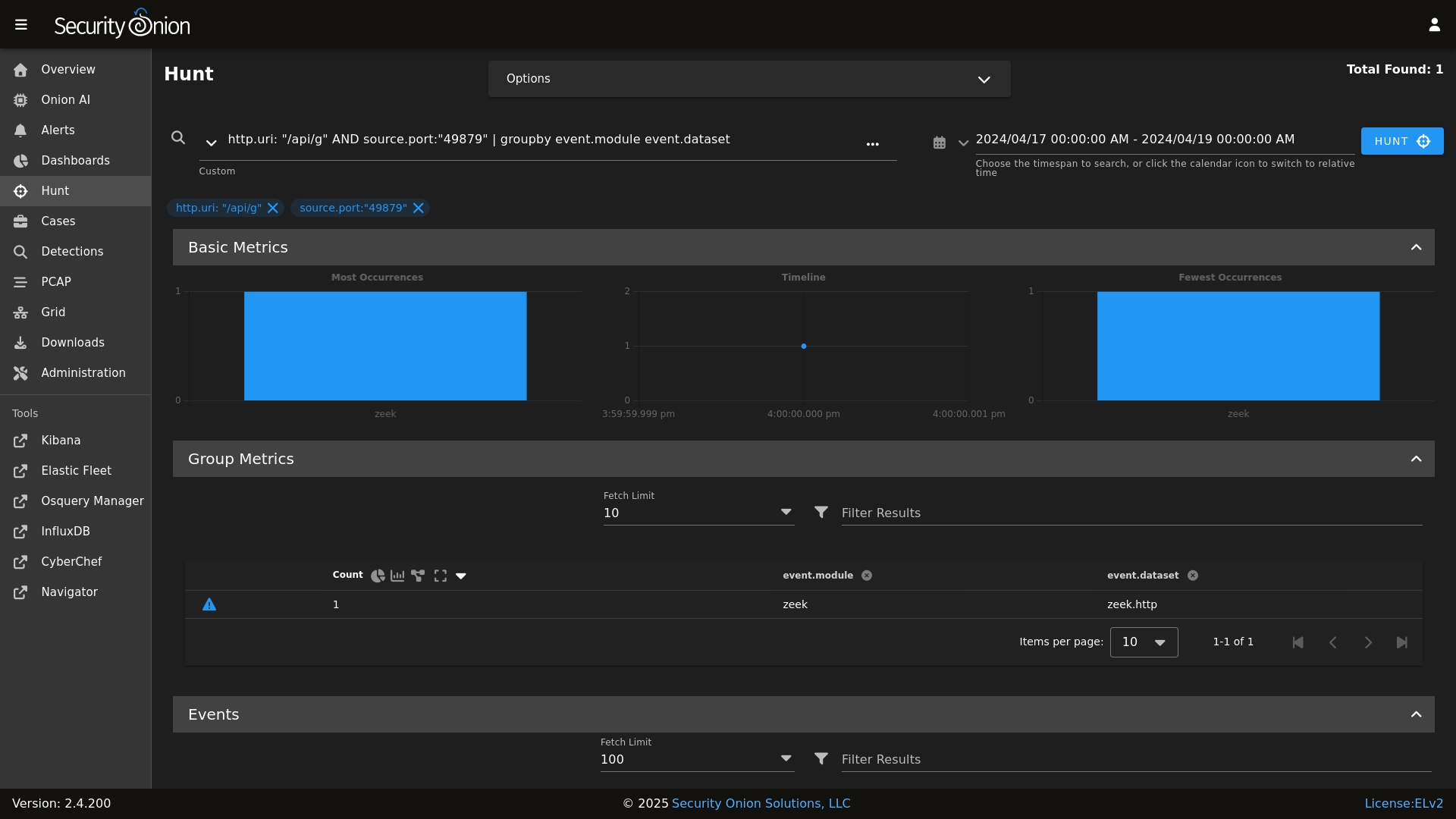

Hunt

|

Hunt

|

||||||

|

|

||||||

|

|

||||||

|

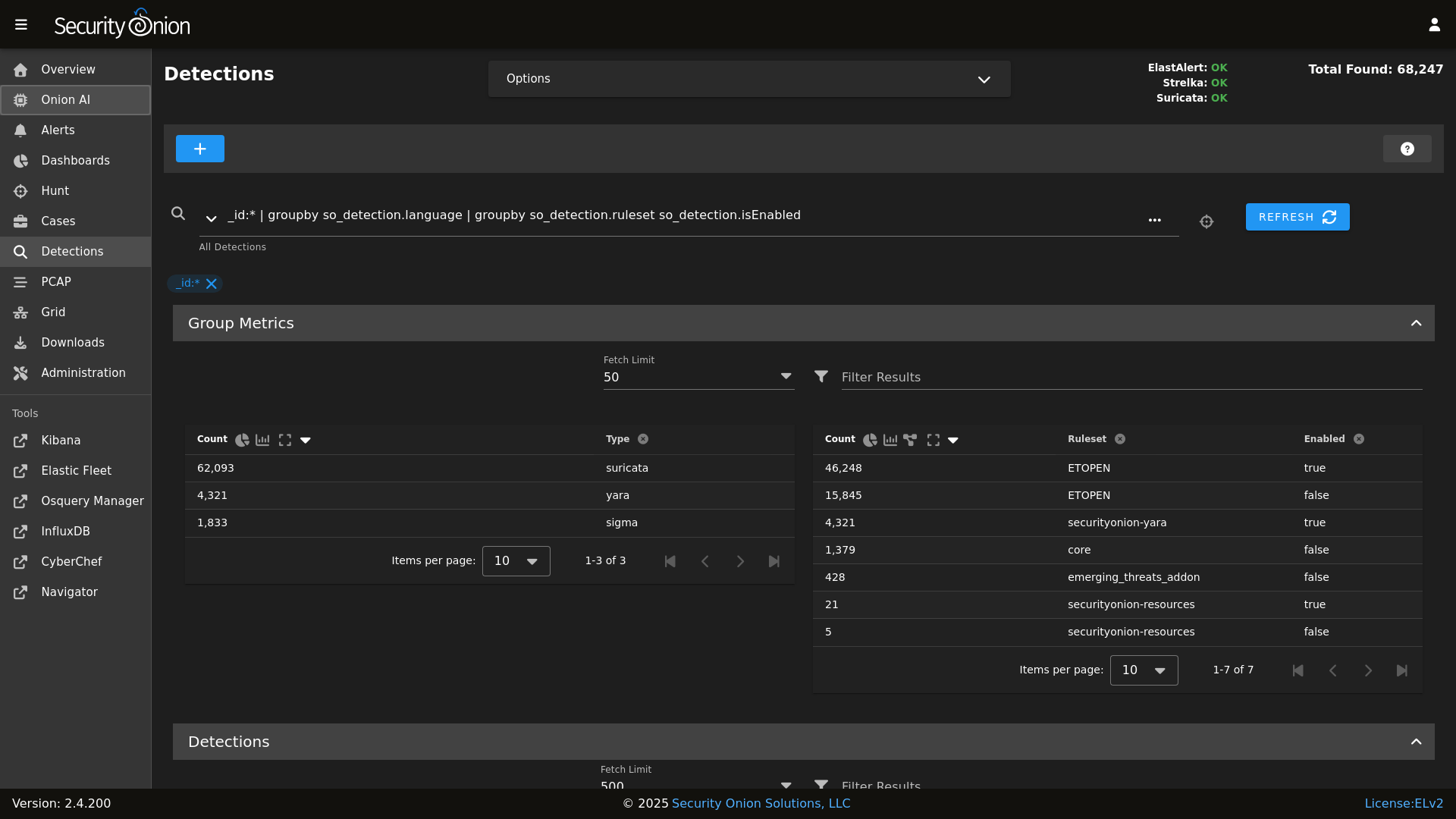

Detections

|

||||||

|

|

||||||

|

|

||||||

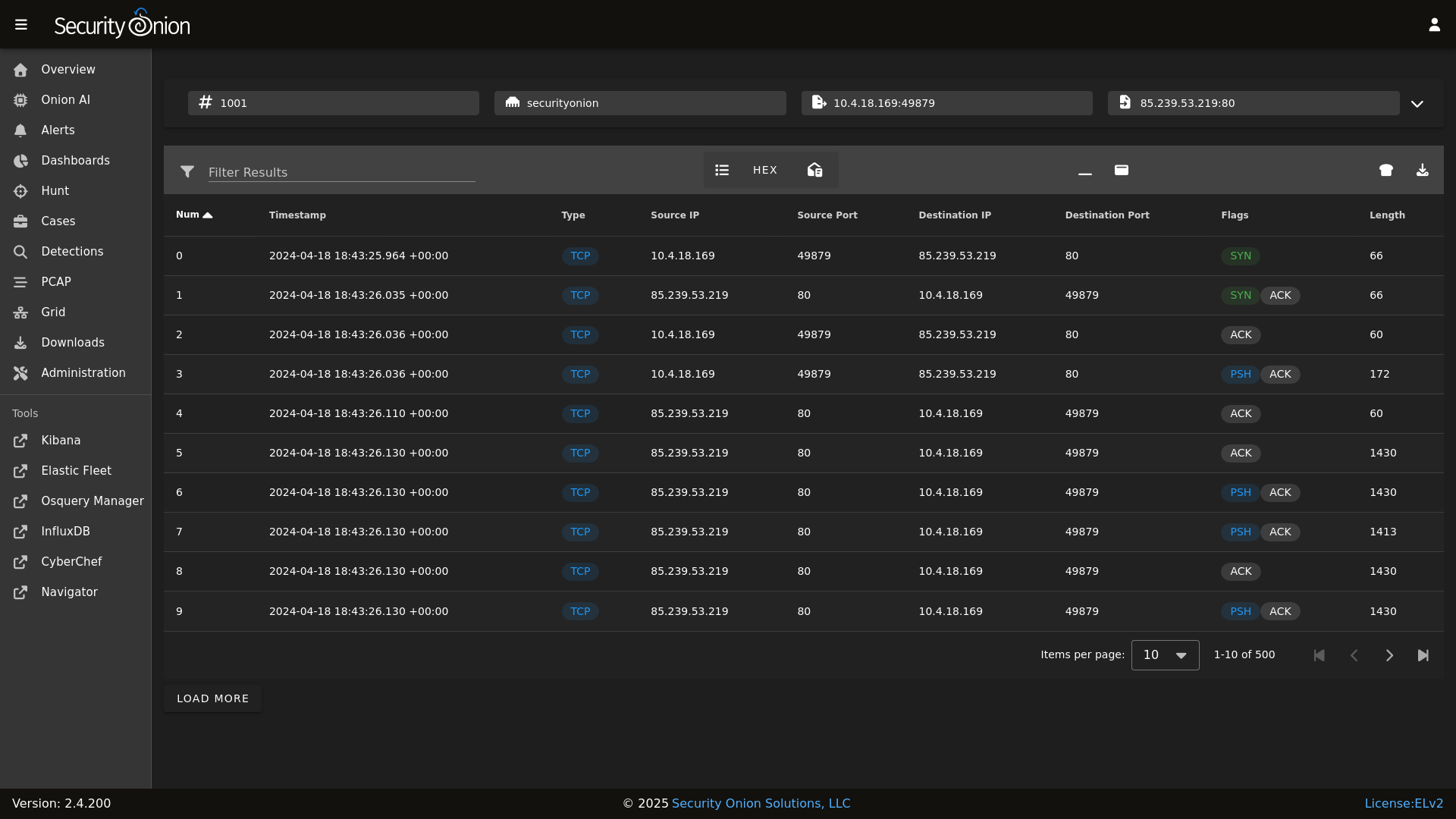

PCAP

|

PCAP

|

||||||

|

|

||||||

|

|

||||||

Grid

|

Grid

|

||||||

|

|

||||||

|

|

||||||

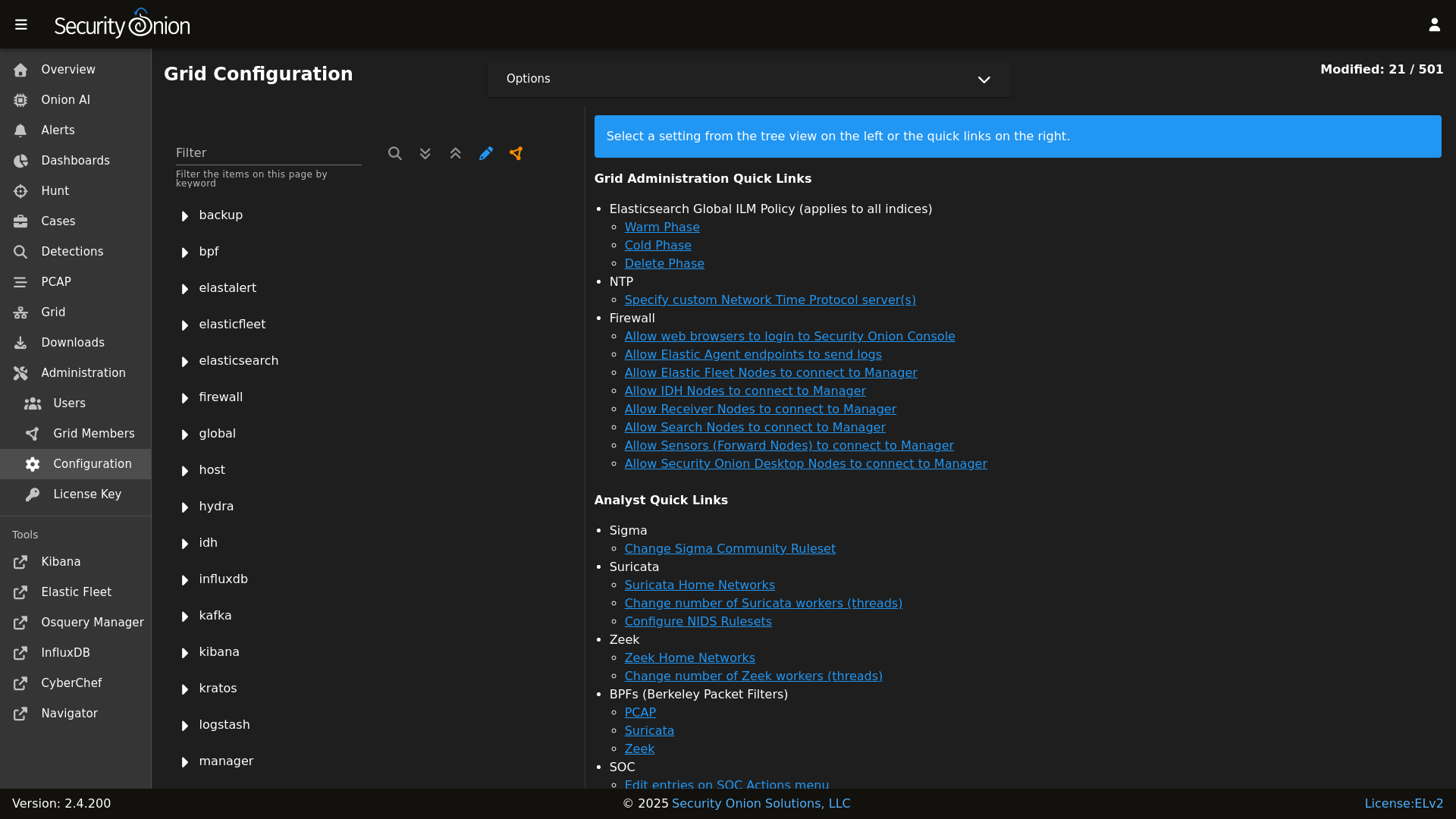

Config

|

Config

|

||||||

|

|

||||||

|

|

||||||

### Release Notes

|

### Release Notes

|

||||||

|

|

||||||

|

|||||||

```diff
@@ -5,9 +5,11 @@
 | Version | Supported |
 | ------- | ------------------ |
 | 2.4.x | :white_check_mark: |
-| 2.3.x | :white_check_mark: |
+| 2.3.x | :x: |
 | 16.04.x | :x: |
 
+Security Onion 2.3 has reached End Of Life and is no longer supported.
+
 Security Onion 16.04 has reached End Of Life and is no longer supported.
 
 ## Reporting a Vulnerability
```

@@ -19,4 +19,4 @@ role:

| Old | New |
|---|---|
| receiver: | receiver: |
| standalone: | standalone: |
| searchnode: | searchnode: |
| sensor: | sensor: |

34

pillar/elasticsearch/nodes.sls

Normal file

```diff
@@ -0,0 +1,34 @@
+{% set node_types = {} %}
+{% for minionid, ip in salt.saltutil.runner(
+    'mine.get',
+    tgt='elasticsearch:enabled:true',
+    fun='network.ip_addrs',
+    tgt_type='pillar') | dictsort()
+%}
+
+# only add a node to the pillar if it returned an ip from the mine
+  {% if ip | length > 0%}
+    {% set hostname = minionid.split('_') | first %}
+    {% set node_type = minionid.split('_') | last %}
+    {% if node_type not in node_types.keys() %}
+      {% do node_types.update({node_type: {hostname: ip[0]}}) %}
+    {% else %}
+      {% if hostname not in node_types[node_type] %}
+        {% do node_types[node_type].update({hostname: ip[0]}) %}
+      {% else %}
+        {% do node_types[node_type][hostname].update(ip[0]) %}
+      {% endif %}
+    {% endif %}
+  {% endif %}
+{% endfor %}
+
+
+elasticsearch:
+  nodes:
+{% for node_type, values in node_types.items() %}
+    {{node_type}}:
+{% for hostname, ip in values.items() %}
+      {{hostname}}:
+        ip: {{ip}}
+{% endfor %}
+{% endfor %}
```
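The template groups the minions returned by the Salt mine by the role suffix of their minion ID and keeps the first IP for each host. A minimal Python sketch of the same grouping, assuming minion IDs of the form `<hostname>_<role>` and a mine result shaped like `network.ip_addrs` output (minion ID mapped to a list of IP strings). `group_nodes` and `build_pillar` are hypothetical helpers for illustration, not part of Security Onion:

```python
from typing import Dict, List


def group_nodes(mine_result: Dict[str, List[str]]) -> Dict[str, Dict[str, str]]:
    """Group minions as {role: {hostname: first_ip}}, mirroring nodes.sls.

    Assumes minion IDs look like '<hostname>_<role>' and that each mine
    entry is a list of IP addresses, as returned by network.ip_addrs.
    """
    node_types: Dict[str, Dict[str, str]] = {}
    # dictsort() in the template walks minions in sorted key order.
    for minion_id, ips in sorted(mine_result.items()):
        # Only add a node if the mine returned at least one IP.
        if not ips:
            continue
        parts = minion_id.split("_")
        hostname, node_type = parts[0], parts[-1]
        # Keep the first IP; if a hostname repeats within a role, this
        # sketch keeps the first entry it saw (an assumption).
        node_types.setdefault(node_type, {}).setdefault(hostname, ips[0])
    return node_types


def build_pillar(service: str, node_types: Dict[str, Dict[str, str]]) -> dict:
    """Shape the grouping like the rendered pillar: <service>:nodes:<role>:<host>:ip."""
    return {
        service: {
            "nodes": {
                role: {host: {"ip": ip} for host, ip in hosts.items()}
                for role, hosts in node_types.items()
            }
        }
    }


if __name__ == "__main__":
    # Hypothetical mine output, for illustration only.
    mine = {
        "sn1_searchnode": ["10.0.0.11"],
        "mgr_manager": ["10.0.0.5", "172.17.0.1"],
        "sn2_searchnode": ["10.0.0.12"],
        "down_searchnode": [],
    }
    print(build_pillar("elasticsearch", group_nodes(mine)))
```

The `down_searchnode` entry is skipped because its IP list is empty, matching the `ip | length > 0` guard in the template.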

2

pillar/kafka/nodes.sls

Normal file

```diff
@@ -0,0 +1,2 @@
+kafka:
+  nodes:
```

```diff
@@ -1,16 +1,15 @@
 {% set node_types = {} %}
-{% set cached_grains = salt.saltutil.runner('cache.grains', tgt='*') %}
 {% for minionid, ip in salt.saltutil.runner(
     'mine.get',
-    tgt='G@role:so-manager or G@role:so-managersearch or G@role:so-standalone or G@role:so-searchnode or G@role:so-heavynode or G@role:so-receiver or G@role:so-fleet ',
+    tgt='logstash:enabled:true',
     fun='network.ip_addrs',
-    tgt_type='compound') | dictsort()
+    tgt_type='pillar') | dictsort()
 %}
 
 # only add a node to the pillar if it returned an ip from the mine
   {% if ip | length > 0%}
-    {% set hostname = cached_grains[minionid]['host'] %}
-    {% set node_type = minionid.split('_')[1] %}
+    {% set hostname = minionid.split('_') | first %}
+    {% set node_type = minionid.split('_') | last %}
     {% if node_type not in node_types.keys() %}
       {% do node_types.update({node_type: {hostname: ip[0]}}) %}
     {% else %}
```
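This hunk replaces the `cache.grains` hostname lookup with fields parsed from the minion ID, and switches the node type from `minionid.split('_')[1]` to `minionid.split('_') | last`. The two node-type expressions agree for IDs with a single underscore and differ only when an ID contains more than one. A small Python illustration; the IDs and helper names are hypothetical:

```python
def old_node_type(minion_id: str) -> str:
    # Old template: second field of the ID, minionid.split('_')[1]
    return minion_id.split("_")[1]


def new_node_type(minion_id: str) -> str:
    # New template: last field of the ID, minionid.split('_') | last
    return minion_id.split("_")[-1]


def new_hostname(minion_id: str) -> str:
    # New template: first field of the ID, minionid.split('_') | first
    return minion_id.split("_")[0]


if __name__ == "__main__":
    for mid in ("sensor1_sensor", "node_a_searchnode"):
        print(mid, old_node_type(mid), new_node_type(mid), new_hostname(mid))
```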

34

pillar/redis/nodes.sls

Normal file

```diff
@@ -0,0 +1,34 @@
+{% set node_types = {} %}
+{% for minionid, ip in salt.saltutil.runner(
+    'mine.get',
+    tgt='redis:enabled:true',
+    fun='network.ip_addrs',
+    tgt_type='pillar') | dictsort()
+%}
+
+# only add a node to the pillar if it returned an ip from the mine
+  {% if ip | length > 0%}
+    {% set hostname = minionid.split('_') | first %}
+    {% set node_type = minionid.split('_') | last %}
+    {% if node_type not in node_types.keys() %}
+      {% do node_types.update({node_type: {hostname: ip[0]}}) %}
+    {% else %}
+      {% if hostname not in node_types[node_type] %}
+        {% do node_types[node_type].update({hostname: ip[0]}) %}
+      {% else %}
+        {% do node_types[node_type][hostname].update(ip[0]) %}
+      {% endif %}
+    {% endif %}
+  {% endif %}
+{% endfor %}
+
+
+redis:
+  nodes:
+{% for node_type, values in node_types.items() %}
+    {{node_type}}:
+{% for hostname, ip in values.items() %}
+      {{hostname}}:
+        ip: {{ip}}
+{% endfor %}
+{% endfor %}
```

```diff
@@ -47,10 +47,12 @@ base:
     - kibana.adv_kibana
     - kratos.soc_kratos
     - kratos.adv_kratos
+    - redis.nodes
     - redis.soc_redis
     - redis.adv_redis
     - influxdb.soc_influxdb
     - influxdb.adv_influxdb
+    - elasticsearch.nodes
     - elasticsearch.soc_elasticsearch
     - elasticsearch.adv_elasticsearch
     - elasticfleet.soc_elasticfleet
@@ -61,6 +63,9 @@ base:
     - backup.adv_backup
     - minions.{{ grains.id }}
     - minions.adv_{{ grains.id }}
+    - kafka.nodes
+    - kafka.soc_kafka
+    - kafka.adv_kafka
     - stig.soc_stig
 
   '*_sensor':
@@ -144,10 +149,12 @@ base:
     - idstools.adv_idstools
     - kratos.soc_kratos
     - kratos.adv_kratos
+    - redis.nodes
     - redis.soc_redis
     - redis.adv_redis
     - influxdb.soc_influxdb
     - influxdb.adv_influxdb
+    - elasticsearch.nodes
     - elasticsearch.soc_elasticsearch
     - elasticsearch.adv_elasticsearch
     - elasticfleet.soc_elasticfleet
@@ -176,6 +183,9 @@ base:
     - minions.{{ grains.id }}
     - minions.adv_{{ grains.id }}
     - stig.soc_stig
+    - kafka.nodes
+    - kafka.soc_kafka
+    - kafka.adv_kafka
 
   '*_heavynode':
     - elasticsearch.auth
@@ -209,17 +219,22 @@ base:
     - logstash.nodes
     - logstash.soc_logstash
     - logstash.adv_logstash
+    - elasticsearch.nodes
     - elasticsearch.soc_elasticsearch
     - elasticsearch.adv_elasticsearch
     {% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/elasticsearch/auth.sls') %}
     - elasticsearch.auth
     {% endif %}
+    - redis.nodes
     - redis.soc_redis
     - redis.adv_redis
     - minions.{{ grains.id }}
     - minions.adv_{{ grains.id }}
     - stig.soc_stig
     - soc.license
+    - kafka.nodes
+    - kafka.soc_kafka
+    - kafka.adv_kafka
 
   '*_receiver':
     - logstash.nodes
@@ -232,6 +247,10 @@ base:
     - redis.adv_redis
     - minions.{{ grains.id }}
     - minions.adv_{{ grains.id }}
+    - kafka.nodes
+    - kafka.soc_kafka
+    - kafka.adv_kafka
+    - soc.license
 
   '*_import':
     - secrets
```

14

pyci.sh

```diff
@@ -15,12 +15,16 @@ TARGET_DIR=${1:-.}
 
 PATH=$PATH:/usr/local/bin
 
-if ! which pytest &> /dev/null || ! which flake8 &> /dev/null ; then
-  echo "Missing dependencies. Consider running the following command:"
-  echo " python -m pip install flake8 pytest pytest-cov"
+if [ ! -d .venv ]; then
+  python -m venv .venv
+fi
+
+source .venv/bin/activate
+
+if ! pip install flake8 pytest pytest-cov pyyaml; then
+  echo "Unable to install dependencies."
   exit 1
 fi
 
-pip install pytest pytest-cov
 flake8 "$TARGET_DIR" "--config=${HOME_DIR}/pytest.ini"
 python3 -m pytest "--cov-config=${HOME_DIR}/pytest.ini" "--cov=$TARGET_DIR" --doctest-modules --cov-report=term --cov-fail-under=100 "$TARGET_DIR"
```

```diff
@@ -65,6 +65,7 @@
     'registry',
     'manager',
     'nginx',
+    'strelka.manager',
     'soc',
     'kratos',
     'influxdb',
@@ -91,6 +92,7 @@
     'nginx',
     'telegraf',
     'influxdb',
+    'strelka.manager',
     'soc',
     'kratos',
     'elasticfleet',
@@ -101,7 +103,8 @@
     'utility',
     'schedule',
     'docker_clean',
-    'stig'
+    'stig',
+    'kafka'
   ],
   'so-managersearch': [
     'salt.master',
@@ -111,6 +114,7 @@
     'nginx',
     'telegraf',
     'influxdb',
+    'strelka.manager',
     'soc',
     'kratos',
     'elastic-fleet-package-registry',
@@ -122,7 +126,8 @@
     'utility',
     'schedule',
     'docker_clean',
-    'stig'
+    'stig',
+    'kafka'
   ],
   'so-searchnode': [
     'ssl',
@@ -131,7 +136,9 @@
     'firewall',
     'schedule',
     'docker_clean',
-    'stig'
+    'stig',
+    'kafka.ca',
+    'kafka.ssl'
   ],
   'so-standalone': [
     'salt.master',
@@ -156,7 +163,8 @@
     'schedule',
     'tcpreplay',
     'docker_clean',
-    'stig'
+    'stig',
+    'kafka'
   ],
   'so-sensor': [
     'ssl',
@@ -187,7 +195,9 @@
     'telegraf',
     'firewall',
     'schedule',
-    'docker_clean'
+    'docker_clean',
+    'kafka',
+    'stig'
   ],
   'so-desktop': [
     'ssl',
```

```diff
@@ -1,6 +1,3 @@
-mine_functions:
-  x509.get_pem_entries: [/etc/pki/ca.crt]
-
 x509_signing_policies:
   filebeat:
     - minions: '*'
@@ -70,3 +67,17 @@ x509_signing_policies:
     - authorityKeyIdentifier: keyid,issuer:always
     - days_valid: 820
     - copypath: /etc/pki/issued_certs/
+  kafka:
+    - minions: '*'
+    - signing_private_key: /etc/pki/ca.key
+    - signing_cert: /etc/pki/ca.crt
+    - C: US
+    - ST: Utah
+    - L: Salt Lake City
+    - basicConstraints: "critical CA:false"
+    - keyUsage: "digitalSignature, keyEncipherment"
+    - subjectKeyIdentifier: hash
+    - authorityKeyIdentifier: keyid,issuer:always
+    - extendedKeyUsage: "serverAuth, clientAuth"
+    - days_valid: 820
+    - copypath: /etc/pki/issued_certs/
```

```diff
@@ -14,6 +14,11 @@ net.core.wmem_default:
   sysctl.present:
     - value: 26214400
 
+# Users are not a fan of console messages
+kernel.printk:
+  sysctl.present:
+    - value: "3 4 1 3"
+
 # Remove variables.txt from /tmp - This is temp
 rmvariablesfile:
   file.absent:
```
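`kernel.printk` takes four integers: the current console log level, the default level for messages logged without an explicit level, the minimum allowed console log level, and the default console log level. The kernel prints a message to the console only when its level is numerically lower than the console log level, so `"3 4 1 3"` keeps only emergency, alert, and critical messages on the console. A short Python sketch that decodes such a value; `parse_printk` and `console_levels` are hypothetical helpers:

```python
from typing import Dict, List

# Field order of the kernel.printk sysctl value.
PRINTK_FIELDS = (
    "console_loglevel",
    "default_message_loglevel",
    "minimum_console_loglevel",
    "default_console_loglevel",
)

# Kernel log levels 0-7, from most to least severe.
LEVEL_NAMES = [
    "KERN_EMERG", "KERN_ALERT", "KERN_CRIT", "KERN_ERR",
    "KERN_WARNING", "KERN_NOTICE", "KERN_INFO", "KERN_DEBUG",
]


def parse_printk(value: str) -> Dict[str, int]:
    """Split a printk value such as "3 4 1 3" into its four named fields."""
    parts = [int(p) for p in value.split()]
    if len(parts) != len(PRINTK_FIELDS):
        raise ValueError(f"expected 4 integers, got {value!r}")
    return dict(zip(PRINTK_FIELDS, parts))


def console_levels(value: str) -> List[str]:
    """Levels still printed to the console: those numerically below console_loglevel."""
    threshold = parse_printk(value)["console_loglevel"]
    return [name for level, name in enumerate(LEVEL_NAMES) if level < threshold]


if __name__ == "__main__":
    print(console_levels("3 4 1 3"))
```

On a running host the current setting can be read from `/proc/sys/kernel/printk`, whose fields are tab-separated; `str.split()` with no argument handles tabs and spaces alike.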

```diff
@@ -1,9 +1,16 @@
-{% import_yaml '/opt/so/saltstack/local/pillar/global/soc_global.sls' as SOC_GLOBAL %}
-{% if SOC_GLOBAL.global.airgap %}
-{% set UPDATE_DIR='/tmp/soagupdate/SecurityOnion' %}
-{% else %}
-{% set UPDATE_DIR='/tmp/sogh/securityonion' %}
-{% endif %}
+# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
+# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
+# https://securityonion.net/license; you may not use this file except in compliance with the
+# Elastic License 2.0.
+
+{% if '2.4' in salt['cp.get_file_str']('/etc/soversion') %}
+
+{% import_yaml '/opt/so/saltstack/local/pillar/global/soc_global.sls' as SOC_GLOBAL %}
+{% if SOC_GLOBAL.global.airgap %}
+{% set UPDATE_DIR='/tmp/soagupdate/SecurityOnion' %}
+{% else %}
+{% set UPDATE_DIR='/tmp/sogh/securityonion' %}
+{% endif %}
 
 remove_common_soup:
   file.absent:
@@ -13,6 +20,8 @@ remove_common_so-firewall:
   file.absent:
     - name: /opt/so/saltstack/default/salt/common/tools/sbin/so-firewall
 
+# This section is used to put the scripts in place in the Salt file system
+# in case a state run tries to overwrite what we do in the next section.
 copy_so-common_common_tools_sbin:
   file.copy:
     - name: /opt/so/saltstack/default/salt/common/tools/sbin/so-common
@@ -41,6 +50,21 @@ copy_so-firewall_manager_tools_sbin:
     - force: True
     - preserve: True
 
+copy_so-yaml_manager_tools_sbin:
+  file.copy:
+    - name: /opt/so/saltstack/default/salt/manager/tools/sbin/so-yaml.py
+    - source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-yaml.py
+    - force: True
+    - preserve: True
+
+copy_so-repo-sync_manager_tools_sbin:
+  file.copy:
+    - name: /opt/so/saltstack/default/salt/manager/tools/sbin/so-repo-sync
+    - source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-repo-sync
+    - preserve: True
+
+# This section is used to put the new script in place so that it can be called during soup.
+# It is faster than calling the states that normally manage them to put them in place.
 copy_so-common_sbin:
   file.copy:
     - name: /usr/sbin/so-common
@@ -68,3 +92,26 @@ copy_so-firewall_sbin:
     - source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-firewall
     - force: True
     - preserve: True
+
+copy_so-yaml_sbin:
+  file.copy:
+    - name: /usr/sbin/so-yaml.py
+    - source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-yaml.py
+    - force: True
+    - preserve: True
+
+copy_so-repo-sync_sbin:
+  file.copy:
+    - name: /usr/sbin/so-repo-sync
+    - source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-repo-sync
+    - force: True
+    - preserve: True
+
+{% else %}
+fix_23_soup_sbin:
+  cmd.run:
+    - name: curl -s -f -o /usr/sbin/soup https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.3/main/salt/common/tools/sbin/soup
+fix_23_soup_salt:
+  cmd.run:
+    - name: curl -s -f -o /opt/so/saltstack/defalt/salt/common/tools/sbin/soup https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.3/main/salt/common/tools/sbin/soup
+{% endif %}
```
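After this change the copy states in this file only render when `/etc/soversion` contains `2.4`; on any other version the template instead downloads the 2.3 `soup` script with `curl`. A Python sketch of that branching, assuming the version file contents are passed in as a string. `choose_update_dir` is a hypothetical helper, and `None` stands in for the non-2.4 branch:

```python
from typing import Optional

AIRGAP_UPDATE_DIR = "/tmp/soagupdate/SecurityOnion"
ONLINE_UPDATE_DIR = "/tmp/sogh/securityonion"


def choose_update_dir(soversion: str, airgap: bool) -> Optional[str]:
    """Return the UPDATE_DIR the template would set, or None for the non-2.4 branch."""
    # Same plain substring test as '2.4' in salt['cp.get_file_str']('/etc/soversion')
    if "2.4" not in soversion:
        return None  # the {% else %} branch fetches the 2.3 soup instead
    return AIRGAP_UPDATE_DIR if airgap else ONLINE_UPDATE_DIR


if __name__ == "__main__":
    print(choose_update_dir("2.4.100\n", airgap=False))
```

Because the check is a substring match rather than a version comparison, any version string containing `2.4` (for example `2.4.100`) takes the first branch.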

@@ -5,8 +5,13 @@

|

|||||||