mirror of

https://github.com/Security-Onion-Solutions/securityonion.git

synced 2025-12-06 17:22:49 +01:00

Compare commits

1 Commits

2.4.80-202

...

sysusers

| Author | SHA1 | Date | |
|---|---|---|---|
| | 50ab63162a | | |

3

.github/.gitleaks.toml

vendored

@@ -536,10 +536,11 @@ secretGroup = 4

|

|||||||

|

|

||||||

[allowlist]

|

[allowlist]

|

||||||

description = "global allow lists"

|

description = "global allow lists"

|

||||||

regexes = ['''219-09-9999''', '''078-05-1120''', '''(9[0-9]{2}|666)-\d{2}-\d{4}''', '''RPM-GPG-KEY.*''', '''.*:.*StrelkaHexDump.*''', '''.*:.*PLACEHOLDER.*''', '''ssl_.*password''']

|

regexes = ['''219-09-9999''', '''078-05-1120''', '''(9[0-9]{2}|666)-\d{2}-\d{4}''', '''RPM-GPG-KEY.*''']

|

||||||

paths = [

|

paths = [

|

||||||

'''gitleaks.toml''',

|

'''gitleaks.toml''',

|

||||||

'''(.*?)(jpg|gif|doc|pdf|bin|svg|socket)$''',

|

'''(.*?)(jpg|gif|doc|pdf|bin|svg|socket)$''',

|

||||||

'''(go.mod|go.sum)$''',

|

'''(go.mod|go.sum)$''',

|

||||||

|

|

||||||

'''salt/nginx/files/enterprise-attack.json'''

|

'''salt/nginx/files/enterprise-attack.json'''

|

||||||

]

|

]

|

||||||

|

|||||||
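To make the effect of the longer `regexes` list in this hunk concrete, here is a minimal Python sketch of an allowlist check. It is an approximation only: gitleaks is written in Go and uses Go's regular expression engine, and the helper name `is_allowlisted` and the unanchored `re.search` matching are assumptions made for illustration, not gitleaks' actual implementation.

```python
import re

# Patterns copied from the longer `regexes` list shown in the hunk above.
ALLOWLIST = [
    r"219-09-9999",
    r"078-05-1120",
    r"(9[0-9]{2}|666)-\d{2}-\d{4}",
    r"RPM-GPG-KEY.*",
    r".*:.*StrelkaHexDump.*",
    r".*:.*PLACEHOLDER.*",
    r"ssl_.*password",
]


def is_allowlisted(text, patterns=ALLOWLIST):
    """Return True if any allowlist pattern is found anywhere in `text`.

    Hypothetical helper: unanchored search is an assumption of this sketch.
    """
    return any(re.search(pattern, text) for pattern in patterns)
```

Under these assumptions, an SSN-shaped string starting with `9xx` or `666` is ignored, while an ordinary-looking one such as `123-45-6789` would still be reported. The three extra patterns additionally ignore lines mentioning `StrelkaHexDump` or `PLACEHOLDER` after a colon, and names of the form `ssl_...password`.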

190

.github/DISCUSSION_TEMPLATE/2-4.yml

vendored

@@ -1,190 +0,0 @@

|

|||||||

body:

|

|

||||||

- type: markdown

|

|

||||||

attributes:

|

|

||||||

value: |

|

|

||||||

⚠️ This category is solely for conversations related to Security Onion 2.4 ⚠️

|

|

||||||

|

|

||||||

If your organization needs more immediate, enterprise grade professional support, with one-on-one virtual meetings and screensharing, contact us via our website: https://securityonion.com/support

|

|

||||||

- type: dropdown

|

|

||||||

attributes:

|

|

||||||

label: Version

|

|

||||||

description: Which version of Security Onion 2.4.x are you asking about?

|

|

||||||

options:

|

|

||||||

-

|

|

||||||

- 2.4 Pre-release (Beta, Release Candidate)

|

|

||||||

- 2.4.10

|

|

||||||

- 2.4.20

|

|

||||||

- 2.4.30

|

|

||||||

- 2.4.40

|

|

||||||

- 2.4.50

|

|

||||||

- 2.4.60

|

|

||||||

- 2.4.70

|

|

||||||

- 2.4.80

|

|

||||||

- 2.4.90

|

|

||||||

- 2.4.100

|

|

||||||

- Other (please provide detail below)

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: dropdown

|

|

||||||

attributes:

|

|

||||||

label: Installation Method

|

|

||||||

description: How did you install Security Onion?

|

|

||||||

options:

|

|

||||||

-

|

|

||||||

- Security Onion ISO image

|

|

||||||

- Network installation on Red Hat derivative like Oracle, Rocky, Alma, etc.

|

|

||||||

- Network installation on Ubuntu

|

|

||||||

- Network installation on Debian

|

|

||||||

- Other (please provide detail below)

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: dropdown

|

|

||||||

attributes:

|

|

||||||

label: Description

|

|

||||||

description: >

|

|

||||||

Is this discussion about installation, configuration, upgrading, or other?

|

|

||||||

options:

|

|

||||||

-

|

|

||||||

- installation

|

|

||||||

- configuration

|

|

||||||

- upgrading

|

|

||||||

- other (please provide detail below)

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: dropdown

|

|

||||||

attributes:

|

|

||||||

label: Installation Type

|

|

||||||

description: >

|

|

||||||

When you installed, did you choose Import, Eval, Standalone, Distributed, or something else?

|

|

||||||

options:

|

|

||||||

-

|

|

||||||

- Import

|

|

||||||

- Eval

|

|

||||||

- Standalone

|

|

||||||

- Distributed

|

|

||||||

- other (please provide detail below)

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: dropdown

|

|

||||||

attributes:

|

|

||||||

label: Location

|

|

||||||

description: >

|

|

||||||

Is this deployment in the cloud, on-prem with Internet access, or airgap?

|

|

||||||

options:

|

|

||||||

-

|

|

||||||

- cloud

|

|

||||||

- on-prem with Internet access

|

|

||||||

- airgap

|

|

||||||

- other (please provide detail below)

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: dropdown

|

|

||||||

attributes:

|

|

||||||

label: Hardware Specs

|

|

||||||

description: >

|

|

||||||

Does your hardware meet or exceed the minimum requirements for your installation type as shown at https://docs.securityonion.net/en/2.4/hardware.html?

|

|

||||||

options:

|

|

||||||

-

|

|

||||||

- Meets minimum requirements

|

|

||||||

- Exceeds minimum requirements

|

|

||||||

- Does not meet minimum requirements

|

|

||||||

- other (please provide detail below)

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: input

|

|

||||||

attributes:

|

|

||||||

label: CPU

|

|

||||||

description: How many CPU cores do you have?

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: input

|

|

||||||

attributes:

|

|

||||||

label: RAM

|

|

||||||

description: How much RAM do you have?

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: input

|

|

||||||

attributes:

|

|

||||||

label: Storage for /

|

|

||||||

description: How much storage do you have for the / partition?

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: input

|

|

||||||

attributes:

|

|

||||||

label: Storage for /nsm

|

|

||||||

description: How much storage do you have for the /nsm partition?

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: dropdown

|

|

||||||

attributes:

|

|

||||||

label: Network Traffic Collection

|

|

||||||

description: >

|

|

||||||

Are you collecting network traffic from a tap or span port?

|

|

||||||

options:

|

|

||||||

-

|

|

||||||

- tap

|

|

||||||

- span port

|

|

||||||

- other (please provide detail below)

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: dropdown

|

|

||||||

attributes:

|

|

||||||

label: Network Traffic Speeds

|

|

||||||

description: >

|

|

||||||

How much network traffic are you monitoring?

|

|

||||||

options:

|

|

||||||

-

|

|

||||||

- Less than 1Gbps

|

|

||||||

- 1Gbps to 10Gbps

|

|

||||||

- more than 10Gbps

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: dropdown

|

|

||||||

attributes:

|

|

||||||

label: Status

|

|

||||||

description: >

|

|

||||||

Does SOC Grid show all services on all nodes as running OK?

|

|

||||||

options:

|

|

||||||

-

|

|

||||||

- Yes, all services on all nodes are running OK

|

|

||||||

- No, one or more services are failed (please provide detail below)

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: dropdown

|

|

||||||

attributes:

|

|

||||||

label: Salt Status

|

|

||||||

description: >

|

|

||||||

Do you get any failures when you run "sudo salt-call state.highstate"?

|

|

||||||

options:

|

|

||||||

-

|

|

||||||

- Yes, there are salt failures (please provide detail below)

|

|

||||||

- No, there are no failures

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: dropdown

|

|

||||||

attributes:

|

|

||||||

label: Logs

|

|

||||||

description: >

|

|

||||||

Are there any additional clues in /opt/so/log/?

|

|

||||||

options:

|

|

||||||

-

|

|

||||||

- Yes, there are additional clues in /opt/so/log/ (please provide detail below)

|

|

||||||

- No, there are no additional clues

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: textarea

|

|

||||||

attributes:

|

|

||||||

label: Detail

|

|

||||||

description: Please read our discussion guidelines at https://github.com/Security-Onion-Solutions/securityonion/discussions/1720 and then provide detailed information to help us help you.

|

|

||||||

placeholder: |-

|

|

||||||

STOP! Before typing, please read our discussion guidelines at https://github.com/Security-Onion-Solutions/securityonion/discussions/1720 in their entirety!

|

|

||||||

|

|

||||||

If your organization needs more immediate, enterprise grade professional support, with one-on-one virtual meetings and screensharing, contact us via our website: https://securityonion.com/support

|

|

||||||

validations:

|

|

||||||

required: true

|

|

||||||

- type: checkboxes

|

|

||||||

attributes:

|

|

||||||

label: Guidelines

|

|

||||||

options:

|

|

||||||

- label: I have read the discussion guidelines at https://github.com/Security-Onion-Solutions/securityonion/discussions/1720 and assert that I have followed the guidelines.

|

|

||||||

required: true

|

|

||||||

33

.github/workflows/close-threads.yml

vendored

@@ -1,33 +0,0 @@

|

|||||||

name: 'Close Threads'

|

|

||||||

|

|

||||||

on:

|

|

||||||

schedule:

|

|

||||||

- cron: '50 1 * * *'

|

|

||||||

workflow_dispatch:

|

|

||||||

|

|

||||||

permissions:

|

|

||||||

issues: write

|

|

||||||

pull-requests: write

|

|

||||||

discussions: write

|

|

||||||

|

|

||||||

concurrency:

|

|

||||||

group: lock-threads

|

|

||||||

|

|

||||||

jobs:

|

|

||||||

close-threads:

|

|

||||||

if: github.repository_owner == 'security-onion-solutions'

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

permissions:

|

|

||||||

issues: write

|

|

||||||

pull-requests: write

|

|

||||||

steps:

|

|

||||||

- uses: actions/stale@v5

|

|

||||||

with:

|

|

||||||

days-before-issue-stale: -1

|

|

||||||

days-before-issue-close: 60

|

|

||||||

stale-issue-message: "This issue is stale because it has been inactive for an extended period. Stale issues convey that the issue, while important to someone, is not critical enough for the author, or other community members to work on, sponsor, or otherwise shepherd the issue through to a resolution."

|

|

||||||

close-issue-message: "This issue was closed because it has been stale for an extended period. It will be automatically locked in 30 days, after which no further commenting will be available."

|

|

||||||

days-before-pr-stale: 45

|

|

||||||

days-before-pr-close: 60

|

|

||||||

stale-pr-message: "This PR is stale because it has been inactive for an extended period. The longer a PR remains stale the more out of date with the main branch it becomes."

|

|

||||||

close-pr-message: "This PR was closed because it has been stale for an extended period. It will be automatically locked in 30 days. If there is still a commitment to finishing this PR re-open it before it is locked."

|

|

||||||

26

.github/workflows/lock-threads.yml

vendored

@@ -1,26 +0,0 @@

|

|||||||

name: 'Lock Threads'

|

|

||||||

|

|

||||||

on:

|

|

||||||

schedule:

|

|

||||||

- cron: '50 2 * * *'

|

|

||||||

workflow_dispatch:

|

|

||||||

|

|

||||||

permissions:

|

|

||||||

issues: write

|

|

||||||

pull-requests: write

|

|

||||||

discussions: write

|

|

||||||

|

|

||||||

concurrency:

|

|

||||||

group: lock-threads

|

|

||||||

|

|

||||||

jobs:

|

|

||||||

lock-threads:

|

|

||||||

if: github.repository_owner == 'security-onion-solutions'

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

steps:

|

|

||||||

- uses: jertel/lock-threads@main

|

|

||||||

with:

|

|

||||||

include-discussion-currently-open: true

|

|

||||||

discussion-inactive-days: 90

|

|

||||||

issue-inactive-days: 30

|

|

||||||

pr-inactive-days: 30

|

|

||||||

@@ -1,17 +1,17 @@

|

|||||||

### 2.4.80-20240624 ISO image released on 2024/06/25

|

### 2.4.30-20231228 ISO image released on 2024/01/02

|

||||||

|

|

||||||

|

|

||||||

### Download and Verify

|

### Download and Verify

|

||||||

|

|

||||||

2.4.80-20240624 ISO image:

|

2.4.30-20231228 ISO image:

|

||||||

https://download.securityonion.net/file/securityonion/securityonion-2.4.80-20240624.iso

|

https://download.securityonion.net/file/securityonion/securityonion-2.4.30-20231228.iso

|

||||||

|

|

||||||

MD5: 139F9762E926F9CB3C4A9528A3752C31

|

MD5: DBD47645CD6FA8358C51D8753046FB54

|

||||||

SHA1: BC6CA2C5F4ABC1A04E83A5CF8FFA6A53B1583CC9

|

SHA1: 2494091065434ACB028F71444A5D16E8F8A11EDF

|

||||||

SHA256: 70E90845C84FFA30AD6CF21504634F57C273E7996CA72F7250428DDBAAC5B1BD

|

SHA256: 3345AE1DC58AC7F29D82E60D9A36CDF8DE19B7DFF999D8C4F89C7BD36AEE7F1D

|

||||||

|

|

||||||

Signature for ISO image:

|

Signature for ISO image:

|

||||||

https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.80-20240624.iso.sig

|

https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.30-20231228.iso.sig

|

||||||

|

|

||||||

Signing key:

|

Signing key:

|

||||||

https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.4/main/KEYS

|

https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.4/main/KEYS

|

||||||

@@ -25,29 +25,27 @@ wget https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.

|

|||||||

|

|

||||||

Download the signature file for the ISO:

|

Download the signature file for the ISO:

|

||||||

```

|

```

|

||||||

wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.80-20240624.iso.sig

|

wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.30-20231228.iso.sig

|

||||||

```

|

```

|

||||||

|

|

||||||

Download the ISO image:

|

Download the ISO image:

|

||||||

```

|

```

|

||||||

wget https://download.securityonion.net/file/securityonion/securityonion-2.4.80-20240624.iso

|

wget https://download.securityonion.net/file/securityonion/securityonion-2.4.30-20231228.iso

|

||||||

```

|

```

|

||||||

|

|

||||||

Verify the downloaded ISO image using the signature file:

|

Verify the downloaded ISO image using the signature file:

|

||||||

```

|

```

|

||||||

gpg --verify securityonion-2.4.80-20240624.iso.sig securityonion-2.4.80-20240624.iso

|

gpg --verify securityonion-2.4.30-20231228.iso.sig securityonion-2.4.30-20231228.iso

|

||||||

```

|

```

|

||||||

|

|

||||||

The output should show "Good signature" and the Primary key fingerprint should match what's shown below:

|

The output should show "Good signature" and the Primary key fingerprint should match what's shown below:

|

||||||

```

|

```

|

||||||

gpg: Signature made Mon 24 Jun 2024 02:42:03 PM EDT using RSA key ID FE507013

|

gpg: Signature made Thu 28 Dec 2023 10:08:31 AM EST using RSA key ID FE507013

|

||||||

gpg: Good signature from "Security Onion Solutions, LLC <info@securityonionsolutions.com>"

|

gpg: Good signature from "Security Onion Solutions, LLC <info@securityonionsolutions.com>"

|

||||||

gpg: WARNING: This key is not certified with a trusted signature!

|

gpg: WARNING: This key is not certified with a trusted signature!

|

||||||

gpg: There is no indication that the signature belongs to the owner.

|

gpg: There is no indication that the signature belongs to the owner.

|

||||||

Primary key fingerprint: C804 A93D 36BE 0C73 3EA1 9644 7C10 60B7 FE50 7013

|

Primary key fingerprint: C804 A93D 36BE 0C73 3EA1 9644 7C10 60B7 FE50 7013

|

||||||

```

|

```

|

||||||

|

|

||||||

If it fails to verify, try downloading again. If it still fails to verify, try downloading from another computer or another network.

|

|

||||||

|

|

||||||

Once you've verified the ISO image, you're ready to proceed to our Installation guide:

|

Once you've verified the ISO image, you're ready to proceed to our Installation guide:

|

||||||

https://docs.securityonion.net/en/2.4/installation.html

|

https://docs.securityonion.net/en/2.4/installation.html

|

||||||

|

|||||||
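The file above publishes MD5, SHA1, and SHA256 values alongside the GPG signature. As a complement to `gpg --verify`, the following Python sketch shows one way to compare a downloaded file against a published SHA256 value. The function names are hypothetical and chosen for this example. A matching checksum only indicates that the download is intact; the signature check described above is what ties the image to the signing key.

```python
import hashlib
import io


def sha256_of_stream(stream, chunk_size=1024 * 1024):
    """Return the uppercase hex SHA256 digest of a binary stream, read in chunks."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest().upper()


def sha256_of_file(path):
    """Hash a file on disk without loading it into memory all at once."""
    with open(path, "rb") as handle:
        return sha256_of_stream(handle)


def sha256_of_bytes(data):
    """Convenience wrapper for in-memory data (useful for quick checks)."""
    return sha256_of_stream(io.BytesIO(data))


def matches_published(actual_hex, published_hex):
    """Compare two hex digests, ignoring case and surrounding whitespace."""
    return actual_hex.strip().upper() == published_hex.strip().upper()
```

A typical call would be `matches_published(sha256_of_file("securityonion-2.4.80-20240624.iso"), published)`, where `published` is the SHA256 string listed for that ISO.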

13

README.md

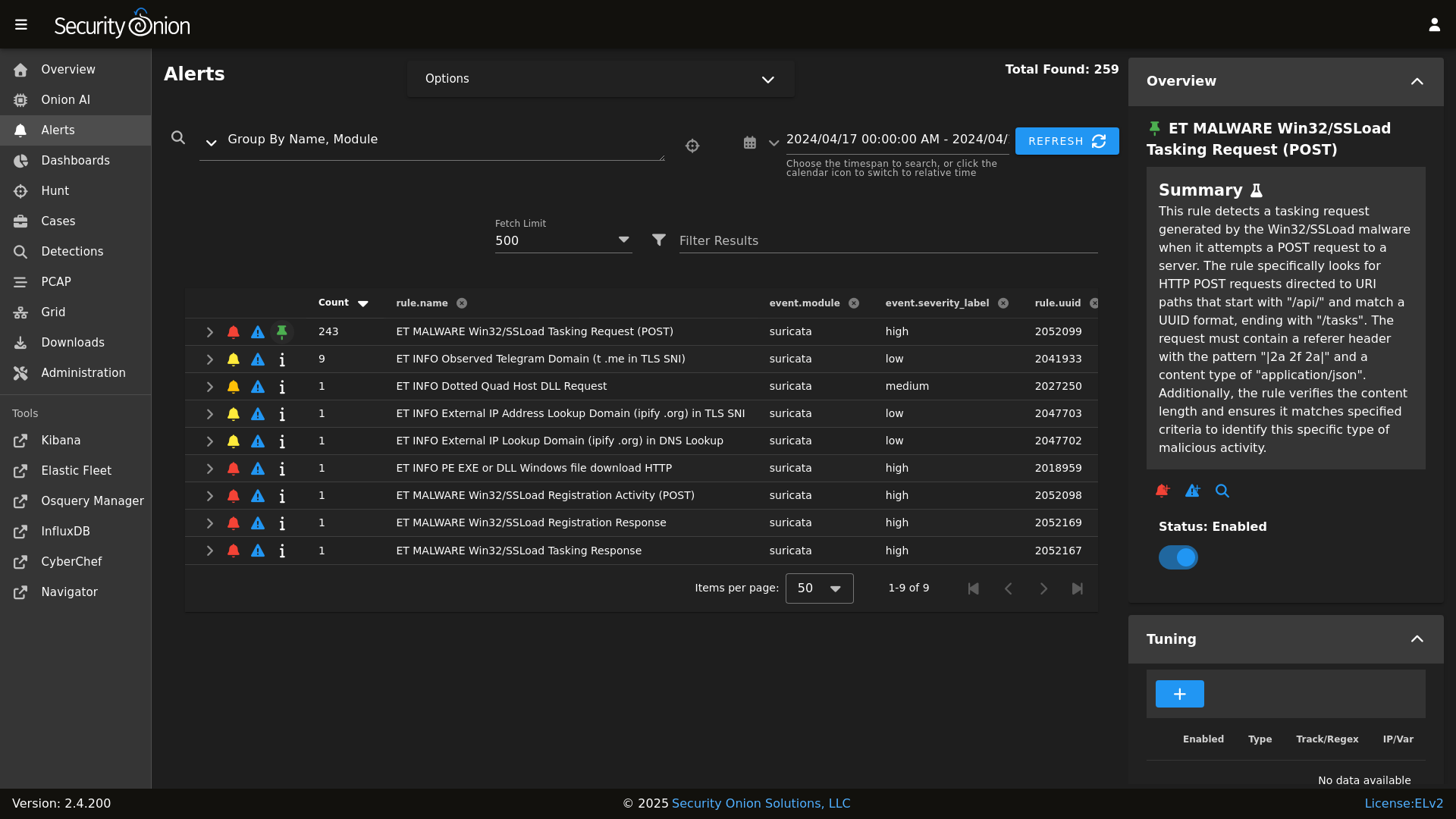

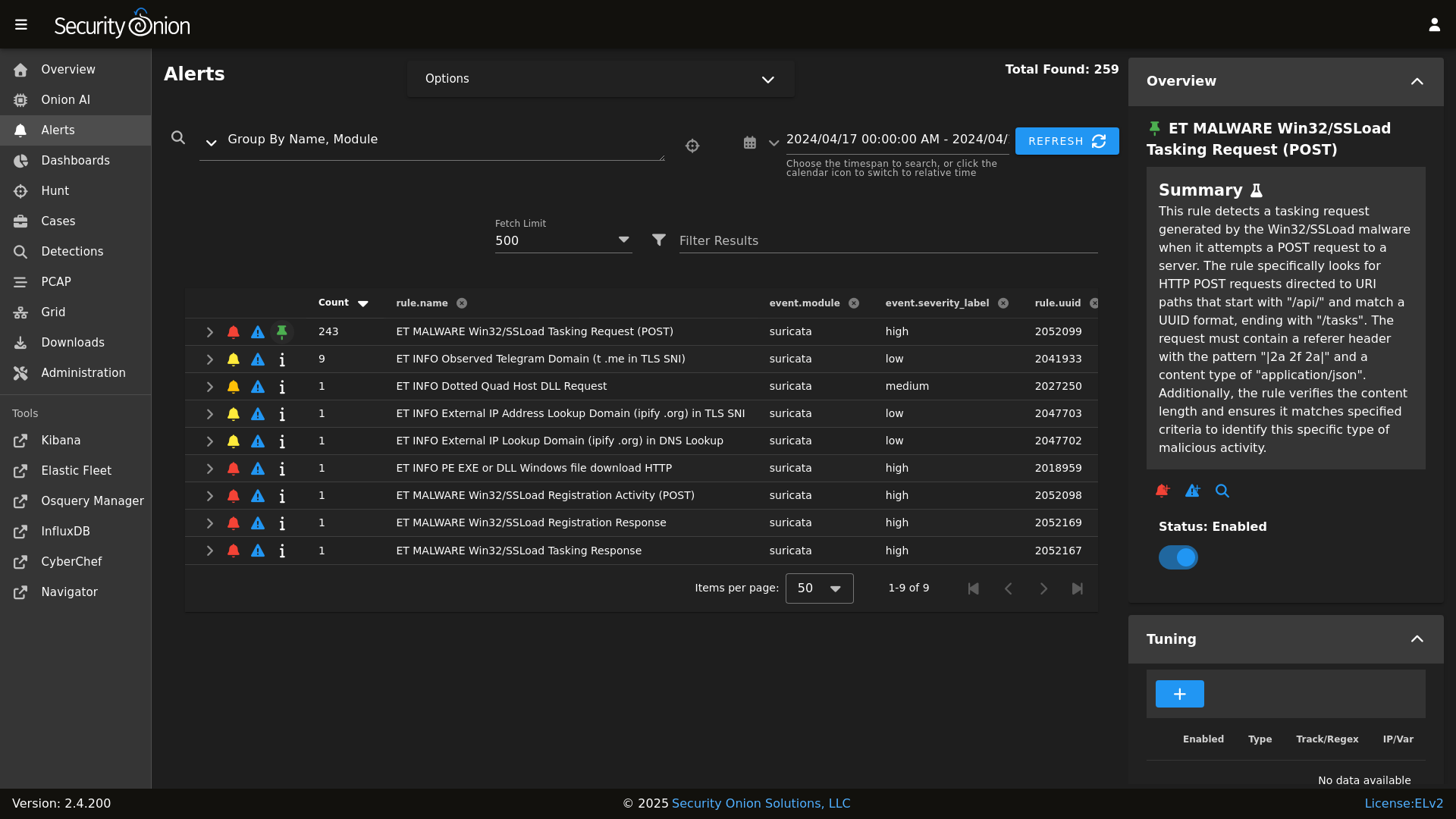

@@ -8,22 +8,19 @@ Alerts

|

|||||||

|

|

||||||

|

|

||||||

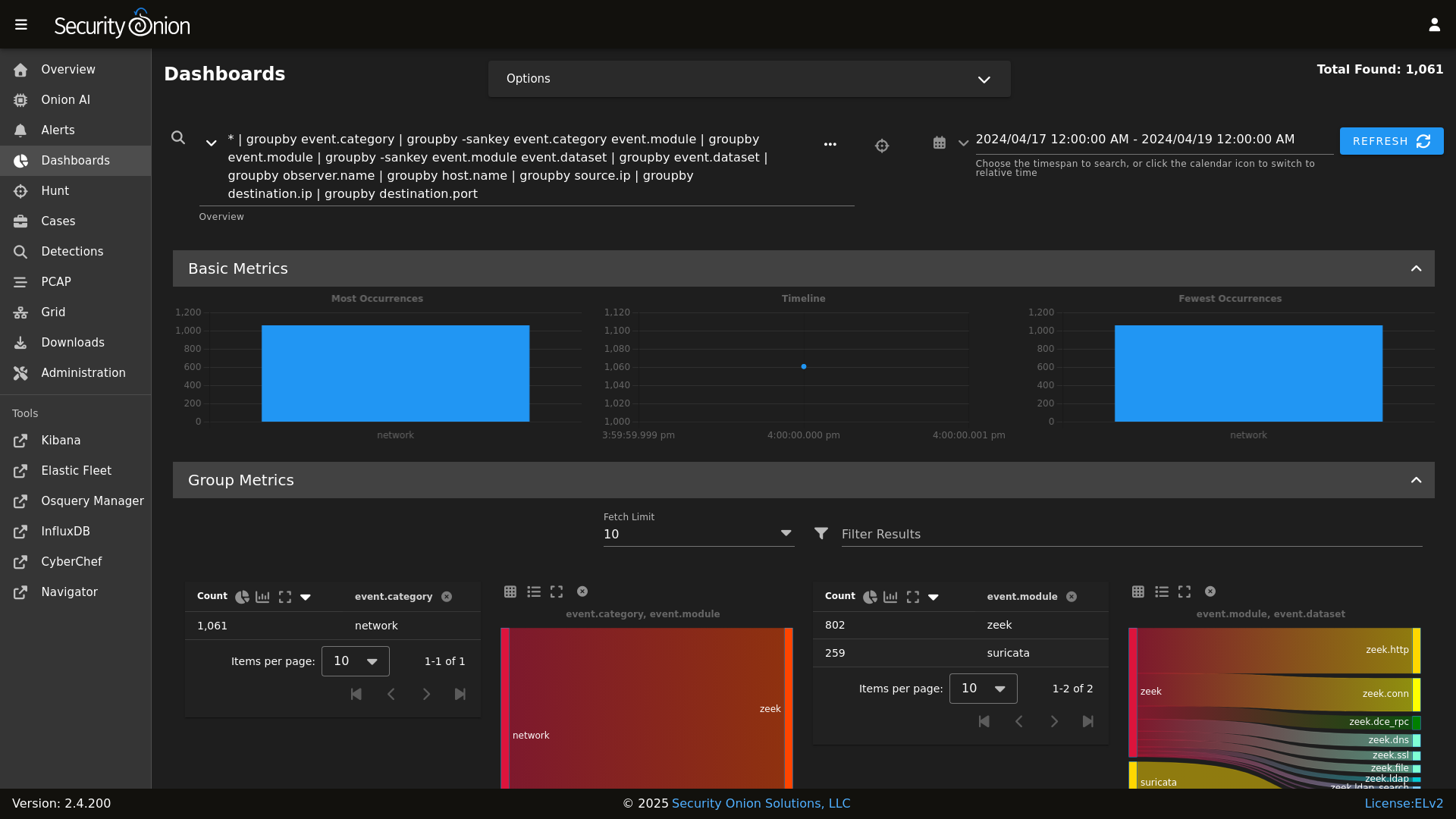

Dashboards

|

Dashboards

|

||||||

|

|

||||||

|

|

||||||

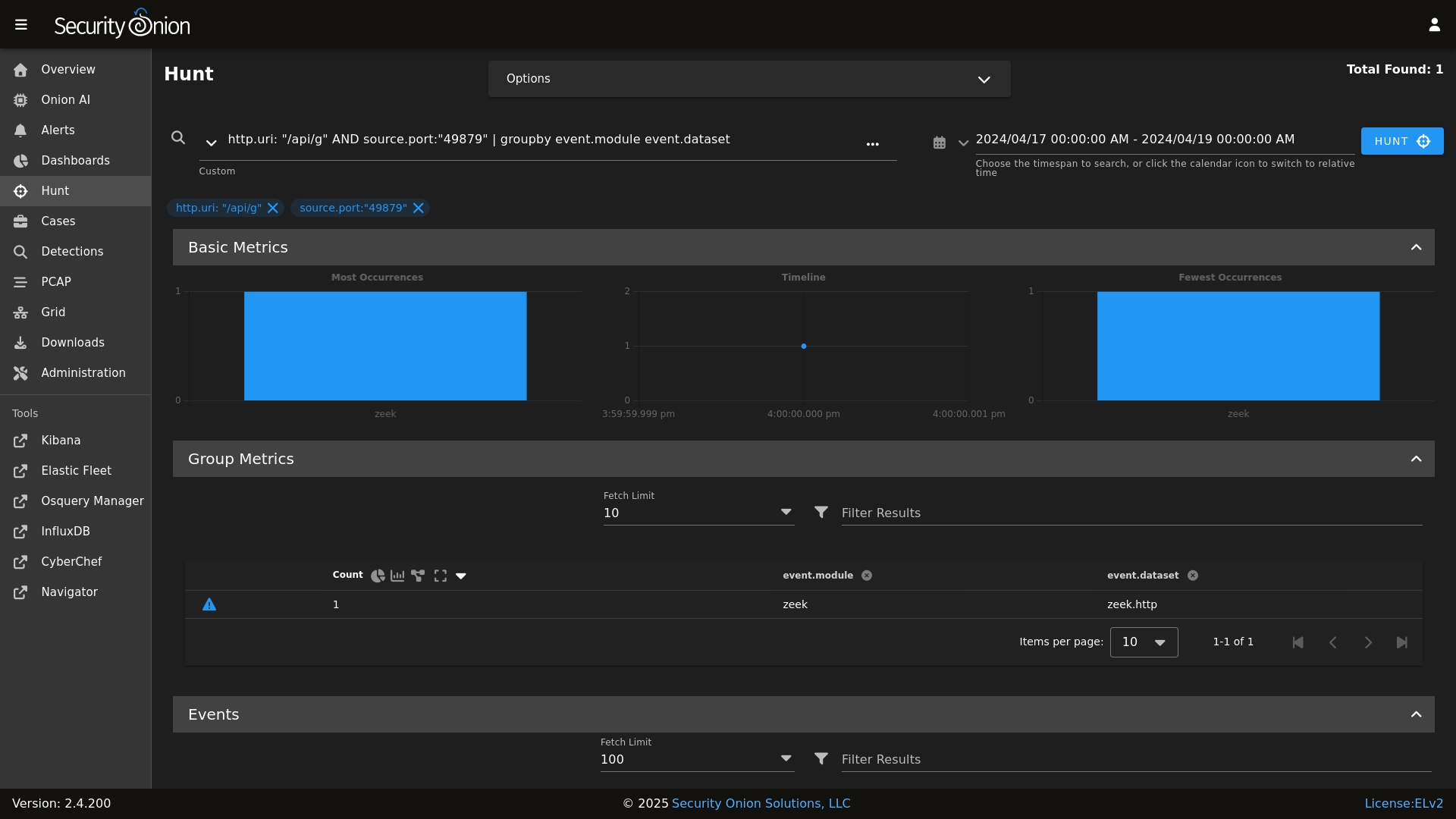

Hunt

|

Hunt

|

||||||

|

|

||||||

|

|

||||||

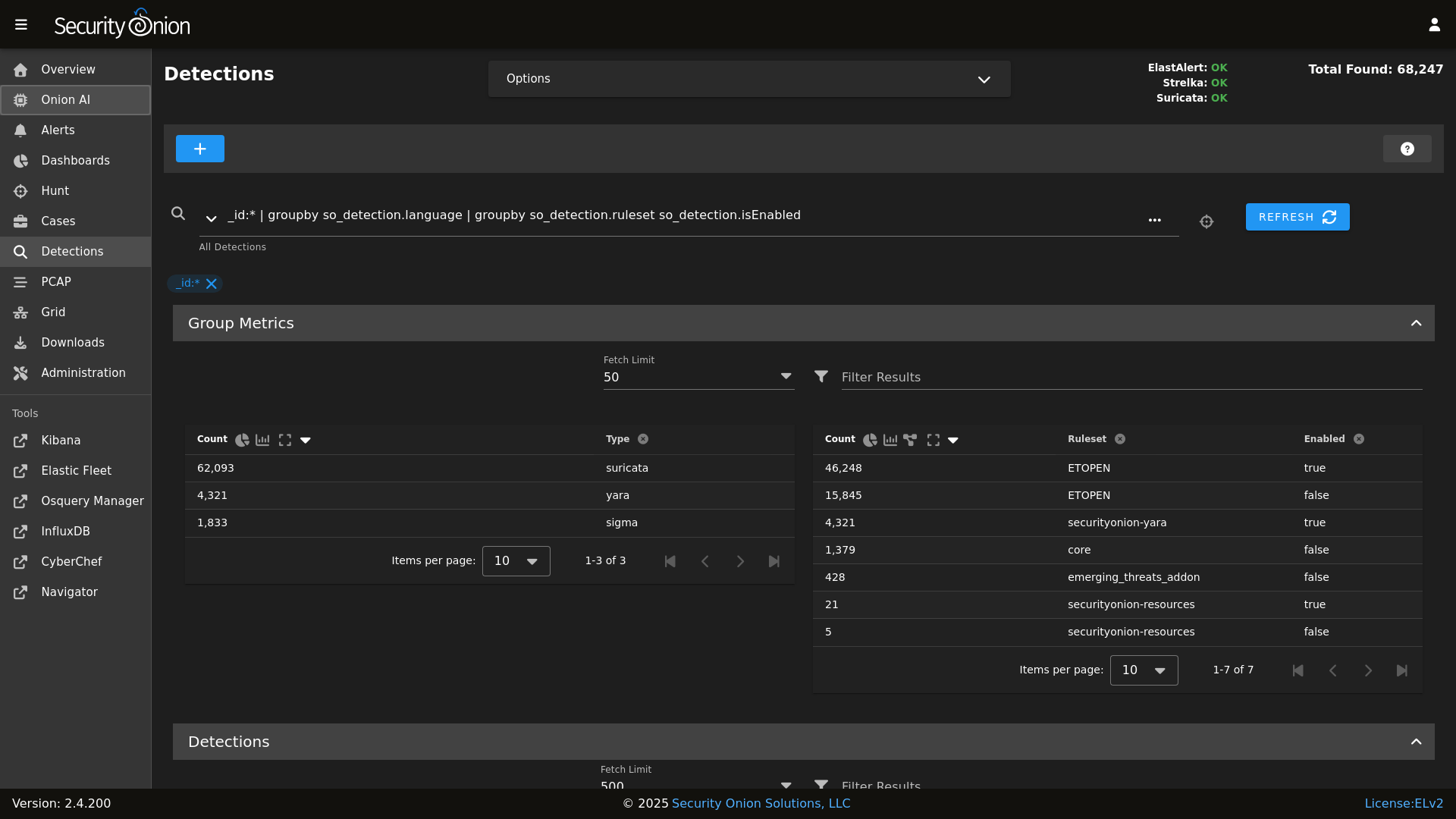

Detections

|

|

||||||

|

|

||||||

|

|

||||||

PCAP

|

PCAP

|

||||||

|

|

||||||

|

|

||||||

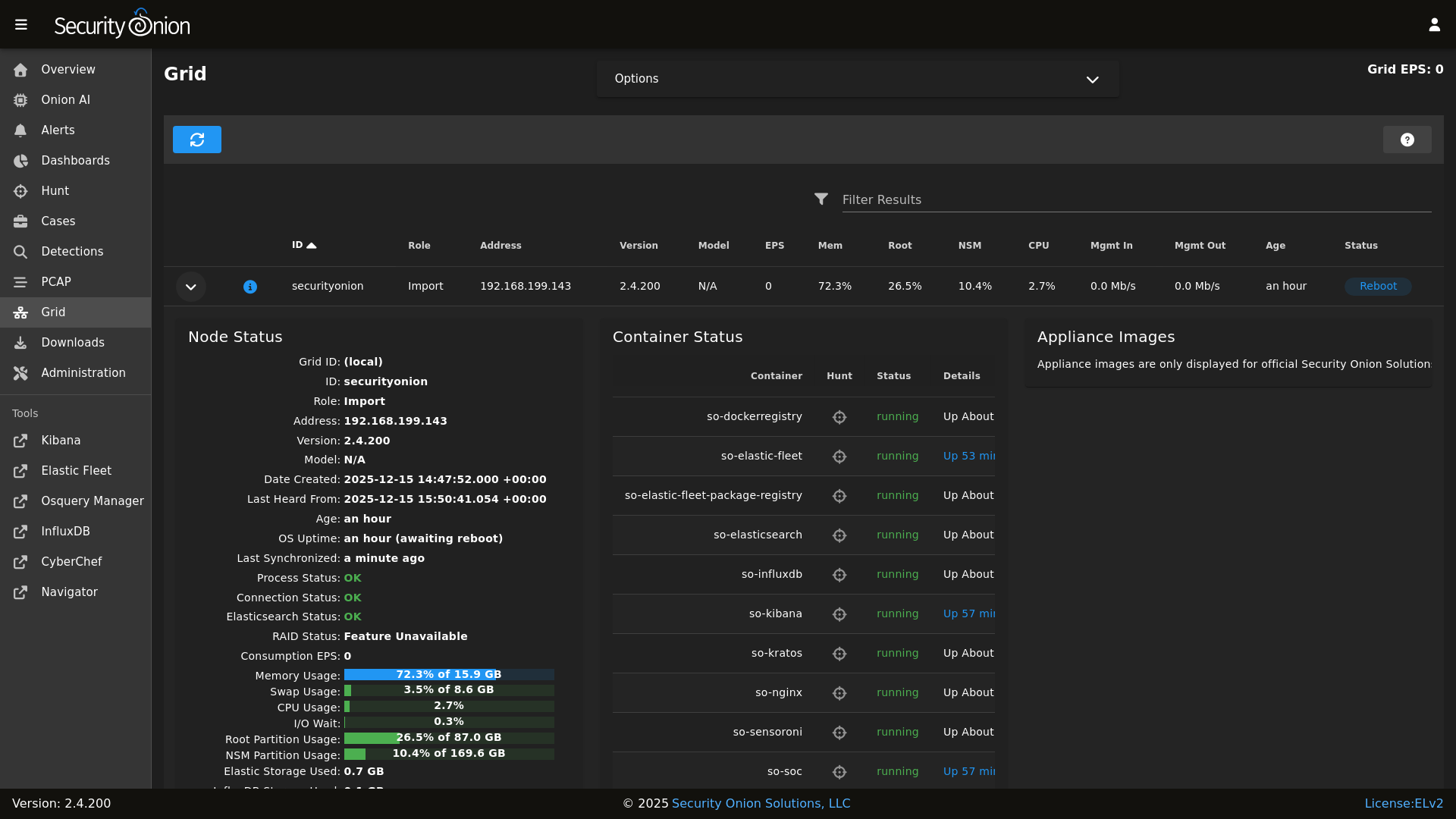

Grid

|

Grid

|

||||||

|

|

||||||

|

|

||||||

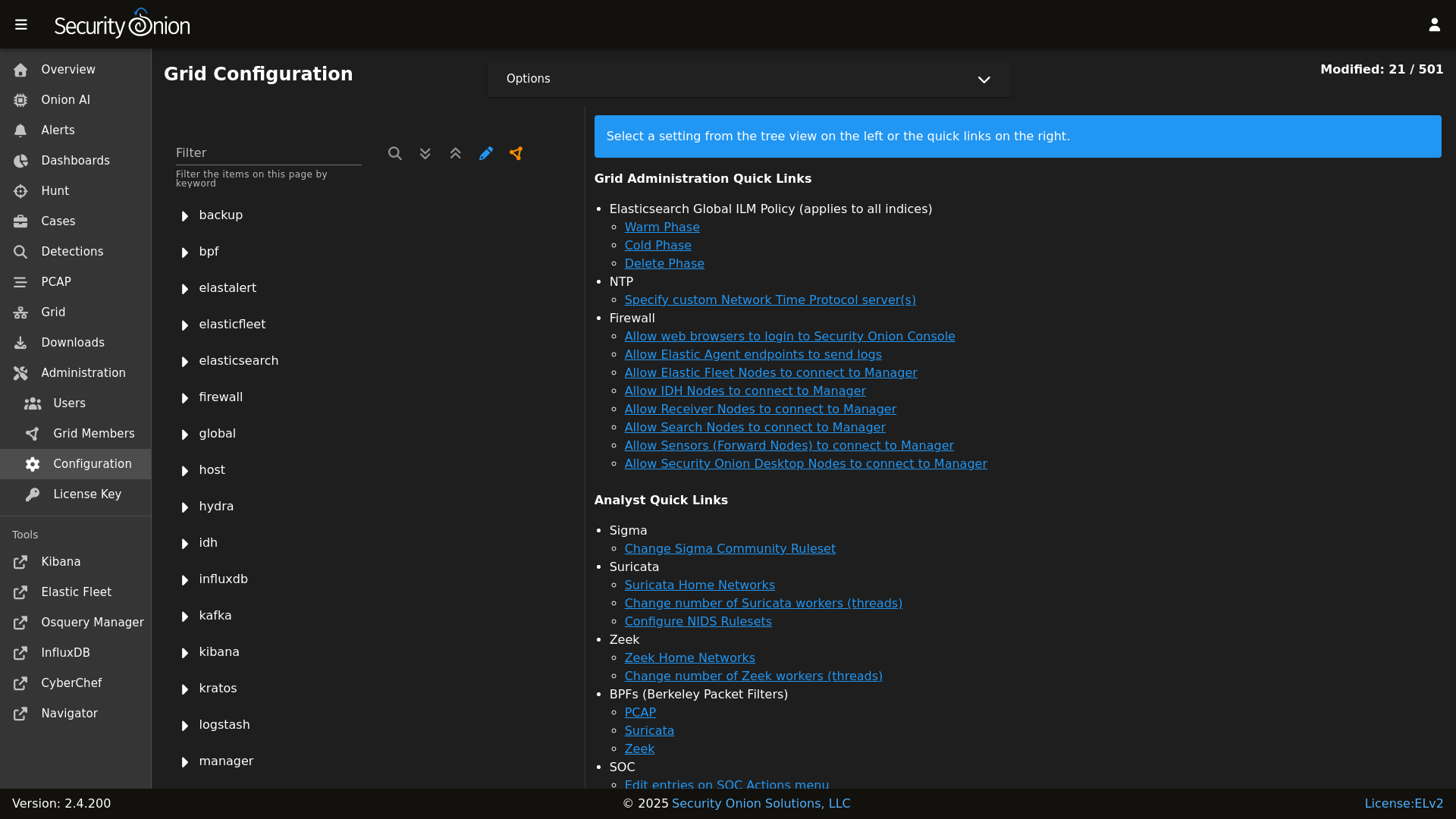

Config

|

Config

|

||||||

|

|

||||||

|

|

||||||

### Release Notes

|

### Release Notes

|

||||||

|

|

||||||

|

|||||||

@@ -41,8 +41,7 @@ file_roots:

|

|||||||

base:

|

base:

|

||||||

- /opt/so/saltstack/local/salt

|

- /opt/so/saltstack/local/salt

|

||||||

- /opt/so/saltstack/default/salt

|

- /opt/so/saltstack/default/salt

|

||||||

- /nsm/elastic-fleet/artifacts

|

|

||||||

- /opt/so/rules/nids

|

|

||||||

|

|

||||||

# The master_roots setting configures a master-only copy of the file_roots dictionary,

|

# The master_roots setting configures a master-only copy of the file_roots dictionary,

|

||||||

# used by the state compiler.

|

# used by the state compiler.

|

||||||

|

|||||||

@@ -1,2 +0,0 @@

|

|||||||

kafka:

|

|

||||||

nodes:

|

|

||||||

@@ -16,6 +16,7 @@ base:

|

|||||||

- sensoroni.adv_sensoroni

|

- sensoroni.adv_sensoroni

|

||||||

- telegraf.soc_telegraf

|

- telegraf.soc_telegraf

|

||||||

- telegraf.adv_telegraf

|

- telegraf.adv_telegraf

|

||||||

|

- users

|

||||||

|

|

||||||

'* and not *_desktop':

|

'* and not *_desktop':

|

||||||

- firewall.soc_firewall

|

- firewall.soc_firewall

|

||||||

@@ -43,6 +44,8 @@ base:

|

|||||||

- soc.soc_soc

|

- soc.soc_soc

|

||||||

- soc.adv_soc

|

- soc.adv_soc

|

||||||

- soc.license

|

- soc.license

|

||||||

|

- soctopus.soc_soctopus

|

||||||

|

- soctopus.adv_soctopus

|

||||||

- kibana.soc_kibana

|

- kibana.soc_kibana

|

||||||

- kibana.adv_kibana

|

- kibana.adv_kibana

|

||||||

- kratos.soc_kratos

|

- kratos.soc_kratos

|

||||||

@@ -59,12 +62,10 @@ base:

|

|||||||

- elastalert.adv_elastalert

|

- elastalert.adv_elastalert

|

||||||

- backup.soc_backup

|

- backup.soc_backup

|

||||||

- backup.adv_backup

|

- backup.adv_backup

|

||||||

|

- soctopus.soc_soctopus

|

||||||

|

- soctopus.adv_soctopus

|

||||||

- minions.{{ grains.id }}

|

- minions.{{ grains.id }}

|

||||||

- minions.adv_{{ grains.id }}

|

- minions.adv_{{ grains.id }}

|

||||||

- kafka.nodes

|

|

||||||

- kafka.soc_kafka

|

|

||||||

- kafka.adv_kafka

|

|

||||||

- stig.soc_stig

|

|

||||||

|

|

||||||

'*_sensor':

|

'*_sensor':

|

||||||

- healthcheck.sensor

|

- healthcheck.sensor

|

||||||

@@ -80,8 +81,6 @@ base:

|

|||||||

- suricata.adv_suricata

|

- suricata.adv_suricata

|

||||||

- minions.{{ grains.id }}

|

- minions.{{ grains.id }}

|

||||||

- minions.adv_{{ grains.id }}

|

- minions.adv_{{ grains.id }}

|

||||||

- stig.soc_stig

|

|

||||||

- soc.license

|

|

||||||

|

|

||||||

'*_eval':

|

'*_eval':

|

||||||

- secrets

|

- secrets

|

||||||

@@ -107,6 +106,8 @@ base:

|

|||||||

- soc.soc_soc

|

- soc.soc_soc

|

||||||

- soc.adv_soc

|

- soc.adv_soc

|

||||||

- soc.license

|

- soc.license

|

||||||

|

- soctopus.soc_soctopus

|

||||||

|

- soctopus.adv_soctopus

|

||||||

- kibana.soc_kibana

|

- kibana.soc_kibana

|

||||||

- kibana.adv_kibana

|

- kibana.adv_kibana

|

||||||

- strelka.soc_strelka

|

- strelka.soc_strelka

|

||||||

@@ -162,6 +163,8 @@ base:

|

|||||||

- soc.soc_soc

|

- soc.soc_soc

|

||||||

- soc.adv_soc

|

- soc.adv_soc

|

||||||

- soc.license

|

- soc.license

|

||||||

|

- soctopus.soc_soctopus

|

||||||

|

- soctopus.adv_soctopus

|

||||||

- kibana.soc_kibana

|

- kibana.soc_kibana

|

||||||

- kibana.adv_kibana

|

- kibana.adv_kibana

|

||||||

- strelka.soc_strelka

|

- strelka.soc_strelka

|

||||||

@@ -178,10 +181,6 @@ base:

|

|||||||

- suricata.adv_suricata

|

- suricata.adv_suricata

|

||||||

- minions.{{ grains.id }}

|

- minions.{{ grains.id }}

|

||||||

- minions.adv_{{ grains.id }}

|

- minions.adv_{{ grains.id }}

|

||||||

- stig.soc_stig

|

|

||||||

- kafka.nodes

|

|

||||||

- kafka.soc_kafka

|

|

||||||

- kafka.adv_kafka

|

|

||||||

|

|

||||||

'*_heavynode':

|

'*_heavynode':

|

||||||

- elasticsearch.auth

|

- elasticsearch.auth

|

||||||

@@ -224,9 +223,6 @@ base:

|

|||||||

- redis.adv_redis

|

- redis.adv_redis

|

||||||

- minions.{{ grains.id }}

|

- minions.{{ grains.id }}

|

||||||

- minions.adv_{{ grains.id }}

|

- minions.adv_{{ grains.id }}

|

||||||

- stig.soc_stig

|

|

||||||

- soc.license

|

|

||||||

- kafka.nodes

|

|

||||||

|

|

||||||

'*_receiver':

|

'*_receiver':

|

||||||

- logstash.nodes

|

- logstash.nodes

|

||||||

@@ -239,10 +235,6 @@ base:

|

|||||||

- redis.adv_redis

|

- redis.adv_redis

|

||||||

- minions.{{ grains.id }}

|

- minions.{{ grains.id }}

|

||||||

- minions.adv_{{ grains.id }}

|

- minions.adv_{{ grains.id }}

|

||||||

- kafka.nodes

|

|

||||||

- kafka.soc_kafka

|

|

||||||

- kafka.adv_kafka

|

|

||||||

- soc.license

|

|

||||||

|

|

||||||

'*_import':

|

'*_import':

|

||||||

- secrets

|

- secrets

|

||||||

@@ -265,6 +257,8 @@ base:

|

|||||||

- soc.soc_soc

|

- soc.soc_soc

|

||||||

- soc.adv_soc

|

- soc.adv_soc

|

||||||

- soc.license

|

- soc.license

|

||||||

|

- soctopus.soc_soctopus

|

||||||

|

- soctopus.adv_soctopus

|

||||||

- kibana.soc_kibana

|

- kibana.soc_kibana

|

||||||

- kibana.adv_kibana

|

- kibana.adv_kibana

|

||||||

- backup.soc_backup

|

- backup.soc_backup

|

||||||

|

|||||||

2

pillar/users/init.sls

Normal file

@@ -0,0 +1,2 @@

|

|||||||

|

# users pillar goes in /opt/so/saltstack/local/pillar/users/init.sls

|

||||||

|

# the users directory may need to be created under /opt/so/saltstack/local/pillar

|

||||||

18

pillar/users/pillar.example

Normal file

@@ -0,0 +1,18 @@

|

|||||||

|

users:

|

||||||

|

sclapton:

|

||||||

|

# required fields

|

||||||

|

status: present

|

||||||

|

# node_access determines which node types the user can access.

|

||||||

|

# this can either be by grains.role or by final part of the minion id after the _

|

||||||

|

node_access:

|

||||||

|

- standalone

|

||||||

|

- searchnode

|

||||||

|

# optional fields

|

||||||

|

fullname: Stevie Claptoon

|

||||||

|

uid: 1001

|

||||||

|

gid: 1001

|

||||||

|

homephone: does not have a phone

|

||||||

|

groups:

|

||||||

|

- mygroup1

|

||||||

|

- mygroup2

|

||||||

|

- wheel # give sudo access

|

||||||

20

pillar/users/pillar.usage

Normal file

@@ -0,0 +1,20 @@

|

|||||||

|

users:

|

||||||

|

sclapton:

|

||||||

|

# required fields

|

||||||

|

status: <present | absent>

|

||||||

|

# node_access determines which node types the user can access.

|

||||||

|

# this can either be by grains.role or by final part of the minion id after the _

|

||||||

|

node_access:

|

||||||

|

- standalone

|

||||||

|

- searchnode

|

||||||

|

# optional fields

|

||||||

|

fullname: <string>

|

||||||

|

uid: <integer>

|

||||||

|

gid: <integer>

|

||||||

|

roomnumber: <string>

|

||||||

|

workphone: <string>

|

||||||

|

homephone: <string>

|

||||||

|

groups:

|

||||||

|

- <string>

|

||||||

|

- <string>

|

||||||

|

- wheel # give sudo access

|

||||||
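The comments in the two pillar files say that `node_access` can match either `grains.role` or the final part of the minion ID after the `_`. The Salt state that consumes this pillar is not shown in this part of the diff, so the following Python sketch is only a guess at the matching rule. The function names, the stripping of an `so-` prefix from the role, and the treatment of `status: absent` are all assumptions made for illustration.

```python
def node_type_candidates(role, minion_id):
    """Collect the strings a node_access entry might be compared against."""
    candidates = {role}
    if role.startswith("so-"):
        # Assumption: a role such as 'so-standalone' also matches 'standalone'.
        candidates.add(role[len("so-"):])
    if "_" in minion_id:
        # Final part of the minion ID after the last underscore.
        candidates.add(minion_id.rsplit("_", 1)[1])
    return candidates


def user_should_exist_on_node(user, role, minion_id):
    """Return True if a pillar user entry applies to the given node.

    `user` is one entry from the `users` pillar, e.g. the `sclapton` mapping.
    """
    if user.get("status") != "present":
        return False
    allowed = set(user.get("node_access", []))
    return bool(allowed & node_type_candidates(role, minion_id))
```

With the example pillar above (`node_access` of `standalone` and `searchnode`), this sketch would select a node whose role is `so-standalone` or whose minion ID ends in `_searchnode`, and skip a sensor.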

12

pyci.sh

@@ -15,16 +15,12 @@ TARGET_DIR=${1:-.}

|

|||||||

|

|

||||||

PATH=$PATH:/usr/local/bin

|

PATH=$PATH:/usr/local/bin

|

||||||

|

|

||||||

if [ ! -d .venv ]; then

|

if ! which pytest &> /dev/null || ! which flake8 &> /dev/null ; then

|

||||||

python -m venv .venv

|

echo "Missing dependencies. Consider running the following command:"

|

||||||

fi

|

echo " python -m pip install flake8 pytest pytest-cov"

|

||||||

|

|

||||||

source .venv/bin/activate

|

|

||||||

|

|

||||||

if ! pip install flake8 pytest pytest-cov pyyaml; then

|

|

||||||

echo "Unable to install dependencies."

|

|

||||||

exit 1

|

exit 1

|

||||||

fi

|

fi

|

||||||

|

|

||||||

|

pip install pytest pytest-cov

|

||||||

flake8 "$TARGET_DIR" "--config=${HOME_DIR}/pytest.ini"

|

flake8 "$TARGET_DIR" "--config=${HOME_DIR}/pytest.ini"

|

||||||

python3 -m pytest "--cov-config=${HOME_DIR}/pytest.ini" "--cov=$TARGET_DIR" --doctest-modules --cov-report=term --cov-fail-under=100 "$TARGET_DIR"

|

python3 -m pytest "--cov-config=${HOME_DIR}/pytest.ini" "--cov=$TARGET_DIR" --doctest-modules --cov-report=term --cov-fail-under=100 "$TARGET_DIR"

|

||||||

@@ -34,6 +34,7 @@

|

|||||||

'suricata',

|

'suricata',

|

||||||

'utility',

|

'utility',

|

||||||

'schedule',

|

'schedule',

|

||||||

|

'soctopus',

|

||||||

'tcpreplay',

|

'tcpreplay',

|

||||||

'docker_clean'

|

'docker_clean'

|

||||||

],

|

],

|

||||||

@@ -65,7 +66,6 @@

|

|||||||

'registry',

|

'registry',

|

||||||

'manager',

|

'manager',

|

||||||

'nginx',

|

'nginx',

|

||||||

'strelka.manager',

|

|

||||||

'soc',

|

'soc',

|

||||||

'kratos',

|

'kratos',

|

||||||

'influxdb',

|

'influxdb',

|

||||||

@@ -92,7 +92,6 @@

|

|||||||

'nginx',

|

'nginx',

|

||||||

'telegraf',

|

'telegraf',

|

||||||

'influxdb',

|

'influxdb',

|

||||||

'strelka.manager',

|

|

||||||

'soc',

|

'soc',

|

||||||

'kratos',

|

'kratos',

|

||||||

'elasticfleet',

|

'elasticfleet',

|

||||||

@@ -102,9 +101,8 @@

|

|||||||

'suricata.manager',

|

'suricata.manager',

|

||||||

'utility',

|

'utility',

|

||||||

'schedule',

|

'schedule',

|

||||||

'docker_clean',

|

'soctopus',

|

||||||

'stig',

|

'docker_clean'

|

||||||

'kafka'

|

|

||||||

],

|

],

|

||||||

'so-managersearch': [

|

'so-managersearch': [

|

||||||

'salt.master',

|

'salt.master',

|

||||||

@@ -114,7 +112,6 @@

|

|||||||

'nginx',

|

'nginx',

|

||||||

'telegraf',

|

'telegraf',

|

||||||

'influxdb',

|

'influxdb',

|

||||||

'strelka.manager',

|

|

||||||

'soc',

|

'soc',

|

||||||

'kratos',

|

'kratos',

|

||||||

'elastic-fleet-package-registry',

|

'elastic-fleet-package-registry',

|

||||||

@@ -125,9 +122,8 @@

|

|||||||

'suricata.manager',

|

'suricata.manager',

|

||||||

'utility',

|

'utility',

|

||||||

'schedule',

|

'schedule',

|

||||||

'docker_clean',

|

'soctopus',

|

||||||

'stig',

|

'docker_clean'

|

||||||

'kafka'

|

|

||||||

],

|

],

|

||||||

'so-searchnode': [

|

'so-searchnode': [

|

||||||

'ssl',

|

'ssl',

|

||||||

@@ -135,8 +131,7 @@

|

|||||||

'telegraf',

|

'telegraf',

|

||||||

'firewall',

|

'firewall',

|

||||||

'schedule',

|

'schedule',

|

||||||

'docker_clean',

|

'docker_clean'

|

||||||

'stig'

|

|

||||||

],

|

],

|

||||||

'so-standalone': [

|

'so-standalone': [

|

||||||

'salt.master',

|

'salt.master',

|

||||||

@@ -159,10 +154,9 @@

|

|||||||

'healthcheck',

|

'healthcheck',

|

||||||

'utility',

|

'utility',

|

||||||

'schedule',

|

'schedule',

|

||||||

|

'soctopus',

|

||||||

'tcpreplay',

|

'tcpreplay',

|

||||||

'docker_clean',

|

'docker_clean'

|

||||||

'stig',

|

|

||||||

'kafka'

|

|

||||||

],

|

],

|

||||||

'so-sensor': [

|

'so-sensor': [

|

||||||

'ssl',

|

'ssl',

|

||||||

@@ -174,15 +168,13 @@

|

|||||||

'healthcheck',

|

'healthcheck',

|

||||||

'schedule',

|

'schedule',

|

||||||

'tcpreplay',

|

'tcpreplay',

|

||||||

'docker_clean',

|

'docker_clean'

|

||||||

'stig'

|

|

||||||

],

|

],

|

||||||

'so-fleet': [

|

'so-fleet': [

|

||||||

'ssl',

|

'ssl',

|

||||||

'telegraf',

|

'telegraf',

|

||||||

'firewall',

|

'firewall',

|

||||||

'logstash',

|

'logstash',

|

||||||

'nginx',

|

|

||||||

'healthcheck',

|

'healthcheck',

|

||||||

'schedule',

|

'schedule',

|

||||||

'elasticfleet',

|

'elasticfleet',

|

||||||

@@ -193,10 +185,7 @@

|

|||||||

'telegraf',

|

'telegraf',

|

||||||

'firewall',

|

'firewall',

|

||||||

'schedule',

|

'schedule',

|

||||||

'docker_clean',

|

'docker_clean'

|

||||||

'kafka',

|

|

||||||

'elasticsearch.ca',

|

|

||||||

'stig'

|

|

||||||

],

|

],

|

||||||

'so-desktop': [

|

'so-desktop': [

|

||||||

'ssl',

|

'ssl',

|

||||||

@@ -205,6 +194,10 @@

|

|||||||

],

|

],

|

||||||

}, grain='role') %}

|

}, grain='role') %}

|

||||||

|

|

||||||

|

{% if grains.role in ['so-eval', 'so-manager', 'so-managersearch', 'so-standalone'] %}

|

||||||

|

{% do allowed_states.append('mysql') %}

|

||||||

|

{% endif %}

|

||||||

|

|

||||||

{%- if grains.role in ['so-sensor', 'so-eval', 'so-standalone', 'so-heavynode'] %}

|

{%- if grains.role in ['so-sensor', 'so-eval', 'so-standalone', 'so-heavynode'] %}

|

||||||

{% do allowed_states.append('zeek') %}

|

{% do allowed_states.append('zeek') %}

|

||||||

{%- endif %}

|

{%- endif %}

|

||||||

@@ -230,6 +223,10 @@

|

|||||||

{% do allowed_states.append('elastalert') %}

|

{% do allowed_states.append('elastalert') %}

|

||||||

{% endif %}

|

{% endif %}

|

||||||

|

|

||||||

|

{% if grains.role in ['so-eval', 'so-manager', 'so-standalone', 'so-managersearch'] %}

|

||||||

|

{% do allowed_states.append('playbook') %}

|

||||||

|

{% endif %}

|

||||||

|

|

||||||

{% if grains.role in ['so-manager', 'so-standalone', 'so-searchnode', 'so-managersearch', 'so-heavynode', 'so-receiver'] %}

|

{% if grains.role in ['so-manager', 'so-standalone', 'so-searchnode', 'so-managersearch', 'so-heavynode', 'so-receiver'] %}

|

||||||

{% do allowed_states.append('logstash') %}

|

{% do allowed_states.append('logstash') %}

|

||||||

{% endif %}

|

{% endif %}

|

||||||

|

|||||||
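The Jinja blocks at the end of this file extend `allowed_states` based on `grains.role`. The pattern can be sketched in Python as follows. The role lists are copied from the right-hand side of the hunks above (the `mysql` and `playbook` blocks appear only on that side), while the names `ROLE_GATED_STATES` and `extend_allowed_states` are hypothetical and not part of the repository.

```python
# (state, roles that may run it), in the order the blocks appear in the diff.
ROLE_GATED_STATES = [
    ("mysql", {"so-eval", "so-manager", "so-managersearch", "so-standalone"}),
    ("zeek", {"so-sensor", "so-eval", "so-standalone", "so-heavynode"}),
    ("playbook", {"so-eval", "so-manager", "so-standalone", "so-managersearch"}),
    ("logstash", {"so-manager", "so-standalone", "so-searchnode",
                  "so-managersearch", "so-heavynode", "so-receiver"}),
]


def extend_allowed_states(role, base_states):
    """Return a copy of base_states with the role-gated states appended."""
    allowed = list(base_states)
    for state, roles in ROLE_GATED_STATES:
        if role in roles:
            allowed.append(state)
    return allowed
```

This mirrors the `{% if grains.role in [...] %}` / `{% do allowed_states.append(...) %}` pairs: the per-role base list is built first, then each conditional block appends at most one extra state.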

@@ -1,10 +1,7 @@

|

|||||||

{% from 'vars/globals.map.jinja' import GLOBALS %}

|

|

||||||

{% if GLOBALS.pcap_engine == "TRANSITION" %}

|

|

||||||

{% set PCAPBPF = ["ip and host 255.255.255.1 and port 1"] %}

|

|

||||||

{% else %}

|

|

||||||

{% import_yaml 'bpf/defaults.yaml' as BPFDEFAULTS %}

|

{% import_yaml 'bpf/defaults.yaml' as BPFDEFAULTS %}

|

||||||

{% set BPFMERGED = salt['pillar.get']('bpf', BPFDEFAULTS.bpf, merge=True) %}

|

{% set BPFMERGED = salt['pillar.get']('bpf', BPFDEFAULTS.bpf, merge=True) %}

|

||||||

{% import 'bpf/macros.jinja' as MACROS %}

|

{% import 'bpf/macros.jinja' as MACROS %}

|

||||||

|

|

||||||

{{ MACROS.remove_comments(BPFMERGED, 'pcap') }}

|

{{ MACROS.remove_comments(BPFMERGED, 'pcap') }}

|

||||||

|

|

||||||

{% set PCAPBPF = BPFMERGED.pcap %}

|

{% set PCAPBPF = BPFMERGED.pcap %}

|

||||||

{% endif %}

|

|

||||||

|

|||||||

@@ -1,6 +1,6 @@

|

|||||||

bpf:

|

bpf:

|

||||||

pcap:

|

pcap:

|

||||||

description: List of BPF filters to apply to Stenographer.

|

description: List of BPF filters to apply to PCAP.

|

||||||

multiline: True

|

multiline: True

|

||||||

forcedType: "[]string"

|

forcedType: "[]string"

|

||||||

helpLink: bpf.html

|

helpLink: bpf.html

|

||||||

|

|||||||

@@ -1,3 +1,6 @@

|

|||||||

|

mine_functions:

|

||||||

|

x509.get_pem_entries: [/etc/pki/ca.crt]

|

||||||

|

|

||||||

x509_signing_policies:

|

x509_signing_policies:

|

||||||

filebeat:

|

filebeat:

|

||||||

- minions: '*'

|

- minions: '*'

|

||||||

@@ -67,17 +70,3 @@ x509_signing_policies:

|

|||||||

- authorityKeyIdentifier: keyid,issuer:always

|

- authorityKeyIdentifier: keyid,issuer:always

|

||||||

- days_valid: 820

|

- days_valid: 820

|

||||||

- copypath: /etc/pki/issued_certs/

|

- copypath: /etc/pki/issued_certs/

|

||||||

kafka:

|

|

||||||

- minions: '*'

|

|

||||||

- signing_private_key: /etc/pki/ca.key

|

|

||||||

- signing_cert: /etc/pki/ca.crt

|

|

||||||

- C: US

|

|

||||||

- ST: Utah

|

|

||||||

- L: Salt Lake City

|

|

||||||

- basicConstraints: "critical CA:false"

|

|

||||||

- keyUsage: "digitalSignature, keyEncipherment"

|

|

||||||

- subjectKeyIdentifier: hash

|

|

||||||

- authorityKeyIdentifier: keyid,issuer:always

|

|

||||||

- extendedKeyUsage: "serverAuth, clientAuth"

|

|

||||||

- days_valid: 820

|

|

||||||

- copypath: /etc/pki/issued_certs/

|

|

||||||

|

|||||||

@@ -4,6 +4,7 @@

|

|||||||

{% from 'vars/globals.map.jinja' import GLOBALS %}

|

{% from 'vars/globals.map.jinja' import GLOBALS %}

|

||||||

|

|

||||||

include:

|

include:

|

||||||

|

- common.soup_scripts

|

||||||

- common.packages

|

- common.packages

|

||||||

{% if GLOBALS.role in GLOBALS.manager_roles %}

|

{% if GLOBALS.role in GLOBALS.manager_roles %}

|

||||||

- manager.elasticsearch # needed for elastic_curl_config state

|

- manager.elasticsearch # needed for elastic_curl_config state

|

||||||

@@ -133,18 +134,6 @@ common_sbin_jinja:

|

|||||||

- file_mode: 755

|

- file_mode: 755

|

||||||

- template: jinja

|

- template: jinja

|

||||||

|

|

||||||

{% if not GLOBALS.is_manager%}

|

|

||||||

# prior to 2.4.50 these scripts were in common/tools/sbin on the manager because of soup and distributed to non managers

|

|

||||||

# these two states remove the scripts from non manager nodes

|

|

||||||

remove_soup:

|

|

||||||

file.absent:

|

|

||||||

- name: /usr/sbin/soup

|

|

||||||

|

|

||||||

remove_so-firewall:

|

|

||||||

file.absent:

|

|

||||||

- name: /usr/sbin/so-firewall

|

|

||||||

{% endif %}

|

|

||||||

|

|

||||||

so-status_script:

|

so-status_script:

|

||||||

file.managed:

|

file.managed:

|

||||||

- name: /usr/sbin/so-status

|

- name: /usr/sbin/so-status

|

||||||

|

|||||||

@@ -1,117 +1,23 @@

|

|||||||

# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one

|

# Sync some Utilities

|

||||||

# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at

|

soup_scripts:

|

||||||

# https://securityonion.net/license; you may not use this file except in compliance with the

|

file.recurse:

|

||||||

# Elastic License 2.0.

|

- name: /usr/sbin

|

||||||

|

- user: root

|

||||||

|

- group: root

|

||||||

|

- file_mode: 755

|

||||||

|

- source: salt://common/tools/sbin

|

||||||

|

- include_pat:

|

||||||

|

- so-common

|

||||||

|

- so-image-common

|

||||||

|

|

||||||

{% if '2.4' in salt['cp.get_file_str']('/etc/soversion') %}

|

soup_manager_scripts:

|

||||||

|

file.recurse:

|

||||||

{% import_yaml '/opt/so/saltstack/local/pillar/global/soc_global.sls' as SOC_GLOBAL %}

|

- name: /usr/sbin

|

||||||

{% if SOC_GLOBAL.global.airgap %}

|

- user: root

|

||||||

{% set UPDATE_DIR='/tmp/soagupdate/SecurityOnion' %}

|

- group: root

|

||||||

{% else %}

|

- file_mode: 755

|

||||||

{% set UPDATE_DIR='/tmp/sogh/securityonion' %}

|

- source: salt://manager/tools/sbin

|

||||||

{% endif %}

|

- include_pat:

|

||||||

|

- so-firewall

|

||||||

remove_common_soup:

|

- so-repo-sync

|

||||||

file.absent:

|

- soup

|

||||||

- name: /opt/so/saltstack/default/salt/common/tools/sbin/soup

|

|

||||||

|

|

||||||

remove_common_so-firewall:

|

|

||||||

file.absent:

|

|

||||||

- name: /opt/so/saltstack/default/salt/common/tools/sbin/so-firewall

|

|

||||||

|

|

||||||

# This section is used to put the scripts in place in the Salt file system

|

|

||||||

# in case a state run tries to overwrite what we do in the next section.

|

|

||||||

copy_so-common_common_tools_sbin:

|

|

||||||

file.copy:

|

|

||||||

- name: /opt/so/saltstack/default/salt/common/tools/sbin/so-common

|

|

||||||

- source: {{UPDATE_DIR}}/salt/common/tools/sbin/so-common

|

|

||||||

- force: True

|

|

||||||

- preserve: True

|

|

||||||

|

|

||||||

copy_so-image-common_common_tools_sbin:

|

|

||||||

file.copy:

|

|

||||||

- name: /opt/so/saltstack/default/salt/common/tools/sbin/so-image-common

|

|

||||||

- source: {{UPDATE_DIR}}/salt/common/tools/sbin/so-image-common

|

|

||||||

- force: True

|

|

||||||

- preserve: True

|

|

||||||

|

|

||||||

copy_soup_manager_tools_sbin:

|

|

||||||

file.copy:

|

|

||||||

- name: /opt/so/saltstack/default/salt/manager/tools/sbin/soup

|

|

||||||

- source: {{UPDATE_DIR}}/salt/manager/tools/sbin/soup

|

|

||||||

- force: True

|

|

||||||

- preserve: True

|

|

||||||

|

|

||||||

copy_so-firewall_manager_tools_sbin:

|

|

||||||

file.copy:

|

|

||||||

- name: /opt/so/saltstack/default/salt/manager/tools/sbin/so-firewall

|

|

||||||

- source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-firewall

|

|

||||||

- force: True

|

|

||||||

- preserve: True

|

|

||||||

|

|

||||||

copy_so-yaml_manager_tools_sbin:

|

|

||||||

file.copy:

|

|

||||||

- name: /opt/so/saltstack/default/salt/manager/tools/sbin/so-yaml.py

|

|

||||||

- source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-yaml.py

|

|

||||||

- force: True

|

|

||||||

- preserve: True

|

|

||||||

|

|

||||||

copy_so-repo-sync_manager_tools_sbin:

|

|

||||||

file.copy:

|

|

||||||

- name: /opt/so/saltstack/default/salt/manager/tools/sbin/so-repo-sync

|

|

||||||

- source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-repo-sync

|

|

||||||

- preserve: True

|

|

||||||

|

|

||||||

# This section is used to put the new script in place so that it can be called during soup.

|

|

||||||

# It is faster than calling the states that normally manage them to put them in place.

|

|

||||||

copy_so-common_sbin:

|

|

||||||

file.copy:

|

|

||||||

- name: /usr/sbin/so-common

|

|

||||||

- source: {{UPDATE_DIR}}/salt/common/tools/sbin/so-common

|

|

||||||

- force: True

|

|

||||||

- preserve: True

|

|

||||||

|

|

||||||

copy_so-image-common_sbin:

|

|

||||||

file.copy:

|

|

||||||

- name: /usr/sbin/so-image-common

|

|

||||||

- source: {{UPDATE_DIR}}/salt/common/tools/sbin/so-image-common

|

|

||||||

- force: True

|

|

||||||

- preserve: True

|

|

||||||

|

|

||||||

copy_soup_sbin:

|

|

||||||

file.copy:

|

|

||||||

- name: /usr/sbin/soup

|

|

||||||

- source: {{UPDATE_DIR}}/salt/manager/tools/sbin/soup

|

|

||||||

- force: True

|

|

||||||

- preserve: True

|

|

||||||

|

|

||||||

copy_so-firewall_sbin:

|

|

||||||

file.copy:

|

|

||||||

- name: /usr/sbin/so-firewall

|

|

||||||

- source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-firewall

|

|

||||||

- force: True

|

|

||||||

- preserve: True

|

|

||||||

|

|

||||||

copy_so-yaml_sbin:

|

|

||||||

file.copy:

|

|

||||||

- name: /usr/sbin/so-yaml.py

|

|

||||||

- source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-yaml.py

|

|

||||||

- force: True

|

|

||||||

- preserve: True

|

|

||||||

|

|

||||||

copy_so-repo-sync_sbin:

|

|

||||||

file.copy:

|

|

||||||

- name: /usr/sbin/so-repo-sync

|

|

||||||

- source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-repo-sync

|

|

||||||

- force: True

|

|

||||||

- preserve: True

|

|

||||||

|

|

||||||

{% else %}

|

|

||||||

fix_23_soup_sbin:

|

|

||||||

cmd.run:

|

|

||||||

- name: curl -s -f -o /usr/sbin/soup https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.3/main/salt/common/tools/sbin/soup

|

|

||||||

fix_23_soup_salt:

|

|

||||||

cmd.run:

|

|

||||||

- name: curl -s -f -o /opt/so/saltstack/defalt/salt/common/tools/sbin/soup https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.3/main/salt/common/tools/sbin/soup

|

|

||||||

{% endif %}

|

|

||||||

|

|||||||

@@ -5,13 +5,8 @@

|

|||||||

# https://securityonion.net/license; you may not use this file except in compliance with the

|

# https://securityonion.net/license; you may not use this file except in compliance with the

|

||||||

# Elastic License 2.0.

|

# Elastic License 2.0.

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

. /usr/sbin/so-common

|

. /usr/sbin/so-common

|

||||||

|

|

||||||

cat << EOF

|

salt-call state.highstate -l info

|

||||||

|

|

||||||

so-checkin will run a full salt highstate to apply all salt states. If a highstate is already running, this request will be queued and so it may pause for a few minutes before you see any more output. For more information about so-checkin and salt, please see:

|

|

||||||

https://docs.securityonion.net/en/2.4/salt.html

|

|

||||||

|

|

||||||

EOF

|

|

||||||

|

|

||||||

salt-call state.highstate -l info queue=True

|

|

||||||

|

|||||||

@@ -31,11 +31,6 @@ if ! echo "$PATH" | grep -q "/usr/sbin"; then

|

|||||||

export PATH="$PATH:/usr/sbin"

|

export PATH="$PATH:/usr/sbin"

|

||||||

fi

|

fi

|

||||||

|

|

||||||

# See if a proxy is set. If so use it.

|

|

||||||

if [ -f /etc/profile.d/so-proxy.sh ]; then

|

|

||||||

. /etc/profile.d/so-proxy.sh

|

|

||||||

fi

|

|

||||||

|

|

||||||

# Define a banner to separate sections

|

# Define a banner to separate sections

|

||||||

banner="========================================================================="

|

banner="========================================================================="

|

||||||

|

|

||||||

@@ -184,21 +179,6 @@ copy_new_files() {

|

|||||||

cd /tmp

|

cd /tmp

|

||||||

}

|

}

|

||||||

|

|

||||||

create_local_directories() {

|

|

||||||

echo "Creating local pillar and salt directories if needed"

|

|

||||||

PILLARSALTDIR=$1

|

|

||||||

local_salt_dir="/opt/so/saltstack/local"

|

|

||||||

for i in "pillar" "salt"; do

|

|

||||||

for d in $(find $PILLARSALTDIR/$i -type d); do

|

|

||||||

suffixdir=${d//$PILLARSALTDIR/}

|

|

||||||

if [ ! -d "$local_salt_dir/$suffixdir" ]; then

|

|

||||||

mkdir -pv $local_salt_dir$suffixdir

|

|

||||||

fi

|

|

||||||

done

|

|

||||||

chown -R socore:socore $local_salt_dir/$i

|

|

||||||

done

|

|

||||||

}

|

|

||||||

|

|

||||||

disable_fastestmirror() {

|

disable_fastestmirror() {

|

||||||

sed -i 's/enabled=1/enabled=0/' /etc/yum/pluginconf.d/fastestmirror.conf

|

sed -i 's/enabled=1/enabled=0/' /etc/yum/pluginconf.d/fastestmirror.conf

|

||||||

}

|

}

|

||||||

@@ -268,14 +248,6 @@ get_random_value() {

|

|||||||

head -c 5000 /dev/urandom | tr -dc 'a-zA-Z0-9' | fold -w $length | head -n 1

|

head -c 5000 /dev/urandom | tr -dc 'a-zA-Z0-9' | fold -w $length | head -n 1

|

||||||

}

|

}

|

||||||

|

|

||||||

get_agent_count() {

|

|

||||||

if [ -f /opt/so/log/agents/agentstatus.log ]; then

|

|

||||||

AGENTCOUNT=$(cat /opt/so/log/agents/agentstatus.log | grep -wF active | awk '{print $2}')

|

|

||||||

else

|

|

||||||

AGENTCOUNT=0

|

|

||||||

fi

|

|

||||||

}

|

|

||||||

|

|

||||||

gpg_rpm_import() {

|

gpg_rpm_import() {

|

||||||

if [[ $is_oracle ]]; then

|

if [[ $is_oracle ]]; then

|

||||||

if [[ "$WHATWOULDYOUSAYYAHDOHERE" == "setup" ]]; then

|

if [[ "$WHATWOULDYOUSAYYAHDOHERE" == "setup" ]]; then

|

||||||

@@ -357,7 +329,7 @@ lookup_salt_value() {

|

|||||||

local=""

|

local=""

|

||||||

fi

|

fi

|

||||||

|

|

||||||

salt-call -lerror --no-color ${kind}.get ${group}${key} --out=${output} ${local}

|

salt-call --no-color ${kind}.get ${group}${key} --out=${output} ${local}

|

||||||

}

|

}

|

||||||

|

|

||||||

lookup_pillar() {

|

lookup_pillar() {

|

||||||

@@ -394,13 +366,6 @@ is_feature_enabled() {

|

|||||||

return 1

|

return 1

|

||||||

}

|

}

|

||||||

|

|

||||||

read_feat() {

|

|

||||||

if [ -f /opt/so/log/sostatus/lks_enabled ]; then

|

|

||||||

lic_id=$(cat /opt/so/saltstack/local/pillar/soc/license.sls | grep license_id: | awk '{print $2}')

|

|

||||||

echo "$lic_id/$(cat /opt/so/log/sostatus/lks_enabled)/$(cat /opt/so/log/sostatus/fps_enabled)"

|

|

||||||

fi

|

|

||||||

}

|

|

||||||

|

|

||||||

require_manager() {

|

require_manager() {

|

||||||

if is_manager_node; then

|

if is_manager_node; then

|

||||||

echo "This is a manager, so we can proceed."

|

echo "This is a manager, so we can proceed."

|

||||||

@@ -594,15 +559,6 @@ status () {

|

|||||||

printf "\n=========================================================================\n$(date) | $1\n=========================================================================\n"

|

printf "\n=========================================================================\n$(date) | $1\n=========================================================================\n"

|

||||||

}

|

}

|

||||||

|

|

||||||

sync_options() {

|

|

||||||

set_version

|

|

||||||

set_os

|

|

||||||

salt_minion_count

|

|

||||||

get_agent_count

|

|

||||||

|

|

||||||

echo "$VERSION/$OS/$(uname -r)/$MINIONCOUNT:$AGENTCOUNT/$(read_feat)"

|

|

||||||

}

|

|

||||||

|

|

||||||

systemctl_func() {

|

systemctl_func() {

|

||||||

local action=$1

|

local action=$1

|

||||||

local echo_action=$1

|

local echo_action=$1

|

||||||

|

|||||||

@@ -8,7 +8,6 @@

|

|||||||

import sys

|

import sys

|

||||||

import subprocess

|

import subprocess

|

||||||

import os

|

import os

|

||||||

import json

|

|

||||||

|

|

||||||

sys.path.append('/opt/saltstack/salt/lib/python3.10/site-packages/')

|

sys.path.append('/opt/saltstack/salt/lib/python3.10/site-packages/')

|

||||||

import salt.config

|

import salt.config

|

||||||

@@ -37,67 +36,17 @@ def check_needs_restarted():

|

|||||||

with open(outfile, 'w') as f:

|

with open(outfile, 'w') as f:

|

||||||

f.write(val)

|

f.write(val)

|

||||||

|

|

||||||

def check_for_fps():

|

|

||||||

feat = 'fps'

|

|

||||||

feat_full = feat.replace('ps', 'ips')

|

|

||||||

fps = 0

|

|

||||||

try:

|

|

||||||

result = subprocess.run([feat_full + '-mode-setup', '--is-enabled'], stdout=subprocess.PIPE)

|

|

||||||

if result.returncode == 0:

|

|

||||||

fps = 1

|

|

||||||

except FileNotFoundError:

|

|

||||||

fn = '/proc/sys/crypto/' + feat_full + '_enabled'

|

|

||||||

try:

|

|

||||||

with open(fn, 'r') as f:

|

|

||||||

contents = f.read()

|

|

||||||

if '1' in contents:

|

|

||||||

fps = 1

|

|

||||||

except:

|

|

||||||

# Unknown, so assume 0

|

|

||||||

fps = 0

|

|

||||||

|

|

||||||

with open('/opt/so/log/sostatus/fps_enabled', 'w') as f:

|

|

||||||

f.write(str(fps))

|

|

||||||

|

|

||||||

def check_for_lks():

|

|

||||||

feat = 'Lks'

|

|

||||||

feat_full = feat.replace('ks', 'uks')

|

|

||||||

lks = 0

|

|

||||||

result = subprocess.run(['lsblk', '-p', '-J'], check=True, stdout=subprocess.PIPE)

|

|

||||||

data = json.loads(result.stdout)

|

|

||||||

for device in data['blockdevices']:

|

|

||||||

if 'children' in device:

|

|

||||||

for gc in device['children']:

|

|

||||||

if 'children' in gc:

|

|

||||||

try:

|

|

||||||

arg = 'is' + feat_full

|

|

||||||

result = subprocess.run(['cryptsetup', arg, gc['name']], stdout=subprocess.PIPE)

|

|

||||||

if result.returncode == 0:

|

|

||||||

lks = 1

|

|

||||||

except FileNotFoundError:

|

|

||||||

for ggc in gc['children']:

|

|

||||||

if 'crypt' in ggc['type']:

|

|

||||||

lks = 1

|

|

||||||

if lks:

|

|

||||||

break

|

|

||||||

with open('/opt/so/log/sostatus/lks_enabled', 'w') as f:

|

|

||||||

f.write(str(lks))

|

|

||||||

|

|

||||||

def fail(msg):

|

def fail(msg):

|

||||||

print(msg, file=sys.stderr)

|

print(msg, file=sys.stderr)

|

||||||

sys.exit(1)

|

sys.exit(1)

|

||||||

|

|

||||||

|

|

||||||

def main():

|

def main():

|

||||||

proc = subprocess.run(['id', '-u'], stdout=subprocess.PIPE, encoding="utf-8")

|

proc = subprocess.run(['id', '-u'], stdout=subprocess.PIPE, encoding="utf-8")

|

||||||

if proc.stdout.strip() != "0":

|

if proc.stdout.strip() != "0":

|

||||||

fail("This program must be run as root")

|

fail("This program must be run as root")

|

||||||

# Ensure that umask is 0022 so that files created by this script have rw-r-r permissions

|

|

||||||

org_umask = os.umask(0o022)

|

|

||||||

check_needs_restarted()

|

check_needs_restarted()

|

||||||

check_for_fps()

|

|

||||||

check_for_lks()

|

|

||||||

# Restore umask to whatever value was set before this script was run. SXIG sets to 0077 rw---

|

|

||||||

os.umask(org_umask)

|

|

||||||

|

|

||||||

if __name__ == "__main__":

|

if __name__ == "__main__":

|

||||||

main()

|

main()

|

||||||

|

|||||||

@@ -50,14 +50,16 @@ container_list() {

|

|||||||

"so-idh"

|

"so-idh"

|

||||||

"so-idstools"

|

"so-idstools"

|

||||||

"so-influxdb"

|

"so-influxdb"

|

||||||

"so-kafka"

|

|

||||||

"so-kibana"

|

"so-kibana"

|

||||||

"so-kratos"

|

"so-kratos"

|

||||||

"so-logstash"

|

"so-logstash"

|

||||||

|

"so-mysql"

|

||||||

"so-nginx"

|

"so-nginx"

|

||||||

"so-pcaptools"

|

"so-pcaptools"

|

||||||

|

"so-playbook"

|

||||||

"so-redis"

|

"so-redis"

|

||||||

"so-soc"

|

"so-soc"

|

||||||

|

"so-soctopus"

|

||||||

"so-steno"

|

"so-steno"

|

||||||

"so-strelka-backend"

|

"so-strelka-backend"

|

||||||

"so-strelka-filestream"

|

"so-strelka-filestream"

|

||||||

|

|||||||

@@ -49,6 +49,10 @@ if [ "$CONTINUE" == "y" ]; then

|

|||||||

sed -i "s|$OLD_IP|$NEW_IP|g" $file

|

sed -i "s|$OLD_IP|$NEW_IP|g" $file

|

||||||

done

|

done

|

||||||

|

|

||||||

|

echo "Granting MySQL root user permissions on $NEW_IP"

|

||||||

|

docker exec -i so-mysql mysql --user=root --password=$(lookup_pillar_secret 'mysql') -e "GRANT ALL PRIVILEGES ON *.* TO 'root'@'$NEW_IP' IDENTIFIED BY '$(lookup_pillar_secret 'mysql')' WITH GRANT OPTION;" &> /dev/null

|

||||||

|

echo "Removing MySQL root user from $OLD_IP"

|

||||||

|

docker exec -i so-mysql mysql --user=root --password=$(lookup_pillar_secret 'mysql') -e "DROP USER 'root'@'$OLD_IP';" &> /dev/null

|

||||||

echo "Updating Kibana dashboards"

|

echo "Updating Kibana dashboards"

|

||||||

salt-call state.apply kibana.so_savedobjects_defaults -l info queue=True

|

salt-call state.apply kibana.so_savedobjects_defaults -l info queue=True

|

||||||

|

|

||||||

|

|||||||

@@ -122,7 +122,6 @@ if [[ $EXCLUDE_STARTUP_ERRORS == 'Y' ]]; then

|

|||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|error while communicating" # Elasticsearch MS -> HN "sensor" temporarily unavailable

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|error while communicating" # Elasticsearch MS -> HN "sensor" temporarily unavailable

|

||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|tls handshake error" # Docker registry container when new node comes onlines

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|tls handshake error" # Docker registry container when new node comes onlines

|

||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|Unable to get license information" # Logstash trying to contact ES before it's ready

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|Unable to get license information" # Logstash trying to contact ES before it's ready

|

||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|process already finished" # Telegraf script finished just as the auto kill timeout kicked in

|

|

||||||

fi

|

fi

|

||||||

|

|

||||||

if [[ $EXCLUDE_FALSE_POSITIVE_ERRORS == 'Y' ]]; then

|

if [[ $EXCLUDE_FALSE_POSITIVE_ERRORS == 'Y' ]]; then

|

||||||

@@ -155,11 +154,15 @@ if [[ $EXCLUDE_KNOWN_ERRORS == 'Y' ]]; then

|

|||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|fail\\(error\\)" # redis/python generic stack line, rely on other lines for actual error

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|fail\\(error\\)" # redis/python generic stack line, rely on other lines for actual error

|

||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|urlerror" # idstools connection timeout

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|urlerror" # idstools connection timeout

|

||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|timeouterror" # idstools connection timeout

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|timeouterror" # idstools connection timeout

|

||||||

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|forbidden" # playbook

|

||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|_ml" # Elastic ML errors

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|_ml" # Elastic ML errors

|

||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|context canceled" # elastic agent during shutdown

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|context canceled" # elastic agent during shutdown

|

||||||

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|exited with code 128" # soctopus errors during forced restart by highstate

|

||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|geoip databases update" # airgap can't update GeoIP DB

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|geoip databases update" # airgap can't update GeoIP DB

|

||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|filenotfounderror" # bug in 2.4.10 filecheck salt state caused duplicate cronjobs

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|filenotfounderror" # bug in 2.4.10 filecheck salt state caused duplicate cronjobs

|

||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|salt-minion-check" # bug in early 2.4 place Jinja script in non-jinja salt dir causing cron output errors

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|salt-minion-check" # bug in early 2.4 place Jinja script in non-jinja salt dir causing cron output errors

|

||||||

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|generating elastalert config" # playbook expected error

|

||||||

|

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|activerecord" # playbook expected error

|

||||||

EXCLUDED_ERRORS="$EXCLUDED_ERRORS|monitoring.metrics" # known issue with elastic agent casting the field incorrectly if an integer value shows up before a float

|