Compare commits

11 Commits

2.4.20-202

...

feature/us

| Author | SHA1 | Date | |
|---|---|---|---|
| | 62c702e269 | | |
| | f10290246f | | |
| | c919f6bca0 | | |
| | 51b421a165 | | |
| | 86ff54e844 | | |
| | b8cb3f5815 | | |
| | 381a51271f | | |
| | 10500178d5 | | |
| | e81e66f40d | | |
| | f6bd74aadf | | |
| | 322c2804fc | | |

546

.github/.gitleaks.toml

vendored

@@ -1,546 +0,0 @@

|

||||

title = "gitleaks config"

|

||||

|

||||

# Gitleaks rules are defined by regular expressions and entropy ranges.

|

||||

# Some secrets have unique signatures which make detecting those secrets easy.

|

||||

# Examples of those secrets would be GitLab Personal Access Tokens, AWS keys, and GitHub Access Tokens.

|

||||

# All these examples have defined prefixes like `glpat`, `AKIA`, `ghp_`, etc.

|

||||

#

|

||||

# Other secrets might just be a hash which means we need to write more complex rules to verify

|

||||

# that what we are matching is a secret.

|

||||

#

|

||||

# Here is an example of a semi-generic secret

|

||||

#

|

||||

# discord_client_secret = "8dyfuiRyq=vVc3RRr_edRk-fK__JItpZ"

|

||||

#

|

||||

# We can write a regular expression to capture the variable name (identifier),

|

||||

# the assignment symbol (like '=' or ':='), and finally the actual secret.

|

||||

# The structure of a rule to match this example secret is below:

|

||||

#

|

||||

# Beginning string

|

||||

# quotation

|

||||

# │ End string quotation

|

||||

# │ │

|

||||

# ▼ ▼

|

||||

# (?i)(discord[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9=_\-]{32})['\"]

|

||||

#

|

||||

# ▲ ▲ ▲

|

||||

# │ │ │

|

||||

# │ │ │

|

||||

# identifier assignment symbol

|

||||

# Secret

|

||||

#

|

||||

[[rules]]

|

||||

id = "gitlab-pat"

|

||||

description = "GitLab Personal Access Token"

|

||||

regex = '''glpat-[0-9a-zA-Z\-\_]{20}'''

|

||||

|

||||

[[rules]]

|

||||

id = "aws-access-token"

|

||||

description = "AWS"

|

||||

regex = '''(A3T[A-Z0-9]|AKIA|AGPA|AIDA|AROA|AIPA|ANPA|ANVA|ASIA)[A-Z0-9]{16}'''

|

||||

|

||||

# Cryptographic keys

|

||||

[[rules]]

|

||||

id = "PKCS8-PK"

|

||||

description = "PKCS8 private key"

|

||||

regex = '''-----BEGIN PRIVATE KEY-----'''

|

||||

|

||||

[[rules]]

|

||||

id = "RSA-PK"

|

||||

description = "RSA private key"

|

||||

regex = '''-----BEGIN RSA PRIVATE KEY-----'''

|

||||

|

||||

[[rules]]

|

||||

id = "OPENSSH-PK"

|

||||

description = "SSH private key"

|

||||

regex = '''-----BEGIN OPENSSH PRIVATE KEY-----'''

|

||||

|

||||

[[rules]]

|

||||

id = "PGP-PK"

|

||||

description = "PGP private key"

|

||||

regex = '''-----BEGIN PGP PRIVATE KEY BLOCK-----'''

|

||||

|

||||

[[rules]]

|

||||

id = "github-pat"

|

||||

description = "GitHub Personal Access Token"

|

||||

regex = '''ghp_[0-9a-zA-Z]{36}'''

|

||||

|

||||

[[rules]]

|

||||

id = "github-oauth"

|

||||

description = "GitHub OAuth Access Token"

|

||||

regex = '''gho_[0-9a-zA-Z]{36}'''

|

||||

|

||||

[[rules]]

|

||||

id = "SSH-DSA-PK"

|

||||

description = "SSH (DSA) private key"

|

||||

regex = '''-----BEGIN DSA PRIVATE KEY-----'''

|

||||

|

||||

[[rules]]

|

||||

id = "SSH-EC-PK"

|

||||

description = "SSH (EC) private key"

|

||||

regex = '''-----BEGIN EC PRIVATE KEY-----'''

|

||||

|

||||

|

||||

[[rules]]

|

||||

id = "github-app-token"

|

||||

description = "GitHub App Token"

|

||||

regex = '''(ghu|ghs)_[0-9a-zA-Z]{36}'''

|

||||

|

||||

[[rules]]

|

||||

id = "github-refresh-token"

|

||||

description = "GitHub Refresh Token"

|

||||

regex = '''ghr_[0-9a-zA-Z]{76}'''

|

||||

|

||||

[[rules]]

|

||||

id = "shopify-shared-secret"

|

||||

description = "Shopify shared secret"

|

||||

regex = '''shpss_[a-fA-F0-9]{32}'''

|

||||

|

||||

[[rules]]

|

||||

id = "shopify-access-token"

|

||||

description = "Shopify access token"

|

||||

regex = '''shpat_[a-fA-F0-9]{32}'''

|

||||

|

||||

[[rules]]

|

||||

id = "shopify-custom-access-token"

|

||||

description = "Shopify custom app access token"

|

||||

regex = '''shpca_[a-fA-F0-9]{32}'''

|

||||

|

||||

[[rules]]

|

||||

id = "shopify-private-app-access-token"

|

||||

description = "Shopify private app access token"

|

||||

regex = '''shppa_[a-fA-F0-9]{32}'''

|

||||

|

||||

[[rules]]

|

||||

id = "slack-access-token"

|

||||

description = "Slack token"

|

||||

regex = '''xox[baprs]-([0-9a-zA-Z]{10,48})?'''

|

||||

|

||||

[[rules]]

|

||||

id = "stripe-access-token"

|

||||

description = "Stripe"

|

||||

regex = '''(?i)(sk|pk)_(test|live)_[0-9a-z]{10,32}'''

|

||||

|

||||

[[rules]]

|

||||

id = "pypi-upload-token"

|

||||

description = "PyPI upload token"

|

||||

regex = '''pypi-AgEIcHlwaS5vcmc[A-Za-z0-9\-_]{50,1000}'''

|

||||

|

||||

[[rules]]

|

||||

id = "gcp-service-account"

|

||||

description = "Google (GCP) Service-account"

|

||||

regex = '''\"type\": \"service_account\"'''

|

||||

|

||||

[[rules]]

|

||||

id = "heroku-api-key"

|

||||

description = "Heroku API Key"

|

||||

regex = ''' (?i)(heroku[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([0-9A-F]{8}-[0-9A-F]{4}-[0-9A-F]{4}-[0-9A-F]{4}-[0-9A-F]{12})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "slack-web-hook"

|

||||

description = "Slack Webhook"

|

||||

regex = '''https://hooks.slack.com/services/T[a-zA-Z0-9_]{8}/B[a-zA-Z0-9_]{8,12}/[a-zA-Z0-9_]{24}'''

|

||||

|

||||

[[rules]]

|

||||

id = "twilio-api-key"

|

||||

description = "Twilio API Key"

|

||||

regex = '''SK[0-9a-fA-F]{32}'''

|

||||

|

||||

[[rules]]

|

||||

id = "age-secret-key"

|

||||

description = "Age secret key"

|

||||

regex = '''AGE-SECRET-KEY-1[QPZRY9X8GF2TVDW0S3JN54KHCE6MUA7L]{58}'''

|

||||

|

||||

[[rules]]

|

||||

id = "facebook-token"

|

||||

description = "Facebook token"

|

||||

regex = '''(?i)(facebook[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-f0-9]{32})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "twitter-token"

|

||||

description = "Twitter token"

|

||||

regex = '''(?i)(twitter[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-f0-9]{35,44})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "adobe-client-id"

|

||||

description = "Adobe Client ID (Oauth Web)"

|

||||

regex = '''(?i)(adobe[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-f0-9]{32})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "adobe-client-secret"

|

||||

description = "Adobe Client Secret"

|

||||

regex = '''(p8e-)(?i)[a-z0-9]{32}'''

|

||||

|

||||

[[rules]]

|

||||

id = "alibaba-access-key-id"

|

||||

description = "Alibaba AccessKey ID"

|

||||

regex = '''(LTAI)(?i)[a-z0-9]{20}'''

|

||||

|

||||

[[rules]]

|

||||

id = "alibaba-secret-key"

|

||||

description = "Alibaba Secret Key"

|

||||

regex = '''(?i)(alibaba[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{30})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "asana-client-id"

|

||||

description = "Asana Client ID"

|

||||

regex = '''(?i)(asana[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([0-9]{16})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "asana-client-secret"

|

||||

description = "Asana Client Secret"

|

||||

regex = '''(?i)(asana[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{32})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "atlassian-api-token"

|

||||

description = "Atlassian API token"

|

||||

regex = '''(?i)(atlassian[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{24})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "bitbucket-client-id"

|

||||

description = "Bitbucket client ID"

|

||||

regex = '''(?i)(bitbucket[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{32})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "bitbucket-client-secret"

|

||||

description = "Bitbucket client secret"

|

||||

regex = '''(?i)(bitbucket[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9_\-]{64})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "beamer-api-token"

|

||||

description = "Beamer API token"

|

||||

regex = '''(?i)(beamer[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"](b_[a-z0-9=_\-]{44})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "clojars-api-token"

|

||||

description = "Clojars API token"

|

||||

regex = '''(CLOJARS_)(?i)[a-z0-9]{60}'''

|

||||

|

||||

[[rules]]

|

||||

id = "contentful-delivery-api-token"

|

||||

description = "Contentful delivery API token"

|

||||

regex = '''(?i)(contentful[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9\-=_]{43})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "databricks-api-token"

|

||||

description = "Databricks API token"

|

||||

regex = '''dapi[a-h0-9]{32}'''

|

||||

|

||||

[[rules]]

|

||||

id = "discord-api-token"

|

||||

description = "Discord API key"

|

||||

regex = '''(?i)(discord[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-h0-9]{64})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "discord-client-id"

|

||||

description = "Discord client ID"

|

||||

regex = '''(?i)(discord[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([0-9]{18})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "discord-client-secret"

|

||||

description = "Discord client secret"

|

||||

regex = '''(?i)(discord[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9=_\-]{32})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "doppler-api-token"

|

||||

description = "Doppler API token"

|

||||

regex = '''['\"](dp\.pt\.)(?i)[a-z0-9]{43}['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "dropbox-api-secret"

|

||||

description = "Dropbox API secret/key"

|

||||

regex = '''(?i)(dropbox[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{15})['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "dropbox--api-key"

|

||||

description = "Dropbox API secret/key"

|

||||

regex = '''(?i)(dropbox[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{15})['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "dropbox-short-lived-api-token"

|

||||

description = "Dropbox short lived API token"

|

||||

regex = '''(?i)(dropbox[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"](sl\.[a-z0-9\-=_]{135})['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "dropbox-long-lived-api-token"

|

||||

description = "Dropbox long lived API token"

|

||||

regex = '''(?i)(dropbox[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"][a-z0-9]{11}(AAAAAAAAAA)[a-z0-9\-_=]{43}['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "duffel-api-token"

|

||||

description = "Duffel API token"

|

||||

regex = '''['\"]duffel_(test|live)_(?i)[a-z0-9_-]{43}['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "dynatrace-api-token"

|

||||

description = "Dynatrace API token"

|

||||

regex = '''['\"]dt0c01\.(?i)[a-z0-9]{24}\.[a-z0-9]{64}['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "easypost-api-token"

|

||||

description = "EasyPost API token"

|

||||

regex = '''['\"]EZAK(?i)[a-z0-9]{54}['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "easypost-test-api-token"

|

||||

description = "EasyPost test API token"

|

||||

regex = '''['\"]EZTK(?i)[a-z0-9]{54}['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "fastly-api-token"

|

||||

description = "Fastly API token"

|

||||

regex = '''(?i)(fastly[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9\-=_]{32})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "finicity-client-secret"

|

||||

description = "Finicity client secret"

|

||||

regex = '''(?i)(finicity[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{20})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "finicity-api-token"

|

||||

description = "Finicity API token"

|

||||

regex = '''(?i)(finicity[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-f0-9]{32})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "flutterwave-public-key"

|

||||

description = "Flutterwave public key"

|

||||

regex = '''FLWPUBK_TEST-(?i)[a-h0-9]{32}-X'''

|

||||

|

||||

[[rules]]

|

||||

id = "flutterwave-secret-key"

|

||||

description = "Flutterwave secret key"

|

||||

regex = '''FLWSECK_TEST-(?i)[a-h0-9]{32}-X'''

|

||||

|

||||

[[rules]]

|

||||

id = "flutterwave-enc-key"

|

||||

description = "Flutterwave encrypted key"

|

||||

regex = '''FLWSECK_TEST[a-h0-9]{12}'''

|

||||

|

||||

[[rules]]

|

||||

id = "frameio-api-token"

|

||||

description = "Frame.io API token"

|

||||

regex = '''fio-u-(?i)[a-z0-9\-_=]{64}'''

|

||||

|

||||

[[rules]]

|

||||

id = "gocardless-api-token"

|

||||

description = "GoCardless API token"

|

||||

regex = '''['\"]live_(?i)[a-z0-9\-_=]{40}['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "grafana-api-token"

|

||||

description = "Grafana API token"

|

||||

regex = '''['\"]eyJrIjoi(?i)[a-z0-9\-_=]{72,92}['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "hashicorp-tf-api-token"

|

||||

description = "HashiCorp Terraform user/org API token"

|

||||

regex = '''['\"](?i)[a-z0-9]{14}\.atlasv1\.[a-z0-9\-_=]{60,70}['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "hubspot-api-token"

|

||||

description = "HubSpot API token"

|

||||

regex = '''(?i)(hubspot[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-h0-9]{8}-[a-h0-9]{4}-[a-h0-9]{4}-[a-h0-9]{4}-[a-h0-9]{12})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "intercom-api-token"

|

||||

description = "Intercom API token"

|

||||

regex = '''(?i)(intercom[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9=_]{60})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "intercom-client-secret"

|

||||

description = "Intercom client secret/ID"

|

||||

regex = '''(?i)(intercom[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-h0-9]{8}-[a-h0-9]{4}-[a-h0-9]{4}-[a-h0-9]{4}-[a-h0-9]{12})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "ionic-api-token"

|

||||

description = "Ionic API token"

|

||||

regex = '''(?i)(ionic[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"](ion_[a-z0-9]{42})['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "linear-api-token"

|

||||

description = "Linear API token"

|

||||

regex = '''lin_api_(?i)[a-z0-9]{40}'''

|

||||

|

||||

[[rules]]

|

||||

id = "linear-client-secret"

|

||||

description = "Linear client secret/ID"

|

||||

regex = '''(?i)(linear[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-f0-9]{32})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "lob-api-key"

|

||||

description = "Lob API Key"

|

||||

regex = '''(?i)(lob[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]((live|test)_[a-f0-9]{35})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "lob-pub-api-key"

|

||||

description = "Lob Publishable API Key"

|

||||

regex = '''(?i)(lob[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]((test|live)_pub_[a-f0-9]{31})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "mailchimp-api-key"

|

||||

description = "Mailchimp API key"

|

||||

regex = '''(?i)(mailchimp[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-f0-9]{32}-us20)['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "mailgun-private-api-token"

|

||||

description = "Mailgun private API token"

|

||||

regex = '''(?i)(mailgun[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"](key-[a-f0-9]{32})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "mailgun-pub-key"

|

||||

description = "Mailgun public validation key"

|

||||

regex = '''(?i)(mailgun[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"](pubkey-[a-f0-9]{32})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "mailgun-signing-key"

|

||||

description = "Mailgun webhook signing key"

|

||||

regex = '''(?i)(mailgun[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-h0-9]{32}-[a-h0-9]{8}-[a-h0-9]{8})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "mapbox-api-token"

|

||||

description = "Mapbox API token"

|

||||

regex = '''(?i)(pk\.[a-z0-9]{60}\.[a-z0-9]{22})'''

|

||||

|

||||

[[rules]]

|

||||

id = "messagebird-api-token"

|

||||

description = "MessageBird API token"

|

||||

regex = '''(?i)(messagebird[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{25})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "messagebird-client-id"

|

||||

description = "MessageBird API client ID"

|

||||

regex = '''(?i)(messagebird[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-h0-9]{8}-[a-h0-9]{4}-[a-h0-9]{4}-[a-h0-9]{4}-[a-h0-9]{12})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "new-relic-user-api-key"

|

||||

description = "New Relic user API Key"

|

||||

regex = '''['\"](NRAK-[A-Z0-9]{27})['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "new-relic-user-api-id"

|

||||

description = "New Relic user API ID"

|

||||

regex = '''(?i)(newrelic[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([A-Z0-9]{64})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "new-relic-browser-api-token"

|

||||

description = "New Relic ingest browser API token"

|

||||

regex = '''['\"](NRJS-[a-f0-9]{19})['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "npm-access-token"

|

||||

description = "npm access token"

|

||||

regex = '''['\"](npm_(?i)[a-z0-9]{36})['\"]'''

|

||||

|

||||

[[rules]]

|

||||

id = "planetscale-password"

|

||||

description = "PlanetScale password"

|

||||

regex = '''pscale_pw_(?i)[a-z0-9\-_\.]{43}'''

|

||||

|

||||

[[rules]]

|

||||

id = "planetscale-api-token"

|

||||

description = "PlanetScale API token"

|

||||

regex = '''pscale_tkn_(?i)[a-z0-9\-_\.]{43}'''

|

||||

|

||||

[[rules]]

|

||||

id = "postman-api-token"

|

||||

description = "Postman API token"

|

||||

regex = '''PMAK-(?i)[a-f0-9]{24}\-[a-f0-9]{34}'''

|

||||

|

||||

[[rules]]

|

||||

id = "pulumi-api-token"

|

||||

description = "Pulumi API token"

|

||||

regex = '''pul-[a-f0-9]{40}'''

|

||||

|

||||

[[rules]]

|

||||

id = "rubygems-api-token"

|

||||

description = "Rubygem API token"

|

||||

regex = '''rubygems_[a-f0-9]{48}'''

|

||||

|

||||

[[rules]]

|

||||

id = "sendgrid-api-token"

|

||||

description = "SendGrid API token"

|

||||

regex = '''SG\.(?i)[a-z0-9_\-\.]{66}'''

|

||||

|

||||

[[rules]]

|

||||

id = "sendinblue-api-token"

|

||||

description = "Sendinblue API token"

|

||||

regex = '''xkeysib-[a-f0-9]{64}\-(?i)[a-z0-9]{16}'''

|

||||

|

||||

[[rules]]

|

||||

id = "shippo-api-token"

|

||||

description = "Shippo API token"

|

||||

regex = '''shippo_(live|test)_[a-f0-9]{40}'''

|

||||

|

||||

[[rules]]

|

||||

id = "linkedin-client-secret"

|

||||

description = "LinkedIn Client secret"

|

||||

regex = '''(?i)(linkedin[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z]{16})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "linkedin-client-id"

|

||||

description = "LinkedIn Client ID"

|

||||

regex = '''(?i)(linkedin[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{14})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "twitch-api-token"

|

||||

description = "Twitch API token"

|

||||

regex = '''(?i)(twitch[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9]{30})['\"]'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "typeform-api-token"

|

||||

description = "Typeform API token"

|

||||

regex = '''(?i)(typeform[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}(tfp_[a-z0-9\-_\.=]{59})'''

|

||||

secretGroup = 3

|

||||

|

||||

[[rules]]

|

||||

id = "generic-api-key"

|

||||

description = "Generic API Key"

|

||||

regex = '''(?i)((key|api[^Version]|token|secret|password)[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([0-9a-zA-Z\-_=]{8,64})['\"]'''

|

||||

entropy = 3.7

|

||||

secretGroup = 4

|

||||

|

||||

|

||||

[allowlist]

|

||||

description = "global allow lists"

|

||||

regexes = ['''219-09-9999''', '''078-05-1120''', '''(9[0-9]{2}|666)-\d{2}-\d{4}''', '''RPM-GPG-KEY.*''']

|

||||

paths = [

|

||||

'''gitleaks.toml''',

|

||||

'''(.*?)(jpg|gif|doc|pdf|bin|svg|socket)$''',

|

||||

'''(go.mod|go.sum)$''',

|

||||

|

||||

'''salt/nginx/files/enterprise-attack.json'''

|

||||

]
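Reviewer note: the comment block at the top of this removed config describes how a semi-generic rule captures the identifier, the assignment symbol, and finally the secret. A minimal Python sketch of that structure follows, using the `discord-client-secret` pattern and the sample value from the config comments. The helper names `find_secret` and `shannon_entropy` are hypothetical, Python's `re` module only approximates the regex engine gitleaks itself uses, and the entropy comparison is an assumption about how a threshold such as `entropy = 3.7` might be applied to the captured group.

```python
import math
import re
from collections import Counter

# Pattern copied from the "discord-client-secret" rule in the config above.
DISCORD_CLIENT_SECRET = re.compile(
    r"""(?i)(discord[a-z0-9_ .\-,]{0,25})(=|>|:=|\|\|:|<=|=>|:).{0,5}['\"]([a-z0-9=_\-]{32})['\"]"""
)


def find_secret(line, pattern=DISCORD_CLIENT_SECRET, secret_group=3):
    """Return the captured secret group, or None if the line does not match.

    secret_group mirrors the `secretGroup = 3` setting used by the rules:
    group 1 is the identifier, group 2 the assignment symbol, group 3 the secret.
    """
    match = pattern.search(line)
    return match.group(secret_group) if match else None


def shannon_entropy(text):
    """Shannon entropy in bits per character (hypothetical helper)."""
    if not text:
        return 0.0
    counts = Counter(text)
    total = len(text)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


# Sample line taken from the comments in the config.
sample = 'discord_client_secret = "8dyfuiRyq=vVc3RRr_edRk-fK__JItpZ"'
secret = find_secret(sample)
print(secret)
# Assumed use of the threshold from the generic-api-key rule.
print(shannon_entropy(secret) >= 3.7)
```

Keeping the secret in its own capture group is what lets a rule report only the sensitive value rather than the whole matched assignment.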

|

||||

24

.github/workflows/contrib.yml

vendored

@@ -1,24 +0,0 @@

|

||||

name: contrib

|

||||

on:

|

||||

issue_comment:

|

||||

types: [created]

|

||||

pull_request_target:

|

||||

types: [opened,closed,synchronize]

|

||||

|

||||

jobs:

|

||||

CLAssistant:

|

||||

runs-on: ubuntu-latest

|

||||

steps:

|

||||

- name: "Contributor Check"

|

||||

if: (github.event.comment.body == 'recheck' || github.event.comment.body == 'I have read the CLA Document and I hereby sign the CLA') || github.event_name == 'pull_request_target'

|

||||

uses: cla-assistant/github-action@v2.1.3-beta

|

||||

env:

|

||||

GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||

PERSONAL_ACCESS_TOKEN : ${{ secrets.PERSONAL_ACCESS_TOKEN }}

|

||||

with:

|

||||

path-to-signatures: 'signatures_v1.json'

|

||||

path-to-document: 'https://securityonionsolutions.com/cla'

|

||||

allowlist: dependabot[bot],jertel,dougburks,TOoSmOotH,weslambert,defensivedepth,m0duspwnens

|

||||

remote-organization-name: Security-Onion-Solutions

|

||||

remote-repository-name: licensing

|

||||

|

||||

4

.github/workflows/leaktest.yml

vendored

@@ -12,6 +12,4 @@ jobs:

|

||||

fetch-depth: '0'

|

||||

|

||||

- name: Gitleaks

|

||||

uses: gitleaks/gitleaks-action@v1.6.0

|

||||

with:

|

||||

config-path: .github/.gitleaks.toml

|

||||

uses: zricethezav/gitleaks-action@master

|

||||

|

||||

37

.github/workflows/pythontest.yml

vendored

@@ -1,37 +0,0 @@

|

||||

name: python-test

|

||||

|

||||

on:

|

||||

push:

|

||||

paths:

|

||||

- "salt/sensoroni/files/analyzers/**"

|

||||

pull_request:

|

||||

paths:

|

||||

- "salt/sensoroni/files/analyzers/**"

|

||||

|

||||

jobs:

|

||||

build:

|

||||

|

||||

runs-on: ubuntu-latest

|

||||

strategy:

|

||||

fail-fast: false

|

||||

matrix:

|

||||

python-version: ["3.10"]

|

||||

python-code-path: ["salt/sensoroni/files/analyzers"]

|

||||

|

||||

steps:

|

||||

- uses: actions/checkout@v3

|

||||

- name: Set up Python ${{ matrix.python-version }}

|

||||

uses: actions/setup-python@v3

|

||||

with:

|

||||

python-version: ${{ matrix.python-version }}

|

||||

- name: Install dependencies

|

||||

run: |

|

||||

python -m pip install --upgrade pip

|

||||

python -m pip install flake8 pytest pytest-cov

|

||||

find . -name requirements.txt -exec pip install -r {} \;

|

||||

- name: Lint with flake8

|

||||

run: |

|

||||

flake8 ${{ matrix.python-code-path }} --show-source --max-complexity=12 --doctests --max-line-length=200 --statistics

|

||||

- name: Test with pytest

|

||||

run: |

|

||||

pytest ${{ matrix.python-code-path }} --cov=${{ matrix.python-code-path }} --doctest-modules --cov-report=term --cov-fail-under=100 --cov-config=${{ matrix.python-code-path }}/pytest.ini

|

||||

13

.gitignore

vendored

@@ -56,15 +56,4 @@ $RECYCLE.BIN/

|

||||

# Windows shortcuts

|

||||

*.lnk

|

||||

|

||||

# End of https://www.gitignore.io/api/macos,windows

|

||||

|

||||

# Pytest output

|

||||

__pycache__

|

||||

.pytest_cache

|

||||

.coverage

|

||||

*.pyc

|

||||

.venv

|

||||

|

||||

# Analyzer dev/test config files

|

||||

*_dev.yaml

|

||||

site-packages

|

||||

# End of https://www.gitignore.io/api/macos,windows

|

||||

@@ -29,11 +29,6 @@

|

||||

|

||||

* See this document's [code styling and conventions section](#code-style-and-conventions) below to be sure your PR fits our code requirements prior to submitting.

|

||||

|

||||

* Change behavior (fix a bug, add a new feature) separately from refactoring code. Refactor pull requests are welcome, but ensure your new code behaves exactly the same as the old.

|

||||

|

||||

* **Do not refactor code for non-functional reasons**. If you are submitting a pull request that refactors code, ensure the refactor is improving the functionality of the code you're refactoring (e.g. decreasing complexity, removing reliance on 3rd party tools, improving performance).

|

||||

|

||||

* Before submitting a PR with significant changes to the project, [start a discussion](https://github.com/Security-Onion-Solutions/securityonion/discussions/new) explaining what you hope to achieve. The project maintainers will provide feedback and determine whether your goal aligns with the project.

|

||||

|

||||

|

||||

### Code style and conventions

|

||||

@@ -42,5 +37,3 @@

|

||||

* All new Bash code should pass [ShellCheck](https://www.shellcheck.net/) analysis. Where errors can be *safely* [ignored](https://github.com/koalaman/shellcheck/wiki/Ignore), the relevant disable directive should be accompanied by a brief explanation as to why the error is being ignored.

|

||||

|

||||

* **Ensure all YAML (this includes Salt states and pillars) is properly formatted**. The spec for YAML v1.2 can be found [here](https://yaml.org/spec/1.2/spec.html), however there are numerous online resources with simpler descriptions of its formatting rules.

|

||||

|

||||

* **All code of any language should match the style of other code of that same language within the project.** Be sure that any changes you make do not break from the pre-existing style of Security Onion code.

|

||||

|

||||

32

README.md

@@ -1,47 +1,35 @@

|

||||

## Security Onion 2.4

|

||||

## Security Onion 2.3.80

|

||||

|

||||

Security Onion 2.4 is here!

|

||||

Security Onion 2.3.80 is here!

|

||||

|

||||

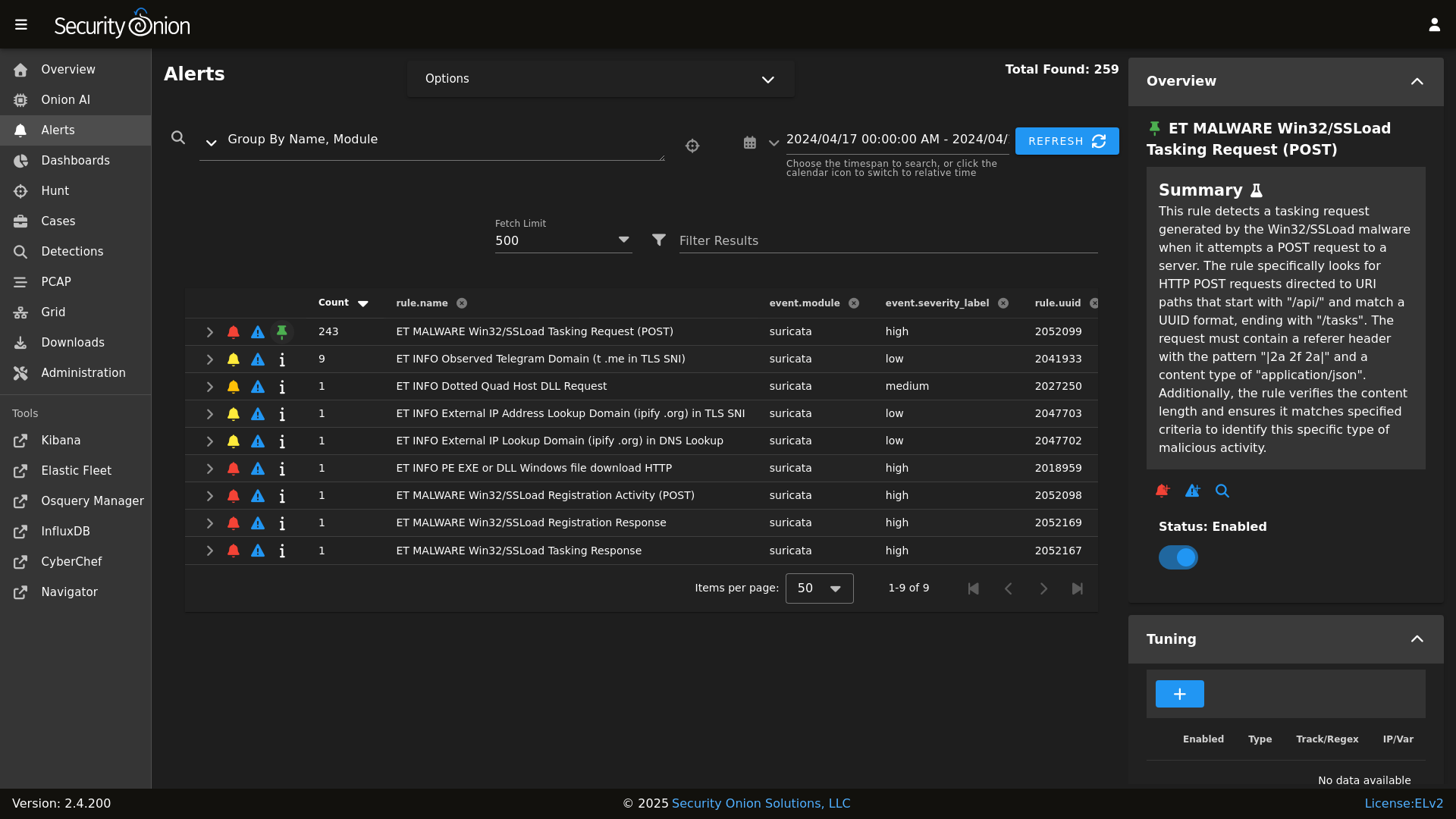

## Screenshots

|

||||

|

||||

Alerts

|

||||

|

||||

|

||||

Dashboards

|

||||

|

||||

|

||||

|

||||

Hunt

|

||||

|

||||

|

||||

PCAP

|

||||

|

||||

|

||||

Grid

|

||||

|

||||

|

||||

Config

|

||||

|

||||

|

||||

|

||||

### Release Notes

|

||||

|

||||

https://docs.securityonion.net/en/2.4/release-notes.html

|

||||

https://docs.securityonion.net/en/2.3/release-notes.html

|

||||

|

||||

### Requirements

|

||||

|

||||

https://docs.securityonion.net/en/2.4/hardware.html

|

||||

https://docs.securityonion.net/en/2.3/hardware.html

|

||||

|

||||

### Download

|

||||

|

||||

https://docs.securityonion.net/en/2.4/download.html

|

||||

https://docs.securityonion.net/en/2.3/download.html

|

||||

|

||||

### Installation

|

||||

|

||||

https://docs.securityonion.net/en/2.4/installation.html

|

||||

https://docs.securityonion.net/en/2.3/installation.html

|

||||

|

||||

### FAQ

|

||||

|

||||

https://docs.securityonion.net/en/2.4/faq.html

|

||||

https://docs.securityonion.net/en/2.3/faq.html

|

||||

|

||||

### Feedback

|

||||

|

||||

https://docs.securityonion.net/en/2.4/community-support.html

|

||||

https://docs.securityonion.net/en/2.3/community-support.html

|

||||

|

||||

@@ -4,8 +4,7 @@

|

||||

|

||||

| Version | Supported |

|

||||

| ------- | ------------------ |

|

||||

| 2.4.x | :white_check_mark: |

|

||||

| 2.3.x | :white_check_mark: |

|

||||

| 2.x.x | :white_check_mark: |

|

||||

| 16.04.x | :x: |

|

||||

|

||||

Security Onion 16.04 has reached End Of Life and is no longer supported.

|

||||

|

||||

@@ -1,47 +1,47 @@

|

||||

### 2.4.20-20231012 ISO image released on 2023/10/12

|

||||

### 2.3.80 ISO image built on 2021/09/27

|

||||

|

||||

|

||||

|

||||

### Download and Verify

|

||||

|

||||

2.4.20-20231012 ISO image:

|

||||

https://download.securityonion.net/file/securityonion/securityonion-2.4.20-20231012.iso

|

||||

|

||||

MD5: 7D6ACA843068BA9432B3FF63BFD1EF0F

|

||||

SHA1: BEF2B906066A1B04921DF0B80E7FDD4BC8ECED5C

|

||||

SHA256: 5D511D50F11666C69AE12435A47B9A2D30CB3CC88F8D38DC58A5BC0ECADF1BF5

|

||||

2.3.80 ISO image:

|

||||

https://download.securityonion.net/file/securityonion/securityonion-2.3.80.iso

|

||||

|

||||

MD5: 24F38563860416F4A8ABE18746913E14

|

||||

SHA1: F923C005F54EA2A17AB225ADA0DA46042707AAD9

|

||||

SHA256: 8E95D10AF664D9A406C168EC421D943CB23F0D0C1813C6C2DBA9B4E131984018

|

||||

|

||||

Signature for ISO image:

|

||||

https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.20-20231012.iso.sig

|

||||

https://github.com/Security-Onion-Solutions/securityonion/raw/master/sigs/securityonion-2.3.80.iso.sig

|

||||

|

||||

Signing key:

|

||||

https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.4/main/KEYS

|

||||

https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/master/KEYS

|

||||

|

||||

For example, here are the steps you can use on most Linux distributions to download and verify our Security Onion ISO image.

|

Download and import the signing key:
```
wget https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.4/main/KEYS -O - | gpg --import -
wget https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/master/KEYS -O - | gpg --import -
```

Download the signature file for the ISO:
```
wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.20-20231012.iso.sig
wget https://github.com/Security-Onion-Solutions/securityonion/raw/master/sigs/securityonion-2.3.80.iso.sig
```

Download the ISO image:
```
wget https://download.securityonion.net/file/securityonion/securityonion-2.4.20-20231012.iso
wget https://download.securityonion.net/file/securityonion/securityonion-2.3.80.iso
```

Verify the downloaded ISO image using the signature file:
```
gpg --verify securityonion-2.4.20-20231012.iso.sig securityonion-2.4.20-20231012.iso
gpg --verify securityonion-2.3.80.iso.sig securityonion-2.3.80.iso
```

The output should show "Good signature" and the Primary key fingerprint should match what's shown below:
```
gpg: Signature made Thu 12 Oct 2023 01:28:32 PM EDT using RSA key ID FE507013
gpg: Signature made Mon 27 Sep 2021 08:55:01 AM EDT using RSA key ID FE507013
gpg: Good signature from "Security Onion Solutions, LLC <info@securityonionsolutions.com>"
gpg: WARNING: This key is not certified with a trusted signature!
gpg: There is no indication that the signature belongs to the owner.
@@ -49,4 +49,4 @@ Primary key fingerprint: C804 A93D 36BE 0C73 3EA1 9644 7C10 60B7 FE50 7013
```

Once you've verified the ISO image, you're ready to proceed to our Installation guide:
https://docs.securityonion.net/en/2.4/installation.html
https://docs.securityonion.net/en/2.3/installation.html

|

||||
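The fingerprint comparison described in the verification steps above can also be scripted. The following is a minimal Python sketch and is not part of the Security Onion repository: the names `normalize` and `check_verify_output` are hypothetical, and it assumes you have already captured the text that `gpg --verify` prints (gpg writes these status lines to standard error). The expected fingerprint is the one quoted in the README hunk.

```python
import re

# Fingerprint quoted in the README verification instructions.
EXPECTED_FINGERPRINT = "C804 A93D 36BE 0C73 3EA1 9644 7C10 60B7 FE50 7013"


def normalize(fingerprint: str) -> str:
    """Drop all whitespace and upper-case, so spacing differences do not matter."""
    return re.sub(r"\s+", "", fingerprint).upper()


def check_verify_output(gpg_output: str, expected: str = EXPECTED_FINGERPRINT) -> bool:
    """Return True only if the output reports a good signature AND the
    primary key fingerprint equals the expected one."""
    good = any(
        line.startswith("gpg: Good signature") for line in gpg_output.splitlines()
    )
    match = re.search(r"Primary key fingerprint:\s*([0-9A-Fa-f ]+)", gpg_output)
    if not good or match is None:
        return False
    return normalize(match.group(1)) == normalize(expected)
```

Checking both conditions matters: a "Good signature" line only says the file matches some imported key, while the fingerprint ties that key to the one published in the README.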

BIN

assets/images/screenshots/alerts-1.png

Normal file

|

After Width: | Height: | Size: 245 KiB |

|

Before Width: | Height: | Size: 186 KiB |

|

Before Width: | Height: | Size: 201 KiB |

|

Before Width: | Height: | Size: 386 KiB |

BIN

assets/images/screenshots/hunt-1.png

Normal file

|

After Width: | Height: | Size: 168 KiB |

|

Before Width: | Height: | Size: 191 KiB |

@@ -1,8 +1,8 @@

|

||||

{% import_yaml 'firewall/ports/ports.yaml' as default_portgroups %}

|

||||

{% set default_portgroups = default_portgroups.firewall.ports %}

|

||||

{% import_yaml 'firewall/ports/ports.local.yaml' as local_portgroups %}

|

||||

{% if local_portgroups.firewall.ports %}

|

||||

{% set local_portgroups = local_portgroups.firewall.ports %}

|

||||

{% import_yaml 'firewall/portgroups.yaml' as default_portgroups %}

|

||||

{% set default_portgroups = default_portgroups.firewall.aliases.ports %}

|

||||

{% import_yaml 'firewall/portgroups.local.yaml' as local_portgroups %}

|

||||

{% if local_portgroups.firewall.aliases.ports %}

|

||||

{% set local_portgroups = local_portgroups.firewall.aliases.ports %}

|

||||

{% else %}

|

||||

{% set local_portgroups = {} %}

|

||||

{% endif %}

|

||||

@@ -13,11 +13,9 @@ role:

|

||||

fleet:

|

||||

heavynode:

|

||||

helixsensor:

|

||||

idh:

|

||||

import:

|

||||

manager:

|

||||

managersearch:

|

||||

receiver:

|

||||

standalone:

|

||||

searchnode:

|

||||

sensor:

|

||||

sensor:

|

||||

74

files/firewall/hostgroups.local.yaml

Normal file

@@ -0,0 +1,74 @@

firewall:
  hostgroups:
    analyst:
      ips:
        delete:
        insert:
    beats_endpoint:
      ips:
        delete:
        insert:
    beats_endpoint_ssl:
      ips:
        delete:
        insert:
    elasticsearch_rest:
      ips:
        delete:
        insert:
    endgame:
      ips:
        delete:
        insert:
    fleet:
      ips:
        delete:
        insert:
    heavy_node:
      ips:
        delete:
        insert:
    manager:
      ips:
        delete:
        insert:
    minion:
      ips:
        delete:
        insert:
    node:
      ips:
        delete:
        insert:
    osquery_endpoint:
      ips:
        delete:
        insert:
    search_node:
      ips:
        delete:
        insert:
    sensor:
      ips:
        delete:
        insert:
    strelka_frontend:
      ips:
        delete:
        insert:
    syslog:
      ips:
        delete:
        insert:
    wazuh_agent:
      ips:
        delete:
        insert:
    wazuh_api:
      ips:
        delete:
        insert:
    wazuh_authd:
      ips:
        delete:
        insert:

3

files/firewall/portgroups.local.yaml

Normal file

@@ -0,0 +1,3 @@

firewall:
  aliases:
    ports:

@@ -1,2 +0,0 @@

firewall:
  ports:

@@ -64,4 +64,10 @@ peer:

|

||||

.*:

|

||||

- x509.sign_remote_certificate

|

||||

|

||||

reactor:

|

||||

- 'so/fleet':

|

||||

- salt://reactor/fleet.sls

|

||||

- 'salt/beacon/*/watch_sqlite_db//opt/so/conf/kratos/db/sqlite.db':

|

||||

- salt://reactor/kratos.sls

|

||||

|

||||

|

||||

|

||||

@@ -45,10 +45,12 @@ echo " rootfs: $ROOTFS" >> $local_salt_dir/pillar/data/$TYPE.sls

|

||||

echo " nsmfs: $NSM" >> $local_salt_dir/pillar/data/$TYPE.sls

|

||||

if [ $TYPE == 'sensorstab' ]; then

|

||||

echo " monint: bond0" >> $local_salt_dir/pillar/data/$TYPE.sls

|

||||

salt-call state.apply grafana queue=True

|

||||

fi

|

||||

if [ $TYPE == 'evaltab' ] || [ $TYPE == 'standalonetab' ]; then

|

||||

echo " monint: bond0" >> $local_salt_dir/pillar/data/$TYPE.sls

|

||||

if [ ! $10 ]; then

|

||||

salt-call state.apply grafana queue=True

|

||||

salt-call state.apply utility queue=True

|

||||

fi

|

||||

fi

|

||||

|

||||

@@ -1,2 +1,13 @@

|

||||

elasticsearch:

|

||||

templates:

|

||||

- so/so-beats-template.json.jinja

|

||||

- so/so-common-template.json.jinja

|

||||

- so/so-firewall-template.json.jinja

|

||||

- so/so-flow-template.json.jinja

|

||||

- so/so-ids-template.json.jinja

|

||||

- so/so-import-template.json.jinja

|

||||

- so/so-osquery-template.json.jinja

|

||||

- so/so-ossec-template.json.jinja

|

||||

- so/so-strelka-template.json.jinja

|

||||

- so/so-syslog-template.json.jinja

|

||||

- so/so-zeek-template.json.jinja

|

||||

|

||||

@@ -1,2 +0,0 @@

|

||||

elasticsearch:

|

||||

index_settings:

|

||||

@@ -1,2 +1,14 @@

|

||||

elasticsearch:

|

||||

templates:

|

||||

- so/so-beats-template.json.jinja

|

||||

- so/so-common-template.json.jinja

|

||||

- so/so-endgame-template.json.jinja

|

||||

- so/so-firewall-template.json.jinja

|

||||

- so/so-flow-template.json.jinja

|

||||

- so/so-ids-template.json.jinja

|

||||

- so/so-import-template.json.jinja

|

||||

- so/so-osquery-template.json.jinja

|

||||

- so/so-ossec-template.json.jinja

|

||||

- so/so-strelka-template.json.jinja

|

||||

- so/so-syslog-template.json.jinja

|

||||

- so/so-zeek-template.json.jinja

|

||||

|

||||

@@ -1,2 +1,14 @@

|

||||

elasticsearch:

|

||||

templates:

|

||||

- so/so-beats-template.json.jinja

|

||||

- so/so-common-template.json.jinja

|

||||

- so/so-endgame-template.json.jinja

|

||||

- so/so-firewall-template.json.jinja

|

||||

- so/so-flow-template.json.jinja

|

||||

- so/so-ids-template.json.jinja

|

||||

- so/so-import-template.json.jinja

|

||||

- so/so-osquery-template.json.jinja

|

||||

- so/so-ossec-template.json.jinja

|

||||

- so/so-strelka-template.json.jinja

|

||||

- so/so-syslog-template.json.jinja

|

||||

- so/so-zeek-template.json.jinja

|

||||

|

||||

13

pillar/logrotate/init.sls

Normal file

@@ -0,0 +1,13 @@

logrotate:
  conf: |
    daily
    rotate 14
    missingok
    copytruncate
    compress
    create
    extension .log
    dateext
    dateyesterday
  group_conf: |
    su root socore

42

pillar/logstash/helix.sls

Normal file

@@ -0,0 +1,42 @@

logstash:
  pipelines:
    helix:
      config:
        - so/0010_input_hhbeats.conf
        - so/1033_preprocess_snort.conf
        - so/1100_preprocess_bro_conn.conf
        - so/1101_preprocess_bro_dhcp.conf
        - so/1102_preprocess_bro_dns.conf
        - so/1103_preprocess_bro_dpd.conf
        - so/1104_preprocess_bro_files.conf
        - so/1105_preprocess_bro_ftp.conf
        - so/1106_preprocess_bro_http.conf
        - so/1107_preprocess_bro_irc.conf
        - so/1108_preprocess_bro_kerberos.conf
        - so/1109_preprocess_bro_notice.conf
        - so/1110_preprocess_bro_rdp.conf
        - so/1111_preprocess_bro_signatures.conf
        - so/1112_preprocess_bro_smtp.conf
        - so/1113_preprocess_bro_snmp.conf
        - so/1114_preprocess_bro_software.conf
        - so/1115_preprocess_bro_ssh.conf
        - so/1116_preprocess_bro_ssl.conf
        - so/1117_preprocess_bro_syslog.conf
        - so/1118_preprocess_bro_tunnel.conf
        - so/1119_preprocess_bro_weird.conf
        - so/1121_preprocess_bro_mysql.conf
        - so/1122_preprocess_bro_socks.conf
        - so/1123_preprocess_bro_x509.conf
        - so/1124_preprocess_bro_intel.conf
        - so/1125_preprocess_bro_modbus.conf
        - so/1126_preprocess_bro_sip.conf
        - so/1127_preprocess_bro_radius.conf
        - so/1128_preprocess_bro_pe.conf
        - so/1129_preprocess_bro_rfb.conf
        - so/1130_preprocess_bro_dnp3.conf
        - so/1131_preprocess_bro_smb_files.conf
        - so/1132_preprocess_bro_smb_mapping.conf
        - so/1133_preprocess_bro_ntlm.conf
        - so/1134_preprocess_bro_dce_rpc.conf
        - so/8001_postprocess_common_ip_augmentation.conf
        - so/9997_output_helix.conf.jinja

@@ -3,8 +3,6 @@ logstash:

|

||||

port_bindings:

|

||||

- 0.0.0.0:3765:3765

|

||||

- 0.0.0.0:5044:5044

|

||||

- 0.0.0.0:5055:5055

|

||||

- 0.0.0.0:5056:5056

|

||||

- 0.0.0.0:5644:5644

|

||||

- 0.0.0.0:6050:6050

|

||||

- 0.0.0.0:6051:6051

|

||||

|

||||

10

pillar/logstash/manager.sls

Normal file

@@ -0,0 +1,10 @@

{%- set PIPELINE = salt['pillar.get']('global:pipeline', 'redis') %}
logstash:
  pipelines:
    manager:
      config:
        - so/0009_input_beats.conf
        - so/0010_input_hhbeats.conf
        - so/0011_input_endgame.conf
        - so/9999_output_redis.conf.jinja

|

||||

|

||||
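The first line of `pillar/logstash/manager.sls` reads the nested pillar key `global:pipeline` and falls back to `'redis'` when that key is not set. As a rough illustration of that colon-delimited lookup with a default, here is a simplified Python stand-in. It is not Salt's implementation (the real `pillar.get` has further options such as merging), and the helper name `pillar_get` and the sample dictionaries are hypothetical.

```python
from typing import Any


def pillar_get(pillar: dict, key: str, default: Any = None, delimiter: str = ":") -> Any:
    """Walk a nested dict along a delimited key path; return default if any step is missing."""
    current: Any = pillar
    for part in key.split(delimiter):
        if not isinstance(current, dict) or part not in current:
            return default
        current = current[part]
    return current


# Hypothetical pillar data: one with the key set, one without.
configured = {"global": {"pipeline": "kafka"}}
unconfigured = {"global": {}}

print(pillar_get(configured, "global:pipeline", "redis"))
print(pillar_get(unconfigured, "global:pipeline", "redis"))
```

With the key present the stored value is returned; with it absent the caller's fallback is used, which is how the template ends up with a usable `PIPELINE` value on a minimally configured grid.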

@@ -1,31 +0,0 @@

{% set node_types = {} %}
{% set cached_grains = salt.saltutil.runner('cache.grains', tgt='*') %}
{% for minionid, ip in salt.saltutil.runner(
    'mine.get',
    tgt='G@role:so-manager or G@role:so-managersearch or G@role:so-standalone or G@role:so-searchnode or G@role:so-heavynode or G@role:so-receiver or G@role:so-fleet ',
    fun='network.ip_addrs',
    tgt_type='compound') | dictsort()
%}

{% set hostname = cached_grains[minionid]['host'] %}
{% set node_type = minionid.split('_')[1] %}
{% if node_type not in node_types.keys() %}
{% do node_types.update({node_type: {hostname: ip[0]}}) %}
{% else %}
{% if hostname not in node_types[node_type] %}
{% do node_types[node_type].update({hostname: ip[0]}) %}
{% else %}
{% do node_types[node_type][hostname].update(ip[0]) %}
{% endif %}
{% endif %}
{% endfor %}

logstash:
  nodes:
{% for node_type, values in node_types.items() %}
    {{node_type}}:
{% for hostname, ip in values.items() %}
      {{hostname}}:
        ip: {{ip}}
{% endfor %}
{% endfor %}

17

pillar/logstash/search.sls

Normal file

@@ -0,0 +1,17 @@

{%- set PIPELINE = salt['pillar.get']('global:pipeline', 'minio') %}
logstash:
  pipelines:
    search:
      config:
        - so/0900_input_redis.conf.jinja
        - so/9000_output_zeek.conf.jinja
        - so/9002_output_import.conf.jinja
        - so/9034_output_syslog.conf.jinja
        - so/9050_output_filebeatmodules.conf.jinja
        - so/9100_output_osquery.conf.jinja
        - so/9400_output_suricata.conf.jinja
        - so/9500_output_beats.conf.jinja
        - so/9600_output_ossec.conf.jinja
        - so/9700_output_strelka.conf.jinja
        - so/9800_output_logscan.conf.jinja
        - so/9900_output_endgame.conf.jinja

@@ -1,31 +0,0 @@

{% set node_types = {} %}
{% set manage_alived = salt.saltutil.runner('manage.alived', show_ip=True) %}
{% for minionid, ip in salt.saltutil.runner('mine.get', tgt='*', fun='network.ip_addrs', tgt_type='glob') | dictsort() %}
{% set hostname = minionid.split('_')[0] %}
{% set node_type = minionid.split('_')[1] %}
{% set is_alive = False %}
{% if minionid in manage_alived.keys() %}
{% if ip[0] == manage_alived[minionid] %}
{% set is_alive = True %}
{% endif %}
{% endif %}
{% if node_type not in node_types.keys() %}
{% do node_types.update({node_type: {hostname: {'ip':ip[0], 'alive':is_alive }}}) %}
{% else %}
{% if hostname not in node_types[node_type] %}
{% do node_types[node_type].update({hostname: {'ip':ip[0], 'alive':is_alive}}) %}
{% else %}
{% do node_types[node_type][hostname].update({'ip':ip[0], 'alive':is_alive}) %}
{% endif %}
{% endif %}
{% endfor %}

node_data:
{% for node_type, host_values in node_types.items() %}
{% for hostname, details in host_values.items() %}
  {{hostname}}:
    ip: {{details.ip}}
    alive: {{ details.alive }}
    role: {{node_type}}
{% endfor %}
{% endfor %}

|

||||
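The removed template above groups minions by the role suffix of their ID, marks a minion alive only when its first mined IP equals the IP reported by `manage.alived`, and then flattens the result into `node_data`. A minimal Python sketch of the same logic may make the data flow easier to follow. The function name `build_node_data`, the plain-dict inputs standing in for the Salt runner results, and the sample minion IDs and addresses are all hypothetical.

```python
from typing import Dict, List


def build_node_data(
    mine_ips: Dict[str, List[str]], alived: Dict[str, str]
) -> Dict[str, dict]:
    """Group minions by role suffix, flag liveness, then flatten per hostname."""
    node_types: Dict[str, Dict[str, dict]] = {}
    # The template iterates in sorted order (dictsort).
    for minion_id, ips in sorted(mine_ips.items()):
        parts = minion_id.split("_")
        hostname, node_type = parts[0], parts[1]
        # Alive only if the minion is listed AND its first IP matches.
        is_alive = alived.get(minion_id) == ips[0]
        node_types.setdefault(node_type, {})[hostname] = {
            "ip": ips[0],
            "alive": is_alive,
        }

    node_data: Dict[str, dict] = {}
    for node_type, hosts in node_types.items():
        for hostname, details in hosts.items():
            node_data[hostname] = {
                "ip": details["ip"],
                "alive": details["alive"],
                "role": node_type,
            }
    return node_data


# Hypothetical inputs: minion ID -> mined IPs, minion ID -> IP seen by the master.
mine = {"so-mgr_manager": ["10.0.0.1"], "so-sen_sensor": ["10.0.0.2", "10.0.0.3"]}
alive = {"so-mgr_manager": "10.0.0.1", "so-sen_sensor": "10.9.9.9"}
print(build_node_data(mine, alive))
```

Like the template, the sketch assumes every minion ID has the form `hostname_role` and at least one mined address; an ID without an underscore or an empty address list would raise an error in both.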

@@ -1,14 +0,0 @@

# Copyright Jason Ertel (github.com/jertel).
# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with
# the Elastic License 2.0.

# Note: Per the Elastic License 2.0, the second limitation states:
#
# "You may not move, change, disable, or circumvent the license key functionality
# in the software, and you may not remove or obscure any functionality in the
# software that is protected by the license key."

# This file is generated by Security Onion and contains a list of license-enabled features.
features: []

346

pillar/top.sls

@@ -1,307 +1,119 @@

|

||||

base:

|

||||

'*':

|

||||

- global.soc_global

|

||||

- global.adv_global

|

||||

- docker.soc_docker

|

||||

- docker.adv_docker

|

||||

- influxdb.token

|

||||

- logrotate.soc_logrotate

|

||||

- logrotate.adv_logrotate

|

||||

- ntp.soc_ntp

|

||||

- ntp.adv_ntp

|

||||

- patch.needs_restarting

|

||||

- patch.soc_patch

|

||||

- patch.adv_patch

|

||||

- sensoroni.soc_sensoroni

|

||||

- sensoroni.adv_sensoroni

|

||||

- telegraf.soc_telegraf

|

||||

- telegraf.adv_telegraf

|

||||

- logrotate

|

||||

- users

|

||||

|

||||

'* and not *_desktop':

|

||||

- firewall.soc_firewall

|

||||

- firewall.adv_firewall

|

||||

- nginx.soc_nginx

|

||||

- nginx.adv_nginx

|

||||

- node_data.ips

|

||||

'*_eval or *_helixsensor or *_heavynode or *_sensor or *_standalone or *_import':

|

||||

- match: compound

|

||||

- zeek

|

||||

|

||||

'*_managersearch or *_heavynode':

|

||||

- match: compound

|

||||

- logstash

|

||||

- logstash.manager

|

||||

- logstash.search

|

||||

- elasticsearch.search

|

||||

|

||||

'*_manager':

|

||||

- logstash

|

||||

- logstash.manager

|

||||

- elasticsearch.manager

|

||||

|

||||

'*_manager or *_managersearch':

|

||||

- match: compound

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/elasticsearch/auth.sls') %}

|

||||

- data.*

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/elasticsearch/auth.sls') %}

|

||||

- elasticsearch.auth

|

||||

{% endif %}

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/kibana/secrets.sls') %}

|

||||

{% endif %}

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/kibana/secrets.sls') %}

|

||||

- kibana.secrets

|

||||

{% endif %}

|

||||

{% endif %}

|

||||

- secrets

|

||||

- manager.soc_manager

|

||||

- manager.adv_manager

|

||||

- idstools.soc_idstools

|

||||

- idstools.adv_idstools

|

||||

- logstash.nodes

|

||||

- logstash.soc_logstash

|

||||

- logstash.adv_logstash

|

||||

- soc.soc_soc

|

||||

- soc.adv_soc

|

||||

- soc.license

|

||||

- soctopus.soc_soctopus

|

||||

- soctopus.adv_soctopus

|

||||

- kibana.soc_kibana

|

||||

- kibana.adv_kibana

|

||||

- kratos.soc_kratos

|

||||

- kratos.adv_kratos

|

||||

- redis.soc_redis

|

||||

- redis.adv_redis

|

||||

- influxdb.soc_influxdb

|

||||

- influxdb.adv_influxdb

|

||||

- elasticsearch.soc_elasticsearch

|

||||

- elasticsearch.adv_elasticsearch

|

||||

- elasticfleet.soc_elasticfleet

|

||||

- elasticfleet.adv_elasticfleet

|

||||

- elastalert.soc_elastalert

|

||||

- elastalert.adv_elastalert

|

||||

- backup.soc_backup

|

||||

- backup.adv_backup

|

||||

- curator.soc_curator

|

||||

- curator.adv_curator

|

||||

- soctopus.soc_soctopus

|

||||

- soctopus.adv_soctopus

|

||||

- global

|

||||

- minions.{{ grains.id }}

|

||||

- minions.adv_{{ grains.id }}

|

||||

|

||||

'*_sensor':

|

||||

- zeeklogs

|

||||

- healthcheck.sensor

|

||||

- strelka.soc_strelka

|

||||

- strelka.adv_strelka

|

||||

- zeek.soc_zeek

|

||||

- zeek.adv_zeek

|

||||

- bpf.soc_bpf

|

||||

- bpf.adv_bpf

|

||||

- pcap.soc_pcap

|

||||

- pcap.adv_pcap

|

||||

- suricata.soc_suricata

|

||||

- suricata.adv_suricata

|

||||

- global

|

||||

- minions.{{ grains.id }}

|

||||

- minions.adv_{{ grains.id }}

|

||||

|

||||

'*_eval':

|

||||

- data.*

|

||||

- zeeklogs

|

||||

- secrets

|

||||

- healthcheck.eval

|

||||

- elasticsearch.index_templates

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/elasticsearch/auth.sls') %}

|

||||

- elasticsearch.eval

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/elasticsearch/auth.sls') %}

|

||||

- elasticsearch.auth

|

||||

{% endif %}

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/kibana/secrets.sls') %}

|

||||

{% endif %}

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/kibana/secrets.sls') %}

|

||||

- kibana.secrets

|

||||

{% endif %}

|

||||

- kratos.soc_kratos

|

||||

- elasticsearch.soc_elasticsearch

|

||||

- elasticsearch.adv_elasticsearch

|

||||

- elasticfleet.soc_elasticfleet

|

||||

- elasticfleet.adv_elasticfleet

|

||||

- elastalert.soc_elastalert

|

||||

- elastalert.adv_elastalert

|

||||

- manager.soc_manager

|

||||

- manager.adv_manager

|

||||

- idstools.soc_idstools

|

||||

- idstools.adv_idstools

|

||||

- soc.soc_soc

|

||||

- soc.adv_soc

|

||||

- soc.license

|

||||

- soctopus.soc_soctopus

|

||||

- soctopus.adv_soctopus

|

||||

- kibana.soc_kibana

|

||||

- kibana.adv_kibana

|

||||

- strelka.soc_strelka

|

||||

- strelka.adv_strelka

|

||||

- curator.soc_curator

|

||||

- curator.adv_curator

|

||||

- kratos.soc_kratos

|

||||

- kratos.adv_kratos

|

||||

- redis.soc_redis

|

||||

- redis.adv_redis

|

||||

- influxdb.soc_influxdb

|

||||

- influxdb.adv_influxdb

|

||||

- backup.soc_backup

|

||||

- backup.adv_backup

|

||||

- zeek.soc_zeek

|

||||

- zeek.adv_zeek

|

||||

- bpf.soc_bpf

|

||||

- bpf.adv_bpf

|

||||

- pcap.soc_pcap

|

||||

- pcap.adv_pcap

|

||||

- suricata.soc_suricata

|

||||

- suricata.adv_suricata

|

||||

{% endif %}

|

||||

- global

|

||||

- minions.{{ grains.id }}

|

||||

- minions.adv_{{ grains.id }}

|

||||

|

||||

'*_standalone':

|

||||

- logstash.nodes

|

||||

- logstash.soc_logstash

|

||||

- logstash.adv_logstash

|

||||

- elasticsearch.index_templates

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/elasticsearch/auth.sls') %}

|

||||

- logstash

|

||||

- logstash.manager

|

||||

- logstash.search

|

||||

- elasticsearch.search

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/elasticsearch/auth.sls') %}

|

||||

- elasticsearch.auth

|

||||

{% endif %}

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/kibana/secrets.sls') %}

|

||||

{% endif %}

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/kibana/secrets.sls') %}

|

||||

- kibana.secrets

|

||||

{% endif %}

|

||||

{% endif %}

|

||||

- data.*

|

||||

- zeeklogs

|

||||

- secrets

|

||||

- healthcheck.standalone

|

||||

- idstools.soc_idstools

|

||||

- idstools.adv_idstools

|

||||

- kratos.soc_kratos

|

||||

- kratos.adv_kratos

|

||||

- redis.soc_redis

|

||||

- redis.adv_redis

|

||||

- influxdb.soc_influxdb

|

||||

- influxdb.adv_influxdb

|

||||

- elasticsearch.soc_elasticsearch

|

||||

- elasticsearch.adv_elasticsearch

|

||||

- elasticfleet.soc_elasticfleet

|

||||

- elasticfleet.adv_elasticfleet

|

||||

- elastalert.soc_elastalert

|

||||

- elastalert.adv_elastalert

|

||||

- manager.soc_manager

|

||||

- manager.adv_manager

|

||||

- soc.soc_soc

|

||||

- soc.adv_soc

|

||||

- soc.license

|

||||

- soctopus.soc_soctopus

|

||||

- soctopus.adv_soctopus

|

||||

- kibana.soc_kibana

|

||||

- kibana.adv_kibana

|

||||

- strelka.soc_strelka

|

||||

- strelka.adv_strelka

|

||||

- curator.soc_curator

|

||||

- curator.adv_curator

|

||||

- backup.soc_backup

|

||||

- backup.adv_backup

|

||||

- zeek.soc_zeek

|

||||

- zeek.adv_zeek

|

||||

- bpf.soc_bpf

|

||||

- bpf.adv_bpf

|

||||

- pcap.soc_pcap

|

||||

- pcap.adv_pcap

|

||||

- suricata.soc_suricata

|

||||

- suricata.adv_suricata

|

||||

- global

|

||||

- minions.{{ grains.id }}

|

||||

|

||||

'*_node':

|

||||

- global

|

||||

- minions.{{ grains.id }}

|

||||

- minions.adv_{{ grains.id }}

|

||||

|

||||

'*_heavynode':

|

||||

- zeeklogs

|

||||

- elasticsearch.auth

|

||||

- logstash.nodes

|

||||

- logstash.soc_logstash

|

||||

- logstash.adv_logstash

|

||||

- elasticsearch.soc_elasticsearch

|

||||

- elasticsearch.adv_elasticsearch

|

||||

- curator.soc_curator

|

||||

- curator.adv_curator

|

||||

- redis.soc_redis

|

||||

- redis.adv_redis

|

||||

- zeek.soc_zeek

|

||||

- zeek.adv_zeek

|

||||

- bpf.soc_bpf

|

||||

- bpf.adv_bpf

|

||||

- pcap.soc_pcap

|

||||

- pcap.adv_pcap

|

||||

- suricata.soc_suricata

|

||||

- suricata.adv_suricata

|

||||

- strelka.soc_strelka

|

||||

- strelka.adv_strelka

|

||||

- global

|

||||

- minions.{{ grains.id }}

|

||||

- minions.adv_{{ grains.id }}

|

||||

|

||||

'*_idh':

|

||||

- idh.soc_idh

|

||||

- idh.adv_idh

|

||||

'*_helixsensor':

|

||||

- fireeye

|

||||

- zeeklogs

|

||||

- logstash

|

||||

- logstash.helix

|

||||

- global

|

||||

- minions.{{ grains.id }}

|

||||

- minions.adv_{{ grains.id }}

|

||||

|

||||

'*_searchnode':

|

||||

- logstash.nodes

|

||||

- logstash.soc_logstash

|

||||

- logstash.adv_logstash

|

||||

- elasticsearch.soc_elasticsearch

|

||||

- elasticsearch.adv_elasticsearch

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/elasticsearch/auth.sls') %}

|

||||

- elasticsearch.auth

|

||||

{% endif %}

|

||||

- redis.soc_redis

|

||||

- redis.adv_redis

|

||||

- minions.{{ grains.id }}

|

||||

- minions.adv_{{ grains.id }}

|

||||

|

||||

'*_receiver':

|

||||

- logstash.nodes

|

||||

- logstash.soc_logstash

|

||||

- logstash.adv_logstash

|

||||

{% if salt['file.file_exists']('/opt/so/saltstack/local/pillar/elasticsearch/auth.sls') %}

|

||||

- elasticsearch.auth

|

||||

{% endif %}

|

||||

- redis.soc_redis

|

||||

- redis.adv_redis

|

||||

- minions.{{ grains.id }}

|

||||

- minions.adv_{{ grains.id }}

|

||||

|

||||

'*_import':

|

||||

- secrets

|

||||

- elasticsearch.index_templates

|

||||