Mirror of https://github.com/Security-Onion-Solutions/securityonion.git (synced 2025-12-06 17:22:49 +01:00)

Merge branch '2.4/dev' into desktop

README.md (12 lines changed)

@@ -5,22 +5,22 @@ Security Onion 2.4 Release Candidate 1 (RC1) is here!

|

|||||||

## Screenshots

|

## Screenshots

|

||||||

|

|

||||||

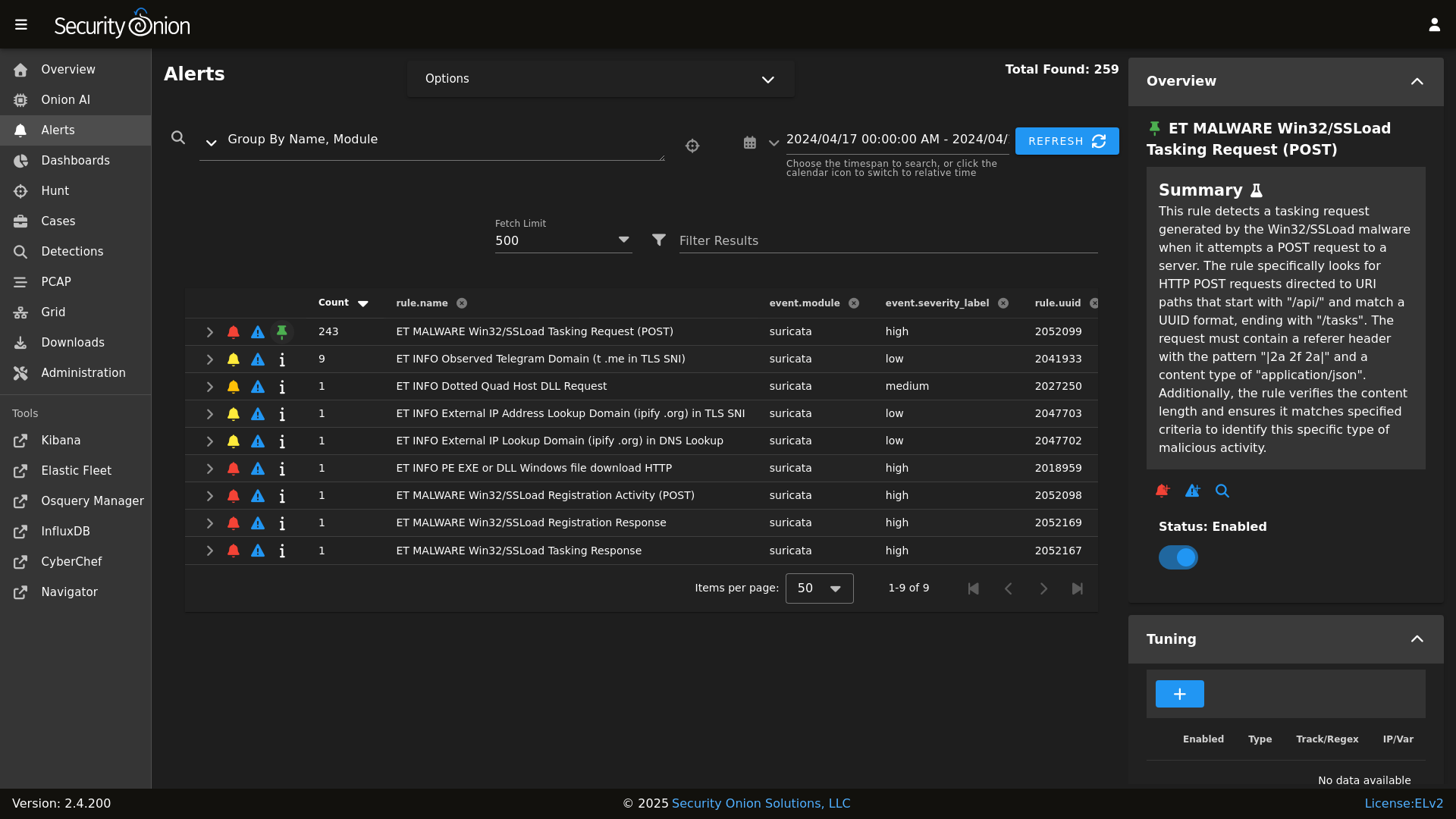

Alerts

|

Alerts

|

||||||

|

|

||||||

|

|

||||||

Dashboards

|

Dashboards

|

||||||

|

|

||||||

|

|

||||||

Hunt

|

Hunt

|

||||||

|

|

||||||

|

|

||||||

PCAP

|

PCAP

|

||||||

|

|

||||||

|

|

||||||

Grid

|

Grid

|

||||||

|

|

||||||

|

|

||||||

Config

|

Config

|

||||||

|

|

||||||

|

|

||||||

### Release Notes

|

### Release Notes

|

||||||

|

|

||||||

|

|||||||

```diff
@@ -46,6 +46,7 @@
     'pcap',
     'suricata',
     'healthcheck',
+    'elasticagent',
     'schedule',
     'tcpreplay',
     'docker_clean'
```

```diff
@@ -8,6 +8,15 @@ soup_scripts:
     - source: salt://common/tools/sbin
     - include_pat:
       - so-common
-      - so-firewall
       - so-image-common
-      - soup
+
+soup_manager_scripts:
+  file.recurse:
+    - name: /usr/sbin
+    - user: root
+    - group: root
+    - file_mode: 755
+    - source: salt://manager/tools/sbin
+    - include_pat:
+      - so-firewall
+      - soup
```
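This hunk narrows the `include_pat` list of `soup_scripts` to `so-common` and `so-image-common`. It also adds a separate `soup_manager_scripts` state that copies `so-firewall` and `soup` from `salt://manager/tools/sbin`.

The sketch below is a simplified Python model of glob-style `include_pat` filtering. Salt's `file.recurse` also accepts regex patterns and matches relative paths, and this model ignores both. The directory listings are hypothetical.

```python
from fnmatch import fnmatchcase


def include_pat_filter(filenames, patterns):
    """Keep the names matching at least one glob pattern, preserving source order."""
    return [name for name in filenames if any(fnmatchcase(name, p) for p in patterns)]


# Hypothetical directory listings for the two fileserver sources.
common_sbin = ["so-common", "so-image-common", "so-status"]
manager_sbin = ["so-firewall", "soup", "so-other-tool"]

print(include_pat_filter(common_sbin, ["so-common", "so-image-common"]))
# ['so-common', 'so-image-common']
print(include_pat_filter(manager_sbin, ["so-firewall", "soup"]))
# ['so-firewall', 'soup']
```

After this change, each script is delivered by exactly one state, from the source directory that now holds it.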

```diff
@@ -43,6 +43,7 @@ desktop_packages:
       - bpftool
       - bzip2
       - chkconfig
+      - chromium
       - chrony
       - cinnamon
       - cinnamon-control-center
@@ -67,6 +68,7 @@ desktop_packages:
       - dosfstools
       - dracut-config-rescue
       - dracut-live
+      - dsniff
       - e2fsprogs
       - ed
       - efi-filesystem
@@ -192,6 +194,7 @@ desktop_packages:
       - nemo-preview
       - net-tools
       - netronome-firmware
+      - ngrep
       - nm-connection-editor
       - nmap-ncat
       - nvme-cli
@@ -220,6 +223,7 @@ desktop_packages:
       - psacct
       - pt-sans-fonts
       - python3-libselinux
+      - python3-scapy
       - qemu-guest-agent
       - quota
       - realmd
@@ -251,6 +255,7 @@ desktop_packages:
       - smc-meera-fonts
       - sos
       - spice-vdagent
+      - ssldump
       - sssd
       - sssd-common
       - sssd-kcm
@@ -263,6 +268,7 @@ desktop_packages:
       - systemd-udev
       - tar
       - tcpdump
+      - tcpflow
       - teamd
       - thai-scalable-waree-fonts
       - time
@@ -282,8 +288,10 @@ desktop_packages:
       - vim-powerline
       - virt-what
       - wget
+      - whois
       - which
       - wireplumber
+      - wireshark
       - words
       - xdg-user-dirs-gtk
       - xed
```

```diff
@@ -178,6 +178,11 @@ docker:
       custom_bind_mounts: []
       extra_hosts: []
       extra_env: []
+    'so-elastic-agent':
+      final_octet: 46
+      custom_bind_mounts: []
+      extra_hosts: []
+      extra_env: []
     'so-telegraf':
       final_octet: 99
       custom_bind_mounts: []
```
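The new `'so-elastic-agent'` entry sets only `final_octet: 46`, yet enabled.sls reads `DOCKER.containers['so-elastic-agent'].ip`. The docker map therefore has to derive the address from the octet somehow.

Below is a Python sketch of one such derivation, with two assumptions that do not come from this commit:

- the bridge is an IPv4 /24 network;
- the subnet is the hypothetical `172.17.1.0/24`.

The sketch also checks that no two containers reuse an octet.

```python
import ipaddress


def container_ip(subnet: str, final_octet: int) -> str:
    """Combine a /24 bridge network with a container's final octet (assumed scheme)."""
    net = ipaddress.ip_network(subnet)
    if net.version != 4 or net.prefixlen != 24:
        raise ValueError("this sketch only handles IPv4 /24 networks")
    if not 1 <= final_octet <= 254:
        raise ValueError("final_octet must be a usable host number (1-254)")
    return str(net.network_address + final_octet)


def check_unique_octets(containers: dict) -> None:
    """Raise if two containers claim the same final_octet."""
    seen = {}
    for name, spec in containers.items():
        octet = spec["final_octet"]
        if octet in seen:
            raise ValueError(f"{name} reuses final_octet {octet} from {seen[octet]}")
        seen[octet] = name


containers = {"so-elastic-agent": {"final_octet": 46}, "so-telegraf": {"final_octet": 99}}
check_unique_octets(containers)
for name, spec in containers.items():
    print(name, container_ip("172.17.1.0/24", spec["final_octet"]))
# so-elastic-agent 172.17.1.46
# so-telegraf 172.17.1.99
```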

salt/elasticagent/config.sls (normal file, 47 lines changed)

`@@ -0,0 +1,47 @@`

```yaml
# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0.

{% from 'allowed_states.map.jinja' import allowed_states %}
{% from 'vars/globals.map.jinja' import GLOBALS %}
{% if sls.split('.')[0] in allowed_states %}

# Add EA Group
elasticagentgroup:
  group.present:
    - name: elastic-agent
    - gid: 949

# Add EA user
elastic-agent:
  user.present:
    - uid: 949
    - gid: 949
    - home: /opt/so/conf/elastic-agent
    - createhome: False

elasticagentconfdir:
  file.directory:
    - name: /opt/so/conf/elastic-agent
    - user: 949
    - group: 939
    - makedirs: True

# Create config
create-elastic-agent-config:
  file.managed:
    - name: /opt/so/conf/elastic-agent/elastic-agent.yml
    - source: salt://elasticagent/files/elastic-agent.yml.jinja
    - user: 949
    - group: 939
    - template: jinja


{% else %}

{{sls}}_state_not_allowed:
  test.fail_without_changes:
    - name: {{sls}}_state_not_allowed

{% endif %}
```
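Every new elasticagent state wraps its body in the same gate. Only the first dotted component of `sls` is compared with `allowed_states`, so `elasticagent.config`, `elasticagent.sostatus`, and the rest are all governed by one `elasticagent` entry. Any other state renders the `{{sls}}_state_not_allowed` failure instead. That entry is presumably what the `@@ -46,6 +46,7 @@` hunk adds.

A Python model of the check, using a hypothetical allow-list:

```python
def state_allowed(sls: str, allowed_states) -> bool:
    """Python equivalent of the Jinja test `sls.split('.')[0] in allowed_states`."""
    return sls.split(".")[0] in allowed_states


# Hypothetical allow-list; the real list is built in allowed_states.map.jinja.
allowed = ["pcap", "suricata", "healthcheck", "elasticagent", "schedule"]
for sls in ("elasticagent.config", "elasticagent.sostatus", "telegraf"):
    print(sls, state_allowed(sls, allowed))
# elasticagent.config True
# elasticagent.sostatus True
# telegraf False
```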

salt/elasticagent/defaults.yaml (normal file, 2 lines changed)

`@@ -0,0 +1,2 @@`

```yaml
elasticagent:
  enabled: False
```

salt/elasticagent/disabled.sls (normal file, 27 lines changed)

`@@ -0,0 +1,27 @@`

```yaml
# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0.

{% from 'allowed_states.map.jinja' import allowed_states %}
{% if sls.split('.')[0] in allowed_states %}

include:
  - elasticagent.sostatus

so-elastic-agent:
  docker_container.absent:
    - force: True

so-elastic-agent_so-status.disabled:
  file.comment:
    - name: /opt/so/conf/so-status/so-status.conf
    - regex: ^so-elastic-agent$

{% else %}

{{sls}}_state_not_allowed:
  test.fail_without_changes:
    - name: {{sls}}_state_not_allowed

{% endif %}
```
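disabled.sls comments out the `so-elastic-agent` line in so-status.conf with `file.comment`. enabled.sls reverses this with `file.uncomment`, and both use the regex `^so-elastic-agent$`.

The sketch below is a simplified Python model of that toggle, not Salt's implementation; Salt's handling of the comment character and surrounding whitespace may differ in detail. The sample file contents are hypothetical.

```python
import re


def comment_lines(text: str, pattern: str, char: str = "#") -> str:
    """Prefix every line matching `pattern` with `char` (simplified file.comment)."""
    rx = re.compile(pattern)
    return "\n".join(char + line if rx.search(line) else line for line in text.split("\n"))


def uncomment_lines(text: str, pattern: str, char: str = "#") -> str:
    """Drop a leading `char` from lines whose remainder matches `pattern` (simplified file.uncomment)."""
    rx = re.compile(pattern)
    out = []
    for line in text.split("\n"):
        if line.startswith(char) and rx.search(line[len(char):]):
            line = line[len(char):]
        out.append(line)
    return "\n".join(out)


conf = "so-nginx\nso-elastic-agent\nso-telegraf"  # hypothetical so-status.conf
disabled = comment_lines(conf, r"^so-elastic-agent$")
print(disabled.split("\n"))  # ['so-nginx', '#so-elastic-agent', 'so-telegraf']
print(comment_lines(disabled, r"^so-elastic-agent$") == disabled)  # True: already commented
print(uncomment_lines(disabled, r"^so-elastic-agent$") == conf)   # True
```

The pattern is anchored, so in this model an already-commented `#so-elastic-agent` no longer matches. Applying the disable step twice is therefore harmless.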

salt/elasticagent/enabled.sls (normal file, 62 lines changed)

`@@ -0,0 +1,62 @@`

```yaml
# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0.

{% from 'allowed_states.map.jinja' import allowed_states %}
{% if sls.split('.')[0] in allowed_states %}
{% from 'vars/globals.map.jinja' import GLOBALS %}
{% from 'docker/docker.map.jinja' import DOCKER %}


include:
  - elasticagent.config
  - elasticagent.sostatus

so-elastic-agent:
  docker_container.running:
    - image: {{ GLOBALS.registry_host }}:5000/{{ GLOBALS.image_repo }}/so-elastic-agent:{{ GLOBALS.so_version }}
    - name: so-elastic-agent
    - hostname: {{ GLOBALS.hostname }}
    - detach: True
    - user: 949
    - networks:
      - sobridge:
        - ipv4_address: {{ DOCKER.containers['so-elastic-agent'].ip }}
    - extra_hosts:
      - {{ GLOBALS.manager }}:{{ GLOBALS.manager_ip }}
      - {{ GLOBALS.hostname }}:{{ GLOBALS.node_ip }}
      {% if DOCKER.containers['so-elastic-agent'].extra_hosts %}
        {% for XTRAHOST in DOCKER.containers['so-elastic-agent'].extra_hosts %}
      - {{ XTRAHOST }}
        {% endfor %}
      {% endif %}
    - binds:
      - /opt/so/conf/elastic-agent/elastic-agent.yml:/usr/share/elastic-agent/elastic-agent.yml:ro
      - /nsm:/nsm:ro
      {% if DOCKER.containers['so-elastic-agent'].custom_bind_mounts %}
        {% for BIND in DOCKER.containers['so-elastic-agent'].custom_bind_mounts %}
      - {{ BIND }}
        {% endfor %}
      {% endif %}
    {% if DOCKER.containers['so-elastic-agent'].extra_env %}
    - environment:
      {% for XTRAENV in DOCKER.containers['so-elastic-agent'].extra_env %}
      - {{ XTRAENV }}
      {% endfor %}
    {% endif %}


delete_so-elastic-agent_so-status.disabled:
  file.uncomment:
    - name: /opt/so/conf/so-status/so-status.conf
    - regex: ^so-elastic-agent$


{% else %}

{{sls}}_state_not_allowed:
  test.fail_without_changes:
    - name: {{sls}}_state_not_allowed

{% endif %}
```
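enabled.sls always passes two host entries (the manager and the local host) and two read-only mounts. It appends `extra_hosts`, `custom_bind_mounts`, and `extra_env` from the docker map only when those lists are non-empty; all three default to `[]` in the docker hunk.

The Python sketch below mirrors that templating logic. The `GLOBALS` values are hypothetical.

```python
from typing import Optional


def extra_hosts(g: dict, container: dict) -> list:
    """Manager and local host entries first, then any per-container extras."""
    hosts = [f"{g['manager']}:{g['manager_ip']}", f"{g['hostname']}:{g['node_ip']}"]
    hosts.extend(container.get("extra_hosts") or [])
    return hosts


def binds(container: dict) -> list:
    """Fixed read-only mounts followed by any custom_bind_mounts."""
    fixed = [
        "/opt/so/conf/elastic-agent/elastic-agent.yml:/usr/share/elastic-agent/elastic-agent.yml:ro",
        "/nsm:/nsm:ro",
    ]
    return fixed + list(container.get("custom_bind_mounts") or [])


def environment(container: dict) -> Optional[list]:
    """None means the `- environment:` key is not rendered at all."""
    env = list(container.get("extra_env") or [])
    return env or None


# Hypothetical GLOBALS values; the container dict matches the new docker defaults.
GLOBALS = {"manager": "so-manager", "manager_ip": "10.0.0.5", "hostname": "so-sensor", "node_ip": "10.0.0.6"}
agent = {"final_octet": 46, "custom_bind_mounts": [], "extra_hosts": [], "extra_env": []}
print(extra_hosts(GLOBALS, agent))  # ['so-manager:10.0.0.5', 'so-sensor:10.0.0.6']
print(environment(agent))           # None
```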

salt/elasticagent/files/elastic-agent.yml.jinja (normal file, 119 lines changed)

`@@ -0,0 +1,119 @@`

```yaml
{% from 'vars/globals.map.jinja' import GLOBALS %}
{%- set ES_USER = salt['pillar.get']('elasticsearch:auth:users:so_elastic_user:user', '') %}
{%- set ES_PASS = salt['pillar.get']('elasticsearch:auth:users:so_elastic_user:pass', '') %}

id: aea1ba80-1065-11ee-a369-97538913b6a9
revision: 2
outputs:
  default:
    type: elasticsearch
    hosts:
      - 'https://{{ GLOBALS.hostname }}:9200'
    username: '{{ ES_USER }}'
    password: '{{ ES_PASS }}'
    ssl.verification_mode: none
output_permissions: {}
agent:
  download:
    sourceURI: 'http://{{ GLOBALS.manager }}:8443/artifacts/'
  monitoring:
    enabled: false
    logs: false
    metrics: false
  features: {}
inputs:
  - id: logfile-logs-80ffa884-2cfc-459a-964a-34df25714d85
    name: suricata-logs
    revision: 1
    type: logfile
    use_output: default
    meta:
      package:
        name: log
        version:
    data_stream:
      namespace: so
    package_policy_id: 80ffa884-2cfc-459a-964a-34df25714d85
    streams:
      - id: logfile-log.log-80ffa884-2cfc-459a-964a-34df25714d85
        data_stream:
          dataset: suricata
        paths:
          - /nsm/suricata/eve*.json
        processors:
          - add_fields:
              target: event
              fields:
                category: network
                module: suricata
        pipeline: suricata.common
  - id: logfile-logs-90103ac4-f6bd-4a4a-b596-952c332390fc
    name: strelka-logs
    revision: 1
    type: logfile
    use_output: default
    meta:
      package:
        name: log
        version:
    data_stream:
      namespace: so
    package_policy_id: 90103ac4-f6bd-4a4a-b596-952c332390fc
    streams:
      - id: logfile-log.log-90103ac4-f6bd-4a4a-b596-952c332390fc
        data_stream:
          dataset: strelka
        paths:
          - /nsm/strelka/log/strelka.log
        processors:
          - add_fields:
              target: event
              fields:
                category: file
                module: strelka
        pipeline: strelka.file
  - id: logfile-logs-6197fe84-9b58-4d9b-8464-3d517f28808d
    name: zeek-logs
    revision: 1
    type: logfile
    use_output: default
    meta:
      package:
        name: log
        version:
    data_stream:
      namespace: so
    package_policy_id: 6197fe84-9b58-4d9b-8464-3d517f28808d
    streams:
      - id: logfile-log.log-6197fe84-9b58-4d9b-8464-3d517f28808d
        data_stream:
          dataset: zeek
        paths:
          - /nsm/zeek/logs/current/*.log
        processors:
          - dissect:
              tokenizer: '/nsm/zeek/logs/current/%{pipeline}.log'
              field: log.file.path
              trim_chars: .log
              target_prefix: ''
          - script:
              lang: javascript
              source: |
                function process(event) {
                  var pl = event.Get("pipeline");
                  event.Put("@metadata.pipeline", "zeek." + pl);
                }
          - add_fields:
              target: event
              fields:
                category: network
                module: zeek
          - add_tags:
              tags: ics
              when:
                regexp:
                  pipeline: >-
                    ^bacnet*|^bsap*|^cip*|^cotp*|^dnp3*|^ecat*|^enip*|^modbus*|^opcua*|^profinet*|^s7comm*
        exclude_files:
          - >-
            broker|capture_loss|cluster|ecat_arp_info|known_hosts|known_services|loaded_scripts|ntp|ocsp|packet_filter|reporter|stats|stderr|stdout.log$
```
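The zeek-logs stream routes each log in four steps:

1. `dissect` extracts the log name from `log.file.path`.
2. The `script` processor turns that name into `@metadata.pipeline` (`zeek.<name>`).
3. `add_tags` marks ICS protocols.
4. `exclude_files` skips housekeeping logs.

The Python model below reproduces that routing with the same two regular expressions. It is a simplification: Beats evaluates these patterns with Go's unanchored regex matching, which behaves like Python's `re.search` for them. The sample log names are illustrative.

```python
import re

PREFIX, SUFFIX = "/nsm/zeek/logs/current/", ".log"
ICS = re.compile(
    r"^bacnet*|^bsap*|^cip*|^cotp*|^dnp3*|^ecat*|^enip*|^modbus*|^opcua*|^profinet*|^s7comm*"
)
EXCLUDE = re.compile(
    r"broker|capture_loss|cluster|ecat_arp_info|known_hosts|known_services"
    r"|loaded_scripts|ntp|ocsp|packet_filter|reporter|stats|stderr|stdout.log$"
)


def route(path: str):
    """Return (ingest pipeline, tags) for a Zeek log path, or None if it is skipped."""
    if EXCLUDE.search(path):  # exclude_files: unanchored match anywhere in the path
        return None
    if not (path.startswith(PREFIX) and path.endswith(SUFFIX)):
        return None  # the dissect tokenizer would not match this path
    name = path[len(PREFIX):-len(SUFFIX)]  # the %{pipeline} field
    tags = ["ics"] if ICS.search(name) else []  # add_tags when.regexp on "pipeline"
    return "zeek." + name, tags  # the script processor's @metadata.pipeline value


for log in ("conn", "modbus_detailed", "stats", "known_hosts"):
    print(log, route(PREFIX + log + SUFFIX))
# conn ('zeek.conn', [])
# modbus_detailed ('zeek.modbus_detailed', ['ics'])
# stats None
# known_hosts None
```

In regex syntax the trailing `*` quantifies only the preceding character. `^bacnet*` therefore acts as a prefix match on `bacne`, which still covers the intended `bacnet*` log names.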

salt/elasticagent/init.sls (normal file, 13 lines changed)

`@@ -0,0 +1,13 @@`

```yaml
# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0.

{% from 'elasticagent/map.jinja' import ELASTICAGENTMERGED %}

include:
{% if ELASTICAGENTMERGED.enabled %}
  - elasticagent.enabled
{% else %}
  - elasticagent.disabled
{% endif %}
```

salt/elasticagent/map.jinja (normal file, 7 lines changed)

`@@ -0,0 +1,7 @@`

```jinja
{# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
   or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
   https://securityonion.net/license; you may not use this file except in compliance with the
   Elastic License 2.0. #}

{% import_yaml 'elasticagent/defaults.yaml' as ELASTICAGENTDEFAULTS %}
{% set ELASTICAGENTMERGED = salt['pillar.get']('elasticagent', ELASTICAGENTDEFAULTS.elasticagent, merge=True) %}
```
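map.jinja overlays the `elasticagent` pillar on defaults.yaml via `pillar.get(..., merge=True)`. init.sls then includes enabled.sls or disabled.sls depending on the merged `enabled` flag.

A Python sketch of that decision follows. It assumes a recursive dictionary merge in which pillar values win, a simplification of Salt's merge behaviour.

```python
import copy


def merge(defaults: dict, override: dict) -> dict:
    """Recursively overlay `override` on `defaults`; override wins on conflicts."""
    result = copy.deepcopy(defaults)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


DEFAULTS = {"enabled": False}  # elasticagent/defaults.yaml


def included_state(pillar: dict) -> str:
    """Which state init.sls would include for a given pillar."""
    merged = merge(DEFAULTS, pillar.get("elasticagent", {}))
    return "elasticagent.enabled" if merged["enabled"] else "elasticagent.disabled"


print(included_state({}))                                   # elasticagent.disabled
print(included_state({"elasticagent": {"enabled": True}}))  # elasticagent.enabled
```

Because the default is `False`, the agent container stays absent on any node whose pillar does not explicitly enable it.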

salt/elasticagent/sostatus.sls (normal file, 21 lines changed)

`@@ -0,0 +1,21 @@`

```yaml
# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0.

{% from 'allowed_states.map.jinja' import allowed_states %}
{% if sls.split('.')[0] in allowed_states %}

append_so-elastic-agent_so-status.conf:
  file.append:
    - name: /opt/so/conf/so-status/so-status.conf
    - text: so-elastic-agent
    - unless: grep -q so-elastic-agent$ /opt/so/conf/so-status/so-status.conf

{% else %}

{{sls}}_state_not_allowed:
  test.fail_without_changes:
    - name: {{sls}}_state_not_allowed

{% endif %}
```
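sostatus.sls appends `so-elastic-agent` to so-status.conf unless `grep -q so-elastic-agent$` already finds it. That grep pattern is not anchored at the start of the line, so it also matches a commented `#so-elastic-agent` left behind by disabled.sls. In that case no duplicate entry is appended; enabled.sls's uncomment step restores the line instead.

A Python model of the append-unless logic; the file contents are hypothetical:

```python
import re


def ensure_listed(conf_text: str, name: str = "so-elastic-agent") -> str:
    """Append `name` unless some line already ends with it (file.append + unless grep)."""
    # Like `grep -q so-elastic-agent$`, this also matches a commented "#so-elastic-agent".
    if re.search(re.escape(name) + r"$", conf_text, flags=re.MULTILINE):
        return conf_text
    if conf_text and not conf_text.endswith("\n"):
        conf_text += "\n"
    return conf_text + name + "\n"


print(repr(ensure_listed("so-nginx\n")))                     # 'so-nginx\nso-elastic-agent\n'
print(repr(ensure_listed("so-nginx\nso-elastic-agent\n")))   # 'so-nginx\nso-elastic-agent\n'
print(repr(ensure_listed("so-nginx\n#so-elastic-agent\n")))  # 'so-nginx\n#so-elastic-agent\n'
```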

salt/elasticagent/tools/sbin/so-elastic-agent-restart (executable file, 10 lines changed)

`@@ -0,0 +1,10 @@`

```bash
#!/bin/bash

# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0.

. /usr/sbin/so-common

/usr/sbin/so-restart elastic-agent $1
```

salt/elasticagent/tools/sbin/so-elastic-agent-start (executable file, 12 lines changed)

`@@ -0,0 +1,12 @@`

```bash
#!/bin/bash

# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0.



. /usr/sbin/so-common

/usr/sbin/so-start elastic-agent $1
```

salt/elasticagent/tools/sbin/so-elastic-agent-stop (executable file, 12 lines changed)

`@@ -0,0 +1,12 @@`

```bash
#!/bin/bash

# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
# https://securityonion.net/license; you may not use this file except in compliance with the
# Elastic License 2.0.



. /usr/sbin/so-common

/usr/sbin/so-stop elastic-agent $1
```

```diff
@@ -8,13 +8,13 @@
 {% if sls.split('.')[0] in allowed_states %}
 
 # Add EA Group
-elasticsagentgroup:
+elasticfleetgroup:
   group.present:
-    - name: elastic-agent
+    - name: elastic-fleet
     - gid: 947
 
 # Add EA user
-elastic-agent:
+elastic-fleet:
   user.present:
     - uid: 947
     - gid: 947
```

```diff
@@ -23,3 +23,11 @@ elasticfleet:
         - stats
         - stderr
         - stdout
+  packages:
+    - aws
+    - azure
+    - cloudflare
+    - fim
+    - github
+    - google_workspace
+    - 1password
```

```diff
@@ -8,7 +8,7 @@
   "name": "import-zeek-logs",
   "namespace": "so",
   "description": "Zeek Import logs",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_general",
   "inputs": {
     "logs-logfile": {
       "enabled": true,
```

```diff
@@ -9,7 +9,7 @@
   "name": "zeek-logs",
   "namespace": "so",
   "description": "Zeek logs",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_general",
   "inputs": {
     "logs-logfile": {
       "enabled": true,
```

`@@ -0,0 +1,106 @@`

```json
{
  "package": {
    "name": "elasticsearch",
    "version": ""
  },
  "name": "elasticsearch-logs",
  "namespace": "default",
  "description": "Elasticsearch Logs",
  "policy_id": "so-grid-nodes_general",
  "inputs": {
    "elasticsearch-logfile": {
      "enabled": true,
      "streams": {
        "elasticsearch.audit": {
          "enabled": false,
          "vars": {
            "paths": [
              "/var/log/elasticsearch/*_audit.json"
            ]
          }
        },
        "elasticsearch.deprecation": {
          "enabled": false,
          "vars": {
            "paths": [
              "/var/log/elasticsearch/*_deprecation.json"
            ]
          }
        },
        "elasticsearch.gc": {
          "enabled": false,
          "vars": {
            "paths": [
              "/var/log/elasticsearch/gc.log.[0-9]*",
              "/var/log/elasticsearch/gc.log"
            ]
          }
        },
        "elasticsearch.server": {
          "enabled": true,
          "vars": {
            "paths": [
              "/opt/so/log/elasticsearch/*.log"
            ]
          }
        },
        "elasticsearch.slowlog": {
          "enabled": false,
          "vars": {
            "paths": [
              "/var/log/elasticsearch/*_index_search_slowlog.json",
              "/var/log/elasticsearch/*_index_indexing_slowlog.json"
            ]
          }
        }
      }
    },
    "elasticsearch-elasticsearch/metrics": {
      "enabled": false,
      "vars": {
        "hosts": [
          "http://localhost:9200"
        ],
        "scope": "node"
      },
      "streams": {
        "elasticsearch.stack_monitoring.ccr": {
          "enabled": false
        },
        "elasticsearch.stack_monitoring.cluster_stats": {
          "enabled": false
        },
        "elasticsearch.stack_monitoring.enrich": {
          "enabled": false
        },
        "elasticsearch.stack_monitoring.index": {
          "enabled": false
        },
        "elasticsearch.stack_monitoring.index_recovery": {
          "enabled": false,
          "vars": {
            "active.only": true
          }
        },
        "elasticsearch.stack_monitoring.index_summary": {
          "enabled": false
        },
        "elasticsearch.stack_monitoring.ml_job": {
          "enabled": false
        },
        "elasticsearch.stack_monitoring.node": {
          "enabled": false
        },
        "elasticsearch.stack_monitoring.node_stats": {
          "enabled": false
        },
        "elasticsearch.stack_monitoring.pending_tasks": {
          "enabled": false
        },
        "elasticsearch.stack_monitoring.shard": {
          "enabled": false
        }
      }
    }
  }
}
```
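The elasticsearch-logs policy declares many streams, but only `elasticsearch.server` is enabled; it reads `/opt/so/log/elasticsearch/*.log`. The audit, deprecation, gc, and slowlog streams are disabled, and so is the entire metrics input.

Below is a small Python helper that lists what a package-policy JSON of this shape actually collects. It makes two assumptions:

- a stream counts only when its parent input is also enabled;
- a missing `enabled` key means disabled.

The policy literal is trimmed from the file above.

```python
import json


def enabled_streams(policy: dict) -> list:
    """(input, stream) pairs that would collect data: both levels must be enabled."""
    pairs = []
    for input_name, spec in policy.get("inputs", {}).items():
        if not spec.get("enabled", False):
            continue
        for stream_name, stream in spec.get("streams", {}).items():
            if stream.get("enabled", False):
                pairs.append((input_name, stream_name))
    return pairs


# Trimmed from the elasticsearch-logs policy: paths and most streams omitted.
POLICY = json.loads("""
{
  "name": "elasticsearch-logs",
  "inputs": {
    "elasticsearch-logfile": {
      "enabled": true,
      "streams": {
        "elasticsearch.audit": {"enabled": false},
        "elasticsearch.server": {"enabled": true},
        "elasticsearch.slowlog": {"enabled": false}
      }
    },
    "elasticsearch-elasticsearch/metrics": {
      "enabled": false,
      "streams": {"elasticsearch.stack_monitoring.node": {"enabled": false}}
    }
  }
}
""")
print(enabled_streams(POLICY))  # [('elasticsearch-logfile', 'elasticsearch.server')]
```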

```diff
@@ -6,7 +6,7 @@
   "name": "idh-logs",
   "namespace": "so",
   "description": "IDH integration",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_general",
   "inputs": {
     "logs-logfile": {
       "enabled": true,
```

```diff
@@ -6,7 +6,7 @@
   "name": "import-evtx-logs",
   "namespace": "so",
   "description": "Import Windows EVTX logs",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_general",
   "vars": {},
   "inputs": {
     "logs-logfile": {
```

```diff
@@ -6,7 +6,7 @@
   "name": "import-suricata-logs",
   "namespace": "so",
   "description": "Import Suricata logs",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_general",
   "inputs": {
     "logs-logfile": {
       "enabled": true,
```

`@@ -0,0 +1,29 @@`

```json
{
  "package": {
    "name": "log",
    "version": ""
  },
  "name": "kratos-logs",
  "namespace": "so",
  "description": "Kratos logs",
  "policy_id": "so-grid-nodes_general",
  "inputs": {
    "logs-logfile": {
      "enabled": true,
      "streams": {
        "log.log": {
          "enabled": true,
          "vars": {
            "paths": [
              "/opt/so/log/kratos/kratos.log"
            ],
            "data_stream.dataset": "kratos",
            "tags": ["so-kratos"],
            "processors": "- decode_json_fields:\n fields: [\"message\"]\n target: \"\"\n add_error_key: true \n- add_fields:\n target: event\n fields:\n category: iam\n module: kratos",
            "custom": "pipeline: kratos"
          }
        }
      }
    }
  }
}
```
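The kratos-logs processors do two things:

- `decode_json_fields` with `target: ""` parses `message` and merges the result into the event root.
- `add_fields` then sets `event.category: iam` and `event.module: kratos`.

The Python sketch below is a simplified model, not the Beats implementation. It assumes existing keys are kept because `overwrite_keys` is not set, and it models `add_error_key: true` as an `error.message` field. The sample messages are hypothetical.

```python
import json


def kratos_processors(event: dict) -> dict:
    """Simplified model of the kratos-logs processors; not the Beats implementation."""
    out = dict(event)
    try:
        decoded = json.loads(out.get("message", ""))
    except json.JSONDecodeError as exc:
        decoded = None
        out["error"] = {"message": str(exc)}  # add_error_key: true
    if isinstance(decoded, dict):
        for key, value in decoded.items():
            out.setdefault(key, value)  # target "": merge into the root, keep existing keys
    existing = out.get("event")
    fields = dict(existing) if isinstance(existing, dict) else {}
    fields.update({"category": "iam", "module": "kratos"})  # add_fields, target: event
    out["event"] = fields
    return out


ok = kratos_processors({"message": '{"level": "info", "msg": "login"}'})
print(ok["level"], ok["event"])  # info {'category': 'iam', 'module': 'kratos'}
bad = kratos_processors({"message": "not json"})
print("error" in bad, bad["event"]["module"])  # True kratos
```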

`@@ -0,0 +1,20 @@`

```json
{
  "package": {
    "name": "osquery_manager",
    "version": ""
  },
  "name": "osquery-grid-nodes",
  "namespace": "default",
  "policy_id": "so-grid-nodes_general",
  "inputs": {
    "osquery_manager-osquery": {
      "enabled": true,
      "streams": {
        "osquery_manager.result": {
          "enabled": true,
          "vars": {}
        }
      }
    }
  }
}
```

`@@ -0,0 +1,76 @@`

```json
{
  "package": {
    "name": "redis",
    "version": ""
  },
  "name": "redis-logs",
  "namespace": "default",
  "description": "Redis logs",
  "policy_id": "so-grid-nodes_general",
  "inputs": {
    "redis-logfile": {
      "enabled": true,
      "streams": {
        "redis.log": {
          "enabled": true,
          "vars": {
            "paths": [
              "/opt/so/log/redis/redis.log"
            ],
            "tags": [
              "redis-log"
            ],
            "preserve_original_event": false
          }
        }
      }
    },
    "redis-redis": {
      "enabled": false,
      "streams": {
        "redis.slowlog": {
          "enabled": false,
          "vars": {
            "hosts": [
              "127.0.0.1:6379"
            ],
            "password": ""
          }
        }
      }
    },
    "redis-redis/metrics": {
      "enabled": false,
      "vars": {
        "hosts": [
          "127.0.0.1:6379"
        ],
        "idle_timeout": "20s",
        "maxconn": 10,
        "network": "tcp",
        "password": ""
      },
      "streams": {
        "redis.info": {
          "enabled": false,
          "vars": {
            "period": "10s"
          }
        },
        "redis.key": {
          "enabled": false,
          "vars": {
            "key.patterns": "- limit: 20\n pattern: *\n",
            "period": "10s"
          }
        },
        "redis.keyspace": {
          "enabled": false,
          "vars": {
            "period": "10s"
          }
        }
      }
    }
  }
}
```

`@@ -0,0 +1,29 @@`

```json
{
  "package": {
    "name": "log",
    "version": ""
  },
  "name": "soc-auth-sync-logs",
  "namespace": "so",
  "description": "Security Onion - Elastic Auth Sync - Logs",
  "policy_id": "so-grid-nodes_general",
  "inputs": {
    "logs-logfile": {
      "enabled": true,
      "streams": {
        "log.log": {
          "enabled": true,
          "vars": {
            "paths": [
              "/opt/so/log/soc/sync.log"
            ],
            "data_stream.dataset": "soc",
            "tags": ["so-soc"],
            "processors": "- dissect:\n tokenizer: \"%{event.action}\"\n field: \"message\"\n target_prefix: \"\"\n- add_fields:\n target: event\n fields:\n category: host\n module: soc\n dataset_temp: auth_sync",
            "custom": "pipeline: common"
          }
        }
      }
    }
  }
}
```

@@ -0,0 +1,29 @@
+{
+  "package": {
+    "name": "log",
+    "version": ""
+  },
+  "name": "soc-salt-relay-logs",
+  "namespace": "so",
+  "description": "Security Onion - Salt Relay - Logs",
+  "policy_id": "so-grid-nodes_general",
+  "inputs": {
+    "logs-logfile": {
+      "enabled": true,
+      "streams": {
+        "log.log": {
+          "enabled": true,
+          "vars": {
+            "paths": [
+              "/opt/so/log/soc/salt-relay.log"
+            ],
+            "data_stream.dataset": "soc",
+            "tags": ["so-soc"],
+            "processors": "- dissect:\n tokenizer: \"%{soc.ts} | %{event.action}\"\n field: \"message\"\n target_prefix: \"\"\n- add_fields:\n target: event\n fields:\n category: host\n module: soc\n dataset_temp: salt_relay",
+            "custom": "pipeline: common"
+          }
+        }
+      }
+    }
+  }
+}
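The salt-relay integration splits each log line with the dissect tokenizer `%{soc.ts} | %{event.action}`. As a rough local approximation (plain bash parameter expansion, not the Beats dissect processor itself), the split can be sketched as below; `split_salt_relay_line` and the sample line are made up for illustration.

```shell
#!/usr/bin/env bash
# Approximates the dissect tokenizer "%{soc.ts} | %{event.action}".
# Hypothetical helper, not part of Security Onion. Assumes the line contains
# the literal " | " separator; dissect would report a failure otherwise.
split_salt_relay_line() {
  local line="$1"
  local ts="${line%% | *}"      # everything before the first " | "
  local action="${line#* | }"   # everything after the first " | " (last key takes the rest)
  printf 'soc.ts=%s\nevent.action=%s\n' "$ts" "$action"
}

split_salt_relay_line "2023-05-01T12:00:00Z | made-up relay message"
```

As with dissect, the final key keeps any later ` | ` sequences as part of its value.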

@@ -0,0 +1,29 @@
+{
+  "package": {
+    "name": "log",
+    "version": ""
+  },
+  "name": "soc-sensoroni-logs",
+  "namespace": "so",
+  "description": "Security Onion - Sensoroni - Logs",
+  "policy_id": "so-grid-nodes_general",
+  "inputs": {
+    "logs-logfile": {
+      "enabled": true,
+      "streams": {
+        "log.log": {
+          "enabled": true,
+          "vars": {
+            "paths": [
+              "/opt/so/log/sensoroni/sensoroni.log"
+            ],
+            "data_stream.dataset": "soc",
+            "tags": [],
+            "processors": "- decode_json_fields:\n fields: [\"message\"]\n target: \"sensoroni\"\n process_array: true\n max_depth: 2\n add_error_key: true \n- add_fields:\n target: event\n fields:\n category: host\n module: soc\n dataset_temp: sensoroni\n- rename:\n fields:\n - from: \"sensoroni.fields.sourceIp\"\n to: \"source.ip\"\n - from: \"sensoroni.fields.status\"\n to: \"http.response.status_code\"\n - from: \"sensoroni.fields.method\"\n to: \"http.request.method\"\n - from: \"sensoroni.fields.path\"\n to: \"url.path\"\n - from: \"sensoroni.message\"\n to: \"event.action\"\n - from: \"sensoroni.level\"\n to: \"log.level\"\n ignore_missing: true",
+            "custom": "pipeline: common"
+          }
+        }
+      }
+    }
+  }
+}

@@ -0,0 +1,29 @@
+{
+  "package": {
+    "name": "log",
+    "version": ""
+  },
+  "name": "soc-server-logs",
+  "namespace": "so",
+  "description": "Security Onion Console Logs",
+  "policy_id": "so-grid-nodes_general",
+  "inputs": {
+    "logs-logfile": {
+      "enabled": true,
+      "streams": {
+        "log.log": {
+          "enabled": true,
+          "vars": {
+            "paths": [
+              "/opt/so/log/soc/sensoroni-server.log"
+            ],
+            "data_stream.dataset": "soc",
+            "tags": ["so-soc"],
+            "processors": "- decode_json_fields:\n fields: [\"message\"]\n target: \"soc\"\n process_array: true\n max_depth: 2\n add_error_key: true \n- add_fields:\n target: event\n fields:\n category: host\n module: soc\n dataset_temp: server\n- rename:\n fields:\n - from: \"soc.fields.sourceIp\"\n to: \"source.ip\"\n - from: \"soc.fields.status\"\n to: \"http.response.status_code\"\n - from: \"soc.fields.method\"\n to: \"http.request.method\"\n - from: \"soc.fields.path\"\n to: \"url.path\"\n - from: \"soc.message\"\n to: \"event.action\"\n - from: \"soc.level\"\n to: \"log.level\"\n ignore_missing: true",
+            "custom": "pipeline: common"
+          }
+        }
+      }
+    }
+  }
+}
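The sensoroni and soc-server integrations both decode the JSON log line under a prefix (`sensoroni` or `soc`) and then rename the same handful of fields to ECS names. That rename table can be sketched as a bash 4 associative array; `ECS_RENAMES` and `ecs_target` are hypothetical names used for illustration only, not part of Security Onion or Beats.

```shell
#!/usr/bin/env bash
# Rename table copied from the processors above, keyed by the path under the
# decode prefix ("sensoroni." or "soc."). Requires bash 4+ for declare -A.
declare -A ECS_RENAMES=(
  [fields.sourceIp]=source.ip
  [fields.status]=http.response.status_code
  [fields.method]=http.request.method
  [fields.path]=url.path
  [message]=event.action
  [level]=log.level
)

# ecs_target PREFIX FIELD: print the ECS name a decoded field ends up under.
# Fields not listed in the rename processor stay at PREFIX.FIELD.
ecs_target() {
  local prefix="$1" field="$2"
  if [[ -n "${ECS_RENAMES[$field]+x}" ]]; then
    printf '%s\n' "${ECS_RENAMES[$field]}"
  else
    printf '%s.%s\n' "$prefix" "$field"
  fi
}
```

For example, `ecs_target soc fields.sourceIp` prints `source.ip`, while an unlisted field such as `fields.requestId` would stay at `soc.fields.requestId`.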

@@ -6,7 +6,7 @@
   "name": "strelka-logs",
   "namespace": "so",
   "description": "Strelka logs",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_general",
   "inputs": {
     "logs-logfile": {
       "enabled": true,

@@ -6,7 +6,7 @@
   "name": "suricata-logs",
   "namespace": "so",
   "description": "Suricata integration",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_general",
   "inputs": {
     "logs-logfile": {
       "enabled": true,

@@ -6,7 +6,7 @@
   "name": "syslog-tcp-514",
   "namespace": "so",
   "description": "Syslog Over TCP Port 514",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_general",
   "inputs": {
     "tcp-tcp": {
       "enabled": true,

@@ -6,7 +6,7 @@
   "name": "syslog-udp-514",
   "namespace": "so",
   "description": "Syslog over UDP Port 514",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_general",
   "inputs": {
     "udp-udp": {
       "enabled": true,

@@ -0,0 +1,40 @@
+{
+  "policy_id": "so-grid-nodes_general",
+  "package": {
+    "name": "system",
+    "version": ""
+  },
+  "name": "system-grid-nodes",
+  "namespace": "default",
+  "inputs": {
+    "system-logfile": {
+      "enabled": true,
+      "streams": {
+        "system.auth": {
+          "enabled": true,
+          "vars": {
+            "paths": [
+              "/var/log/auth.log*",
+              "/var/log/secure*"
+            ]
+          }
+        },
+        "system.syslog": {
+          "enabled": true,
+          "vars": {
+            "paths": [
+              "/var/log/messages*",
+              "/var/log/syslog*"
+            ]
+          }
+        }
+      }
+    },
+    "system-winlog": {
+      "enabled": false
+    },
+    "system-system/metrics": {
+      "enabled": false
+    }
+  }
+}

@@ -6,7 +6,7 @@
   "name": "elasticsearch-logs",
   "namespace": "default",
   "description": "Elasticsearch Logs",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_heavy",
   "inputs": {
     "elasticsearch-logfile": {
       "enabled": true,

@@ -6,7 +6,7 @@
   "name": "kratos-logs",
   "namespace": "so",
   "description": "Kratos logs",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_heavy",
   "inputs": {
     "logs-logfile": {
       "enabled": true,

@@ -5,7 +5,7 @@
   },
   "name": "osquery-grid-nodes",
   "namespace": "default",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_heavy",
   "inputs": {
     "osquery_manager-osquery": {
       "enabled": true,

@@ -6,7 +6,7 @@
   "name": "redis-logs",
   "namespace": "default",
   "description": "Redis logs",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_heavy",
   "inputs": {
     "redis-logfile": {
       "enabled": true,

@@ -6,7 +6,7 @@
   "name": "soc-auth-sync-logs",
   "namespace": "so",
   "description": "Security Onion - Elastic Auth Sync - Logs",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_heavy",
   "inputs": {
     "logs-logfile": {
       "enabled": true,

@@ -6,7 +6,7 @@
   "name": "soc-salt-relay-logs",
   "namespace": "so",
   "description": "Security Onion - Salt Relay - Logs",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_heavy",
   "inputs": {
     "logs-logfile": {
       "enabled": true,

@@ -6,7 +6,7 @@
   "name": "soc-sensoroni-logs",
   "namespace": "so",
   "description": "Security Onion - Sensoroni - Logs",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_heavy",
   "inputs": {
     "logs-logfile": {
       "enabled": true,

@@ -6,7 +6,7 @@
   "name": "soc-server-logs",
   "namespace": "so",
   "description": "Security Onion Console Logs",
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_heavy",
   "inputs": {
     "logs-logfile": {
       "enabled": true,

@@ -1,5 +1,5 @@
 {
-  "policy_id": "so-grid-nodes",
+  "policy_id": "so-grid-nodes_heavy",
   "package": {
     "name": "system",
     "version": ""

@@ -2,15 +2,24 @@
 # or more contributor license agreements. Licensed under the Elastic License 2.0; you may not use
 # this file except in compliance with the Elastic License 2.0.
 
-{%- set GRIDNODETOKEN = salt['pillar.get']('global:fleet_grid_enrollment_token') -%}
+{%- set GRIDNODETOKENGENERAL = salt['pillar.get']('global:fleet_grid_enrollment_token_general') -%}
+{%- set GRIDNODETOKENHEAVY = salt['pillar.get']('global:fleet_grid_enrollment_token_heavy') -%}
 
 {% set AGENT_STATUS = salt['service.available']('elastic-agent') %}
 {% if not AGENT_STATUS %}
 
+{% if grains.role not in ['so-heavynode'] %}
 run_installer:
   cmd.script:
     - name: salt://elasticfleet/files/so_agent-installers/so-elastic-agent_linux_amd64
     - cwd: /opt/so
-    - args: -token={{ GRIDNODETOKEN }}
+    - args: -token={{ GRIDNODETOKENGENERAL }}
+{% else %}
+run_installer:
+  cmd.script:
+    - name: salt://elasticfleet/files/so_agent-installers/so-elastic-agent_linux_amd64
+    - cwd: /opt/so
+    - args: -token={{ GRIDNODETOKENHEAVY }}
+{% endif %}
 
 {% endif %}
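After this change the installer state picks the enrollment token by node role: `so-heavynode` enrolls with the heavy-node token, every other role with the general token. The same branch as a small bash function, for illustration only; the function name and the arguments in the usage line are hypothetical.

```shell
#!/usr/bin/env bash
# Mirrors the Jinja branch: heavy nodes get the heavy-node token,
# all other roles get the general token.
select_enrollment_token() {
  local role="$1" general_token="$2" heavy_token="$3"
  case "$role" in
    so-heavynode) printf '%s\n' "$heavy_token" ;;
    *)            printf '%s\n' "$general_token" ;;
  esac
}

select_enrollment_token so-heavynode GENERAL-TOKEN HEAVY-TOKEN   # prints HEAVY-TOKEN
```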

@@ -51,6 +51,21 @@ elastic_fleet_integration_update() {
   curl -K /opt/so/conf/elasticsearch/curl.config -L -X PUT "localhost:5601/api/fleet/package_policies/$UPDATE_ID" -H 'kbn-xsrf: true' -H 'Content-Type: application/json' -d "$JSON_STRING"
 }
 
+elastic_fleet_package_version_check() {
+  PACKAGE=$1
+  curl -s -K /opt/so/conf/elasticsearch/curl.config -b "sid=$SESSIONCOOKIE" -L -X GET "localhost:5601/api/fleet/epm/packages/$PACKAGE" | jq -r '.item.version'
+}
+
+elastic_fleet_package_install() {
+  PKGKEY=$1
+  curl -s -K /opt/so/conf/elasticsearch/curl.config -b "sid=$SESSIONCOOKIE" -L -X POST -H 'kbn-xsrf: true' "localhost:5601/api/fleet/epm/packages/$PKGKEY"
+}
+
+elastic_fleet_package_is_installed() {
+  PACKAGE=$1
+  curl -s -K /opt/so/conf/elasticsearch/curl.config -b "sid=$SESSIONCOOKIE" -L -X GET -H 'kbn-xsrf: true' "localhost:5601/api/fleet/epm/packages/$PACKAGE" | jq -r '.item.status'
+}
+
 elastic_fleet_policy_create() {
 
   NAME=$1
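The three helpers added to so-elastic-fleet-common each wrap one request to Kibana's Fleet EPM packages endpoint. A minimal sketch of how they could be combined to install a package only when Fleet does not already report it as installed: the helpers are stubbed with canned output so the sketch runs without Kibana, `ensure_fleet_package` and the stub values are hypothetical, and `installed` is assumed to be the status string Fleet returns for an installed package.

```shell
#!/usr/bin/env bash
# Stubs standing in for the real helpers, which query
# localhost:5601/api/fleet/epm/packages/<name>. Values are made up.
elastic_fleet_package_is_installed() { printf '%s\n' "${STUB_STATUS:-not_installed}"; }
elastic_fleet_package_version_check() { printf '%s\n' "${STUB_VERSION:-1.0.0}"; }
elastic_fleet_package_install() { printf 'install %s\n' "$1"; }

# Hypothetical wrapper: skip packages already installed, otherwise install
# "<name>-<version>" the way so-elastic-fleet-package-load builds the key.
ensure_fleet_package() {
  local pkg="$1" status version
  status=$(elastic_fleet_package_is_installed "$pkg")
  if [ "$status" = "installed" ]; then
    printf 'skip %s\n' "$pkg"
    return 0
  fi
  version=$(elastic_fleet_package_version_check "$pkg")
  elastic_fleet_package_install "$pkg-$version"
}
```

Sourcing this after so-elastic-fleet-common would replace the real helpers with the stubs, so keep it separate.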

@@ -25,11 +25,30 @@ if [ ! -f /opt/so/state/eaintegrations.txt ]; then
     fi
   done
 
-  # Grid Nodes
-  for INTEGRATION in /opt/so/conf/elastic-fleet/integrations/grid-nodes/*.json
+  # Grid Nodes - General
+  for INTEGRATION in /opt/so/conf/elastic-fleet/integrations/grid-nodes_general/*.json
   do
-    printf "\n\nGrid Nodes Policy - Loading $INTEGRATION\n"
-    elastic_fleet_integration_check "so-grid-nodes" "$INTEGRATION"
+    printf "\n\nGrid Nodes Policy_General - Loading $INTEGRATION\n"
+    elastic_fleet_integration_check "so-grid-nodes_general" "$INTEGRATION"
+    if [ -n "$INTEGRATION_ID" ]; then
+      printf "\n\nIntegration $NAME exists - Updating integration\n"
+      elastic_fleet_integration_update "$INTEGRATION_ID" "@$INTEGRATION"
+    else
+      printf "\n\nIntegration does not exist - Creating integration\n"
+      if [ "$NAME" != "elasticsearch-logs" ]; then
+        elastic_fleet_integration_create "@$INTEGRATION"
+      fi
+    fi
+  done
+  if [[ "$RETURN_CODE" != "1" ]]; then
+    touch /opt/so/state/eaintegrations.txt
+  fi
+
+  # Grid Nodes - Heavy
+  for INTEGRATION in /opt/so/conf/elastic-fleet/integrations/grid-nodes_heavy/*.json
+  do
+    printf "\n\nGrid Nodes Policy_Heavy - Loading $INTEGRATION\n"
+    elastic_fleet_integration_check "so-grid-nodes_heavy" "$INTEGRATION"
     if [ -n "$INTEGRATION_ID" ]; then
       printf "\n\nIntegration $NAME exists - Updating integration\n"
       elastic_fleet_integration_update "$INTEGRATION_ID" "@$INTEGRATION"
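In the updated loader each integration directory feeds the policy of the same name with an `so-` prefix (`grid-nodes_general` to `so-grid-nodes_general`, `grid-nodes_heavy` to `so-grid-nodes_heavy`). A hypothetical helper expressing that convention; it is an illustration that assumes the pattern holds only for these two directories, not a function from the repository.

```shell
#!/usr/bin/env bash
# Derive the Fleet policy ID from an integration file's parent directory,
# e.g. .../integrations/grid-nodes_heavy/redis-logs.json -> so-grid-nodes_heavy
policy_for_integration() {
  local dir
  dir=$(basename "$(dirname "$1")")
  printf 'so-%s\n' "$dir"
}

policy_for_integration /opt/so/conf/elastic-fleet/integrations/grid-nodes_general/strelka-logs.json
```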

17

salt/elasticfleet/tools/sbin_jinja/so-elastic-fleet-package-load

Executable file

@@ -0,0 +1,17 @@
+#!/bin/bash
+
+# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
+# or more contributor license agreements. Licensed under the Elastic License 2.0; you may not use
+# this file except in compliance with the Elastic License 2.0.
+{%- import_yaml 'elasticfleet/defaults.yaml' as ELASTICFLEETDEFAULTS %}
+{%- set SUPPORTED_PACKAGES = salt['pillar.get']('elasticfleet:packages', default=ELASTICFLEETDEFAULTS.elasticfleet.packages, merge=True) %}
+
+. /usr/sbin/so-elastic-fleet-common
+
+{%- for PACKAGE in SUPPORTED_PACKAGES %}
+echo "Setting up {{ PACKAGE }} package..."
+VERSION=$(elastic_fleet_package_version_check "{{ PACKAGE }}")
+elastic_fleet_package_install "{{ PACKAGE }}-$VERSION"
+echo
+{%- endfor %}
+echo
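The Jinja loop renders one block of shell per entry in `SUPPORTED_PACKAGES`. Roughly the same logic as a plain-bash loop, assuming a hypothetical two-package list (the real list is rendered from the `elasticfleet:packages` pillar merged over `elasticfleet/defaults.yaml`) and stubbed helpers that return a made-up version instead of calling Kibana:

```shell
#!/usr/bin/env bash
# Hypothetical package list; the real script renders it from the pillar.
PACKAGES=(system osquery_manager)

# Stubs for the so-elastic-fleet-common helpers so no Kibana call is made.
elastic_fleet_package_version_check() { printf '1.2.3\n'; }
elastic_fleet_package_install() { printf 'POST /api/fleet/epm/packages/%s\n' "$1"; }

load_packages() {
  local PACKAGE VERSION
  for PACKAGE in "${PACKAGES[@]}"; do
    echo "Setting up $PACKAGE package..."
    VERSION=$(elastic_fleet_package_version_check "$PACKAGE")
    # Fleet installs a specific release addressed as "<name>-<version>".
    elastic_fleet_package_install "$PACKAGE-$VERSION"
    echo
  done
}

load_packages
```

Rendering the loop at template time, as the original does, keeps the installed script free of any dependency on the pillar at run time.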

@@ -48,6 +48,11 @@ curl -K /opt/so/conf/elasticsearch/curl.config -L -X POST "localhost:5601/api/fl
 printf "\n\n"
 
 ### Create Policies & Associated Integration Configuration ###
+# Load packages
+/usr/sbin/so-elastic-fleet-package-load
+
+# Load Elasticsearch templates
+/usr/sbin/so-elasticsearch-templates-load
 
 # Manager Fleet Server Host
 elastic_fleet_policy_create "FleetServer_{{ GLOBALS.hostname }}" "Fleet Server - {{ GLOBALS.hostname }}" "true" "120"

@@ -62,8 +67,11 @@ curl -K /opt/so/conf/elasticsearch/curl.config -L -X PUT "localhost:5601/api/fle
 # Initial Endpoints Policy
 elastic_fleet_policy_create "endpoints-initial" "Initial Endpoint Policy" "false" "1209600"
 
-# Grid Nodes Policy
-elastic_fleet_policy_create "so-grid-nodes" "SO Grid Node Policy" "false" "1209600"
+# Grid Nodes - General Policy
+elastic_fleet_policy_create "so-grid-nodes_general" "SO Grid Nodes - General Purpose" "false" "1209600"
+
+# Grid Nodes - Heavy Node Policy
+elastic_fleet_policy_create "so-grid-nodes_heavy" "SO Grid Nodes - Heavy Node" "false" "1209600"
 
 # Load Integrations for default policies
 so-elastic-fleet-integration-policy-load
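The endpoint and grid-node policies are created with `1209600` as the last argument and the Fleet Server policy with `120`. The body of `elastic_fleet_policy_create` is outside this diff; assuming, as the magnitudes suggest, that the value is a duration in seconds, the arithmetic works out as follows (`seconds_to_days` is a throwaway helper for illustration):

```shell
#!/usr/bin/env bash
# Convert a duration in seconds to whole days (86400 seconds per day).
seconds_to_days() {
  printf '%s\n' "$(( $1 / 86400 ))"
}

seconds_to_days 1209600   # 14 * 24 * 60 * 60 = 1209600, so this prints 14
seconds_to_days 120       # 120 seconds is two minutes, so this prints 0
```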

@@ -81,7 +89,8 @@ curl -K /opt/so/conf/elasticsearch/curl.config -L -X POST "localhost:5601/api/fl
 
 # Query for Enrollment Tokens for default policies
 ENDPOINTSENROLLMENTOKEN=$(curl -K /opt/so/conf/elasticsearch/curl.config -L "localhost:5601/api/fleet/enrollment_api_keys" -H 'kbn-xsrf: true' -H 'Content-Type: application/json' | jq .list | jq -r -c '.[] | select(.policy_id | contains("endpoints-initial")) | .api_key')