mirror of

https://github.com/Security-Onion-Solutions/securityonion.git

synced 2025-12-06 17:22:49 +01:00

Merge remote-tracking branch 'origin/2.4/dev' into reyesj2/kafka

Signed-off-by: reyesj2 <94730068+reyesj2@users.noreply.github.com>


````diff
@@ -1,17 +1,17 @@
-### 2.4.60-20240320 ISO image released on 2024/03/20
+### 2.4.70-20240529 ISO image released on 2024/05/29
 
 
 ### Download and Verify
 
-2.4.60-20240320 ISO image:
-https://download.securityonion.net/file/securityonion/securityonion-2.4.60-20240320.iso
+2.4.70-20240529 ISO image:
+https://download.securityonion.net/file/securityonion/securityonion-2.4.70-20240529.iso
 
-MD5: 178DD42D06B2F32F3870E0C27219821E
-SHA1: 73EDCD50817A7F6003FE405CF1808A30D034F89D
-SHA256: DD334B8D7088A7B78160C253B680D645E25984BA5CCAB5CC5C327CA72137FC06
+MD5: 8FCCF31C2470D1ABA380AF196B611DEC
+SHA1: EE5E8F8C14819E7A1FE423E6920531A97F39600B
+SHA256: EF5E781D50D50660F452ADC54FD4911296ECBECED7879FA8E04687337CA89BEC
 
 Signature for ISO image:
-https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.60-20240320.iso.sig
+https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.70-20240529.iso.sig
 
 Signing key:
 https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.4/main/KEYS
@@ -25,22 +25,22 @@ wget https://raw.githubusercontent.com/Security-Onion-Solutions/securityonion/2.
 
 Download the signature file for the ISO:
 ```
-wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.60-20240320.iso.sig
+wget https://github.com/Security-Onion-Solutions/securityonion/raw/2.4/main/sigs/securityonion-2.4.70-20240529.iso.sig
 ```
 
 Download the ISO image:
 ```
-wget https://download.securityonion.net/file/securityonion/securityonion-2.4.60-20240320.iso
+wget https://download.securityonion.net/file/securityonion/securityonion-2.4.70-20240529.iso
 ```
 
 Verify the downloaded ISO image using the signature file:
 ```
-gpg --verify securityonion-2.4.60-20240320.iso.sig securityonion-2.4.60-20240320.iso
+gpg --verify securityonion-2.4.70-20240529.iso.sig securityonion-2.4.70-20240529.iso
 ```
 
 The output should show "Good signature" and the Primary key fingerprint should match what's shown below:
 ```
-gpg: Signature made Tue 19 Mar 2024 03:17:58 PM EDT using RSA key ID FE507013
+gpg: Signature made Wed 29 May 2024 11:40:59 AM EDT using RSA key ID FE507013
 gpg: Good signature from "Security Onion Solutions, LLC <info@securityonionsolutions.com>"
 gpg: WARNING: This key is not certified with a trusted signature!
 gpg: There is no indication that the signature belongs to the owner.
````
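The MD5/SHA1/SHA256 values published in this README hunk can serve as a quick integrity check before (not instead of) the GPG verification. A minimal sketch using only the Python standard library; `sha256_of_file` and `verify_sha256` are hypothetical helper names, not part of Security Onion.

```python
import hashlib
import hmac


def sha256_of_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Return the uppercase hex SHA256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        # Read fixed-size chunks so a multi-GB ISO never sits fully in memory.
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest().upper()


def verify_sha256(path: str, expected_hex: str) -> bool:
    """Compare a file's SHA256 with a published digest, ignoring case and surrounding whitespace."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex.strip().upper())
```

For an intact download, `verify_sha256("securityonion-2.4.70-20240529.iso", "EF5E781D50D50660F452ADC54FD4911296ECBECED7879FA8E04687337CA89BEC")` should return `True`. A matching hash only shows the file is unchanged relative to the published value; the signature check is what ties it to the signing key.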

13

README.md


@@ -8,19 +8,22 @@ Alerts

|

|||||||

|

|

||||||

|

|

||||||

Dashboards

|

Dashboards

|

||||||

|

|

||||||

|

|

||||||

Hunt

|

Hunt

|

||||||

|

|

||||||

|

|

||||||

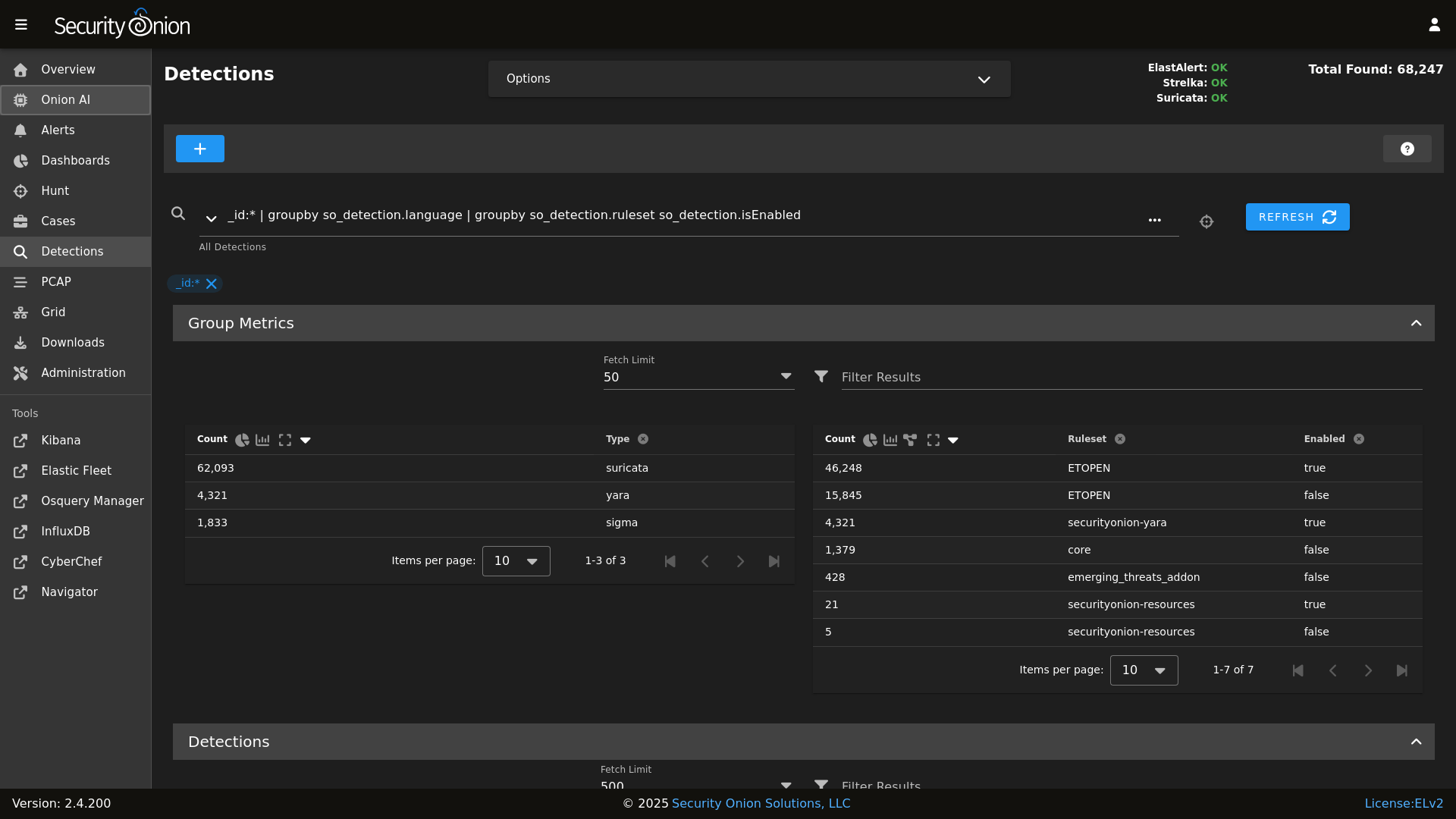

|

Detections

|

||||||

|

|

||||||

|

|

||||||

PCAP

|

PCAP

|

||||||

|

|

||||||

|

|

||||||

Grid

|

Grid

|

||||||

|

|

||||||

|

|

||||||

Config

|

Config

|

||||||

|

|

||||||

|

|

||||||

### Release Notes

|

### Release Notes

|

||||||

|

|

||||||

|

|||||||

```diff
@@ -1,3 +1,8 @@
+# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
+# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
+# https://securityonion.net/license; you may not use this file except in compliance with the
+# Elastic License 2.0.
+
 {% if '2.4' in salt['cp.get_file_str']('/etc/soversion') %}
 
 {% import_yaml '/opt/so/saltstack/local/pillar/global/soc_global.sls' as SOC_GLOBAL %}
```

```diff
@@ -15,6 +20,8 @@ remove_common_so-firewall:
 file.absent:
 - name: /opt/so/saltstack/default/salt/common/tools/sbin/so-firewall
 
+# This section is used to put the scripts in place in the Salt file system
+# in case a state run tries to overwrite what we do in the next section.
 copy_so-common_common_tools_sbin:
 file.copy:
 - name: /opt/so/saltstack/default/salt/common/tools/sbin/so-common
```

```diff
@@ -43,6 +50,15 @@ copy_so-firewall_manager_tools_sbin:
 - force: True
 - preserve: True
 
+copy_so-yaml_manager_tools_sbin:
+file.copy:
+- name: /opt/so/saltstack/default/salt/manager/tools/sbin/so-yaml.py
+- source: {{UPDATE_DIR}}/salt/manager/tools/sbin/so-yaml.py
+- force: True
+- preserve: True
+
+# This section is used to put the new script in place so that it can be called during soup.
+# It is faster than calling the states that normally manage them to put them in place.
 copy_so-common_sbin:
 file.copy:
 - name: /usr/sbin/so-common
```

```diff
@@ -179,6 +179,21 @@ copy_new_files() {
 cd /tmp
 }
 
+create_local_directories() {
+echo "Creating local pillar and salt directories if needed"
+PILLARSALTDIR=$1
+local_salt_dir="/opt/so/saltstack/local"
+for i in "pillar" "salt"; do
+for d in $(find $PILLARSALTDIR/$i -type d); do
+suffixdir=${d//$PILLARSALTDIR/}
+if [ ! -d "$local_salt_dir/$suffixdir" ]; then
+mkdir -pv $local_salt_dir$suffixdir
+fi
+done
+chown -R socore:socore $local_salt_dir/$i
+done
+}
+
 disable_fastestmirror() {
 sed -i 's/enabled=1/enabled=0/' /etc/yum/pluginconf.d/fastestmirror.conf
 }
```
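The new `create_local_directories` helper mirrors every directory found under `$PILLARSALTDIR/pillar` and `$PILLARSALTDIR/salt` into `/opt/so/saltstack/local`. For illustration only, the same traversal could be sketched in Python as follows. The function name matches the shell function but the `local_root` parameter is hypothetical, and the `chown -R socore:socore` step is omitted. The shell version strips every occurrence of `$PILLARSALTDIR` from each path (`${d//$PILLARSALTDIR/}`); this sketch uses a relative path instead, which gives the same result when the prefix appears only once.

```python
import os


def create_local_directories(pillar_salt_dir: str,
                             local_root: str = "/opt/so/saltstack/local") -> list:
    """Mirror the directory trees of <pillar_salt_dir>/{pillar,salt} under local_root.

    Returns the directories that were newly created.
    """
    created = []
    for top in ("pillar", "salt"):
        source_top = os.path.join(pillar_salt_dir, top)
        # os.walk yields source_top itself first, like `find <dir> -type d`.
        for dirpath, _dirnames, _filenames in os.walk(source_top):
            suffix = os.path.relpath(dirpath, pillar_salt_dir)
            target = os.path.join(local_root, suffix)
            if not os.path.isdir(target):
                os.makedirs(target)
                created.append(target)
    return created
```

Like `mkdir -pv` guarded by `[ ! -d ... ]`, a second run creates nothing, so calling it on every upgrade is safe.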

```diff
@@ -201,6 +201,10 @@ if [[ $EXCLUDE_KNOWN_ERRORS == 'Y' ]]; then
 EXCLUDED_ERRORS="$EXCLUDED_ERRORS|Unknown column" # Elastalert errors from running EQL queries
 EXCLUDED_ERRORS="$EXCLUDED_ERRORS|parsing_exception" # Elastalert EQL parsing issue. Temp.
 EXCLUDED_ERRORS="$EXCLUDED_ERRORS|context deadline exceeded"
+EXCLUDED_ERRORS="$EXCLUDED_ERRORS|Error running query:" # Specific issues with detection rules
+EXCLUDED_ERRORS="$EXCLUDED_ERRORS|detect-parse" # Suricata encountering a malformed rule
+EXCLUDED_ERRORS="$EXCLUDED_ERRORS|integrity check failed" # Detections: Exclude false positive due to automated testing
+EXCLUDED_ERRORS="$EXCLUDED_ERRORS|syncErrors" # Detections: Not an actual error
 fi
 
 RESULT=0
```
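`EXCLUDED_ERRORS` accumulates patterns joined by `|`, which reads as an extended-regex alternation. Assuming the script later filters log lines with something like `grep -vE "$EXCLUDED_ERRORS"` (that step is outside this hunk), the effect can be sketched in Python. The patterns are copied from the hunk; `filter_known_errors` and the sample lines are hypothetical.

```python
import re

# Patterns visible in this hunk (a subset of the full EXCLUDED_ERRORS list).
KNOWN_ERROR_PATTERNS = [
    "Unknown column",
    "parsing_exception",
    "context deadline exceeded",
    "Error running query:",
    "detect-parse",
    "integrity check failed",
    "syncErrors",
]

# One alternation; re.escape keeps any regex metacharacters in future
# patterns from being interpreted as syntax.
EXCLUDED = re.compile("|".join(re.escape(p) for p in KNOWN_ERROR_PATTERNS))


def filter_known_errors(lines):
    """Return only the log lines that match none of the known-error patterns."""
    return [line for line in lines if not EXCLUDED.search(line)]
```

Because the match is a substring search, a broad pattern such as `syncErrors` hides every line containing that token, which is why each addition in the hunk carries a comment justifying it.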

98

salt/common/tools/sbin/so-luks-tpm-regen

Normal file


```diff
@@ -0,0 +1,98 @@
+#!/bin/bash
+#
+# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
+# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
+# https://securityonion.net/license; you may not use this file except in compliance with the
+# Elastic License 2.0."
+
+set -e
+# This script is intended to be used in the case the ISO install did not properly setup TPM decrypt for LUKS partitions at boot.
+if [ -z $NOROOT ]; then
+# Check for prerequisites
+if [ "$(id -u)" -ne 0 ]; then
+echo "This script must be run using sudo!"
+exit 1
+fi
+fi
+ENROLL_TPM=N
+
+while [[ $# -gt 0 ]]; do
+case $1 in
+--enroll-tpm)
+ENROLL_TPM=Y
+;;
+*)
+echo "Usage: $0 [options]"
+echo ""
+echo "where options are:"
+echo " --enroll-tpm for when TPM enrollment was not selected during ISO install."
+echo ""
+exit 1
+;;
+esac
+shift
+done
+
+check_for_tpm() {
+echo -n "Checking for TPM: "
+if [ -d /sys/class/tpm/tpm0 ]; then
+echo -e "tpm0 found."
+TPM="yes"
+# Check if TPM is using sha1 or sha256
+if [ -d /sys/class/tpm/tpm0/pcr-sha1 ]; then
+echo -e "TPM is using sha1.\n"
+TPM_PCR="sha1"
+elif [ -d /sys/class/tpm/tpm0/pcr-sha256 ]; then
+echo -e "TPM is using sha256.\n"
+TPM_PCR="sha256"
+fi
+else
+echo -e "No TPM found.\n"
+exit 1
+fi
+}
+
+check_for_luks_partitions() {
+echo "Checking for LUKS partitions"
+for part in $(lsblk -o NAME,FSTYPE -ln | grep crypto_LUKS | awk '{print $1}'); do
+echo "Found LUKS partition: $part"
+LUKS_PARTITIONS+=("$part")
+done
+if [ ${#LUKS_PARTITIONS[@]} -eq 0 ]; then
+echo -e "No LUKS partitions found.\n"
+exit 1
+fi
+echo ""
+}
+
+enroll_tpm_in_luks() {
+read -s -p "Enter the LUKS passphrase used during ISO install: " LUKS_PASSPHRASE
+echo ""
+for part in "${LUKS_PARTITIONS[@]}"; do
+echo "Enrolling TPM for LUKS device: /dev/$part"
+if [ "$TPM_PCR" == "sha1" ]; then
+clevis luks bind -d /dev/$part tpm2 '{"pcr_bank":"sha1","pcr_ids":"7"}' <<< $LUKS_PASSPHRASE
+elif [ "$TPM_PCR" == "sha256" ]; then
+clevis luks bind -d /dev/$part tpm2 '{"pcr_bank":"sha256","pcr_ids":"7"}' <<< $LUKS_PASSPHRASE
+fi
+done
+}
+
+regenerate_tpm_enrollment_token() {
+for part in "${LUKS_PARTITIONS[@]}"; do
+clevis luks regen -d /dev/$part -s 1 -q
+done
+}
+
+check_for_tpm
+check_for_luks_partitions
+
+if [[ $ENROLL_TPM == "Y" ]]; then
+enroll_tpm_in_luks
+else
+regenerate_tpm_enrollment_token
+fi
+
+echo "Running dracut"
+dracut -fv
+echo -e "\nTPM configuration complete. Reboot the system to verify the TPM is correctly decrypting the LUKS partition(s) at boot.\n"
```
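In `so-luks-tpm-regen`, `check_for_tpm` picks the PCR bank by probing sysfs (`pcr-sha1` first, then `pcr-sha256`), and `enroll_tpm_in_luks` passes the matching JSON policy to `clevis luks bind ... tpm2`. A hedged Python sketch of that selection logic, parameterized on the TPM directory so it can be exercised without real hardware; `select_pcr_bank` and `clevis_tpm2_config` are hypothetical helpers, not part of the script.

```python
import json
import os
from typing import Optional


def select_pcr_bank(tpm_dir: str = "/sys/class/tpm/tpm0") -> Optional[str]:
    """Mirror check_for_tpm: return 'sha1' or 'sha256', or None if neither bank exists.

    Raises FileNotFoundError when the TPM directory is missing, which corresponds
    to the script's "No TPM found." exit.
    """
    if not os.path.isdir(tpm_dir):
        raise FileNotFoundError(f"No TPM found at {tpm_dir}")
    for bank in ("sha1", "sha256"):  # same probe order as the shell script
        if os.path.isdir(os.path.join(tpm_dir, f"pcr-{bank}")):
            return bank
    return None


def clevis_tpm2_config(bank: str, pcr_ids: str = "7") -> str:
    """Build the compact JSON policy string handed to the clevis tpm2 pin."""
    return json.dumps({"pcr_bank": bank, "pcr_ids": pcr_ids}, separators=(",", ":"))
```

Returning `None` makes explicit a case the script leaves implicit: if neither bank directory exists, `TPM_PCR` stays unset and `enroll_tpm_in_luks` loops over the partitions without binding anything.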

```diff
@@ -248,7 +248,7 @@ fi
 START_OLDEST_SLASH=$(echo $START_OLDEST | sed -e 's/-/%2F/g')
 END_NEWEST_SLASH=$(echo $END_NEWEST | sed -e 's/-/%2F/g')
 if [[ $VALID_PCAPS_COUNT -gt 0 ]] || [[ $SKIPPED_PCAPS_COUNT -gt 0 ]]; then
-URL="https://{{ URLBASE }}/#/dashboards?q=$HASH_FILTERS%20%7C%20groupby%20-sankey%20event.dataset%20event.category%2a%20%7C%20groupby%20-pie%20event.category%20%7C%20groupby%20-bar%20event.module%20%7C%20groupby%20event.dataset%20%7C%20groupby%20event.module%20%7C%20groupby%20event.category%20%7C%20groupby%20observer.name%20%7C%20groupby%20source.ip%20%7C%20groupby%20destination.ip%20%7C%20groupby%20destination.port&t=${START_OLDEST_SLASH}%2000%3A00%3A00%20AM%20-%20${END_NEWEST_SLASH}%2000%3A00%3A00%20AM&z=UTC"
+URL="https://{{ URLBASE }}/#/dashboards?q=$HASH_FILTERS%20%7C%20groupby%20event.module*%20%7C%20groupby%20-sankey%20event.module*%20event.dataset%20%7C%20groupby%20event.dataset%20%7C%20groupby%20source.ip%20%7C%20groupby%20destination.ip%20%7C%20groupby%20destination.port%20%7C%20groupby%20network.protocol%20%7C%20groupby%20rule.name%20rule.category%20event.severity_label%20%7C%20groupby%20dns.query.name%20%7C%20groupby%20file.mime_type%20%7C%20groupby%20http.virtual_host%20http.uri%20%7C%20groupby%20notice.note%20notice.message%20notice.sub_message%20%7C%20groupby%20ssl.server_name%20%7C%20groupby%20source_geo.organization_name%20source.geo.country_name%20%7C%20groupby%20destination_geo.organization_name%20destination.geo.country_name&t=${START_OLDEST_SLASH}%2000%3A00%3A00%20AM%20-%20${END_NEWEST_SLASH}%2000%3A00%3A00%20AM&z=UTC"
 
 status "Import complete!"
 status
```
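The rewritten Dashboards URL is a pipe-separated query percent-encoded by hand (`%20` for spaces, `%7C` for `|`), and the dates in the time range are converted from `YYYY-MM-DD` to `YYYY%2FMM%2FDD` by `sed -e 's/-/%2F/g'`. As a sketch, assuming Python's `urllib.parse.quote` is an acceptable stand-in for the hand encoding, the same pieces could be generated instead of maintained as one long literal; `encode_query` and `encode_date` are hypothetical names, and the `$HASH_FILTERS` prefix is not covered.

```python
from urllib.parse import quote


def encode_query(groupbys):
    """Join groupby clauses as ' | groupby <clause>' and percent-encode the result.

    '*' is kept literal to match `event.module*` in the new URL; letters, digits
    and '_.-~' are never encoded by quote().
    """
    query = "".join(f" | groupby {clause}" for clause in groupbys)
    return quote(query, safe="*")


def encode_date(date: str) -> str:
    """Equivalent of `sed -e 's/-/%2F/g'`: '2024-05-29' -> '2024%2F05%2F29'."""
    return date.replace("-", "%2F")
```

For example, `encode_query(["event.module*", "-sankey event.module* event.dataset"])` reproduces the first two groupby segments of the new `q=` parameter.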

```diff
@@ -82,6 +82,36 @@ elastasomodulesync:
 - group: 933
 - makedirs: True
 
+elastacustomdir:
+file.directory:
+- name: /opt/so/conf/elastalert/custom
+- user: 933
+- group: 933
+- makedirs: True
+
+elastacustomsync:
+file.recurse:
+- name: /opt/so/conf/elastalert/custom
+- source: salt://elastalert/files/custom
+- user: 933
+- group: 933
+- makedirs: True
+- file_mode: 660
+- show_changes: False
+
+elastapredefinedsync:
+file.recurse:
+- name: /opt/so/conf/elastalert/predefined
+- source: salt://elastalert/files/predefined
+- user: 933
+- group: 933
+- makedirs: True
+- template: jinja
+- file_mode: 660
+- context:
+elastalert: {{ ELASTALERTMERGED }}
+- show_changes: False
+
 elastaconf:
 file.managed:
 - name: /opt/so/conf/elastalert/elastalert_config.yaml
```

```diff
@@ -1,5 +1,6 @@
 elastalert:
 enabled: False
+alerter_parameters: ""
 config:
 rules_folder: /opt/elastalert/rules/
 scan_subdirectories: true
```

```diff
@@ -30,6 +30,8 @@ so-elastalert:
 - /opt/so/rules/elastalert:/opt/elastalert/rules/:ro
 - /opt/so/log/elastalert:/var/log/elastalert:rw
 - /opt/so/conf/elastalert/modules/:/opt/elastalert/modules/:ro
+- /opt/so/conf/elastalert/predefined/:/opt/elastalert/predefined/:ro
+- /opt/so/conf/elastalert/custom/:/opt/elastalert/custom/:ro
 - /opt/so/conf/elastalert/elastalert_config.yaml:/opt/elastalert/config.yaml:ro
 {% if DOCKER.containers['so-elastalert'].custom_bind_mounts %}
 {% for BIND in DOCKER.containers['so-elastalert'].custom_bind_mounts %}
```

1

salt/elastalert/files/custom/placeholder

Normal file


```diff
@@ -0,0 +1 @@
+THIS IS A PLACEHOLDER FILE
```

```diff
@@ -56,7 +56,7 @@ class SecurityOnionESAlerter(Alerter):
 "event_data": match,
 "@timestamp": timestamp
 }
-url = f"https://{self.rule['es_host']}:{self.rule['es_port']}/logs-playbook.alerts-so/_doc/"
+url = f"https://{self.rule['es_host']}:{self.rule['es_port']}/logs-detections.alerts-so/_doc/"
 requests.post(url, data=json.dumps(payload), headers=headers, verify=False, auth=creds)
 
 def get_info(self):
```

6

salt/elastalert/files/predefined/jira_auth.yaml

Normal file


```diff
@@ -0,0 +1,6 @@
+{% if elastalert.get('jira_user', '') | length > 0 and elastalert.get('jira_pass', '') | length > 0 %}
+user: {{ elastalert.jira_user }}
+password: {{ elastalert.jira_pass }}
+{% else %}
+apikey: {{ elastalert.get('jira_api_key', '') }}
+{% endif %}
```
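The new `jira_auth.yaml` template emits a username/password pair only when both are non-empty, and otherwise falls back to an API key. A small Python rendering of that branch, assuming the `elastalert` pillar is available as a plain dict; `render_jira_auth` is a hypothetical name, and it returns a dict rather than YAML text.

```python
def render_jira_auth(elastalert: dict) -> dict:
    """Reproduce the branch logic of salt/elastalert/files/predefined/jira_auth.yaml."""
    user = elastalert.get("jira_user", "")
    password = elastalert.get("jira_pass", "")
    if len(user) > 0 and len(password) > 0:
        return {"user": user, "password": password}
    # Mirrors `apikey: {{ elastalert.get('jira_api_key', '') }}`.
    return {"apikey": elastalert.get("jira_api_key", "")}
```

A username without a password therefore yields an (empty) `apikey` entry rather than a partial user/password pair.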

2

salt/elastalert/files/predefined/smtp_auth.yaml

Normal file


```diff
@@ -0,0 +1,2 @@
+user: {{ elastalert.get('smtp_user', '') }}
+password: {{ elastalert.get('smtp_pass', '') }}
```

```diff
@@ -13,3 +13,19 @@
 {% do ELASTALERTDEFAULTS.elastalert.config.update({'es_password': pillar.elasticsearch.auth.users.so_elastic_user.pass}) %}
 
 {% set ELASTALERTMERGED = salt['pillar.get']('elastalert', ELASTALERTDEFAULTS.elastalert, merge=True) %}
+
+{% if 'ntf' in salt['pillar.get']('features', []) %}
+{% set params = ELASTALERTMERGED.get('alerter_parameters', '') | load_yaml %}
+{% if params != None and params | length > 0 %}
+{% do ELASTALERTMERGED.config.update(params) %}
+{% endif %}
+
+{% if ELASTALERTMERGED.get('smtp_user', '') | length > 0 %}
+{% do ELASTALERTMERGED.config.update({'smtp_auth_file': '/opt/elastalert/predefined/smtp_auth.yaml'}) %}
+{% endif %}
+
+{% if ELASTALERTMERGED.get('jira_user', '') | length > 0 or ELASTALERTMERGED.get('jira_key', '') | length > 0 %}
+{% do ELASTALERTMERGED.config.update({'jira_account_file': '/opt/elastalert/predefined/jira_auth.yaml'}) %}
+{% endif %}
+
+{% endif %}
```
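When the `ntf` feature is present, this map file merges the YAML from `alerter_parameters` into `ELASTALERTMERGED.config` and points ElastAlert at the predefined SMTP and Jira auth files when credentials are set. The Python sketch below mirrors that control flow, assuming `alerter_parameters` has already been parsed into a dict (the Jinja `load_yaml` filter does that step; `None` stands in for an empty string). The function name is hypothetical. The Jira condition tests a `jira_key` setting, whereas the annotations in this commit define `jira_api_key`; the sketch keeps the key names exactly as the template writes them.

```python
def merge_alerter_config(merged: dict, features: list, params) -> dict:
    """Mirror the 'ntf' block of the elastalert map file.

    merged:   the ELASTALERTMERGED structure (must contain a 'config' dict)
    features: the pillar 'features' list
    params:   alerter_parameters already parsed from YAML, or None
    """
    if "ntf" not in features:
        return merged
    config = merged["config"]
    if params is not None and len(params) > 0:
        config.update(params)
    if len(merged.get("smtp_user", "")) > 0:
        config["smtp_auth_file"] = "/opt/elastalert/predefined/smtp_auth.yaml"
    # Same keys as the template: 'jira_user' or 'jira_key'.
    if len(merged.get("jira_user", "")) > 0 or len(merged.get("jira_key", "")) > 0:
        config["jira_account_file"] = "/opt/elastalert/predefined/jira_auth.yaml"
    return merged
```

Under this condition, a pillar that sets only `jira_api_key` does not get `jira_account_file`, so the API-key branch of `jira_auth.yaml` would be rendered but not referenced.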

```diff
@@ -2,6 +2,99 @@ elastalert:
 enabled:
 description: You can enable or disable Elastalert.
 helpLink: elastalert.html
+alerter_parameters:
+title: Alerter Parameters
+description: Optional configuration parameters for additional alerters that can be enabled for all Sigma rules. Filter for 'Alerter' in this Configuration screen to find the setting that allows these alerters to be enabled within the SOC ElastAlert module. Use YAML format for these parameters, and reference the ElastAlert 2 documentation, located at https://elastalert2.readthedocs.io, for available alerters and their required configuration parameters. A full update of the ElastAlert rule engine, via the Detections screen, is required in order to apply these changes. Requires a valid Security Onion license key.
+global: True
+multiline: True
+syntax: yaml
+helpLink: elastalert.html
+forcedType: string
+jira_api_key:
+title: Jira API Key
+description: Optional configuration parameter for Jira API Key, used instead of the Jira username and password. Requires a valid Security Onion license key.
+global: True
+sensitive: True
+helpLink: elastalert.html
+forcedType: string
+jira_pass:
+title: Jira Password
+description: Optional configuration parameter for Jira password. Requires a valid Security Onion license key.
+global: True
+sensitive: True
+helpLink: elastalert.html
+forcedType: string
+jira_user:
+title: Jira Username
+description: Optional configuration parameter for Jira username. Requires a valid Security Onion license key.
+global: True
+helpLink: elastalert.html
+forcedType: string
+smtp_pass:
+title: SMTP Password
+description: Optional configuration parameter for SMTP password, required for authenticating email servers. Requires a valid Security Onion license key.
+global: True
+sensitive: True
+helpLink: elastalert.html
+forcedType: string
+smtp_user:
+title: SMTP Username
+description: Optional configuration parameter for SMTP username, required for authenticating email servers. Requires a valid Security Onion license key.
+global: True
+helpLink: elastalert.html
+forcedType: string
+files:
+custom:
+alertmanager_ca__crt:
+description: Optional custom Certificate Authority for connecting to an AlertManager server. To utilize this custom file, the alertmanager_ca_certs key must be set to /opt/elastalert/custom/alertmanager_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
+global: True
+file: True
+helpLink: elastalert.html
+gelf_ca__crt:
+description: Optional custom Certificate Authority for connecting to a Graylog server. To utilize this custom file, the graylog_ca_certs key must be set to /opt/elastalert/custom/graylog_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
+global: True
+file: True
+helpLink: elastalert.html
+http_post_ca__crt:
+description: Optional custom Certificate Authority for connecting to a generic HTTP server, via the legacy HTTP POST alerter. To utilize this custom file, the http_post_ca_certs key must be set to /opt/elastalert/custom/http_post2_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
+global: True
+file: True
+helpLink: elastalert.html
+http_post2_ca__crt:
+description: Optional custom Certificate Authority for connecting to a generic HTTP server, via the newer HTTP POST 2 alerter. To utilize this custom file, the http_post2_ca_certs key must be set to /opt/elastalert/custom/http_post2_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
+global: True
+file: True
+helpLink: elastalert.html
+ms_teams_ca__crt:
+description: Optional custom Certificate Authority for connecting to Microsoft Teams server. To utilize this custom file, the ms_teams_ca_certs key must be set to /opt/elastalert/custom/ms_teams_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
+global: True
+file: True
+helpLink: elastalert.html
+pagerduty_ca__crt:
+description: Optional custom Certificate Authority for connecting to PagerDuty server. To utilize this custom file, the pagerduty_ca_certs key must be set to /opt/elastalert/custom/pagerduty_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
+global: True
+file: True
+helpLink: elastalert.html
+rocket_chat_ca__crt:
+description: Optional custom Certificate Authority for connecting to PagerDuty server. To utilize this custom file, the rocket_chart_ca_certs key must be set to /opt/elastalert/custom/rocket_chat_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
+global: True
+file: True
+helpLink: elastalert.html
+smtp__crt:
+description: Optional custom certificate for connecting to an SMTP server. To utilize this custom file, the smtp_cert_file key must be set to /opt/elastalert/custom/smtp.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
+global: True
+file: True
+helpLink: elastalert.html
+smtp__key:
+description: Optional custom certificate key for connecting to an SMTP server. To utilize this custom file, the smtp_key_file key must be set to /opt/elastalert/custom/smtp.key in the Alerter Parameters setting. Requires a valid Security Onion license key.
+global: True
+file: True
+helpLink: elastalert.html
+slack_ca__crt:
+description: Optional custom Certificate Authority for connecting to Slack. To utilize this custom file, the slack_ca_certs key must be set to /opt/elastalert/custom/slack_ca.crt in the Alerter Parameters setting. Requires a valid Security Onion license key.
+global: True
+file: True
+helpLink: elastalert.html
 config:
 disable_rules_on_error:
 description: Disable rules on failure.
```
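Each entry under `files: custom:` appears to correspond to a file name obtained by replacing the double underscore with a dot (for example `smtp__key` becomes `smtp.key`), placed in `/opt/elastalert/custom/`, which the container mounts read-only. That convention is inferred from the descriptions, not stated in this diff. A minimal Python sketch of it, with the hypothetical helper `custom_file_path`, also makes it easy to spot descriptions that diverge from the pattern.

```python
CUSTOM_DIR = "/opt/elastalert/custom"

# Path named in each files.custom description in this hunk.
DESCRIBED_PATHS = {
    "alertmanager_ca__crt": "/opt/elastalert/custom/alertmanager_ca.crt",
    "gelf_ca__crt": "/opt/elastalert/custom/graylog_ca.crt",
    "http_post_ca__crt": "/opt/elastalert/custom/http_post2_ca.crt",
    "http_post2_ca__crt": "/opt/elastalert/custom/http_post2_ca.crt",
    "ms_teams_ca__crt": "/opt/elastalert/custom/ms_teams_ca.crt",
    "pagerduty_ca__crt": "/opt/elastalert/custom/pagerduty_ca.crt",
    "rocket_chat_ca__crt": "/opt/elastalert/custom/rocket_chat_ca.crt",
    "smtp__crt": "/opt/elastalert/custom/smtp.crt",
    "smtp__key": "/opt/elastalert/custom/smtp.key",
    "slack_ca__crt": "/opt/elastalert/custom/slack_ca.crt",
}


def custom_file_path(setting_key: str) -> str:
    """Map a files.custom setting key to its expected path (inferred convention)."""
    return f"{CUSTOM_DIR}/{setting_key.replace('__', '.')}"


def mismatches(described: dict) -> list:
    """Return the setting keys whose described path differs from the inferred one."""
    return sorted(k for k, path in described.items() if custom_file_path(k) != path)
```

Running `mismatches(DESCRIBED_PATHS)` flags `gelf_ca__crt` and `http_post_ca__crt`; whether the descriptions or the inferred convention are authoritative is not settled by this diff.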

```diff
@@ -72,5 +72,5 @@ do
 printf "\n### $GOOS/$GOARCH Installer Generated...\n"
 done
 
-printf "\n### Cleaning up temp files in /nsm/elastic-agent-workspace"
+printf "\n### Cleaning up temp files in /nsm/elastic-agent-workspace\n"
 rm -rf /nsm/elastic-agent-workspace
```

```diff
@@ -3591,6 +3591,68 @@ elasticsearch:
 set_priority:
 priority: 50
 min_age: 30d
+so-logs-detections_x_alerts:
+index_sorting: false
+index_template:
+composed_of:
+- so-data-streams-mappings
+- so-fleet_globals-1
+- so-fleet_agent_id_verification-1
+- so-logs-mappings
+- so-logs-settings
+data_stream:
+allow_custom_routing: false
+hidden: false
+index_patterns:
+- logs-detections.alerts-*
+priority: 501
+template:
+mappings:
+_meta:
+managed: true
+managed_by: security_onion
+package:
+name: elastic_agent
+settings:
+index:
+lifecycle:
+name: so-logs-detections.alerts-so
+mapping:
+total_fields:
+limit: 5001
+number_of_replicas: 0
+sort:
+field: '@timestamp'
+order: desc
+policy:
+_meta:
+managed: true
+managed_by: security_onion
+package:
+name: elastic_agent
+phases:
+cold:
+actions:
+set_priority:
+priority: 0
+min_age: 60d
+delete:
+actions:
+delete: {}
+min_age: 365d
+hot:
+actions:
+rollover:
+max_age: 1d
+max_primary_shard_size: 50gb
+set_priority:
+priority: 100
+min_age: 0ms
+warm:
+actions:
+set_priority:
+priority: 50
+min_age: 30d
 so-logs-elastic_agent:
 index_sorting: false
 index_template:
```
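Two properties of the new `so-logs-detections_x_alerts` settings are easy to check mechanically: the alerter's new target index, `logs-detections.alerts-so`, should match the template's `index_patterns` entry `logs-detections.alerts-*` (the old `logs-playbook.alerts-so` does not), and the ILM phases should have non-decreasing `min_age` values in hot, warm, cold, delete order. A hedged Python sketch, using `fnmatch.fnmatchcase` as an approximation of Elasticsearch's wildcard matching; the duration parser handles only the `ms`, `s`, `m`, `h` and `d` units, and all names are hypothetical.

```python
from fnmatch import fnmatchcase

INDEX_PATTERNS = ["logs-detections.alerts-*"]

# min_age values from the policy in this hunk.
PHASE_MIN_AGE = {"hot": "0ms", "warm": "30d", "cold": "60d", "delete": "365d"}
PHASE_ORDER = ["hot", "warm", "cold", "delete"]

_UNIT_MS = {"ms": 1, "s": 1_000, "m": 60_000, "h": 3_600_000, "d": 86_400_000}


def to_ms(value: str) -> int:
    """Convert a duration such as '30d' or '0ms' to milliseconds."""
    # 'ms' must be tested before 's' and 'm', since it ends with both.
    for unit in ("ms", "s", "m", "h", "d"):
        if value.endswith(unit):
            return int(value[: -len(unit)]) * _UNIT_MS[unit]
    raise ValueError(f"unsupported duration: {value}")


def matches_template(index: str) -> bool:
    """True if the index name matches any of the template's index patterns."""
    return any(fnmatchcase(index, pattern) for pattern in INDEX_PATTERNS)


def phases_in_order(min_ages: dict) -> bool:
    """True if min_age never decreases across the phases that are present."""
    ages = [to_ms(min_ages[p]) for p in PHASE_ORDER if p in min_ages]
    return all(a <= b for a, b in zip(ages, ages[1:]))
```

With the values above, `phases_in_order(PHASE_MIN_AGE)` holds, and only the new index name matches the template pattern.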

@@ -56,6 +56,7 @@

|

|||||||

{ "set": { "if": "ctx.exiftool?.Subsystem != null", "field": "host.subsystem", "value": "{{exiftool.Subsystem}}", "ignore_failure": true }},

|

{ "set": { "if": "ctx.exiftool?.Subsystem != null", "field": "host.subsystem", "value": "{{exiftool.Subsystem}}", "ignore_failure": true }},

|

||||||

{ "set": { "if": "ctx.scan?.yara?.matches instanceof List", "field": "rule.name", "value": "{{scan.yara.matches.0}}" }},

|

{ "set": { "if": "ctx.scan?.yara?.matches instanceof List", "field": "rule.name", "value": "{{scan.yara.matches.0}}" }},

|

||||||

{ "set": { "if": "ctx.rule?.name != null", "field": "event.dataset", "value": "alert", "override": true }},

|

{ "set": { "if": "ctx.rule?.name != null", "field": "event.dataset", "value": "alert", "override": true }},

|

||||||

|

{ "set": { "if": "ctx.rule?.name != null", "field": "rule.uuid", "value": "{{rule.name}}", "override": true }},

|

||||||

{ "rename": { "field": "file.flavors.mime", "target_field": "file.mime_type", "ignore_missing": true }},

|

{ "rename": { "field": "file.flavors.mime", "target_field": "file.mime_type", "ignore_missing": true }},

|

||||||

{ "set": { "if": "ctx.rule?.name != null && ctx.rule?.score == null", "field": "event.severity", "value": 3, "override": true } },

|

{ "set": { "if": "ctx.rule?.name != null && ctx.rule?.score == null", "field": "event.severity", "value": 3, "override": true } },

|

||||||

{ "convert" : { "if": "ctx.rule?.score != null", "field" : "rule.score","type": "integer"}},

|

{ "convert" : { "if": "ctx.rule?.score != null", "field" : "rule.score","type": "integer"}},

|

||||||

@@ -394,6 +394,7 @@ elasticsearch:
 so-logs-darktrace_x_ai_analyst_alert: *indexSettings
 so-logs-darktrace_x_model_breach_alert: *indexSettings
 so-logs-darktrace_x_system_status_alert: *indexSettings
+so-logs-detections_x_alerts: *indexSettings
 so-logs-f5_bigip_x_log: *indexSettings
 so-logs-fim_x_event: *indexSettings
 so-logs-fortinet_x_clientendpoint: *indexSettings

@@ -2,12 +2,10 @@
 {% set DEFAULT_GLOBAL_OVERRIDES = ELASTICSEARCHDEFAULTS.elasticsearch.index_settings.pop('global_overrides') %}
 
 {% set PILLAR_GLOBAL_OVERRIDES = {} %}
-{% if salt['pillar.get']('elasticsearch:index_settings') is defined %}
-{% set ES_INDEX_PILLAR = salt['pillar.get']('elasticsearch:index_settings') %}
+{% set ES_INDEX_PILLAR = salt['pillar.get']('elasticsearch:index_settings', {}) %}
 {% if ES_INDEX_PILLAR.global_overrides is defined %}
 {% set PILLAR_GLOBAL_OVERRIDES = ES_INDEX_PILLAR.pop('global_overrides') %}
 {% endif %}
-{% endif %}
 
 {% set ES_INDEX_SETTINGS_ORIG = ELASTICSEARCHDEFAULTS.elasticsearch.index_settings %}
 

@@ -19,6 +17,12 @@
 {% set ES_INDEX_SETTINGS = {} %}
 {% do ES_INDEX_SETTINGS_GLOBAL_OVERRIDES.update(salt['defaults.merge'](ES_INDEX_SETTINGS_GLOBAL_OVERRIDES, ES_INDEX_PILLAR, in_place=False)) %}
 {% for index, settings in ES_INDEX_SETTINGS_GLOBAL_OVERRIDES.items() %}
+{# if policy isn't defined in the original index settings, then dont merge policy from the global_overrides #}
+{# this will prevent so-elasticsearch-ilm-policy-load from trying to load policy on non ILM manged indices #}
+{% if not ES_INDEX_SETTINGS_ORIG[index].policy is defined and ES_INDEX_SETTINGS_GLOBAL_OVERRIDES[index].policy is defined %}
+{% do ES_INDEX_SETTINGS_GLOBAL_OVERRIDES[index].pop('policy') %}
+{% endif %}
+
 {% if settings.index_template is defined %}
 {% if not settings.get('index_sorting', False) | to_bool and settings.index_template.template.settings.index.sort is defined %}
 {% do settings.index_template.template.settings.index.pop('sort') %}
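The new Jinja block keeps a `policy` from `global_overrides` only for indices whose original default settings already define one. This stops `so-elasticsearch-ilm-policy-load` from attaching a policy to indices that are not ILM-managed. The sketch below restates that rule in Python, assuming plain dicts stand in for the Salt defaults and merged pillar data. `drop_unmanaged_policies` is a hypothetical name. An index missing from the defaults is treated here as having no policy, which is a choice made for the sketch.

```python
def drop_unmanaged_policies(orig: dict, merged: dict) -> dict:
    """Remove 'policy' from merged index settings when the original defaults had none."""
    for index, settings in merged.items():
        # Mirrors: not ES_INDEX_SETTINGS_ORIG[index].policy is defined
        #          and ES_INDEX_SETTINGS_GLOBAL_OVERRIDES[index].policy is defined
        if "policy" not in orig.get(index, {}) and "policy" in settings:
            settings.pop("policy")
    return merged


if __name__ == "__main__":
    defaults = {"so-logs-a": {"policy": {"phases": {}}}, "so-logs-b": {}}
    overrides = {
        "so-logs-a": {"policy": {"phases": {"hot": {}}}},
        "so-logs-b": {"policy": {"phases": {"hot": {}}}, "index_sorting": False},
    }
    print(drop_unmanaged_policies(defaults, overrides))
```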

@@ -5,6 +5,6 @@
 # https://securityonion.net/license; you may not use this file except in compliance with the
 # Elastic License 2.0.
 
+. /usr/sbin/so-common
 
-
-curl -K /opt/so/conf/elasticsearch/curl.config-X GET -k -L "https://localhost:9200/_cat/indices?v&s=index"
+curl -K /opt/so/conf/elasticsearch/curl.config -s -k -L "https://localhost:9200/_cat/indices?pretty&v&s=index"

@@ -133,7 +133,7 @@ if [ ! -f $STATE_FILE_SUCCESS ]; then
 for i in $pattern; do
 TEMPLATE=${i::-14}
 COMPONENT_PATTERN=${TEMPLATE:3}
-MATCH=$(echo "$TEMPLATE" | grep -E "^so-logs-|^so-metrics" | grep -v osquery)
+MATCH=$(echo "$TEMPLATE" | grep -E "^so-logs-|^so-metrics" | grep -vE "detections|osquery")
 if [[ -n "$MATCH" && ! "$COMPONENT_LIST" =~ "$COMPONENT_PATTERN" ]]; then
 load_failures=$((load_failures+1))
 echo "Component template does not exist for $COMPONENT_PATTERN. The index template will not be loaded. Load failures: $load_failures"
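In this loop, `${i::-14}` drops the last 14 characters of each entry and `${TEMPLATE:3}` drops the first three. The sketch below assumes entries look like `so-<name>-template.json`. That is only a guess, since the value of `$pattern` is not shown here, although `-template.json` is exactly 14 characters. Under that assumption, the name derivation and the updated `grep` filter look like this in Python. `classify_template` and the sample file names are hypothetical.

```python
import re


def classify_template(entry: str) -> tuple[str, str, bool]:
    """Hypothetical re-implementation of the TEMPLATE / COMPONENT_PATTERN / MATCH lines."""
    template = entry[:-14]             # bash: TEMPLATE=${i::-14}
    component_pattern = template[3:]   # bash: COMPONENT_PATTERN=${TEMPLATE:3}
    # bash: grep -E "^so-logs-|^so-metrics" | grep -vE "detections|osquery"
    match = (re.search(r"^so-logs-|^so-metrics", template) is not None
             and re.search(r"detections|osquery", template) is None)
    return template, component_pattern, match


if __name__ == "__main__":
    for name in ("so-logs-zeek-template.json",
                 "so-logs-detections_x_alerts-template.json",
                 "so-case-template.json"):
        print(name, classify_template(name))
```

With the new filter, detections indices are skipped the same way osquery ones already were, so they no longer count as load failures when no component template exists for them.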

@@ -11,6 +11,8 @@ idh_sshd_selinux:
 - sel_type: ssh_port_t
 - prereq:
 - file: openssh_config
+- require:
+- pkg: python_selinux_mgmt_tools
 {% endif %}
 
 openssh_config:

@@ -15,3 +15,9 @@ openssh:
 - enable: False
 - name: {{ openssh_map.service }}
 {% endif %}
+
+{% if grains.os_family == 'RedHat' %}
+python_selinux_mgmt_tools:
+pkg.installed:
+- name: policycoreutils-python-utils
+{% endif %}

@@ -9,7 +9,7 @@ idstools:
 forcedType: string
 helpLink: rules.html
 ruleset:
-description: 'Defines the ruleset you want to run. Options are ETOPEN or ETPRO. Once you have changed the ruleset here, you will need to wait for the rule update to take place (every 8 hours), or you can force the update by nagivating to Detections --> Options dropdown menu --> Suricata --> Full Update. WARNING! Changing the ruleset will remove all existing Suricata rules of the previous ruleset and their associated overrides. This removal cannot be undone.'
+description: 'Defines the ruleset you want to run. Options are ETOPEN or ETPRO. Once you have changed the ruleset here, you will need to wait for the rule update to take place (every 24 hours), or you can force the update by nagivating to Detections --> Options dropdown menu --> Suricata --> Full Update. WARNING! Changing the ruleset will remove all existing non-overlapping Suricata rules of the previous ruleset and their associated overrides. This removal cannot be undone.'
 global: True
 regex: ETPRO\b|ETOPEN\b
 helpLink: rules.html

@@ -73,17 +73,6 @@ manager_sbin:
 - exclude_pat:
 - "*_test.py"
 
-yara_update_scripts:
-file.recurse:
-- name: /usr/sbin/
-- source: salt://manager/tools/sbin_jinja/
-- user: socore
-- group: socore
-- file_mode: 755
-- template: jinja
-- defaults:
-EXCLUDEDRULES: {{ STRELKAMERGED.rules.excluded }}
-
 so-repo-file:
 file.managed:
 - name: /opt/so/conf/reposync/repodownload.conf

@@ -201,11 +201,7 @@ function add_idh_to_minion() {
 "idh:"\
 " enabled: True"\
 " restrict_management_ip: $IDH_MGTRESTRICT"\
-" services:" >> "$PILLARFILE"
-IFS=',' read -ra IDH_SERVICES_ARRAY <<< "$IDH_SERVICES"
-for service in ${IDH_SERVICES_ARRAY[@]}; do
-echo " - $service" | tr '[:upper:]' '[:lower:]' | tr -d '"' >> "$PILLARFILE"
-done
+" " >> $PILLARFILE
 }
 
 function add_logstash_to_minion() {

@@ -438,7 +438,13 @@ post_to_2.4.60() {
 }
 
 post_to_2.4.70() {
-echo "Nothing to apply"
+printf "\nRemoving idh.services from any existing IDH node pillar files\n"
+for file in /opt/so/saltstack/local/pillar/minions/*.sls; do
+if [[ $file =~ "_idh.sls" && ! $file =~ "/opt/so/saltstack/local/pillar/minions/adv_" ]]; then
+echo "Removing idh.services from: $file"
+so-yaml.py remove "$file" idh.services
+fi
+done
 POSTVERSION=2.4.70
 }
 
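`post_to_2.4.70` now strips `idh.services` from IDH minion pillars. The right-hand side of each `=~` is quoted, so bash matches it as a literal substring rather than as a regular expression. The condition therefore selects paths that contain `_idh.sls` and do not contain `/opt/so/saltstack/local/pillar/minions/adv_`. The Python sketch below reproduces that selection. The function name and the sample minion file names are hypothetical.

```python
MINION_DIR = "/opt/so/saltstack/local/pillar/minions/"


def idh_pillar_files(paths: list[str]) -> list[str]:
    """Select files the way the quoted [[ ... =~ "..." ]] tests do: literal substrings."""
    return [
        p for p in paths
        if "_idh.sls" in p and (MINION_DIR + "adv_") not in p
    ]


if __name__ == "__main__":
    sample = [
        MINION_DIR + "sensor01_idh.sls",      # hypothetical IDH minion pillar
        MINION_DIR + "adv_sensor01_idh.sls",  # advanced pillar, skipped
        MINION_DIR + "manager_manager.sls",   # not an IDH node
    ]
    print(idh_pillar_files(sample))
```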

@@ -583,7 +589,9 @@ up_to_2.4.60() {
 
 up_to_2.4.70() {
 playbook_migration
+suricata_idstools_migration
 toggle_telemetry
+add_detection_test_pillars
 
 # Kafka configuration changes
 

@@ -603,6 +611,18 @@ up_to_2.4.70() {
 INSTALLEDVERSION=2.4.70
 }
 
+add_detection_test_pillars() {
+if [[ -n "$SOUP_INTERNAL_TESTING" ]]; then
+echo "Adding detection pillar values for automated testing"
+so-yaml.py add /opt/so/saltstack/local/pillar/soc/soc_soc.sls soc.config.server.modules.elastalertengine.allowRegex SecurityOnion
+so-yaml.py add /opt/so/saltstack/local/pillar/soc/soc_soc.sls soc.config.server.modules.elastalertengine.failAfterConsecutiveErrorCount 1
+so-yaml.py add /opt/so/saltstack/local/pillar/soc/soc_soc.sls soc.config.server.modules.strelkaengine.allowRegex "EquationGroup_Toolset_Apr17__ELV_.*"
+so-yaml.py add /opt/so/saltstack/local/pillar/soc/soc_soc.sls soc.config.server.modules.strelkaengine.failAfterConsecutiveErrorCount 1
+so-yaml.py add /opt/so/saltstack/local/pillar/soc/soc_soc.sls soc.config.server.modules.suricataengine.allowRegex "(200033\\d|2100538|2102466)"
+so-yaml.py add /opt/so/saltstack/local/pillar/soc/soc_soc.sls soc.config.server.modules.suricataengine.failAfterConsecutiveErrorCount 1
+fi
+}
+
 toggle_telemetry() {
 if [[ -z $UNATTENDED && $is_airgap -ne 0 ]]; then
 cat << ASSIST_EOF
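Inside double quotes, bash turns `\\d` into `\d`, so `so-yaml.py` receives the pattern `(200033\d|2100538|2102466)` for the Suricata engine. The sketch below checks which rule IDs that test pattern admits, using Python's `re` module. It assumes SOC applies `allowRegex` as an unanchored search against a rule's public ID (SID), which this diff does not show. The sample SIDs are illustrative only.

```python
import re

# In Python, as in a bash double-quoted string, "\\d" becomes the two characters \d,
# so ARG holds the value so-yaml.py receives.
ARG = "(200033\\d|2100538|2102466)"
ALLOW = re.compile(ARG)


def allowed(public_id: str) -> bool:
    """Assumption: the engine applies allowRegex as an unanchored search."""
    return ALLOW.search(public_id) is not None


if __name__ == "__main__":
    for sid in ("2000330", "2000339", "2100538", "2102466", "2000340"):
        print(sid, allowed(sid))
```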

@@ -637,6 +657,38 @@ ASSIST_EOF
 fi
 }
 
+suricata_idstools_migration() {
+#Backup the pillars for idstools
+mkdir -p /nsm/backup/detections-migration/idstools
+rsync -av /opt/so/saltstack/local/pillar/idstools/* /nsm/backup/detections-migration/idstools
+if [[ $? -eq 0 ]]; then
+echo "IDStools configuration has been backed up."
+else
+fail "Error: rsync failed to copy the files. IDStools configuration has not been backed up."
+fi
+
+#Backup Thresholds
+mkdir -p /nsm/backup/detections-migration/suricata
+rsync -av /opt/so/saltstack/local/salt/suricata/thresholding /nsm/backup/detections-migration/suricata
+if [[ $? -eq 0 ]]; then
+echo "Suricata thresholds have been backed up."
+else
+fail "Error: rsync failed to copy the files. Thresholds have not been backed up."
+fi
+
+#Backup local rules
+mkdir -p /nsm/backup/detections-migration/suricata/local-rules
+rsync -av /opt/so/rules/nids/suri/local.rules /nsm/backup/detections-migration/suricata/local-rules
+if [[ -f /opt/so/saltstack/local/salt/idstools/rules/local.rules ]]; then
+rsync -av /opt/so/saltstack/local/salt/idstools/rules/local.rules /nsm/backup/detections-migration/suricata/local-rules/local.rules.bak
+fi
+
+#Tell SOC to migrate
+mkdir -p /opt/so/conf/soc/migrations
+echo "0" > /opt/so/conf/soc/migrations/suricata-migration-2.4.70
+chown -R socore:socore /opt/so/conf/soc/migrations
+}
+
 playbook_migration() {
 # Start SOC Detections migration
 mkdir -p /nsm/backup/detections-migration/{suricata,sigma/rules,elastalert}

@@ -648,22 +700,21 @@ playbook_migration() {
 if grep -A 1 'playbook:' /opt/so/saltstack/local/pillar/minions/* | grep -q 'enabled: True'; then
 
 # Check for active Elastalert rules
-active_rules_count=$(find /opt/so/rules/elastalert/playbook/ -type f -name "*.yaml" | wc -l)
+active_rules_count=$(find /opt/so/rules/elastalert/playbook/ -type f \( -name "*.yaml" -o -name "*.yml" \) | wc -l)
 
 if [[ "$active_rules_count" -gt 0 ]]; then
-# Prompt the user to AGREE if active Elastalert rules found
+# Prompt the user to press ENTER if active Elastalert rules found
 echo
 echo "$active_rules_count Active Elastalert/Playbook rules found."
 echo "In preparation for the new Detections module, they will be backed up and then disabled."
 echo
-echo "If you would like to proceed, then type AGREE and press ENTER."
+echo "Press ENTER to proceed."
 echo
 # Read user input
-read INPUT
-if [ "${INPUT^^}" != 'AGREE' ]; then fail "SOUP canceled."; fi
+read -r
 
 echo "Backing up the Elastalert rules..."
-rsync -av --stats /opt/so/rules/elastalert/playbook/*.yaml /nsm/backup/detections-migration/elastalert/
+rsync -av --ignore-missing-args --stats /opt/so/rules/elastalert/playbook/*.{yaml,yml} /nsm/backup/detections-migration/elastalert/
 
 # Verify that rsync completed successfully
 if [[ $? -eq 0 ]]; then
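The updated `find` expression counts playbook rules with either extension. `--ignore-missing-args` keeps `rsync` from failing when one of the two brace-expanded globs matches nothing and is passed through literally. The Python sketch below approximates only the count. It walks subdirectories as `find` does, but unlike `find -type f` it would also count symlinks to files. A temporary directory stands in for `/opt/so/rules/elastalert/playbook/`, and `count_playbook_rules` is a hypothetical name.

```python
import os


def count_playbook_rules(root: str) -> int:
    # Rough equivalent of: find ROOT -type f \( -name "*.yaml" -o -name "*.yml" \) | wc -l
    total = 0
    for _dirpath, _dirnames, filenames in os.walk(root):
        # find -name matches against the base name only
        total += sum(1 for name in filenames if name.endswith((".yaml", ".yml")))
    return total


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as d:
        for name in ("a.yaml", "b.yml", "notes.txt"):
            open(os.path.join(d, name), "w").close()
        print(count_playbook_rules(d))  # counts a.yaml and b.yml
```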

@@ -1029,6 +1080,7 @@ main() {
 backup_old_states_pillars
 fi
 copy_new_files
+create_local_directories "/opt/so/saltstack/default"
 apply_hotfix
 echo "Hotfix applied"
 update_version

@@ -1095,6 +1147,7 @@ main() {
 echo "Copying new Security Onion code from $UPDATE_DIR to $DEFAULT_SALT_DIR."
 copy_new_files
 echo ""
+create_local_directories "/opt/so/saltstack/default"
 update_version
 
 echo ""

@@ -1,51 +0,0 @@
-#!/bin/bash
-NOROOT=1
-. /usr/sbin/so-common
-
-{%- set proxy = salt['pillar.get']('manager:proxy') %}
-{%- set noproxy = salt['pillar.get']('manager:no_proxy', '') %}
-
-# Download the rules from the internet
-{%- if proxy %}
-export http_proxy={{ proxy }}
-export https_proxy={{ proxy }}
-export no_proxy="{{ noproxy }}"
-{%- endif %}
-
-repos="/opt/so/conf/strelka/repos.txt"
-output_dir=/nsm/rules/yara
-gh_status=$(curl -s -o /dev/null -w "%{http_code}" https://github.com)
-clone_dir="/tmp"
-if [ "$gh_status" == "200" ] || [ "$gh_status" == "301" ]; then
-
-while IFS= read -r repo; do
-if ! $(echo "$repo" | grep -qE '^#'); then
-# Remove old repo if existing bc of previous error condition or unexpected disruption
-repo_name=`echo $repo | awk -F '/' '{print $NF}'`
-[ -d $output_dir/$repo_name ] && rm -rf $output_dir/$repo_name
-
-# Clone repo and make appropriate directories for rules
-git clone $repo $clone_dir/$repo_name
-echo "Analyzing rules from $clone_dir/$repo_name..."
-mkdir -p $output_dir/$repo_name
-# Ensure a copy of the license is available for the rules
-[ -f $clone_dir/$repo_name/LICENSE ] && cp $clone_dir/$repo_name/LICENSE $output_dir/$repo_name
-
-# Copy over rules
-for i in $(find $clone_dir/$repo_name -name "*.yar*"); do
-rule_name=$(echo $i | awk -F '/' '{print $NF}')
-cp $i $output_dir/$repo_name
-done
-rm -rf $clone_dir/$repo_name
-fi
-done < $repos
-
-echo "Done!"
-
-/usr/sbin/so-yara-update
-
-else
-echo "Server returned $gh_status status code."
-echo "No connectivity to Github...exiting..."
-exit 1
-fi

@@ -1,41 +0,0 @@
-#!/bin/bash
-# Copyright Security Onion Solutions LLC and/or licensed to Security Onion Solutions LLC under one
-# or more contributor license agreements. Licensed under the Elastic License 2.0 as shown at
-# https://securityonion.net/license; you may not use this file except in compliance with the
-# Elastic License 2.0.
-
-NOROOT=1
-. /usr/sbin/so-common
-
-echo "Starting to check for yara rule updates at $(date)..."
-
-newcounter=0
-excludedcounter=0
-excluded_rules=({{ EXCLUDEDRULES | join(' ') }})
-
-# Pull down the SO Rules
-SORULEDIR=/nsm/rules/yara
-OUTPUTDIR=/opt/so/saltstack/local/salt/strelka/rules
-
-mkdir -p $OUTPUTDIR
-# remove all rules prior to copy so we can clear out old rules
-rm -f $OUTPUTDIR/*
-
-for i in $(find $SORULEDIR -name "*.yar" -o -name "*.yara"); do
-rule_name=$(echo $i | awk -F '/' '{print $NF}')
-if [[ ! "${excluded_rules[*]}" =~ ${rule_name} ]]; then
-echo "Adding rule: $rule_name..."
-cp $i $OUTPUTDIR/$rule_name
-((newcounter++))
-else
-echo "Excluding rule: $rule_name..."
-((excludedcounter++))
-fi
-done
-
-if [ "$newcounter" -gt 0 ] || [ "$excludedcounter" -gt 0 ];then
-echo "$newcounter rules added."
-echo "$excludedcounter rule(s) excluded."
-fi
-
-echo "Finished rule updates at $(date)..."
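In the removed `so-yara-update`, the test `[[ ! "${excluded_rules[*]}" =~ ${rule_name} ]]` treats the unquoted file name as an extended regular expression. Bash searches for it anywhere in the space-joined exclusion list, so this is not an exact membership check. For example, `foo.yar` is also excluded when the list contains `foo.yara`, and the `.` matches any character. The Python sketch below contrasts that behaviour with exact membership. Python's `re` is close to, but not identical with, POSIX ERE. For plain file names like these the results agree. The rule names are made up.

```python
import re


def bash_style_excluded(rule_name: str, excluded: list[str]) -> bool:
    # "${excluded_rules[*]}" joins the array with spaces, and an unquoted
    # right-hand side of =~ is a regex searched anywhere in that string.
    return re.search(rule_name, " ".join(excluded)) is not None


def exact_excluded(rule_name: str, excluded: list[str]) -> bool:
    # What an exact membership test would report instead.
    return rule_name in excluded


if __name__ == "__main__":
    excluded = ["foo.yara", "bar.yar"]   # hypothetical EXCLUDEDRULES values
    for rule in ("foo.yar", "bar.yar", "baz.yar"):
        print(rule, bash_style_excluded(rule, excluded), exact_excluded(rule, excluded))
```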

@@ -80,9 +80,17 @@ socmotd:
 - mode: 600
 - template: jinja
 
+filedetectionsbackup:
+file.managed:
+- name: /opt/so/conf/soc/so-detections-backup.py
+- source: salt://soc/files/soc/so-detections-backup.py
+- user: 939
+- group: 939
+- mode: 600
+
 crondetectionsruntime:
 cron.present:
-- name: /usr/local/bin/so-detections-runtime-status cron
+- name: /usr/sbin/so-detections-runtime-status cron
 - identifier: detections-runtime-status
 - user: root
 - minute: '*/10'

@@ -91,6 +99,17 @@ crondetectionsruntime:
 - month: '*'
 - dayweek: '*'
 
+crondetectionsbackup:
+cron.present:
+- name: python3 /opt/so/conf/soc/so-detections-backup.py &>> /opt/so/log/soc/detections-backup.log
+- identifier: detections-backup
+- user: root
+- minute: '0'
+- hour: '0'
+- daymonth: '*'
+- month: '*'
+- dayweek: '*'
+
 socsigmafinalpipeline:
 file.managed:
 - name: /opt/so/conf/soc/sigma_final_pipeline.yaml

@@ -78,6 +78,12 @@ soc:
 target: ''
 links:
 - '/#/hunt?q=(process.entity_id:"{:process.entity_id}" OR process.entity_id:"{:process.Ext.ancestry|processAncestors}") | groupby event.dataset | groupby -sankey event.dataset event.action | groupby event.action | groupby process.parent.name | groupby -sankey process.parent.name process.name | groupby process.name | groupby process.command_line | groupby host.name user.name | groupby source.ip source.port destination.ip destination.port | groupby dns.question.name | groupby dns.answers.data | groupby file.path | groupby registry.path | groupby dll.path'
+- name: actionRelatedAlerts
+description: actionRelatedAlertsHelp
+icon: fa-bell
+links:
+- '/#/alerts?q=rule.uuid: {:so_detection.publicId|escape} | groupby rule.name event.module* event.severity_label'
+target: ''
 eventFields:
 default:
 - soc_timestamp

@@ -1252,6 +1258,28 @@ soc:
 - event_data.destination.port
 - event_data.process.executable
 - event_data.process.pid
+':netflow:':
+- soc_timestamp
+- event.dataset
+- source.ip
+- source.port
+- destination.ip
+- destination.port
+- network.type
+- network.transport
+- network.direction
+- netflow.type
+- netflow.exporter.version
+- observer.ip
+':soc:':
+- soc_timestamp
+- event.dataset
+- source.ip
+- soc.fields.requestMethod
+- soc.fields.requestPath
+- soc.fields.statusCode
+- event.action
+- soc.fields.error
 server:
 bindAddress: 0.0.0.0:9822
 baseUrl: /

@@ -1278,7 +1306,7 @@ soc:
 so-import:
 - securityonion-resources+critical
 - securityonion-resources+high

communityRulesImportFrequencySeconds: 28800

|